Project 18: Distributed Key-Value Store with RPC

Build a replicated RPC key-value store with local shared-memory cache.

Quick Reference

| Attribute | Value |

|---|---|

| Difficulty | Level 4 (Expert) |

| Time Estimate | 3 Weeks |

| Main Programming Language | C (Alternatives: ) |

| Alternative Programming Languages | N/A |

| Coolness Level | Level 4 (Hardcore Tech Flex) |

| Business Potential | Level 4 (Open Core) |

| Prerequisites | C programming, basic IPC familiarity, Linux tools (strace/ipcs) |

| Key Topics | replication, consistency, local cache, RPC |

1. Learning Objectives

By completing this project, you will:

- Build a working IPC-based system aligned with Stevens Vol. 2 concepts.

- Implement robust lifecycle management for IPC objects.

- Handle errors and edge cases deterministically.

- Document and justify design trade-offs.

- Benchmark or validate correctness under load.

2. All Theory Needed (Per-Concept Breakdown)

Replication, Consistency, and Failure Recovery

Fundamentals

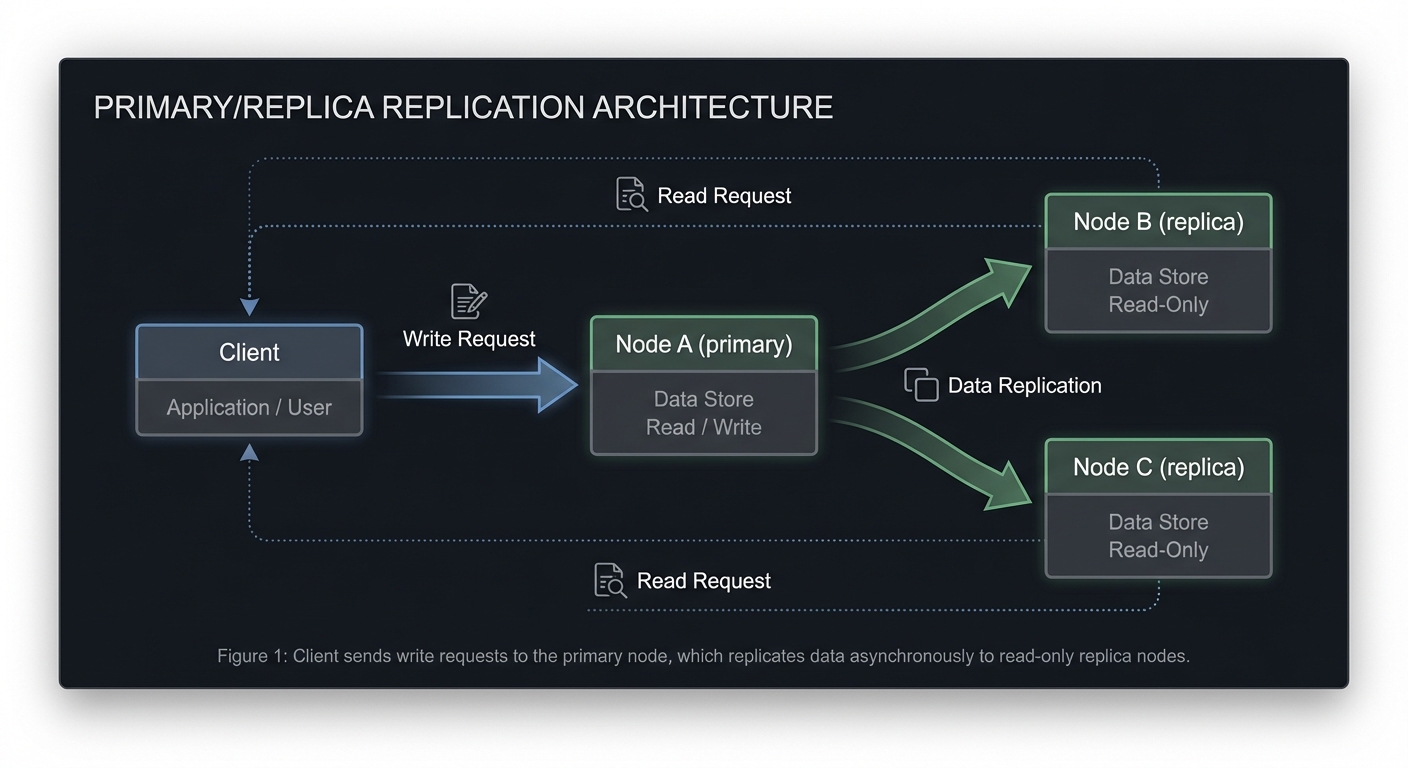

Replication keeps data available when servers fail. The simplest replication strategy is to have one primary and multiple secondaries, or to run all nodes as peers that forward updates to each other. Consistency describes how up-to-date a read is relative to the latest writes. A system that always returns the latest write is strongly consistent; a system that may return stale data is eventually consistent.

Deep Dive into the Concept

In a small RPC-based key-value store, replication can be implemented with synchronous or asynchronous forwarding. Synchronous replication means the primary waits for acknowledgments from peers before responding to the client. This provides stronger consistency but increases latency. Asynchronous replication responds immediately and forwards in the background, improving latency but allowing temporary inconsistencies.

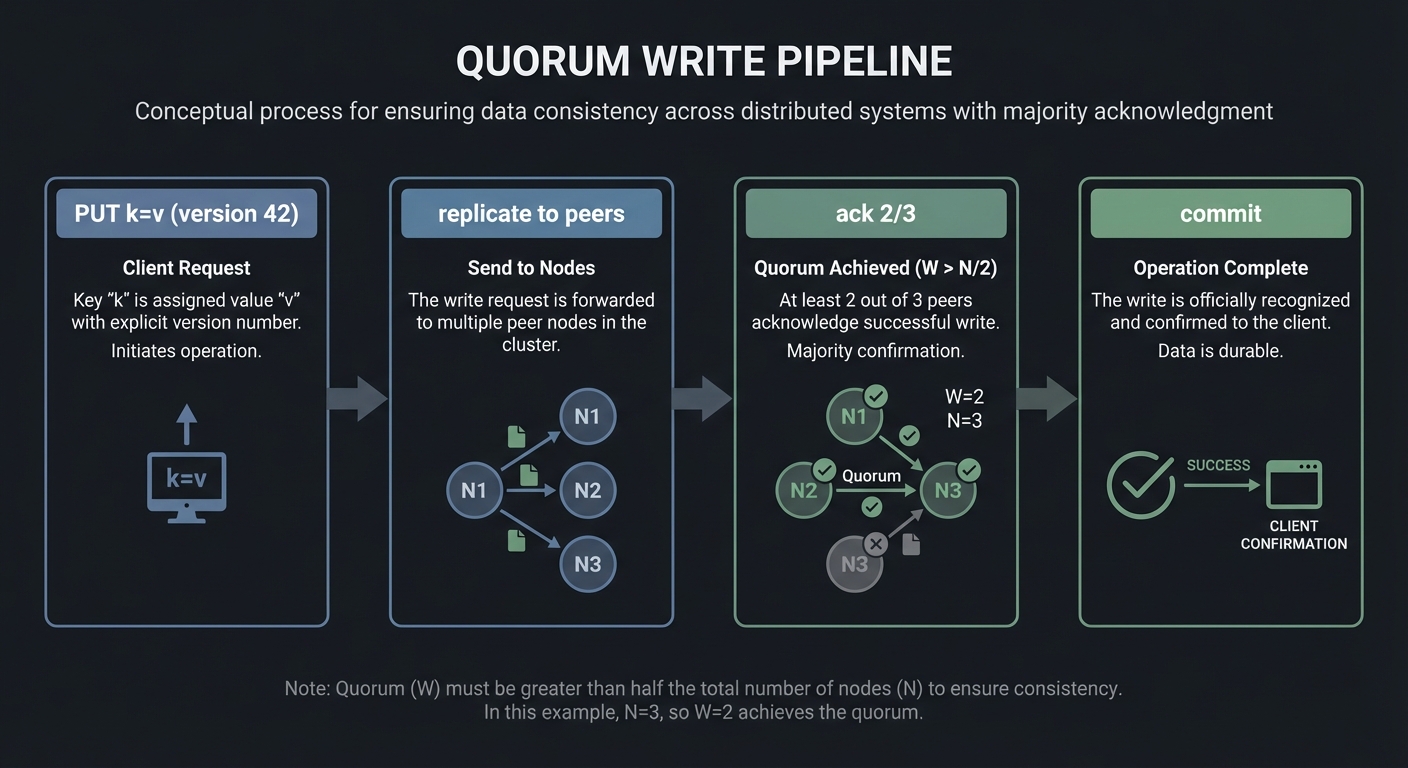

Failure recovery requires handling partial writes and crashes. If a node fails mid-update, other nodes may have applied the update while one did not. You must decide how to reconcile this. Common strategies include write-ahead logs, version numbers, and quorum-based replication. For example, you can assign a monotonically increasing version to each key and keep the highest version as the winner. You can also require a majority of nodes (quorum) to acknowledge a write before it is considered committed. This provides a simple balance between availability and consistency.

Another important concept is split brain: two nodes believe they are primary and accept writes independently, creating divergence. In a small project, you can avoid split brain by designating a fixed primary or using a simple leader election algorithm with heartbeats. For learning purposes, even a static primary with failover manual intervention is acceptable, as long as the behavior is documented.

How this fits on projects

Replication is the core of the distributed KV store project. It brings together RPC, shared memory caching, and synchronization into a system-level design.

Definitions & key terms

- Replication -> Keeping multiple copies of data.

- Consistency -> How current reads are relative to writes.

- Quorum -> Majority required for commit.

- Split brain -> Multiple primaries causing divergence.

Mental model diagram (ASCII)

Client -> Node A (primary) -> Node B, Node C (replicas)

ack quorum before reply

How it works (step-by-step, with invariants and failure modes)

- Client sends PUT to primary.

- Primary writes locally and forwards to replicas.

- Primary waits for quorum acks.

- Primary responds to client.

- Replicas apply updates and persist.

Failure modes: replica lag, network partition, split brain, stale reads.

Minimal concrete example

PUT k=v (version 42) -> replicate to peers -> ack 2/3 -> commit

Common misconceptions

- “Replication always means strong consistency.” -> It depends on policy.

- “Quorum solves all issues.” -> It trades availability for consistency.

- “Failover is automatic.” -> It requires detection and coordination.

Check-your-understanding questions

- What is the trade-off between synchronous and asynchronous replication?

- How does a quorum improve safety?

- What is split brain and how can you prevent it?

Check-your-understanding answers

- Sync gives consistency but higher latency; async gives speed but stale reads.

- A majority ensures at least one up-to-date node after failures.

- Split brain is concurrent primaries; prevent with leader election or leases.

Real-world applications

- Distributed key-value stores.

- Databases with replication (PostgreSQL, etcd).

Where you’ll apply it

- In this project: §3.2 Functional Requirements, §4.1 High-Level Design.

- Also used in: P16-rpc-calculator.md.

References

- “Designing Data-Intensive Applications” by Kleppmann.

- “UNP Vol 2” Ch. 16-18.

Key insights

- Replication is a policy decision: consistency and availability are a trade-off.

Summary

Replication keeps services available but requires explicit consistency and recovery strategies. A small KV store is the perfect lab for these trade-offs.

Homework/Exercises to practice the concept

- Implement a 2-of-3 quorum write rule.

- Simulate a replica failure during a write.

- Add version numbers and resolve conflicts.

Solutions to the homework/exercises

- Require two acknowledgments before commit.

- Kill a replica mid-write and verify read behavior.

- Keep the highest version or use last-writer-wins.

Shared Memory and Ring Buffers

Fundamentals

Shared memory is the fastest IPC mechanism because it avoids kernel copies. Multiple processes map the same physical pages into their address spaces, allowing them to read and write data directly. However, shared memory provides no synchronization; you must use mutexes, semaphores, or lock-free protocols to coordinate access.

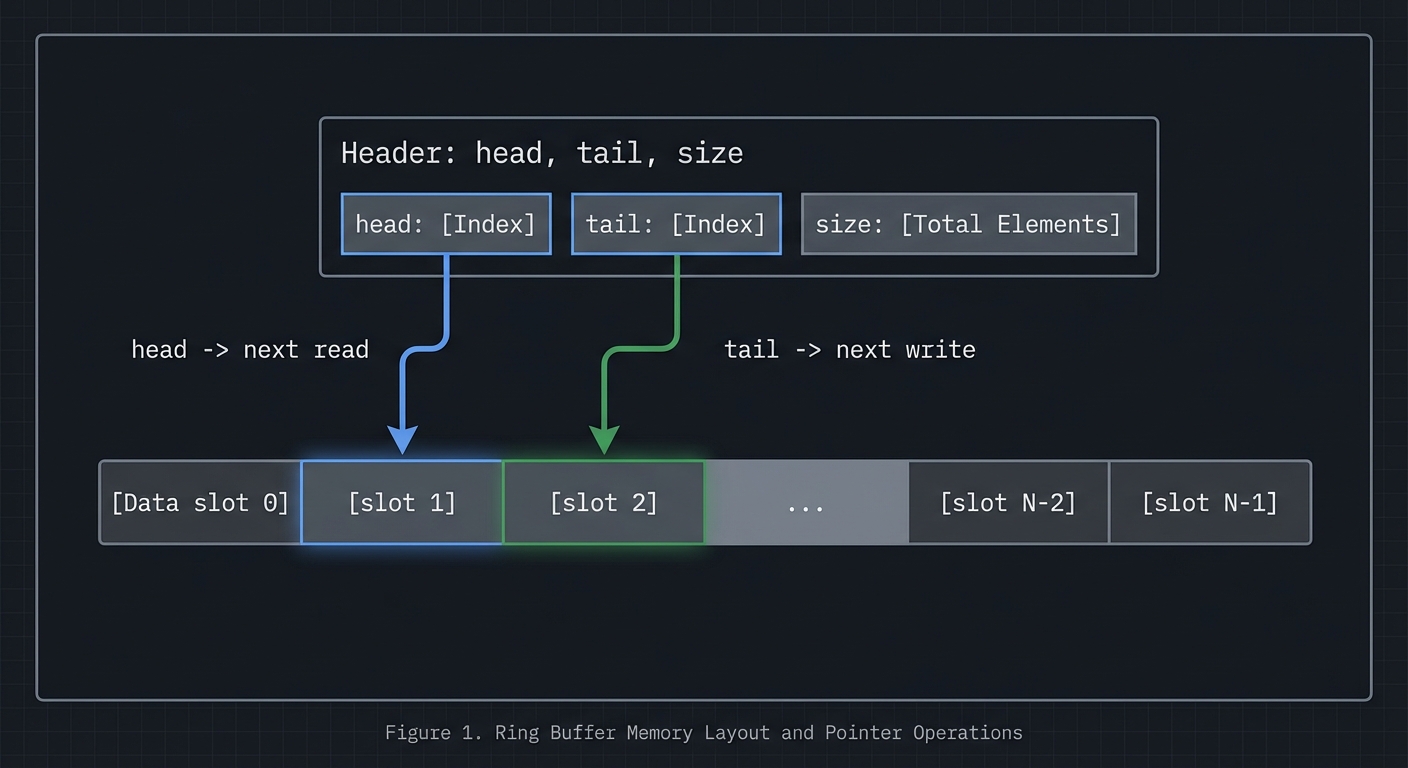

A ring buffer is a circular queue implemented in shared memory. It uses head and tail indices to track where data is written and read. This data structure is ideal for streaming data between processes because it provides constant-time operations and good cache locality.

Deep Dive into the Concept

In POSIX shared memory, you create a named object with shm_open, size it with ftruncate, and map it with mmap. System V shared memory uses shmget and shmat. Both approaches yield a pointer you can use like normal memory. The challenge is establishing a shared layout: both processes must agree on the structure of the shared memory region, including alignment and padding. A typical layout includes a header with metadata (size, head, tail, flags) followed by the data region.

Ring buffers require careful handling of wrap-around and full/empty detection. The simplest approach uses a count field protected by a mutex. More advanced approaches use head and tail indices with one slot left empty to distinguish full from empty. In shared memory across processes, you must also consider cache coherence and false sharing: if head and tail are updated by different processes, place them on separate cache lines to avoid contention.

Synchronization strategies vary. For single-producer/single-consumer, you can use semaphores or lock-free atomic indices. For multi-producer/multi-consumer, you typically need a mutex or more complex lock-free design. The design you choose affects latency, throughput, and complexity. This project will have you implement a correct, well-documented choice rather than chasing micro-optimizations.

How this fits on projects

Shared memory is the core of the ring buffer and image processor projects, and it is used as a local cache in the distributed KV store.

Definitions & key terms

- Shared memory -> Pages mapped into multiple processes.

- Ring buffer -> Circular queue with head/tail indices.

- False sharing -> Performance penalty from shared cache lines.

Mental model diagram (ASCII)

[Header: head, tail, size]

[Data slot 0][slot 1][slot 2]...[slot N-1]

head -> next read, tail -> next write

How it works (step-by-step, with invariants and failure modes)

- Create shared memory and map into processes.

- Initialize header fields and buffer.

- Producer writes at tail and advances index.

- Consumer reads at head and advances index.

- Synchronize to avoid reading empty or writing full.

Failure modes: inconsistent indices, missing synchronization, cache contention.

Minimal concrete example

struct shm_ring { size_t head, tail, cap; char data[CAP]; };

// producer writes data[tail % cap] then tail++

**Common misconceptions**

- "Shared memory is safe by default." -> It provides no synchronization.

- "Ring buffers are trivial." -> Full/empty detection is subtle.

- "More locking is always safer." -> It can kill performance and cause deadlocks.

**Check-your-understanding questions**

1. Why do you need synchronization with shared memory?

2. How can you distinguish full vs empty in a ring buffer?

3. What is false sharing and how do you avoid it?

**Check-your-understanding answers**

1. Multiple processes can write concurrently, causing races.

2. Reserve one slot or track count explicitly.

3. Place frequently updated fields on separate cache lines.

**Real-world applications**

- High-throughput logging pipelines.

- In-memory caches and queues.

**Where you’ll apply it**

- In this project: §3.2 Functional Requirements, §4.4 Data Structures.

- Also used in: [P13-sysv-shm-images.md](P13-sysv-shm-images.md), [P18-rpc-kvstore.md](P18-rpc-kvstore.md).

**References**

- Stevens, "UNP Vol 2" Ch. 12-13.

- `man 2 shm_open`, `man 2 shmget`, `man 2 mmap`.

**Key insights**

- Shared memory is fast because it removes copies, but it moves correctness into your hands.

**Summary**

Shared memory and ring buffers enable high throughput IPC, provided you design synchronization and layout carefully.

**Homework/Exercises to practice the concept**

1. Implement a ring buffer with a mutex and cond vars.

2. Add padding to reduce false sharing and measure throughput.

3. Test full/empty conditions under load.

**Solutions to the homework/exercises**

1. Use a shared header with head/tail/count and pthread mutex in shared memory.

2. Align head and tail on separate cache lines.

3. Run with a producer and consumer loop; assert invariants.

### Sun RPC, XDR, and rpcgen

**Fundamentals**

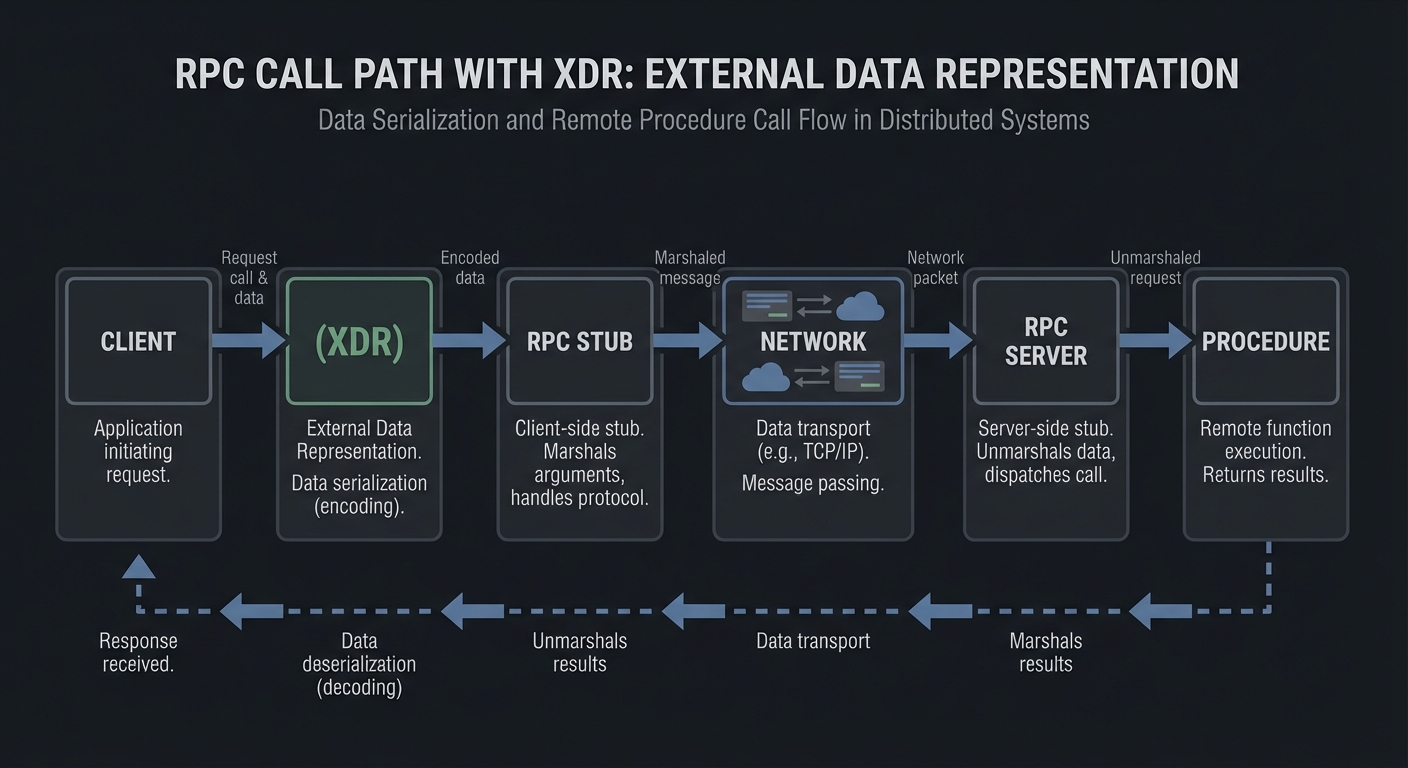

Sun RPC (ONC RPC) provides a way to call functions on remote machines as if they were local. You define an interface in a `.x` file using the RPC language, then `rpcgen` generates client stubs, server skeletons, and XDR serialization code. XDR (External Data Representation) is the wire format that ensures data is encoded consistently across different architectures.

RPC introduces a new layer to IPC: network transport, serialization, and service discovery. The client sends a request to the server, the server executes a procedure, and a response is returned. The complexity lies in timeouts, retries, and failures. RPC hides the network but cannot remove its unreliability.

**Deep Dive into the Concept**

RPC begins with an interface definition. The `.x` file specifies program numbers, version numbers, and procedure signatures. `rpcgen` uses this to create C code for marshalling and unmarshalling data. The generated client stub packs arguments into XDR format, sends them over TCP or UDP, and waits for a reply. The server skeleton unpacks the request, invokes your implementation, and sends a response. This generated code is a critical piece of understanding: it explains where your code runs and how arguments are serialized.

RPC discovery is handled by `rpcbind` (portmapper). Servers register their program and version numbers with rpcbind, which tells clients which port to use. If rpcbind is not running, clients cannot locate the service. This is often the first failure mode in RPC projects.

Another deep concept is idempotency. Because RPC clients may retry when timeouts occur, the server must be prepared to handle duplicate requests safely. For a calculator, this is easy; for a stateful service, you may need request IDs and deduplication. This is why RPC is often described as "at least once" by default.

**How this fits on projects**

This concept is the heart of the RPC calculator project and underlies the authenticated RPC and distributed KV store projects.

**Definitions & key terms**

- **XDR** -> Platform-independent serialization format.

- **rpcgen** -> Code generator for RPC stubs.

- **rpcbind** -> Service discovery for RPC.

- **Idempotency** -> Safe handling of repeated requests.

**Mental model diagram (ASCII)**

```text

Client -> (XDR) -> RPC stub -> network -> RPC server -> procedure

How it works (step-by-step, with invariants and failure modes)

- Define interface in

.xfile. - Run

rpcgento generate stubs. - Server registers program/version with rpcbind.

- Client calls stub; stub encodes XDR and sends request.

- Server decodes, executes, and replies.

Failure modes: rpcbind not running, XDR mismatch, non-idempotent retries.

Minimal concrete example

program CALC_PROG { version CALC_VERS { int ADD(ints)=1; }=1; }=0x31230000;

**Common misconceptions**

- "RPC is just a function call." -> It is a network protocol.

- "rpcgen hides all complexity." -> You still handle errors and retries.

- "XDR is optional." -> It is required for interoperability.

**Check-your-understanding questions**

1. Why does RPC need XDR?

2. What role does rpcbind play?

3. Why is idempotency important?

**Check-your-understanding answers**

1. To ensure consistent encoding across architectures.

2. It maps program/version numbers to ports.

3. Clients may retry, causing duplicate requests.

**Real-world applications**

- NFS (Network File System) uses ONC RPC.

- Legacy distributed services in Unix environments.

**Where you’ll apply it**

- In this project: §3.1 What You Will Build, §5.10 Phase 2.

- Also used in: [P17-rpc-auth.md](P17-rpc-auth.md), [P18-rpc-kvstore.md](P18-rpc-kvstore.md).

**References**

- Stevens, "UNP Vol 2" Ch. 16.

- `man 1 rpcgen`, `man 8 rpcbind`.

**Key insights**

- RPC is structured IPC across machines; the network always matters.

**Summary**

RPC turns function calls into network protocols using XDR and rpcgen. You gain productivity but must design for retries and failures.

**Homework/Exercises to practice the concept**

1. Write a simple `.x` file with two procedures.

2. Run `rpcgen` and inspect generated files.

3. Stop rpcbind and observe client failure.

**Solutions to the homework/exercises**

1. Define a program with ADD and SUBTRACT.

2. Look at `*_clnt.c` and `*_svc.c` files.

3. Run `rpcinfo -p` to see missing registration.

---

## 3. Project Specification

### 3.1 What You Will Build

Build a replicated RPC key-value store with local shared-memory cache.

### 3.2 Functional Requirements

1. **Requirement 1**: PUT/GET over RPC

2. **Requirement 2**: Replicate writes to peers

3. **Requirement 3**: Local shared-memory cache with locking

### 3.3 Non-Functional Requirements

- **Performance**: Must handle at least 10,000 messages/operations without failure.

- **Reliability**: IPC objects are cleaned up on shutdown or crash detection.

- **Usability**: CLI output is readable with clear error codes.

### 3.4 Example Usage / Output

```text

./kv_client --server=localhost:9001 put k v

### 3.5 Data Formats / Schemas / Protocols

RPC messages: {op, key, value, version}.

### 3.6 Edge Cases

- Peer unavailable

- Split brain

- Stale cache

### 3.7 Real World Outcome

You will have a working IPC subsystem that can be run, traced, and tested in a reproducible way.

#### 3.7.1 How to Run (Copy/Paste)

```bash

make

./run_demo.sh

#### 3.7.2 Golden Path Demo (Deterministic)

```bash

./run_demo.sh --mode=golden

Expected output includes deterministic counts and a final success line:

```text

OK: golden scenario completed

#### 3.7.3 Failure Demo (Deterministic)

```bash

./run_demo.sh --mode=failure

Expected output:

```text

ERROR: invalid input or unavailable IPC resource

exit=2

---

## 4. Solution Architecture

### 4.1 High-Level Design

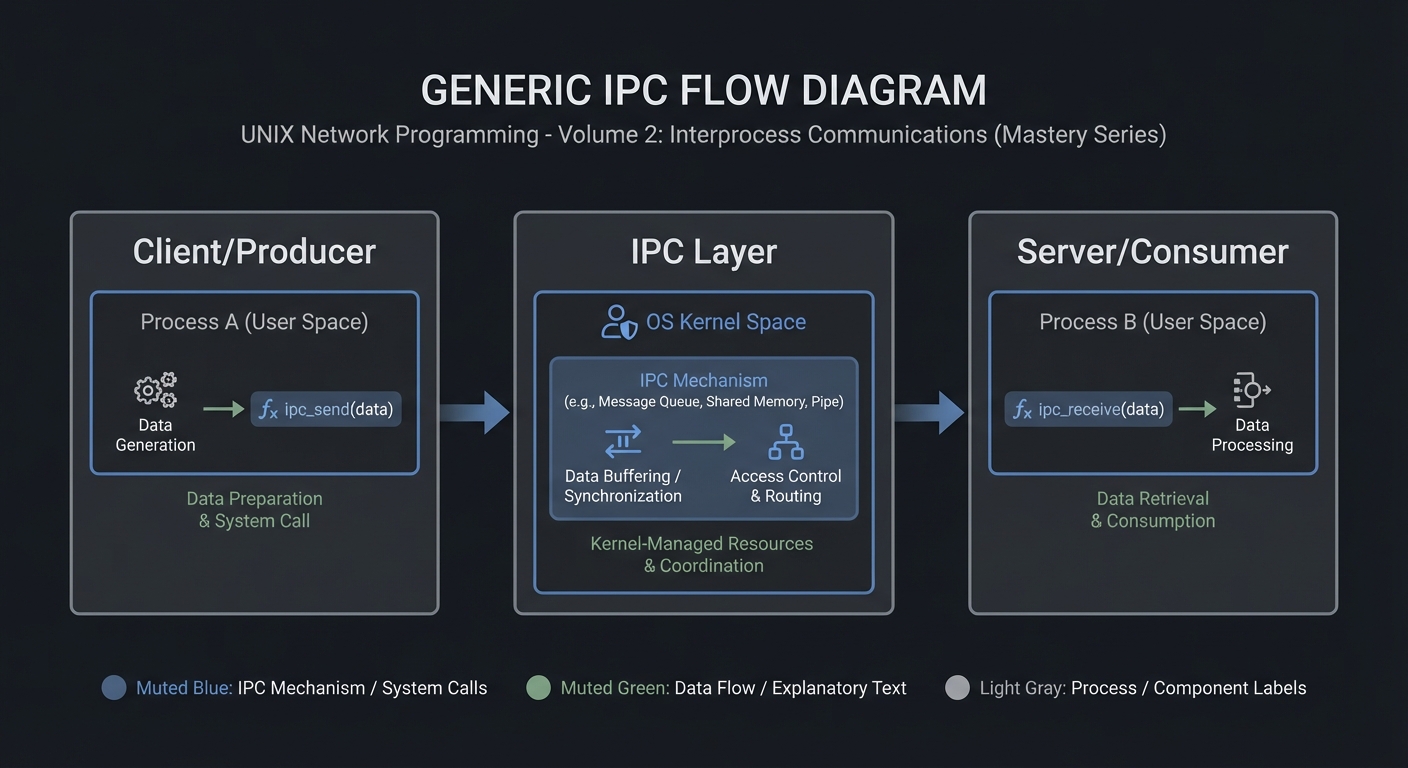

Client/Producer -> IPC Layer -> Server/Consumer

4.2 Key Components

| Component | Responsibility | Key Decisions |

|---|---|---|

| IPC Setup | Create/open IPC objects | POSIX vs System V choices |

| Worker Loop | Send/receive messages | Blocking vs non-blocking |

| Cleanup | Unlink/remove IPC objects | Crash safety |

4.3 Data Structures (No Full Code)

struct message {

int id;

int len;

char payload[256];

};

### 4.4 Algorithm Overview

**Key Algorithm: IPC Request/Response**

1. Initialize IPC resources.

2. Client sends request.

3. Server processes and responds.

4. Cleanup on exit.

**Complexity Analysis:**

- Time: O(n) in number of messages.

- Space: O(1) per message plus IPC buffer.

---

## 5. Implementation Guide

### 5.1 Development Environment Setup

```bash

sudo apt-get install build-essential

### 5.2 Project Structure

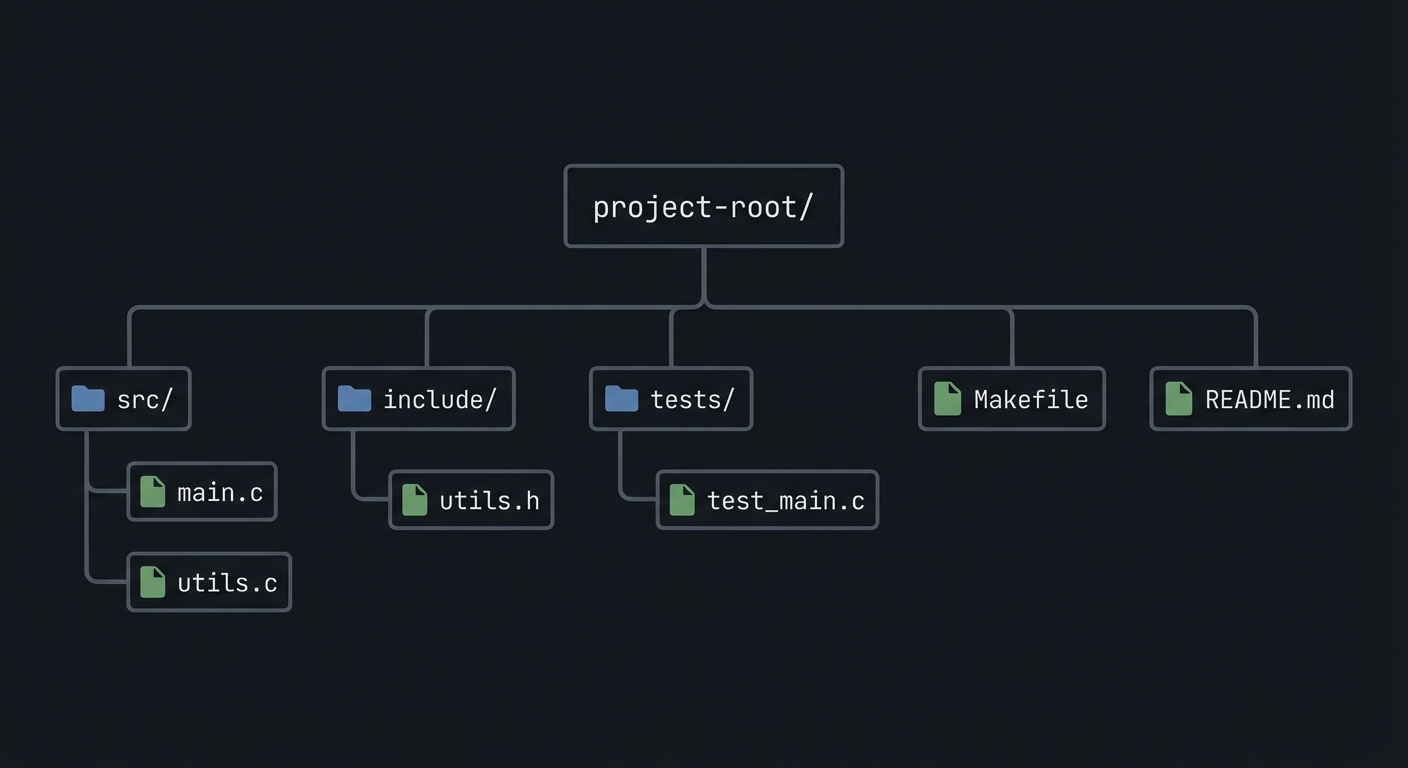

project-root/

├── src/

├── include/

├── tests/

├── Makefile

└── README.md

5.3 The Core Question You’re Answering

“How do you combine local IPC and RPC into a resilient system?”

5.4 Concepts You Must Understand First

- IPC object lifecycle (create/open/unlink)

- Blocking vs non-blocking operations

- Error handling with errno

5.5 Questions to Guide Your Design

- What invariants guarantee correctness in this IPC flow?

- How will you prevent resource leaks across crashes?

- How will you make the system observable for debugging?

5.6 Thinking Exercise

Before coding, sketch the IPC lifecycle and identify where deadlock could occur.

5.7 The Interview Questions They’ll Ask

- Why choose this IPC mechanism over alternatives?

- What are the lifecycle pitfalls?

- How do you test IPC code reliably?

5.8 Hints in Layers

Hint 1: Start with a single producer and consumer.

Hint 2: Add logging around every IPC call.

Hint 3: Use strace or ipcs to verify resources.

5.9 Books That Will Help

| Topic | Book | Chapter |

|---|---|---|

| IPC fundamentals | Stevens, UNP Vol 2 | Relevant chapters |

| System calls | APUE | Ch. 15 |

5.10 Implementation Phases

Phase 1: Foundation (2-4 hours)

Goals:

- Create IPC objects.

- Implement a minimal send/receive loop.

Tasks:

- Initialize IPC resources.

- Implement basic client and server.

Checkpoint: Single request/response works.

Phase 2: Core Functionality (4-8 hours)

Goals:

- Add error handling and cleanup.

- Support multiple clients or concurrent operations.

Tasks:

- Add structured message format.

- Implement cleanup on shutdown.

Checkpoint: System runs under load without leaks.

Phase 3: Polish & Edge Cases (2-4 hours)

Goals:

- Add deterministic tests.

- Document behaviors.

Tasks:

- Add golden and failure scenarios.

- Document limitations.

Checkpoint: Tests pass, behavior documented.

5.11 Key Implementation Decisions

| Decision | Options | Recommendation | Rationale |

|---|---|---|---|

| Blocking mode | blocking vs non-blocking | blocking | Simpler for first version |

| Cleanup | manual vs automated | explicit cleanup | Avoid stale IPC objects |

6. Testing Strategy

6.1 Test Categories

| Category | Purpose | Examples |

|---|---|---|

| Unit Tests | Validate helpers | message encode/decode |

| Integration Tests | IPC flow | client-server round trip |

| Edge Case Tests | Failure modes | missing queue, full buffer |

6.2 Critical Test Cases

- Single client request/response works.

- Multiple requests do not corrupt state.

- Failure case returns exit code 2.

6.3 Test Data

Input: “hello” Expected: “hello”

7. Common Pitfalls & Debugging

7.1 Frequent Mistakes

| Pitfall | Symptom | Solution |

|---|---|---|

| Not cleaning IPC objects | Next run fails | Add cleanup on exit |

| Blocking forever | Program hangs | Add timeouts or non-blocking mode |

| Incorrect message framing | Corrupted data | Add length prefix and validate |

7.2 Debugging Strategies

- Use

strace -fto see IPC syscalls. - Use

ipcsor/dev/mqueueto inspect objects.

7.3 Performance Traps

- Small queue sizes cause frequent blocking.

8. Extensions & Challenges

8.1 Beginner Extensions

- Add verbose logging.

- Add a CLI flag to toggle non-blocking mode.

8.2 Intermediate Extensions

- Add request timeouts.

- Add a metrics report.

8.3 Advanced Extensions

- Implement load testing with multiple clients.

- Add crash recovery logic.

9. Real-World Connections

9.1 Industry Applications

- IPC services in local daemons.

- Message-based coordination in legacy systems.

9.2 Related Open Source Projects

- nfs-utils - Uses RPC and IPC extensively.

- systemd - Uses multiple IPC mechanisms.

9.3 Interview Relevance

- Demonstrates system call knowledge and concurrency reasoning.

10. Resources

10.1 Essential Reading

- Stevens, “UNP Vol 2”.

- Kerrisk, “The Linux Programming Interface”.

10.2 Video Resources

- Unix IPC lectures from OS courses.

10.3 Tools & Documentation

man 7 ipc,man 2for each syscall.

10.4 Related Projects in This Series

11. Self-Assessment Checklist

11.1 Understanding

- I can describe IPC object lifecycle.

- I can explain blocking vs non-blocking behavior.

- I can reason about failure modes.

11.2 Implementation

- All functional requirements are met.

- Tests pass.

- IPC objects are cleaned up.

11.3 Growth

- I can explain design trade-offs.

- I can explain this project in an interview.

12. Submission / Completion Criteria

Minimum Viable Completion:

- Basic IPC flow works with correct cleanup.

- Error handling returns deterministic exit codes.

Full Completion:

- Includes tests and deterministic demos.

- Documents trade-offs and limitations.

Excellence (Going Above & Beyond):

- Adds performance benchmarking and crash recovery.