Sprint: Math for Machine Learning Mastery - Real World Projects

Goal: Build deep, implementation-level mathematical intuition for machine learning by moving from symbolic reasoning to numerical reasoning and finally to model training decisions. You will learn why each math concept exists, where it breaks in practice, and how it surfaces in real ML systems. By the end, you will be able to explain and implement core ML math workflows without treating libraries as black boxes. You will also develop the judgment to debug instability, pick appropriate loss/optimization strategies, and defend your model choices in interviews or production reviews.

Introduction

Math for machine learning is the language that connects data, models, and decisions under uncertainty. In this guide, you will build projects that start with expression parsing and end with full pipeline evaluation, so each abstract concept becomes an observable artifact.

- What this solves today: Teams often ship models that “work” but fail under shift, scale, or noisy data because foundational math assumptions were never validated.

- What you will build: 31 projects covering algebra, linear algebra, calculus, probability/statistics, optimization, matrix calculus, information theory, numerical stability, convex optimization, and advanced ML math extensions.

- In scope: Mathematical modeling, numerical behavior, optimization dynamics, and model evaluation.

- Out of scope: Framework-specific production deployment infrastructure (Kubernetes, feature stores, CI/CD for ML services).

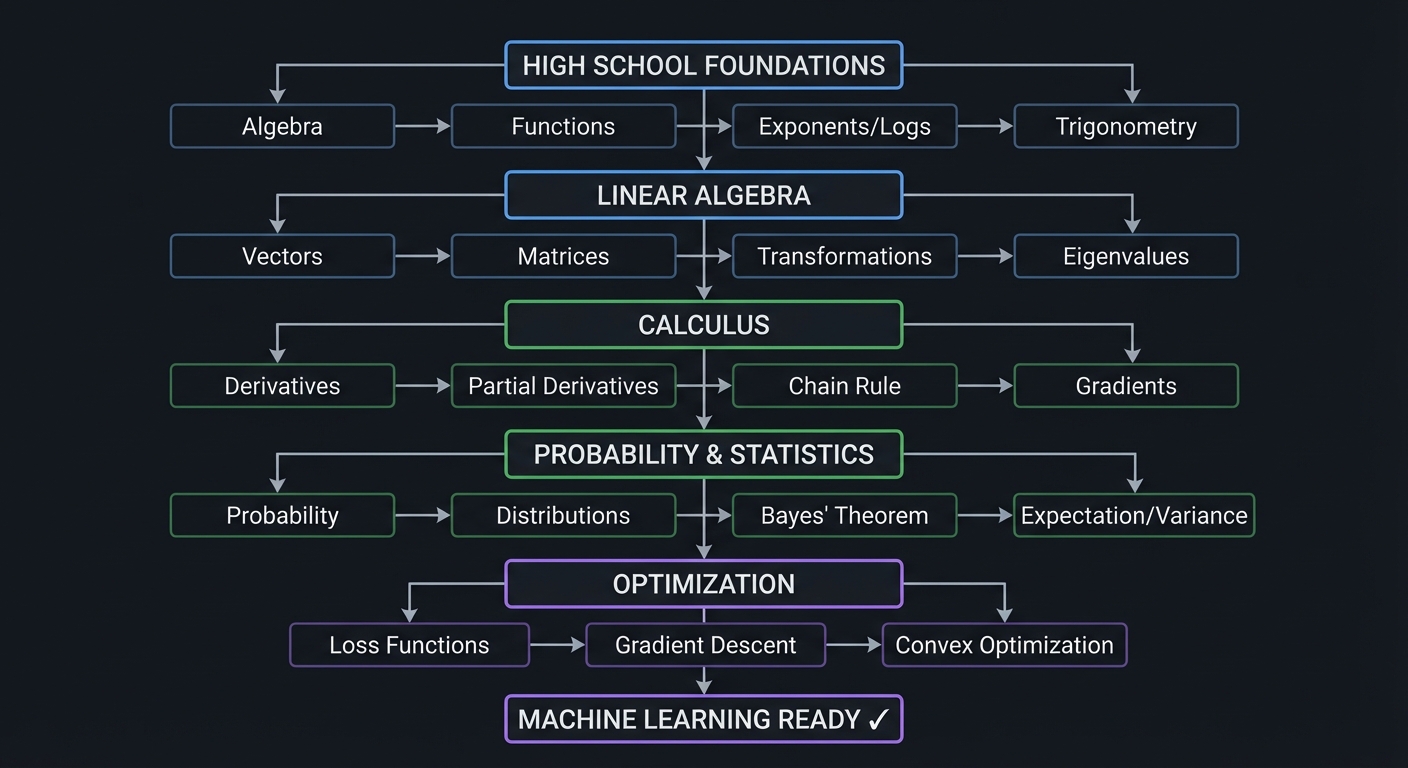

HIGH SCHOOL FOUNDATIONS

↓

Algebra → Functions → Exponents/Logs → Trigonometry

↓

LINEAR ALGEBRA

↓

Vectors → Matrices → Transformations → Eigenvalues

↓

CALCULUS

↓

Derivatives → Partial Derivatives → Chain Rule → Gradients

↓

PROBABILITY & STATISTICS

↓

Probability → Distributions → Bayes' Theorem → Expectation/Variance

↓

OPTIMIZATION

↓

Loss Functions → Gradient Descent → Convex Optimization

↓

MACHINE LEARNING READY ✓

How to Use This Guide

- Read the Theory Primer first, concept by concept, and take notes on failure modes and invariants.

- Do projects in order unless you already have mastery in a cluster; each later cluster assumes execution confidence from earlier ones.

- For each project, answer the Core Question before implementation.

- Validate with the Definition of Done checklists and keep a project log (inputs, configs, errors, fixes, outcomes).

- Treat all snippets as pseudocode or implementation guides; translate them deliberately to your language/runtime.

Prerequisites & Background Knowledge

Essential Prerequisites (Must Have)

- Basic programming in one language (Python recommended for speed of iteration)

- Comfort with loops, conditionals, arrays/lists, and functions

- Basic CLI usage and package management

- Recommended Reading: “Math for Programmers” by Paul Orland - Chapters 1-3

Helpful But Not Required

- Basic plotting and data manipulation tools (Matplotlib, Pandas, GNUplot, or equivalents)

- Introductory statistics vocabulary

- Familiarity with Git and reproducible experiments

Self-Assessment Questions

- Can you explain why

log(exp(x)) = xbut domain constraints still matter in implementation? - Can you solve a 2x2 linear system and interpret the geometry of the solution?

- Can you explain what a gradient means in plain language and how it affects parameter updates?

Development Environment Setup Required Tools:

- Python 3.11+ or equivalent language runtime

- Plotting library (Matplotlib or equivalent)

- CSV/JSON parsing support

- Test runner (pytest or equivalent)

Recommended Tools:

- Jupyter Notebook or Quarto for experiment logs

jqfor result-file inspection- A fixed random seed utility and simple benchmarking script

Testing Your Setup:

$ python --version

Python 3.11.x

$ python -c "print('env ok')"

env ok

Time Investment

- Simple projects: 4-8 hours each

- Moderate projects: 10-20 hours each

- Complex projects: 20-40 hours each

- Total sprint: ~8-12 months (part-time)

Important Reality Check Progress is non-linear. The hardest part is not syntax; it is learning to map symbolic equations to robust numerical procedures under finite precision and noisy data.

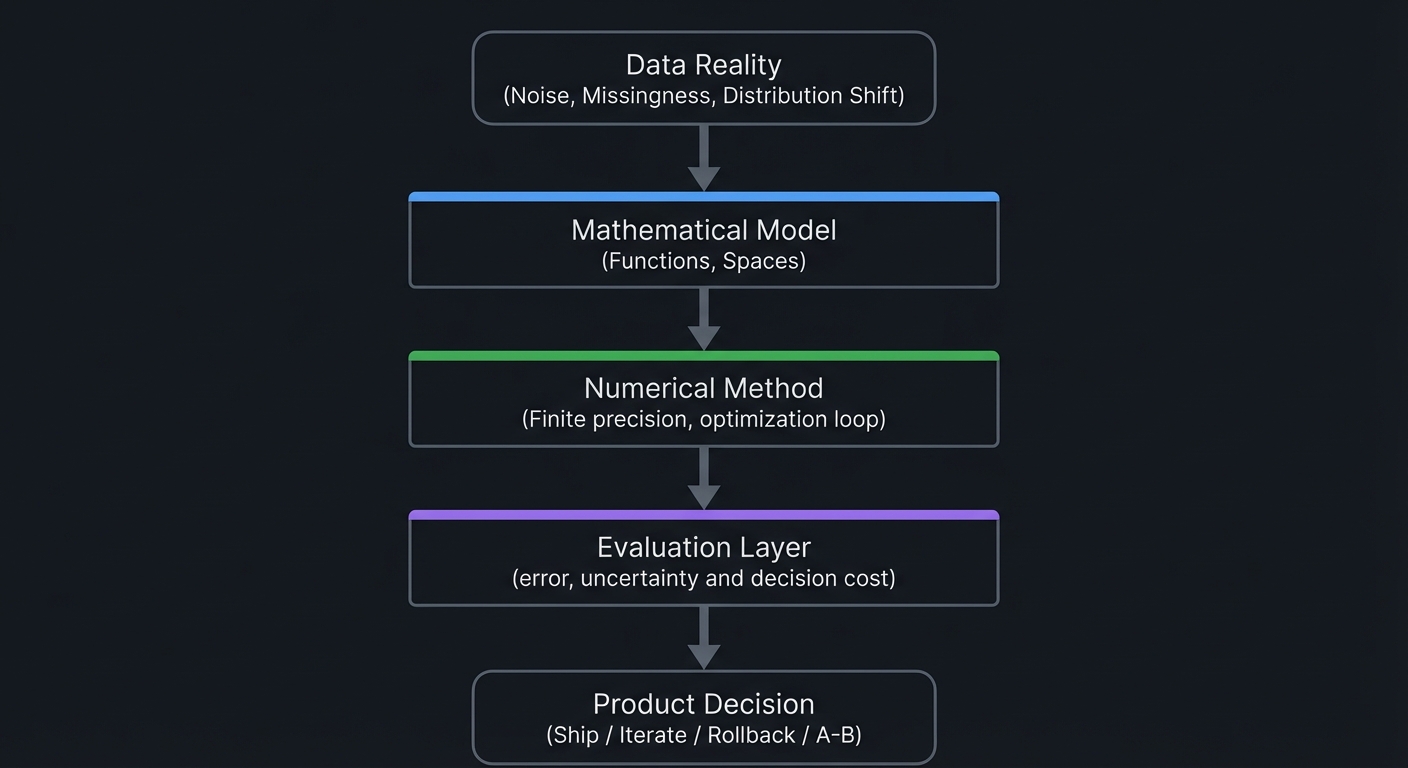

Big Picture / Mental Model

Data Reality

(Noise, Missingness,

Distribution Shift)

|

v

+--------------------+

| Mathematical Model |

| (Functions, Spaces)|

+--------------------+

|

v

+--------------------+

| Numerical Method |

| (Finite precision, |

| optimization loop) |

+--------------------+

|

v

+--------------------+

| Evaluation Layer |

| (error, uncertainty|

| and decision cost) |

+--------------------+

|

v

Product Decision

(Ship / Iterate /

Rollback / A-B)

Theory Primer

This primer keeps theory and implementation coupled. Every concept includes mental models, invariants, and failure modes so you can diagnose behavior in real training workflows.

This section provides detailed explanations of the core mathematical concepts that all 31 projects in this guide teach. Understanding these concepts deeply—not just procedurally—will transform you from someone who uses ML libraries to someone who truly understands what happens inside them.

\1

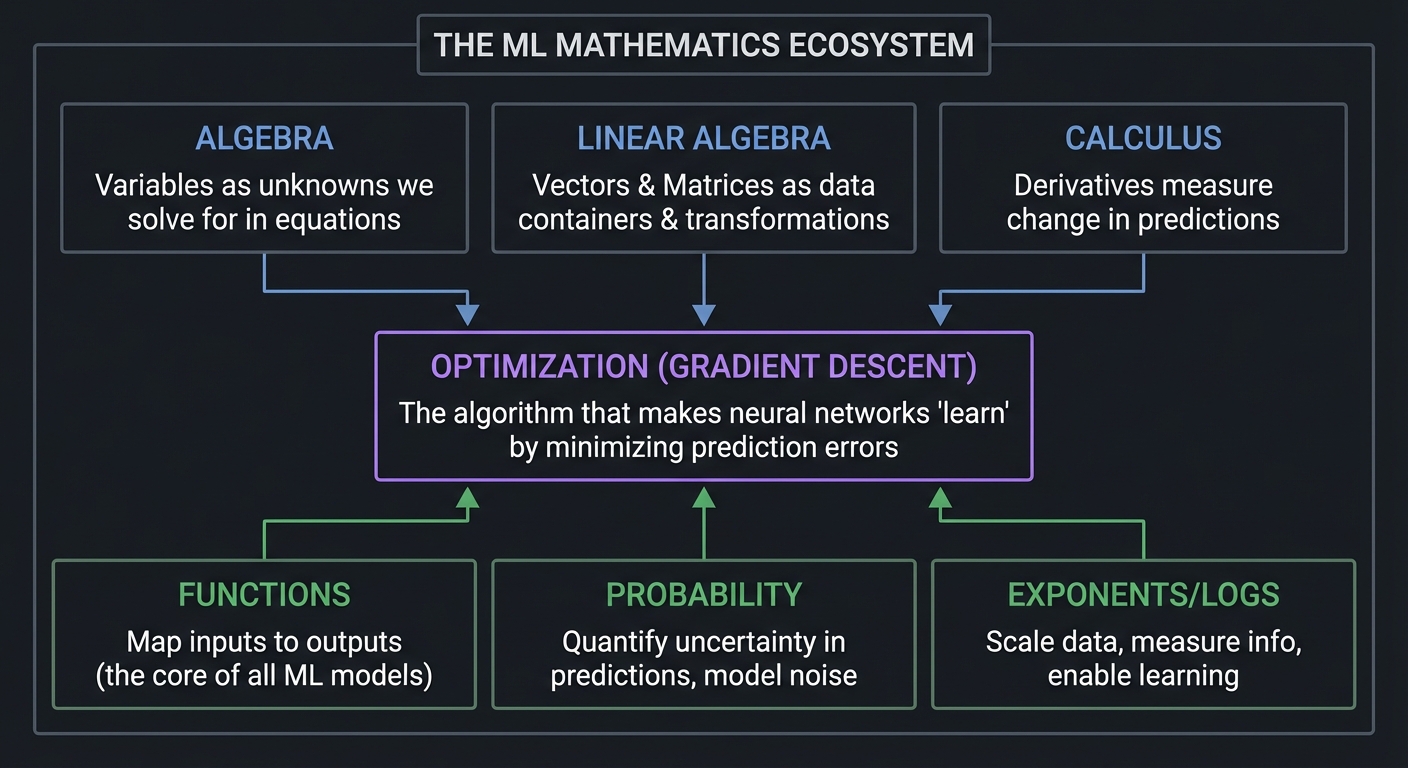

Before diving into each mathematical area, let’s understand why these specific topics are essential:

┌─────────────────────────────────────────────────────────────────────────┐

│ THE ML MATHEMATICS ECOSYSTEM │

├─────────────────────────────────────────────────────────────────────────┤

│ │

│ ALGEBRA LINEAR ALGEBRA CALCULUS │

│ ──────── ────────────── ──────── │

│ Variables as Vectors & Matrices Derivatives │

│ unknowns we solve ──▶ as data containers ──▶ measure change │

│ for in equations & transformations in predictions │

│ │ │ │ │

│ │ │ │ │

│ ▼ ▼ ▼ │

│ ┌─────────────────────────────────────────────────────────────────┐ │

│ │ OPTIMIZATION (GRADIENT DESCENT) │ │

│ │ The algorithm that makes neural networks "learn" │ │

│ │ by minimizing prediction errors │ │

│ └─────────────────────────────────────────────────────────────────┘ │

│ ▲ ▲ ▲ │

│ │ │ │ │

│ FUNCTIONS PROBABILITY EXPONENTS/LOGS │

│ ───────── ─────────── ────────────── │

│ Map inputs to Quantify uncertainty Scale data, │

│ outputs (the core in predictions, measure info, │

│ of all ML models) model noise enable learning │

│ │

└─────────────────────────────────────────────────────────────────────────┘

Every neural network is fundamentally:

- A composition of functions (algebra)

- Represented by matrices of weights (linear algebra)

- Trained by computing gradients (calculus)

- Making probabilistic predictions (probability)

- Optimized by gradient descent (optimization)

The math is not abstract theory—it is the actual implementation. When you run model.fit() in PyTorch or TensorFlow, these mathematical operations are exactly what happens inside.

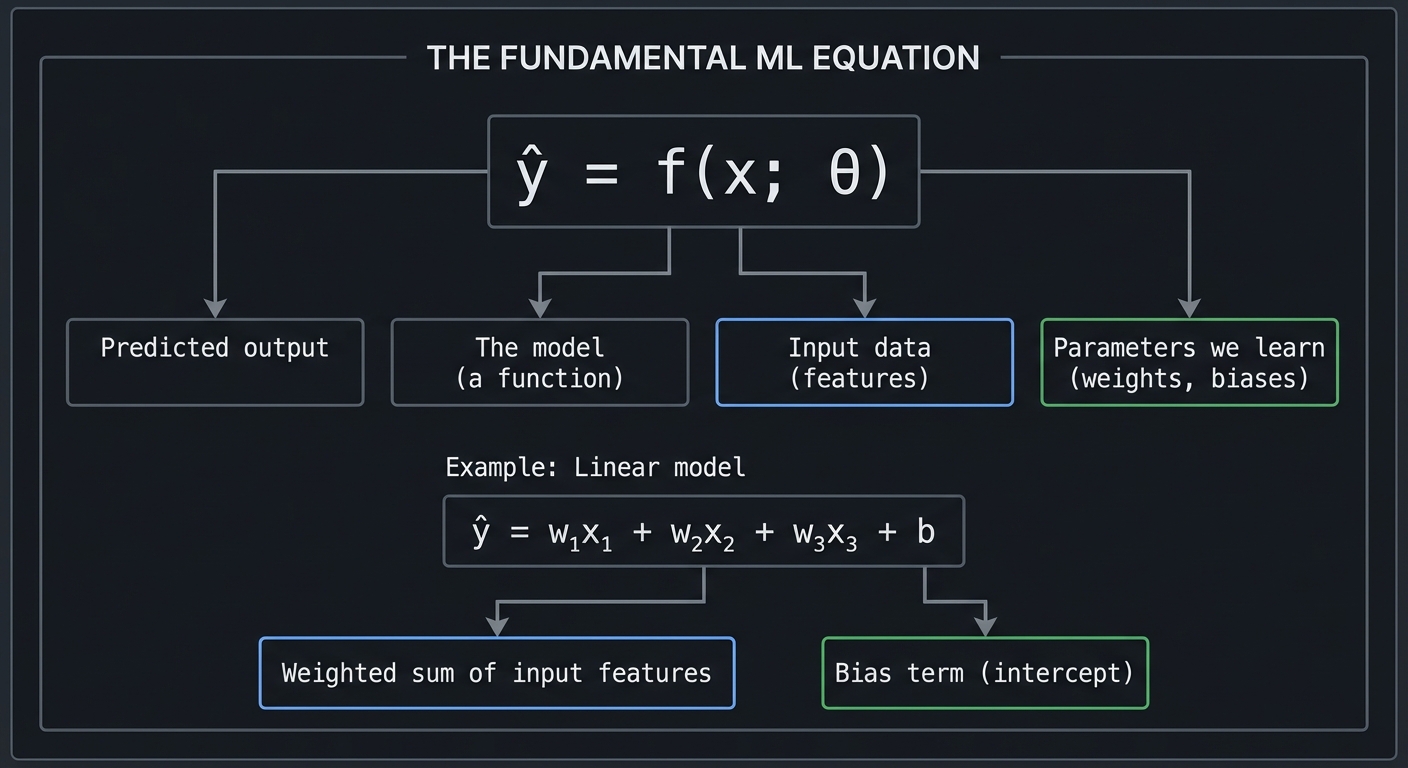

\1

Algebra is the foundation upon which all higher mathematics rests. At its core, algebra is about expressing relationships between quantities using symbols, then manipulating those symbols to discover new truths.

Variables: Placeholders for Unknown or Changing Quantities

In ML, we use variables constantly:

xrepresents input features (a single number or a vector of thousands)yrepresents the target we want to predictw(weights) andb(bias) are the parameters we learnθ(theta) represents all learnable parameters

THE FUNDAMENTAL ML EQUATION

ŷ = f(x; θ)

│ │ │ │

│ │ │ └── Parameters we learn (weights, biases)

│ │ └───── Input data (features)

│ └──────── The model (a function)

└──────────── Predicted output

Example: Linear model

ŷ = w₁x₁ + w₂x₂ + w₃x₃ + b

└────────┬─────────┘ │

│ │

Weighted sum of Bias term

input features (intercept)

Equations: Statements of Equality We Solve

An equation states that two expressions are equal. Solving equations means finding values that make this true.

SOLVING A LINEAR EQUATION

Find x such that: 3x + 7 = 22

Step 1: Subtract 7 from both sides

3x + 7 - 7 = 22 - 7

3x = 15

Step 2: Divide both sides by 3

3x/3 = 15/3

x = 5

Verification: 3(5) + 7 = 15 + 7 = 22 ✓

In ML, we don’t solve single equations—we solve systems of equations represented as matrices, or we use iterative methods (gradient descent) to find approximate solutions.

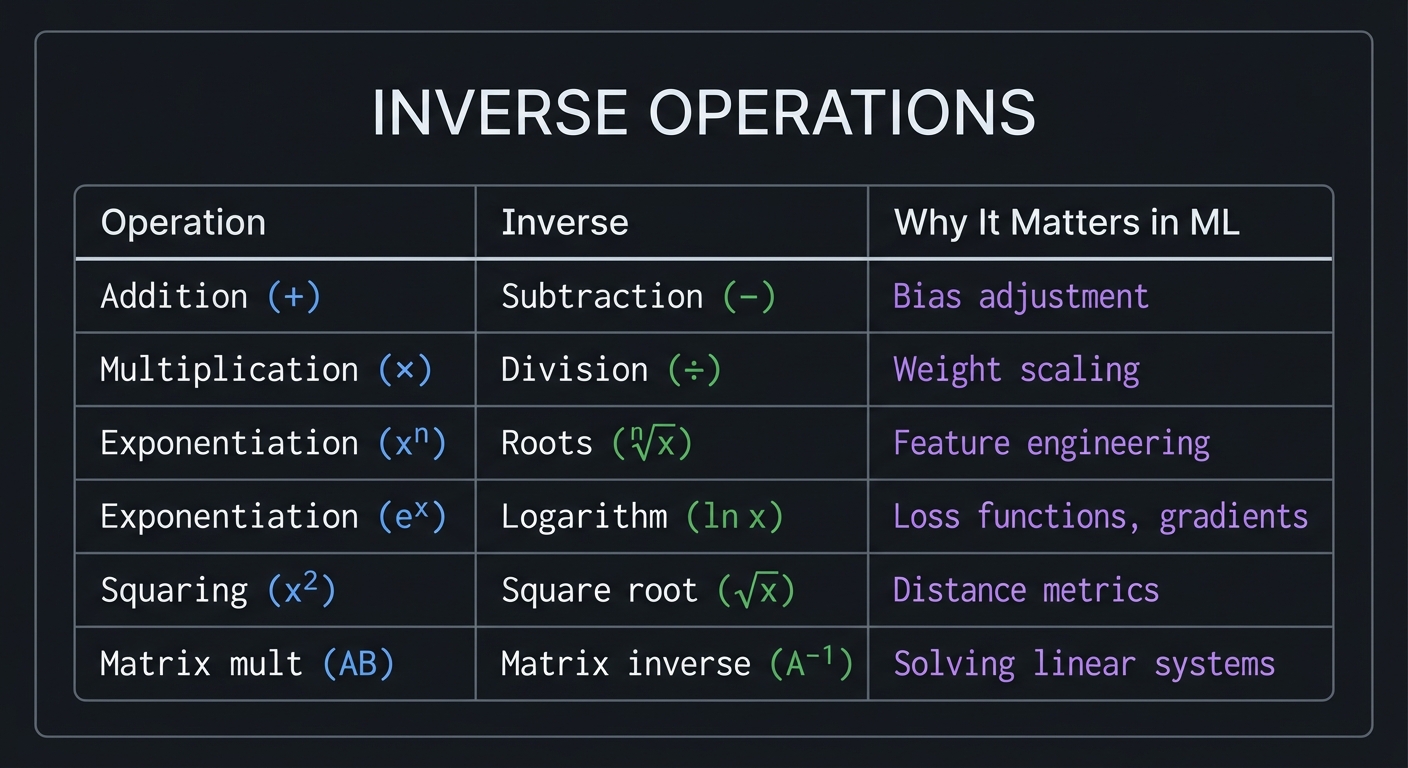

Inverse Operations: The Key to Solving

Every operation has an inverse that “undoes” it:

INVERSE OPERATIONS

Operation Inverse Why It Matters in ML

─────────────────────────────────────────────────────────────────

Addition (+) Subtraction (−) Bias adjustment

Multiplication (×) Division (÷) Weight scaling

Exponentiation (xⁿ) Roots (ⁿ√x) Feature engineering

Exponentiation (eˣ) Logarithm (ln x) Loss functions, gradients

Squaring (x²) Square root (√x) Distance metrics

Matrix mult (AB) Matrix inverse (A⁻¹) Solving linear systems

Reference: “Math for Programmers” by Paul Orland, Chapter 2, provides an excellent programmer-focused treatment of algebraic fundamentals.

\1

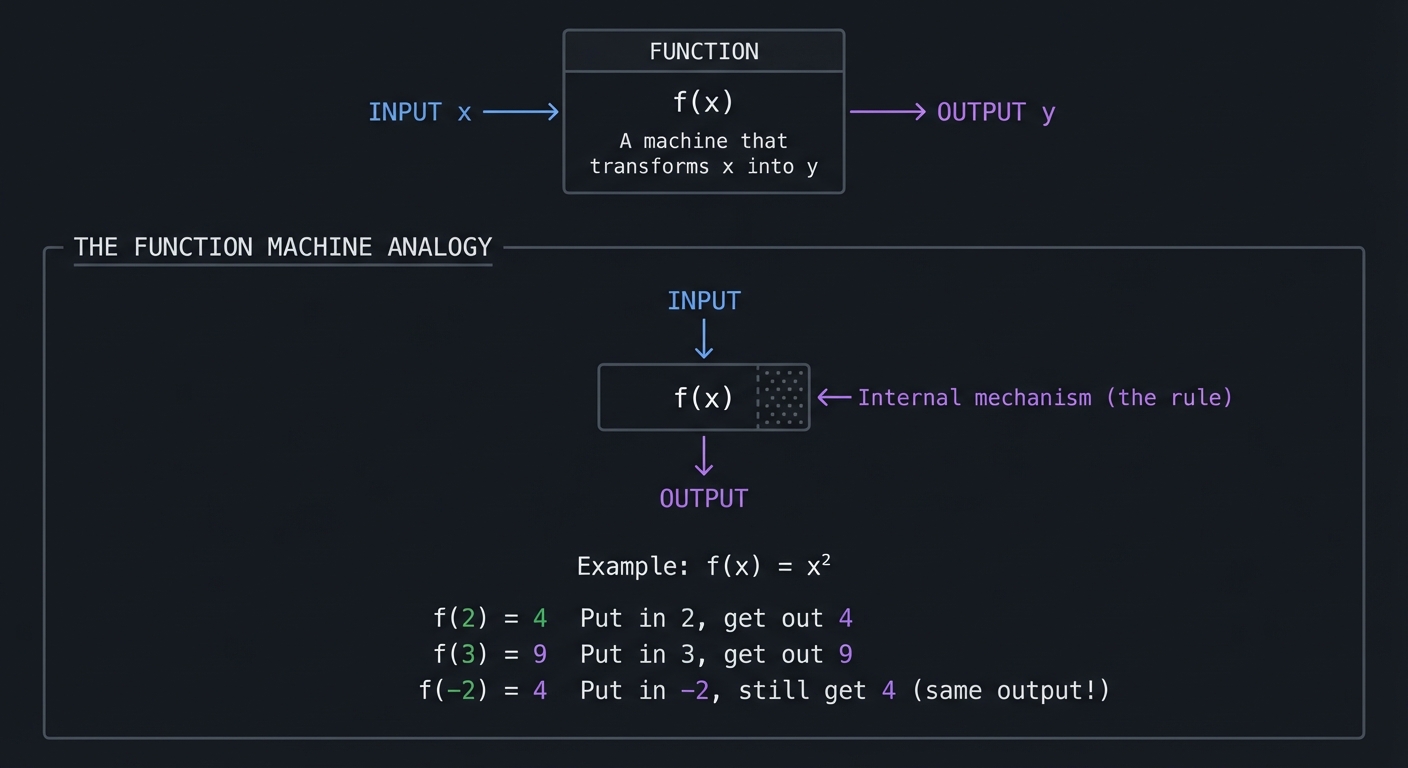

A function is a rule that takes an input and produces exactly one output. This is the most important concept for ML because every ML model is a function.

┌─────────────────────┐

│ │

INPUT ────────▶ │ FUNCTION │ ────────▶ OUTPUT

x │ f(x) │ y

│ │

│ "A machine that │

│ transforms x │

│ into y" │

└─────────────────────┘

┌────────────────────────────────────────────────────────────────────────┐

│ │

│ THE FUNCTION MACHINE ANALOGY │

│ ═══════════════════════════ │

│ │

│ INPUT │

│ │ │

│ ▼ │

│ ┌──────────────┐ │

│ │ ░░░░░░░░ │ ◄── Internal mechanism (the rule) │

│ │ ░ f(x) ░ │ │

│ │ ░░░░░░░░ │ │

│ └──────────────┘ │

│ │ │

│ ▼ │

│ OUTPUT │

│ │

│ Example: f(x) = x² │

│ │

│ f(2) = 4 "Put in 2, get out 4" │

│ f(3) = 9 "Put in 3, get out 9" │

│ f(-2) = 4 "Put in -2, still get 4" (same output!) │

│ │

└────────────────────────────────────────────────────────────────────────┘

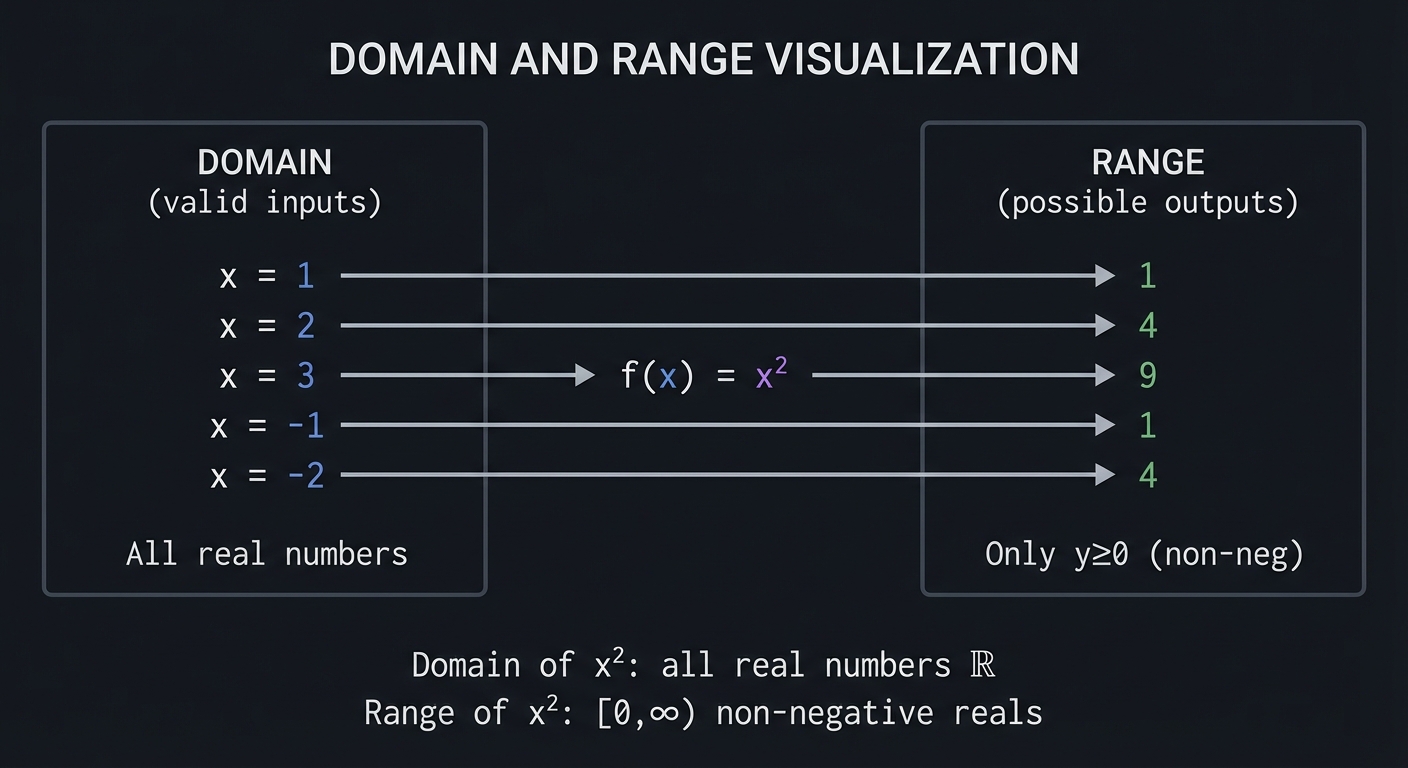

Domain and Range: What Goes In, What Comes Out

DOMAIN AND RANGE VISUALIZATION

DOMAIN RANGE

(valid inputs) (possible outputs)

┌───────────┐ ┌───────────┐

│ │ │ │

│ x = 1 ──┼──────── f(x) = x² ─────────▶│── 1 │

│ x = 2 ──┼──────────────────── ───────▶│── 4 │

│ x = 3 ──┼────────────────────────────▶│── 9 │

│ x = -1 ──┼────────────────────────────▶│── 1 │

│ x = -2 ──┼────────────────────────────▶│── 4 │

│ │ │ │

│ All real │ │ Only y≥0 │

│ numbers │ │ (non-neg)│

└───────────┘ └───────────┘

Domain of x²: all real numbers ℝ

Range of x²: [0, ∞) non-negative reals

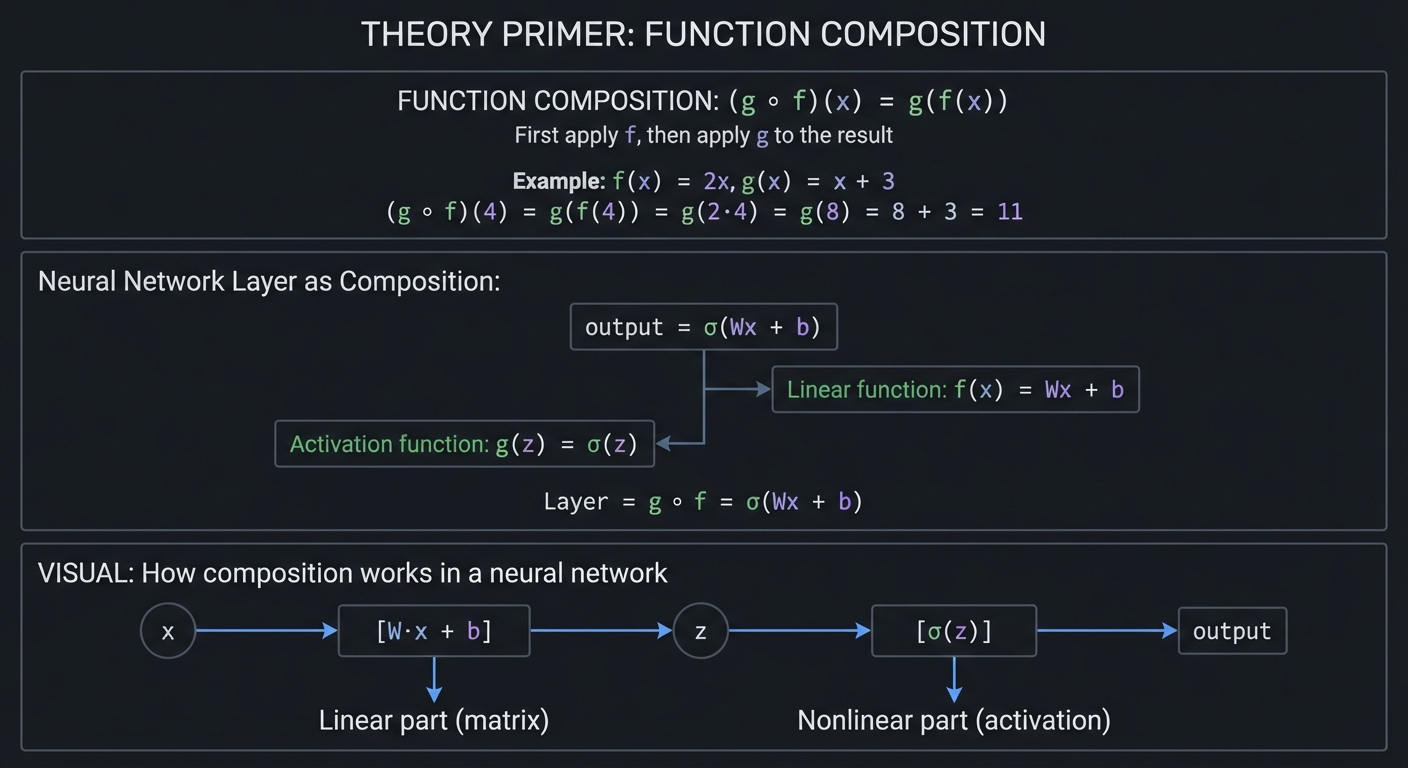

Function Composition: Combining Functions

Machine learning models are compositions of many functions. A neural network layer applies a linear function followed by a non-linear activation:

FUNCTION COMPOSITION: (g ∘ f)(x) = g(f(x))

"First apply f, then apply g to the result"

Example: f(x) = 2x, g(x) = x + 3

(g ∘ f)(4) = g(f(4))

= g(2·4)

= g(8)

= 8 + 3

= 11

Neural Network Layer as Composition:

────────────────────────────────────

output = σ(Wx + b)

│ └──┬──┘

│ │

│ └── Linear function: f(x) = Wx + b

│

└─────── Activation function: g(z) = σ(z)

Layer = g ∘ f = σ(Wx + b)

VISUAL: How composition works in a neural network

x ──▶ [W·x + b] ──▶ z ──▶ [σ(z)] ──▶ output

└───┬───┘ └──┬──┘

│ │

Linear part Nonlinear part

(matrix) (activation)

Reference: “Math for Programmers” by Paul Orland, Chapter 3, covers functions from a visual, computational perspective.

\1

Exponents and logarithms are inverse operations that appear throughout ML—in activation functions, loss functions, learning rate schedules, and information theory.

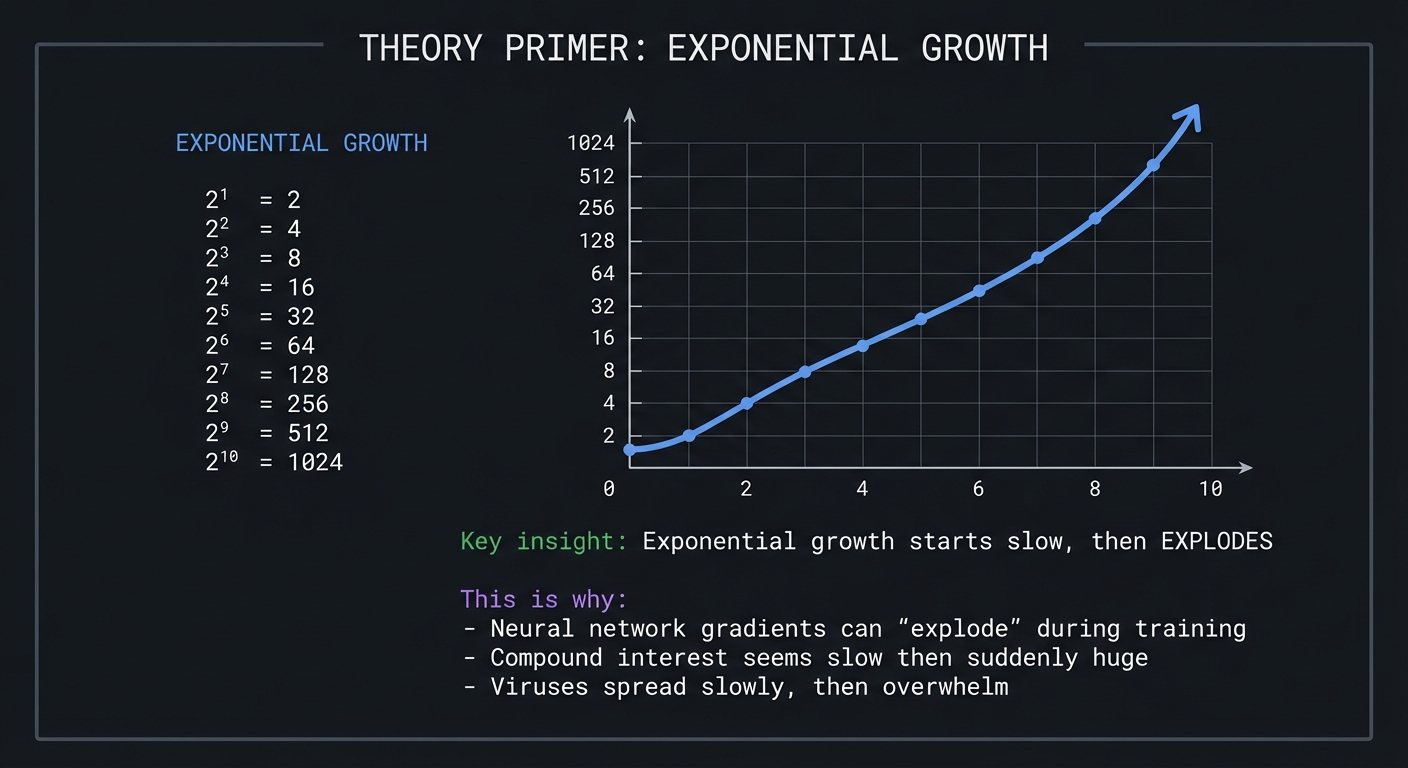

Exponential Growth: The Power of Repeated Multiplication

EXPONENTIAL GROWTH

2¹ = 2

2² = 4

2³ = 8

2⁴ = 16

2⁵ = 32

2⁶ = 64

2⁷ = 128

2⁸ = 256

2⁹ = 512

2¹⁰ = 1024

│

1024 ┼ ╭

│ ╱

│ ╱

512 ┼ ╱

│ ╱

256 ┼ ╱

│ ╱

128 ┼ ╱

│ ╱

64 ┼ ╱

32 ┼ ╱╱

16 ┼ ╱╱╱

8 ┼ ╱╱╱

4 ┼ ╱╱╱

2 ┼ ╱╱╱

└───────────────────────────────────────────────

0 2 4 6 8 10

Key insight: Exponential growth starts slow, then EXPLODES

This is why:

- Neural network gradients can "explode" during training

- Compound interest seems slow then suddenly huge

- Viruses spread slowly, then overwhelm

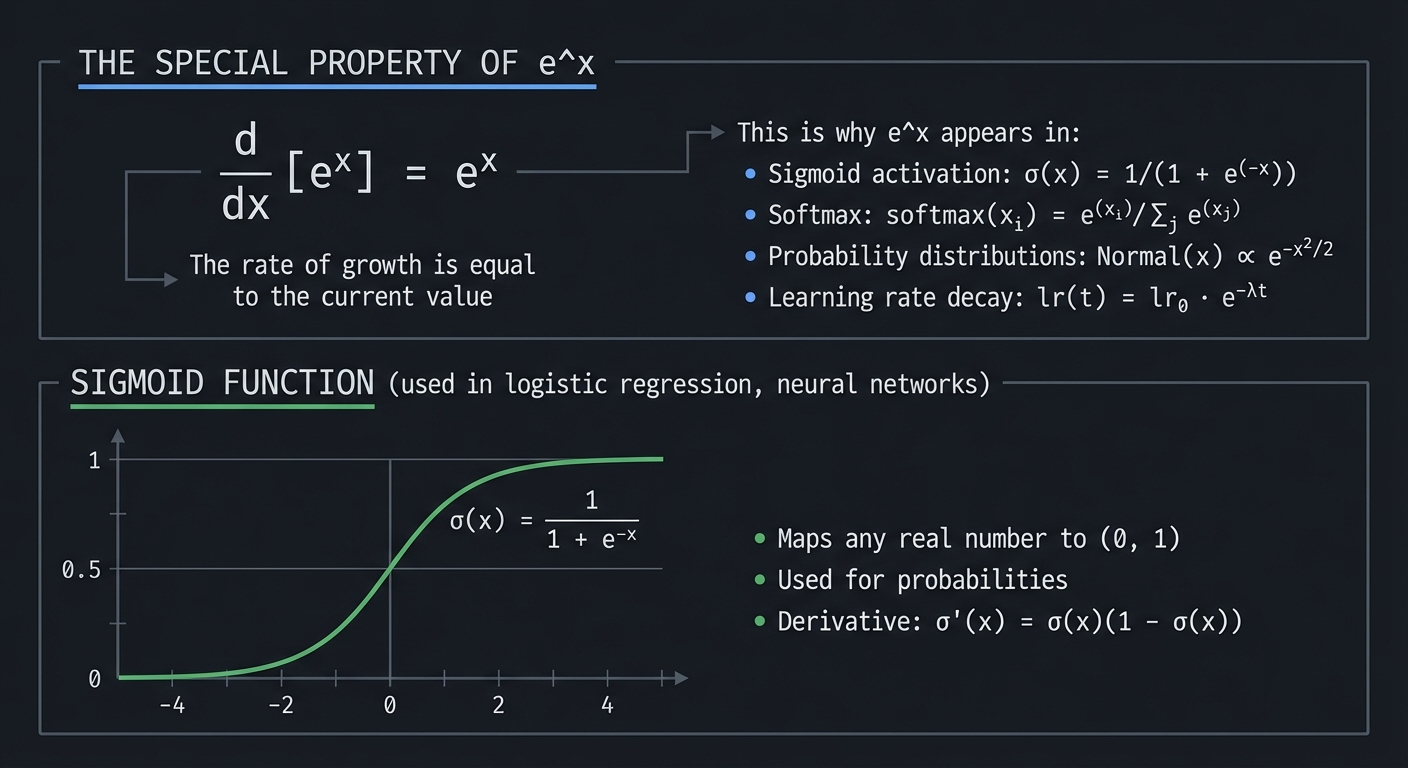

The Natural Exponential: e^x

The number e ≈ 2.71828… is special because the derivative of eˣ is itself:

THE SPECIAL PROPERTY OF e^x

d/dx [eˣ] = eˣ

"The rate of growth is equal to the current value"

This is why e^x appears in:

- Sigmoid activation: σ(x) = 1/(1 + e^(-x))

- Softmax: softmax(xᵢ) = e^(xᵢ) / Σⱼ e^(xⱼ)

- Probability distributions: Normal(x) ∝ e^(-x²/2)

- Learning rate decay: lr(t) = lr₀ · e^(-λt)

SIGMOID FUNCTION (used in logistic regression, neural networks)

1 ┼─────────────────────────────────────────

│ ╭───────────

│ ╱╱╱

0.5 ┼────────────────────╱╱╱───────────────

│ ╱╱╱

│ ╱╱╱╱

0 ┼────╱╱╱──────────────────────────────────

└──────┼───────┼───────┼───────┼──────────

-4 -2 0 2 4

σ(x) = 1 / (1 + e^(-x))

- Maps any real number to (0, 1)

- Used for probabilities

- Derivative: σ'(x) = σ(x)(1 - σ(x))

Logarithms: The Inverse of Exponentiation

LOGARITHMS AS INVERSE OPERATIONS

Exponential: 2³ = 8

Logarithmic: log₂(8) = 3

"2 to the power of WHAT equals 8?"

Answer: 3

THE RELATIONSHIP:

If b^y = x, then log_b(x) = y

b^(log_b(x)) = x (they undo each other)

log_b(b^x) = x (they undo each other)

COMMON LOGARITHMS IN ML:

log₂(x) - Base 2, used in information theory (bits)

log₁₀(x) - Base 10, used for order of magnitude

ln(x) - Natural log (base e), used in calculus/ML

ln(e) = 1

ln(1) = 0

ln(0) = -∞ (undefined, approaches negative infinity)

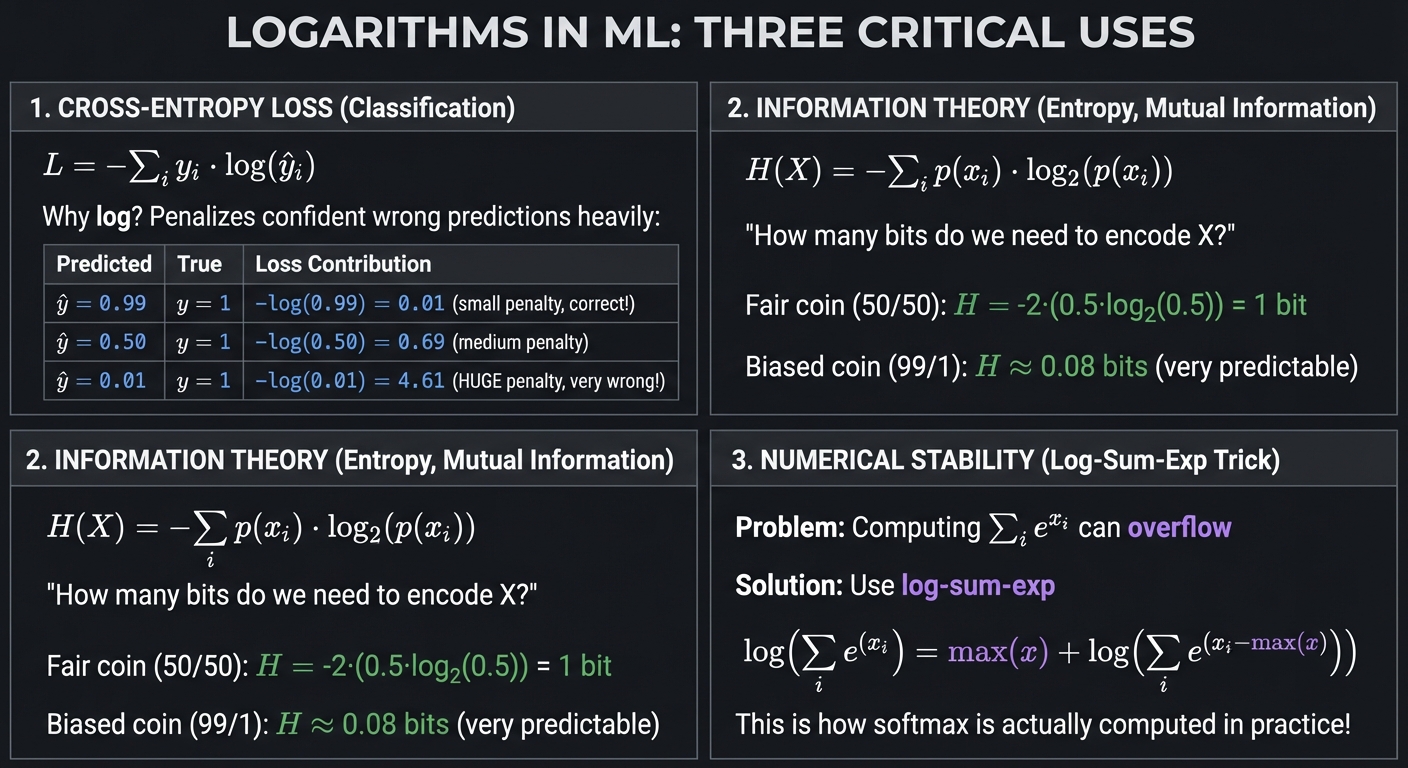

Why Logarithms Appear in Machine Learning

LOGARITHMS IN ML: THREE CRITICAL USES

1. CROSS-ENTROPY LOSS (Classification)

───────────────────────────────────────

L = -Σᵢ yᵢ · log(ŷᵢ)

Why log? Penalizes confident wrong predictions heavily:

Predicted True Loss Contribution

─────────────────────────────────────

ŷ = 0.99 y = 1 -log(0.99) = 0.01 (small penalty, correct!)

ŷ = 0.50 y = 1 -log(0.50) = 0.69 (medium penalty)

ŷ = 0.01 y = 1 -log(0.01) = 4.61 (HUGE penalty, very wrong!)

2. INFORMATION THEORY (Entropy, Mutual Information)

────────────────────────────────────────────────────

H(X) = -Σᵢ p(xᵢ) · log₂(p(xᵢ))

"How many bits do we need to encode X?"

Fair coin (50/50): H = -2·(0.5·log₂(0.5)) = 1 bit

Biased coin (99/1): H ≈ 0.08 bits (very predictable)

3. NUMERICAL STABILITY (Log-Sum-Exp Trick)

───────────────────────────────────────────

Problem: Computing Σᵢ e^(xᵢ) can overflow

Solution: Use log-sum-exp

log(Σᵢ e^(xᵢ)) = max(x) + log(Σᵢ e^(xᵢ - max(x)))

This is how softmax is actually computed in practice!

Reference: “C Programming: A Modern Approach” by K. N. King, Chapter 7, covers the numerical representation of these values, while “Math for Programmers” Chapter 2 provides the mathematical intuition.

\1

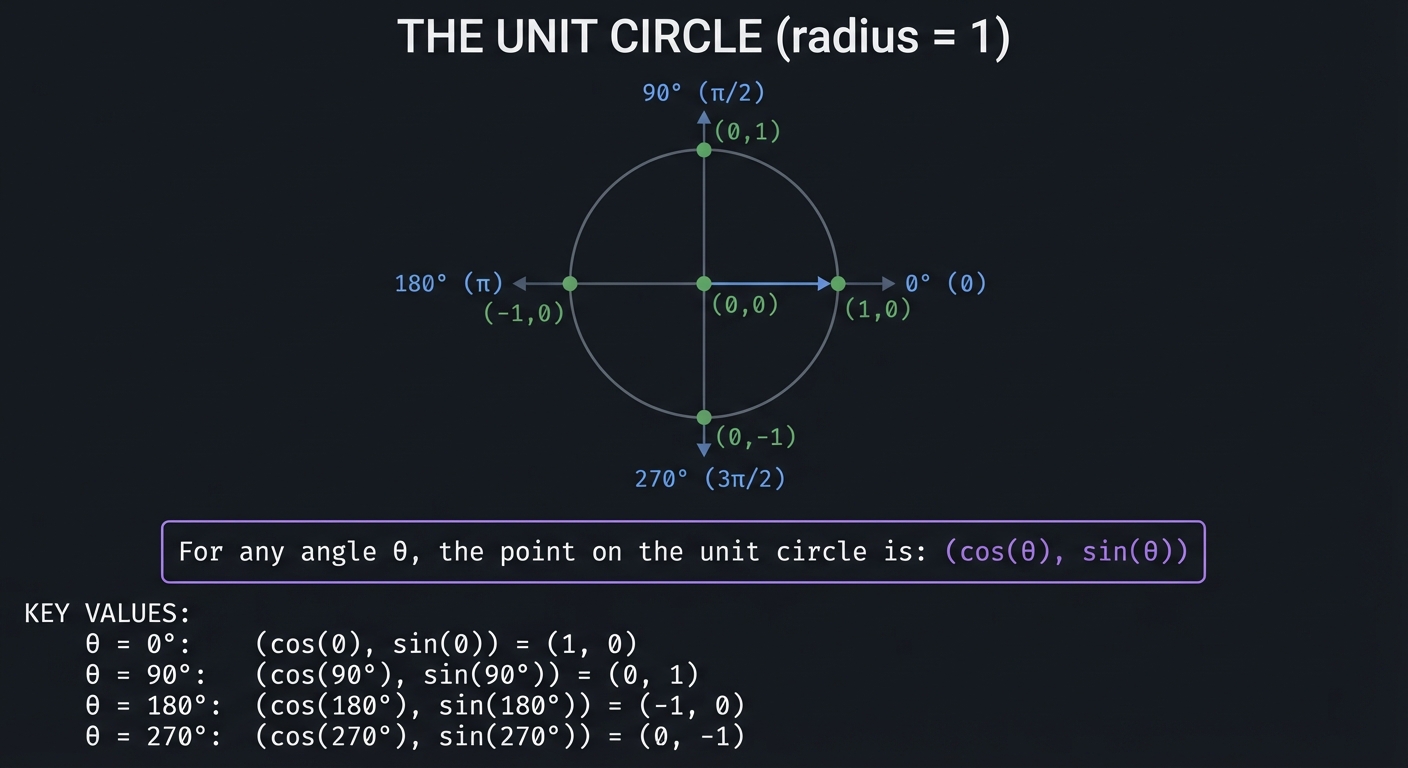

Trigonometry connects angles to ratios, circles to waves, and appears in ML through signal processing, attention mechanisms, and positional encodings.

The Unit Circle: Where It All Begins

THE UNIT CIRCLE (radius = 1)

90° (π/2)

│

(0,1) │

╱╲ │

╱ ╲ │

╱ ╲ │

╱ ╲│

180° (π) ─────●────────●────────● 0° (0)

(-1,0) │(0,0) (1,0)

╲│╱

│

│

(0,-1)

270° (3π/2)

For any angle θ, the point on the unit circle is:

(cos(θ), sin(θ))

KEY VALUES:

θ = 0°: (cos(0), sin(0)) = (1, 0)

θ = 90°: (cos(90°), sin(90°)) = (0, 1)

θ = 180°: (cos(180°), sin(180°)) = (-1, 0)

θ = 270°: (cos(270°), sin(270°)) = (0, -1)

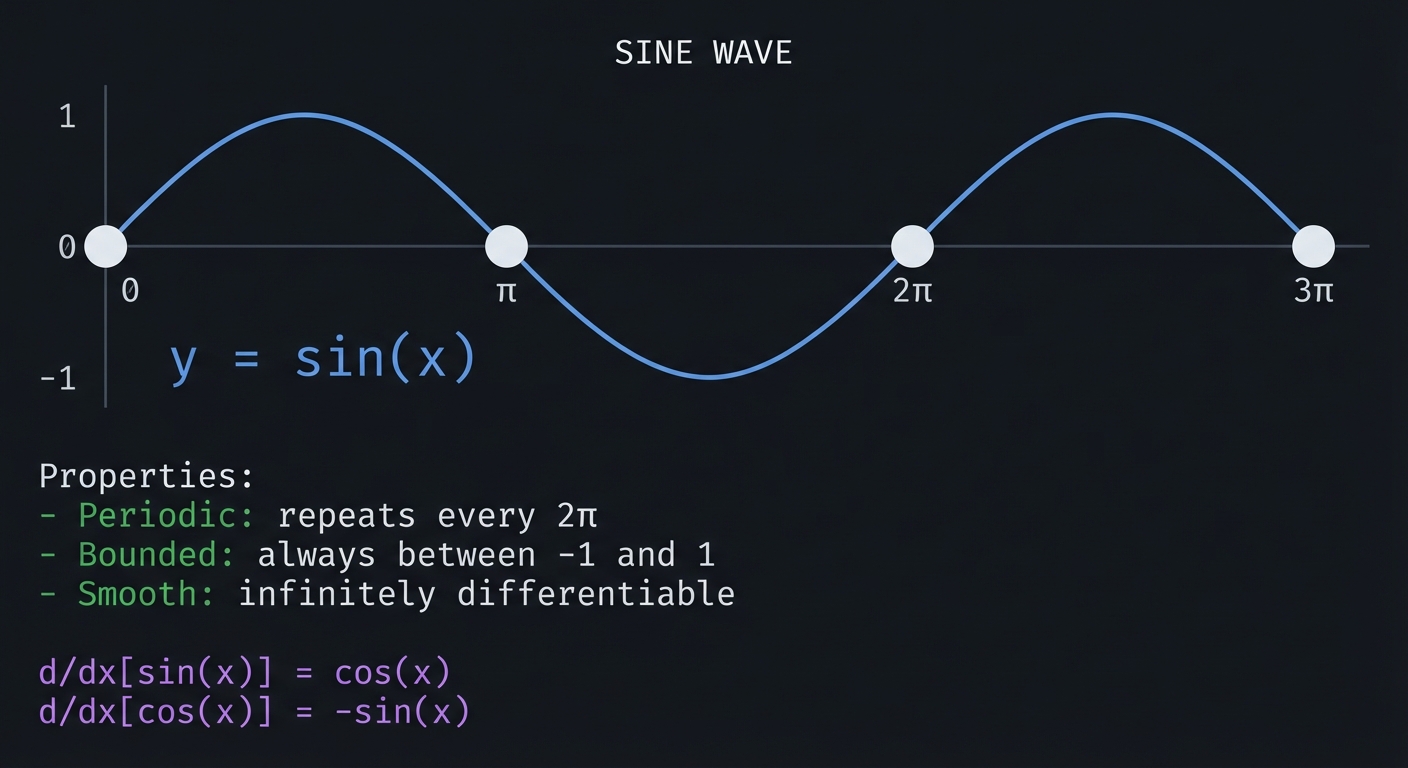

Sine and Cosine as Waves

SINE WAVE

1 ┼ ╭───╮ ╭───╮

│ ╱ ╲ ╱ ╲

│ ╱ ╲ ╱ ╲

0 ┼────────●─────────●─────────●─────────●────

│ 0 π 2π 3π

│ ╲ ╱ ╲ ╱

│ ╲ ╱ ╲ ╱

-1 ┼ ╰───╯ ╰───╯

y = sin(x)

Properties:

- Periodic: repeats every 2π

- Bounded: always between -1 and 1

- Smooth: infinitely differentiable

d/dx[sin(x)] = cos(x)

d/dx[cos(x)] = -sin(x)

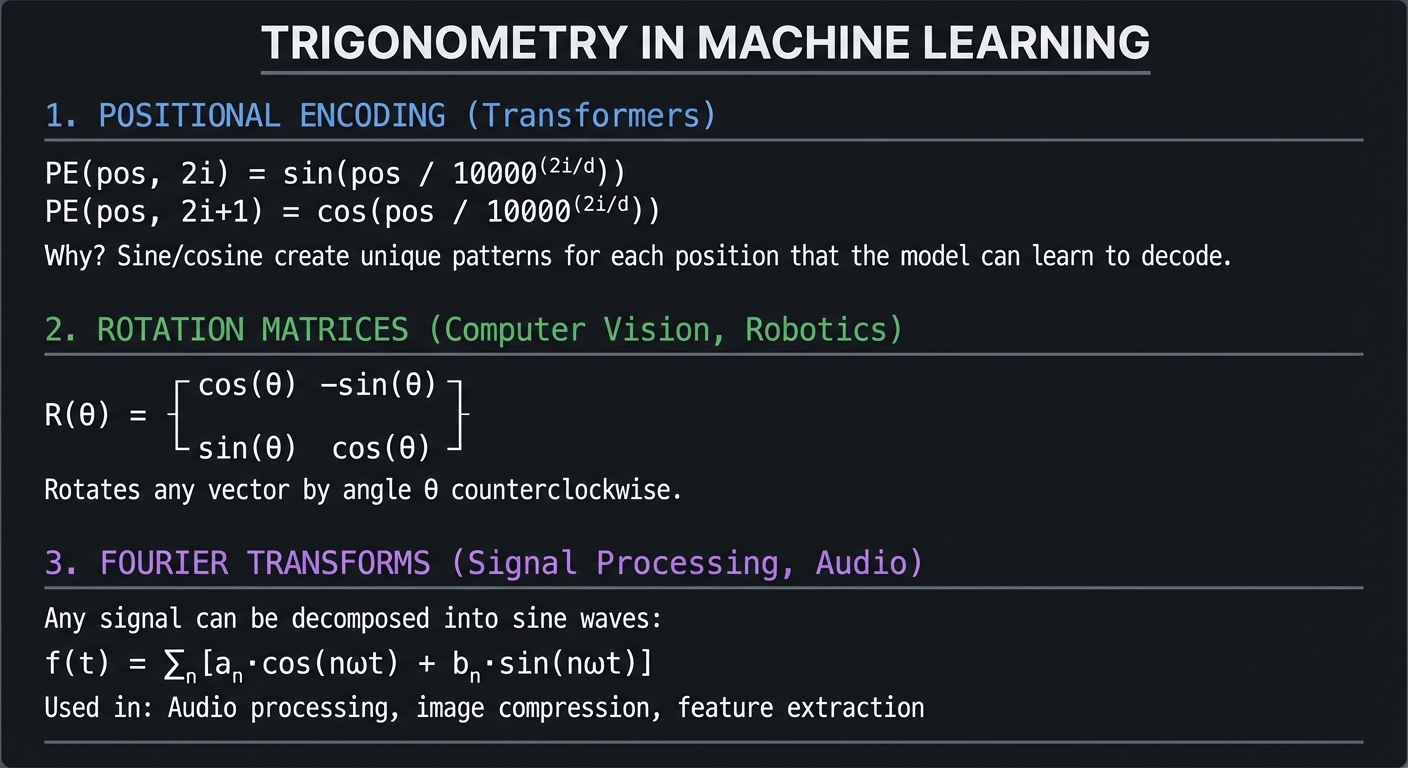

Why Trigonometry Matters for ML

TRIGONOMETRY IN MACHINE LEARNING

1. POSITIONAL ENCODING (Transformers)

─────────────────────────────────────

PE(pos, 2i) = sin(pos / 10000^(2i/d))

PE(pos, 2i+1) = cos(pos / 10000^(2i/d))

Why? Sine/cosine create unique patterns for each position

that the model can learn to decode.

2. ROTATION MATRICES (Computer Vision, Robotics)

────────────────────────────────────────────────

R(θ) = ┌ cos(θ) -sin(θ) ┐

│ │

└ sin(θ) cos(θ) ┘

Rotates any vector by angle θ counterclockwise.

3. FOURIER TRANSFORMS (Signal Processing, Audio)

────────────────────────────────────────────────

Any signal can be decomposed into sine waves:

f(t) = Σₙ [aₙ·cos(nωt) + bₙ·sin(nωt)]

Used in: Audio processing, image compression, feature extraction

Reference: “Computer Graphics from Scratch” by Gabriel Gambetta covers trigonometry in the context of rotations and projections.

\1

Linear algebra is not optional for ML—it IS the implementation. Every neural network forward pass is matrix multiplication. Every weight update is vector arithmetic. Every dataset is a matrix.

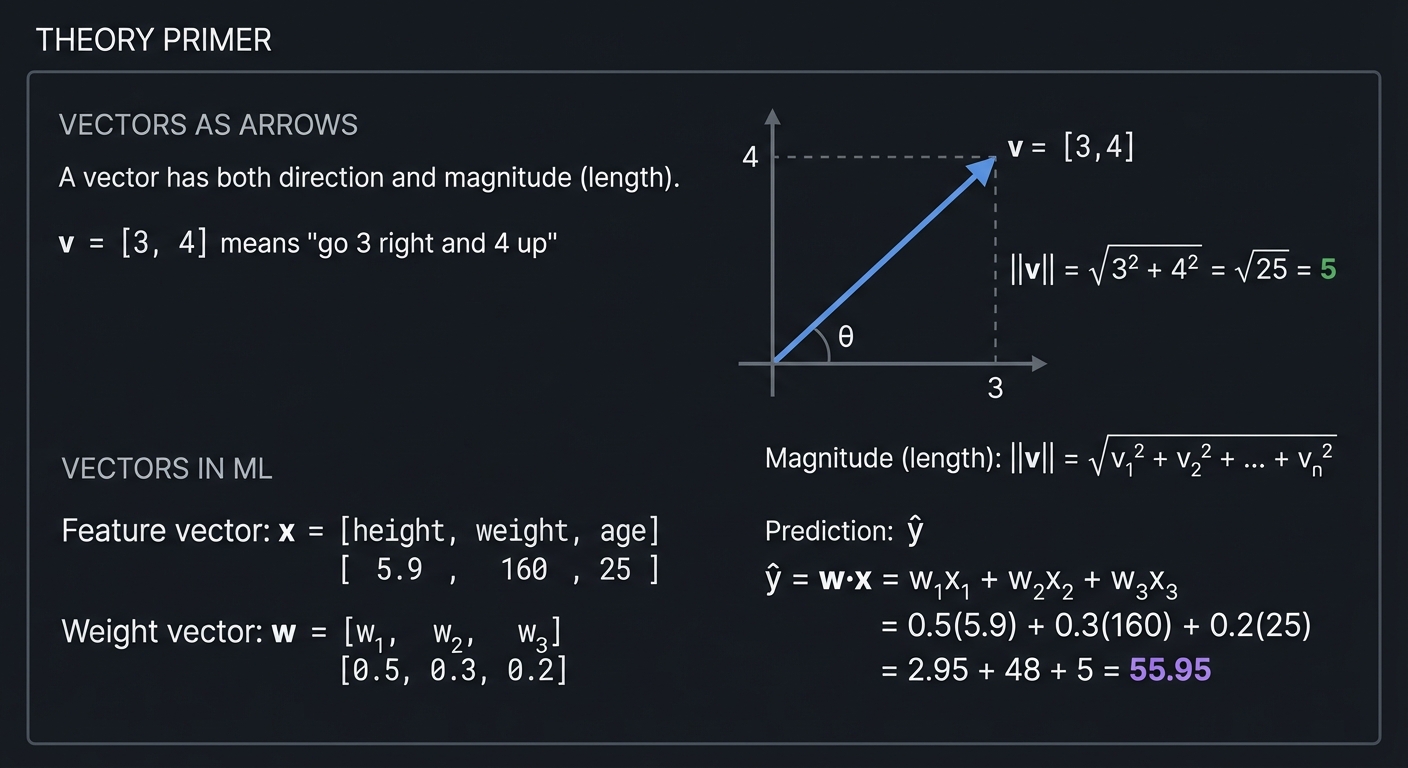

Vectors: Direction and Magnitude

VECTORS AS ARROWS

A vector has both direction and magnitude (length).

v = [3, 4] means "go 3 right and 4 up"

4 │ ↗ v = [3,4]

│ ╱

│ ╱

│ ╱ ||v|| = √(3² + 4²) = √25 = 5

│ ╱

│ ╱

│ ╱

│ ╱

│╱θ

└────────────────────

3

Magnitude (length): ||v|| = √(v₁² + v₂² + ... + vₙ²)

VECTORS IN ML:

Feature vector: x = [height, weight, age]

[ 5.9, 160, 25 ]

Weight vector: w = [w₁, w₂, w₃]

[0.5, 0.3, 0.2]

Prediction: ŷ = w·x = w₁x₁ + w₂x₂ + w₃x₃

= 0.5(5.9) + 0.3(160) + 0.2(25)

= 2.95 + 48 + 5 = 55.95

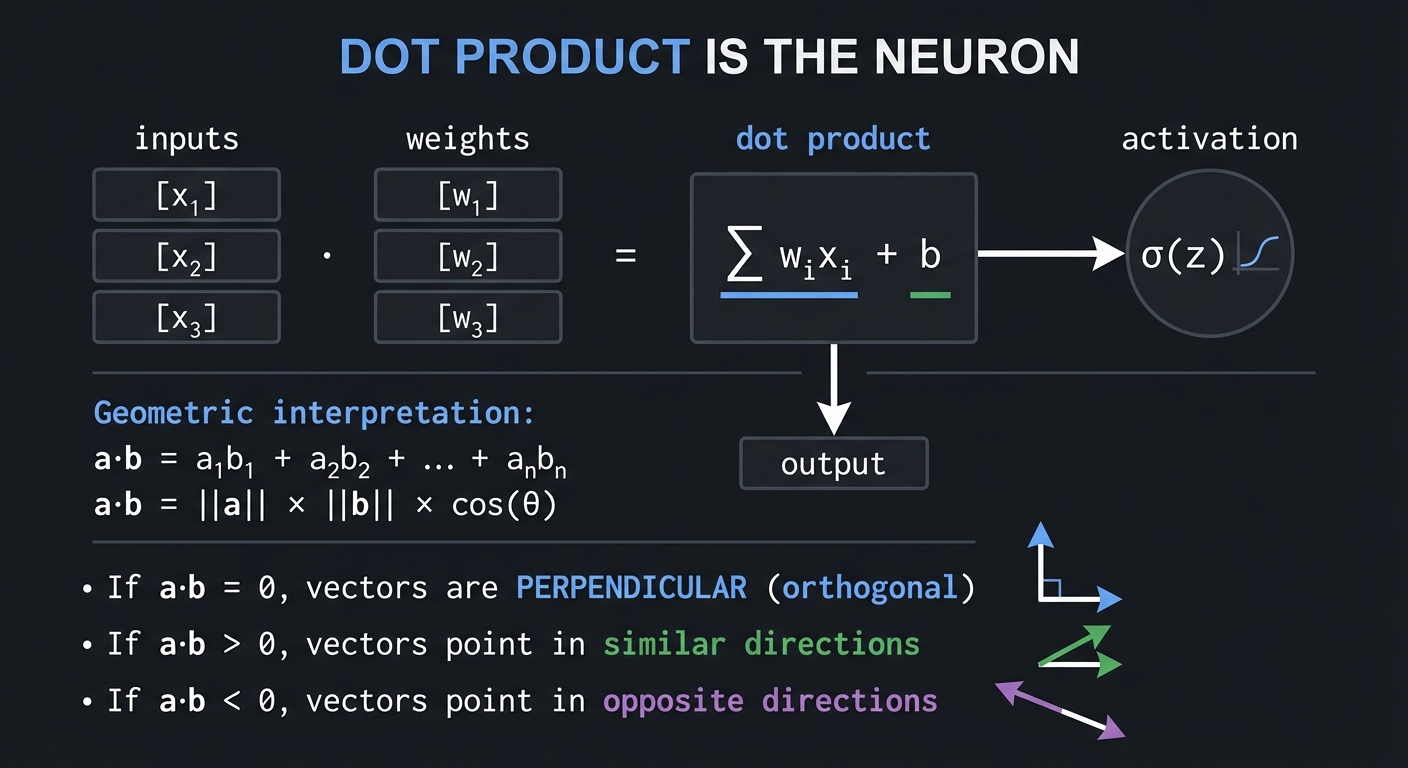

The Dot Product: The Neuron

DOT PRODUCT IS THE NEURON:

inputs weights dot product activation

[x₁] · [w₁] = Σ wᵢxᵢ + b → σ(z)

[x₂] [w₂] │

[x₃] [w₃] ▼

output

a·b = a₁b₁ + a₂b₂ + ... + aₙbₙ

Geometric interpretation:

a·b = ||a|| × ||b|| × cos(θ)

If a·b = 0, vectors are PERPENDICULAR (orthogonal)

If a·b > 0, vectors point in similar directions

If a·b < 0, vectors point in opposite directions

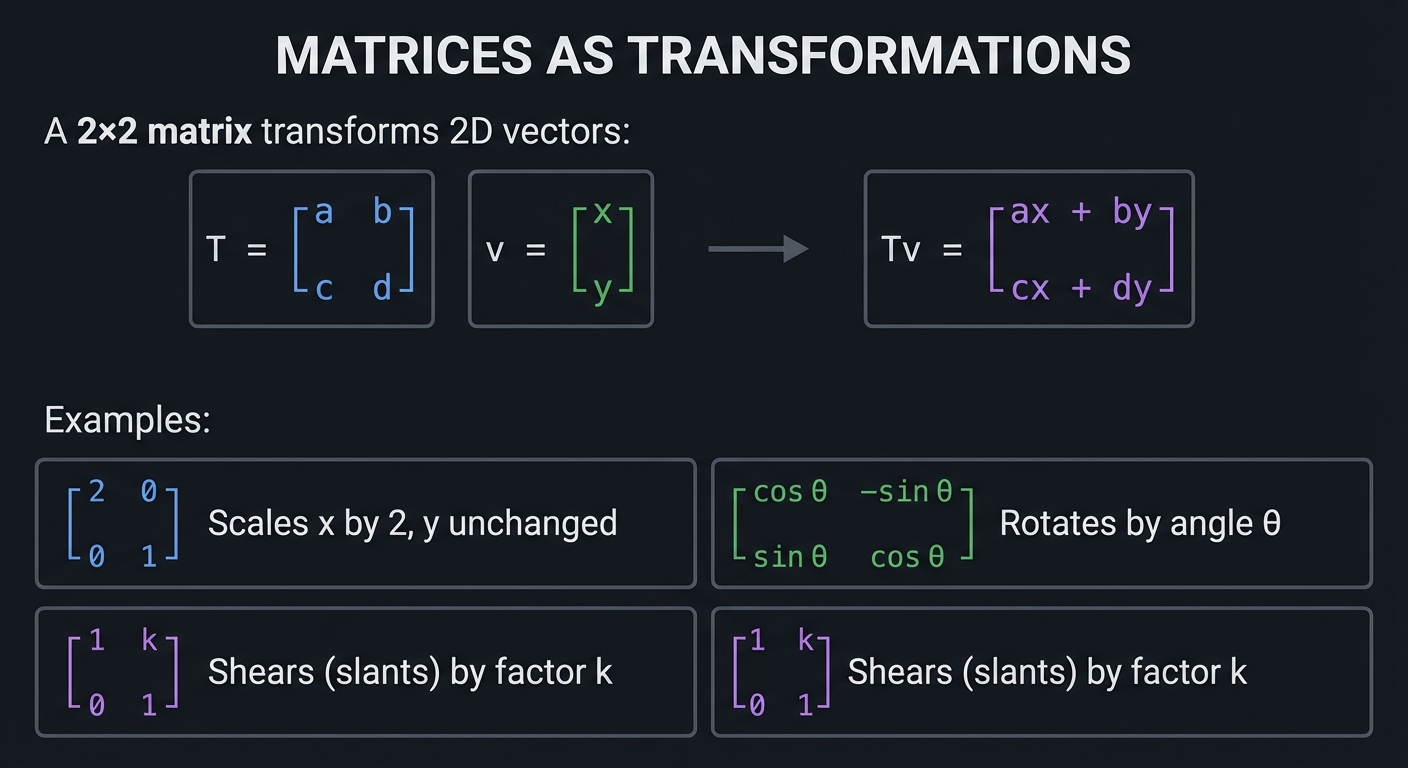

Matrices as Transformations

MATRICES AS TRANSFORMATIONS

A 2×2 matrix transforms 2D vectors:

T = ┌ a b ┐ v = ┌ x ┐

│ │ │ │

└ c d ┘ └ y ┘

Tv = ┌ ax + by ┐

│ │

└ cx + dy ┘

Examples:

┌ 2 0 ┐ Scales x by 2, y unchanged

│ │

└ 0 1 ┘

┌ cos θ -sin θ ┐ Rotates by angle θ

│ │

└ sin θ cos θ ┘

┌ 1 k ┐ Shears (slants) by factor k

│ │

└ 0 1 ┘

Eigenvalues and Eigenvectors: The Key ML Concept

EIGENVECTORS: SPECIAL DIRECTIONS

For a matrix A, an eigenvector v satisfies:

A·v = λ·v

"When A transforms v, v doesn't change direction,

only scales by factor λ (the eigenvalue)"

WHY EIGENVECTORS MATTER FOR ML:

1. PCA: Eigenvectors of covariance matrix = principal components

(directions of maximum variance in data)

2. PageRank: The ranking vector is the dominant eigenvector

of the link matrix

3. Spectral Clustering: Uses eigenvectors of similarity matrix

4. Stability: Eigenvalues tell if gradients will explode/vanish

|λ| > 1: grows exponentially (exploding gradients)

|λ| < 1: shrinks exponentially (vanishing gradients)

Reference: “Math for Programmers” by Paul Orland, Chapters 5-7, covers vectors and matrices with visual intuition. “Linear Algebra Done Right” by Sheldon Axler provides deeper theoretical foundations.

\1

Calculus answers the question: “How does the output change when I change the input?” This is fundamental to ML because training is about adjusting parameters to change (reduce) the loss.

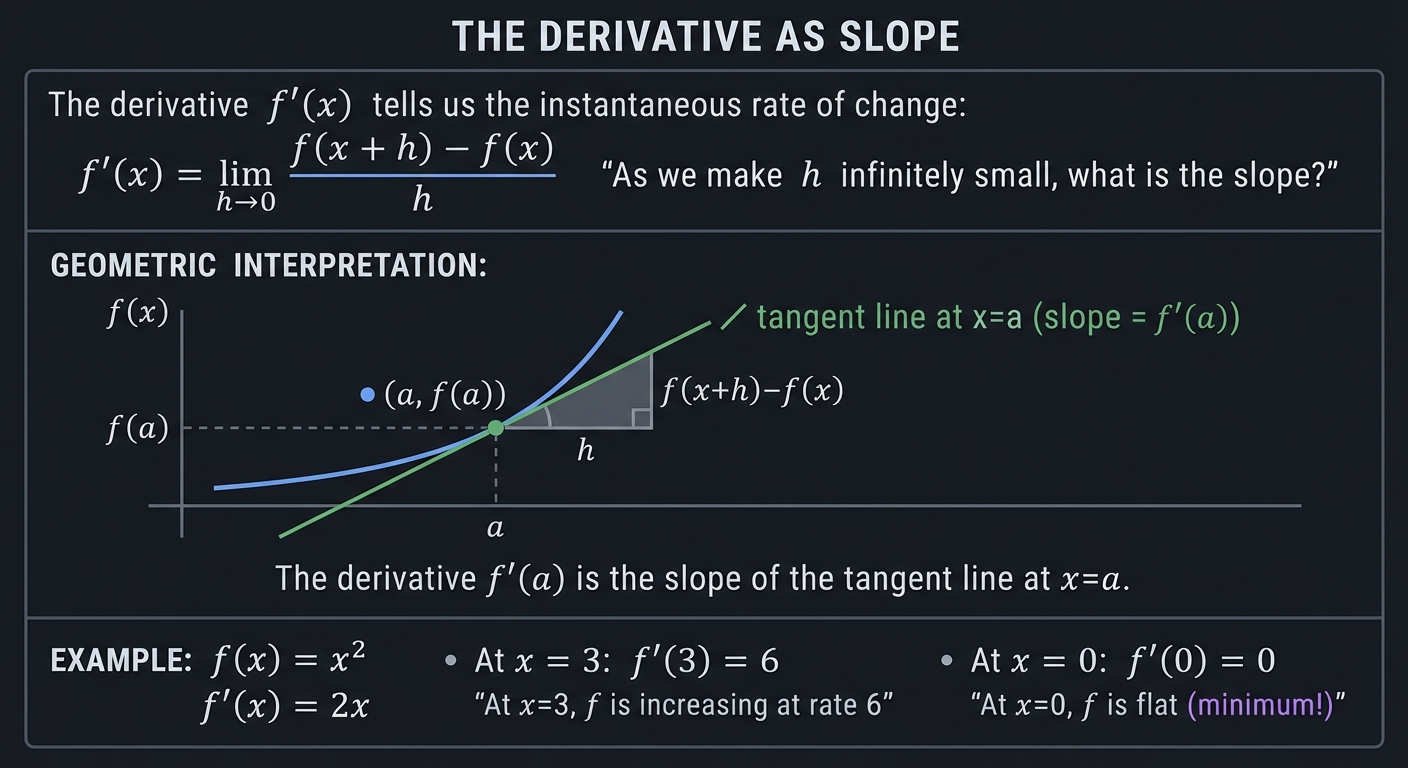

Derivatives: Rate of Change

THE DERIVATIVE AS SLOPE

The derivative f'(x) tells us the instantaneous rate of change:

f'(x) = lim f(x + h) - f(x)

h→0 ─────────────────

h

"As we make h infinitely small, what is the slope?"

GEOMETRIC INTERPRETATION:

f(x)

│ ╱ tangent line at x=a

│ ╱ (slope = f'(a))

│ ╱

│ ●─────────────

│ ╱╱│

│ ╱╱ │

│ ╱╱ │ f(a)

│╱╱──────┼─────────────────

a

The derivative f'(a) is the slope of the tangent line at x=a.

EXAMPLE: f(x) = x²

f'(x) = 2x

At x = 3: f'(3) = 6

"At x=3, f is increasing at rate 6"

At x = 0: f'(0) = 0

"At x=0, f is flat (minimum!)"

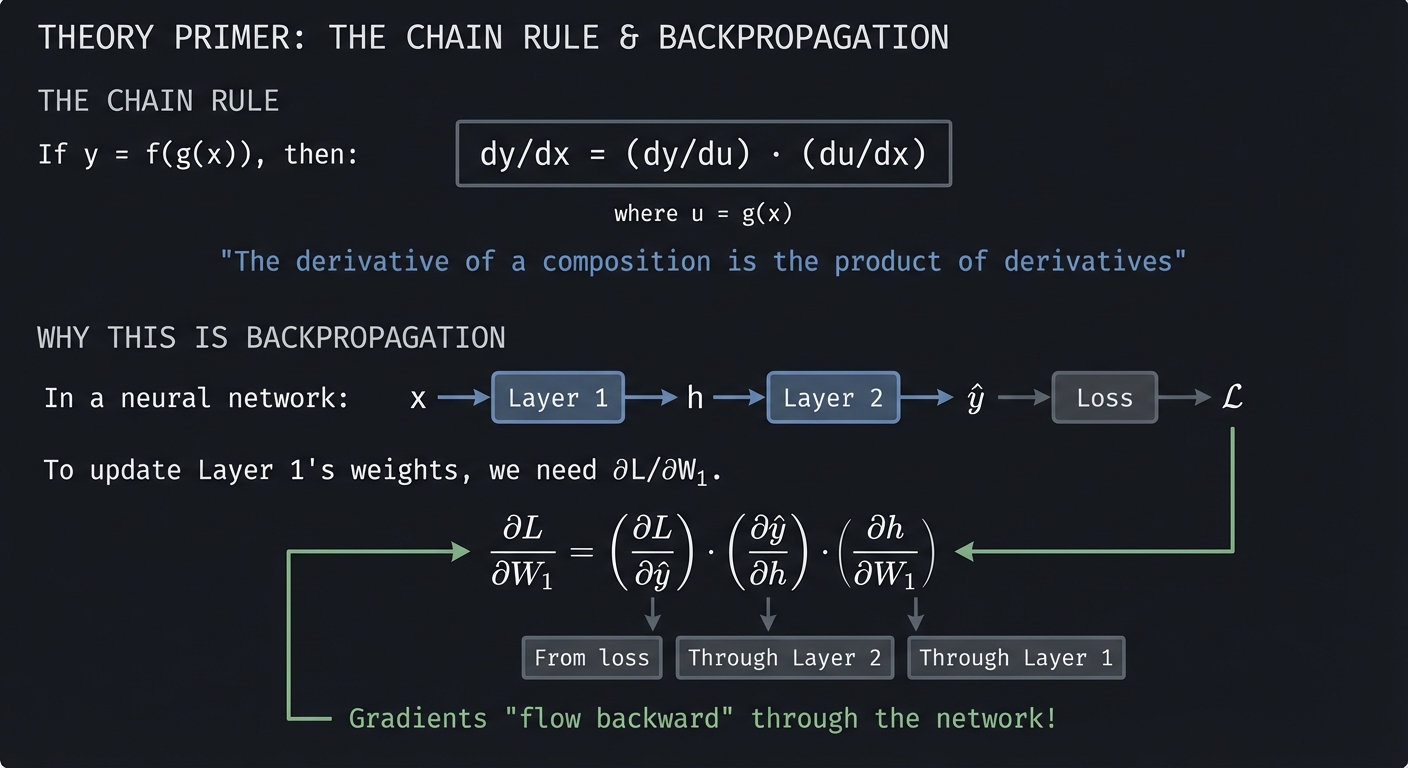

The Chain Rule: The Heart of Backpropagation

THE CHAIN RULE

If y = f(g(x)), then:

dy/dx = (dy/du) · (du/dx)

where u = g(x)

"The derivative of a composition is the product of derivatives"

WHY THIS IS BACKPROPAGATION:

In a neural network:

x → [Layer 1] → h → [Layer 2] → ŷ → [Loss] → L

To update Layer 1's weights, we need ∂L/∂W₁.

Chain rule:

∂L/∂W₁ = (∂L/∂ŷ) · (∂ŷ/∂h) · (∂h/∂W₁)

└──┬──┘ └──┬──┘ └──┬──┘

│ │ │

From loss Through Through

Layer 2 Layer 1

Gradients "flow backward" through the network!

Gradients: Derivatives in Multiple Dimensions

THE GRADIENT

The gradient ∇f collects all partial derivatives into a vector:

∇f = [∂f/∂x, ∂f/∂y, ∂f/∂z, ...]

For f(x, y) = x² + y²:

∇f = [2x, 2y]

At point (3, 4): ∇f = [6, 8]

CRITICAL PROPERTY:

The gradient points in the direction of STEEPEST ASCENT.

Therefore, to minimize f, we move in direction -∇f (steepest descent).

Reference: “Calculus” by James Stewart provides comprehensive coverage. “Neural Networks and Deep Learning” by Michael Nielsen explains backpropagation beautifully.

\1

ML models don’t just make predictions—they reason about uncertainty. Probability provides the framework for this reasoning.

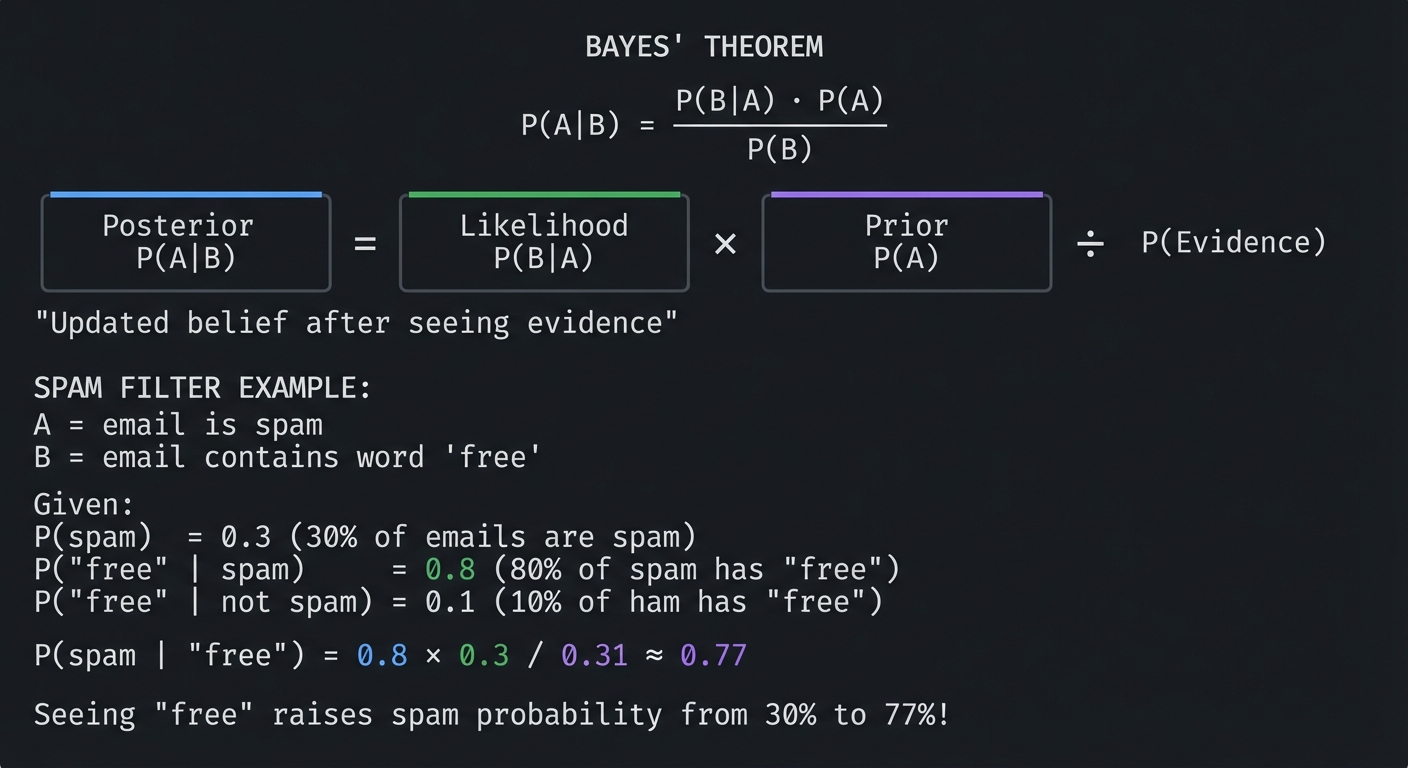

Bayes’ Theorem: Updating Beliefs

BAYES' THEOREM

P(A|B) = P(B|A) · P(A)

─────────────

P(B)

┌─────────┐ ┌─────────┐ ┌─────────┐

│Posterior│ = │Likelihood│ × │ Prior │ ÷ P(Evidence)

│ P(A|B) │ │ P(B|A) │ │ P(A) │

└─────────┘ └─────────┘ └─────────┘

"Updated belief after seeing evidence"

SPAM FILTER EXAMPLE:

A = email is spam

B = email contains word "free"

Given:

P(spam) = 0.3 (30% of emails are spam)

P("free" | spam) = 0.8 (80% of spam has "free")

P("free" | not spam) = 0.1 (10% of ham has "free")

P(spam | "free") = 0.8 × 0.3 / 0.31 ≈ 0.77

Seeing "free" raises spam probability from 30% to 77%!

Key Distributions for ML

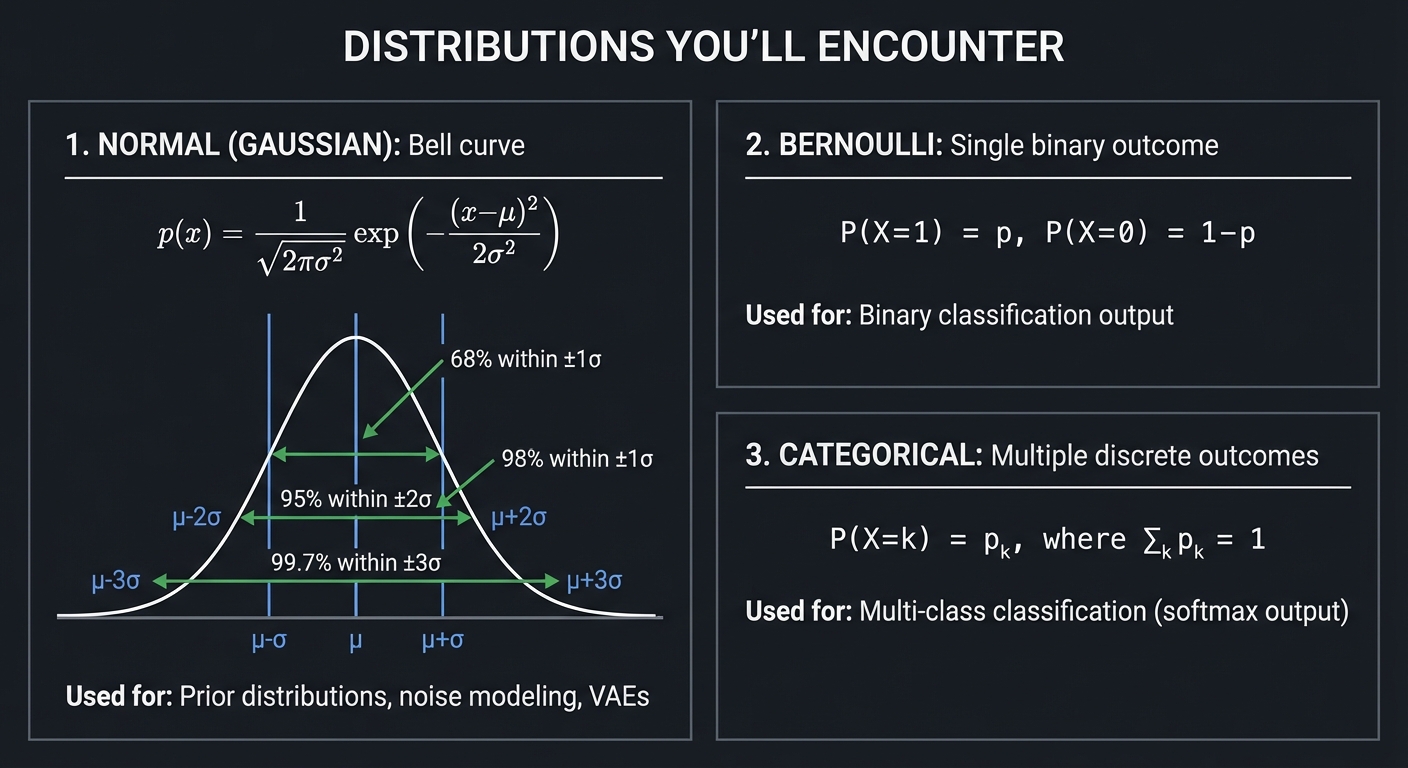

DISTRIBUTIONS YOU'LL ENCOUNTER

1. NORMAL (GAUSSIAN): Bell curve

────────────────────────────────

p(x) = (1/√(2πσ²)) exp(-(x-μ)²/(2σ²))

│ ╭───╮

│ ╱ ╲ 68% within ±1σ

│ ╱ ╲ 95% within ±2σ

│ ╱ ╲ 99.7% within ±3σ

│ ╱ ╲

└───────────────────

μ-σ μ μ+σ

Used for: Prior distributions, noise modeling, VAEs

2. BERNOULLI: Single binary outcome

────────────────────────────────

P(X=1) = p, P(X=0) = 1-p

Used for: Binary classification output

3. CATEGORICAL: Multiple discrete outcomes

─────────────────────────────────────────

P(X=k) = pₖ, where Σₖ pₖ = 1

Used for: Multi-class classification (softmax output)

Reference: “Think Bayes” by Allen Downey provides an intuitive, computational approach to probability.

\1

All of machine learning reduces to optimization: define a loss function that measures how wrong your model is, then find parameters that minimize it.

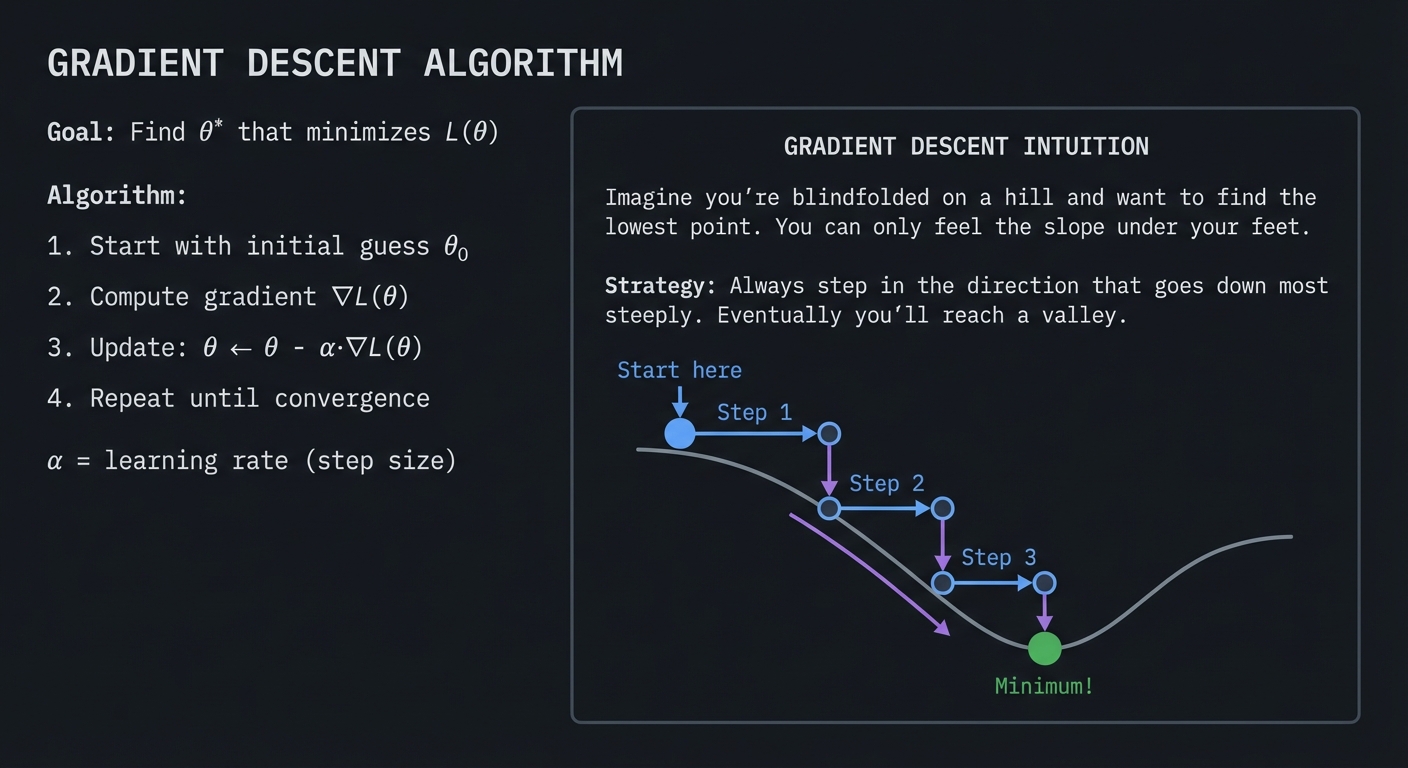

Gradient Descent: Walking Downhill

GRADIENT DESCENT ALGORITHM

Goal: Find θ* that minimizes L(θ)

Algorithm:

1. Start with initial guess θ₀

2. Compute gradient ∇L(θ)

3. Update: θ ← θ - α·∇L(θ)

4. Repeat until convergence

α = learning rate (step size)

┌─────────────────────────────────────────────────────────────┐

│ │

│ GRADIENT DESCENT INTUITION │

│ │

│ Imagine you're blindfolded on a hill and want to find │

│ the lowest point. You can only feel the slope under │

│ your feet. │

│ │

│ Strategy: Always step in the direction that goes down │

│ most steeply. Eventually you'll reach a valley. │

│ │

│ Start here │

│ ↓ │

│ ●───→ Step 1 │

│ ╲ │

│ ●───→ Step 2 │

│ ╲ │

│ ●───→ Step 3 │

│ ╲ │

│ ● Minimum! │

│ │

└─────────────────────────────────────────────────────────────┘

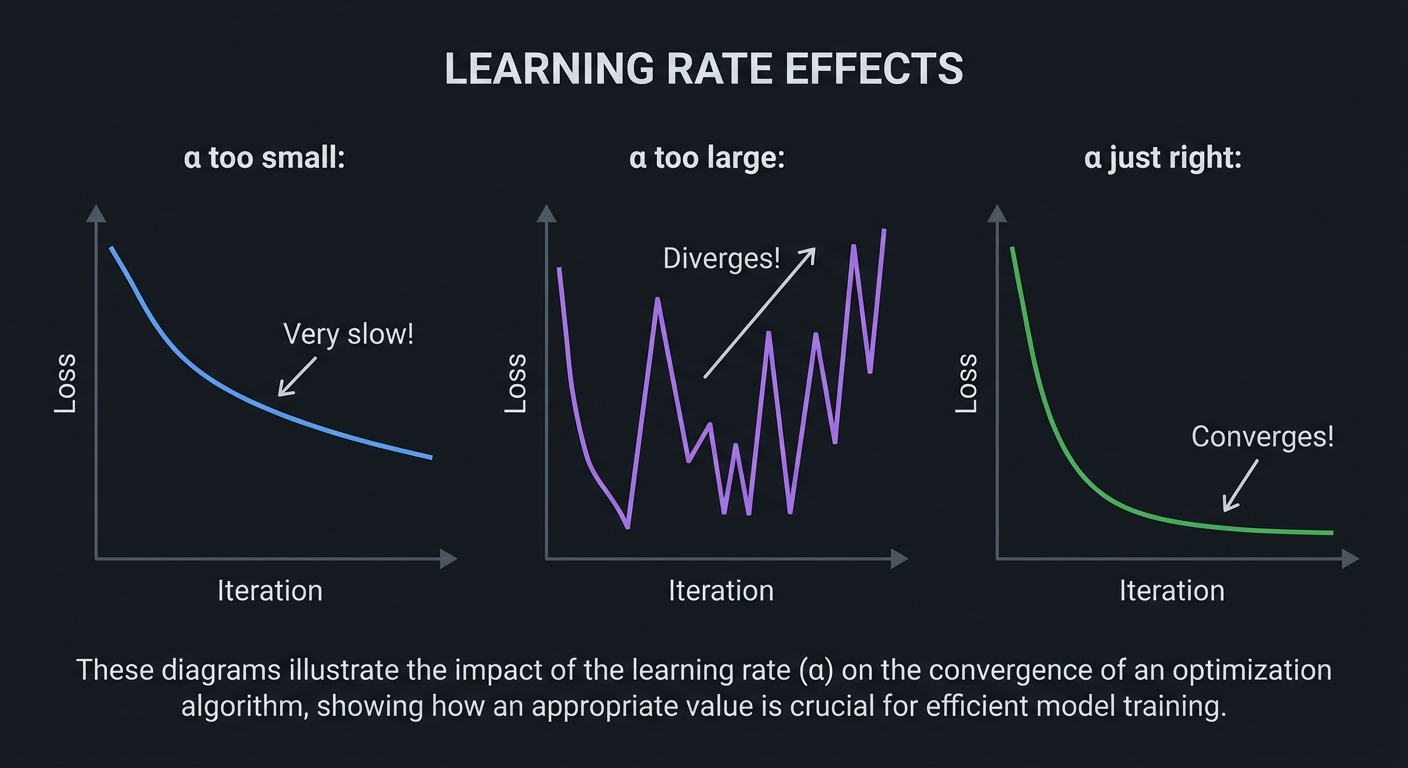

Learning Rate Effects

LEARNING RATE EFFECTS

α too small: α too large:

Loss Loss

│ │ ╱╲ ╱╲

│╲ │ ╱ ╲ ╱ ╲

│ ╲ │ ╱ ╲╱ ╲

│ ╲ │ ╱ ↗ Diverges!

│ ╲ │╱

│ ╲ └────────────────

│ ╲ Iteration

│ ╲

│ ╲ Very slow!

└────────╲─────────────

Iteration

α just right:

Loss

│╲

│ ╲

│ ╲

│ ╲

│ ╲

│ ╲_______________ Converges!

└──────────────────────

Iteration

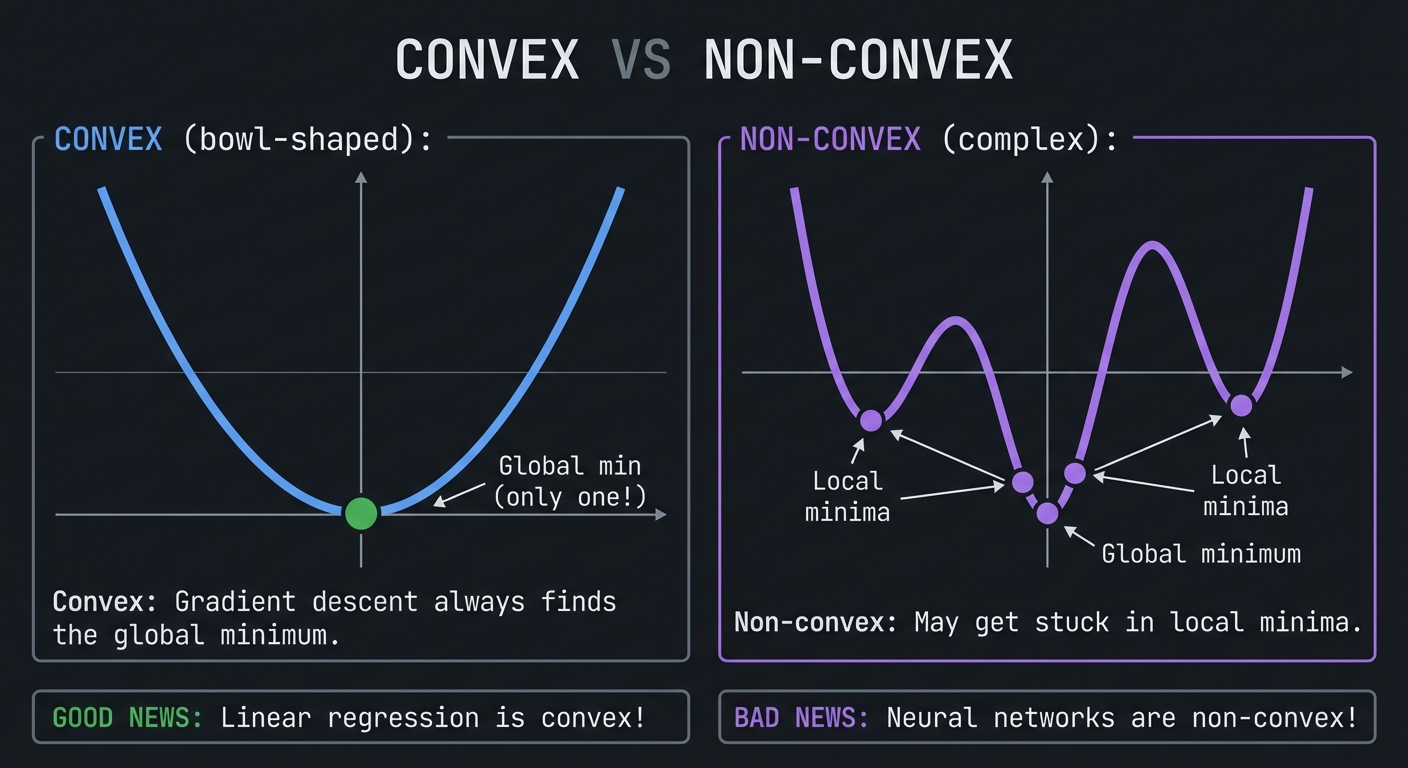

Convexity: When Optimization is Easy

CONVEX VS NON-CONVEX

CONVEX (bowl-shaped): NON-CONVEX (complex):

╲ ╱ ╱╲ ╱╲

╲ ╱ ╱ ╲ ╱ ╲

╲ ╱ ╱ ● ╲

● ● ●

Global min Local Local

(only one!) minima minima

Convex: Gradient descent always finds the global minimum.

Non-convex: May get stuck in local minima.

GOOD NEWS: Linear regression is convex!

BAD NEWS: Neural networks are non-convex!

Reference: “Hands-On Machine Learning” by Aurelien Geron, Chapter 4, provides practical coverage of gradient descent. “Deep Learning” by Goodfellow, Bengio, and Courville gives theoretical depth.

\1

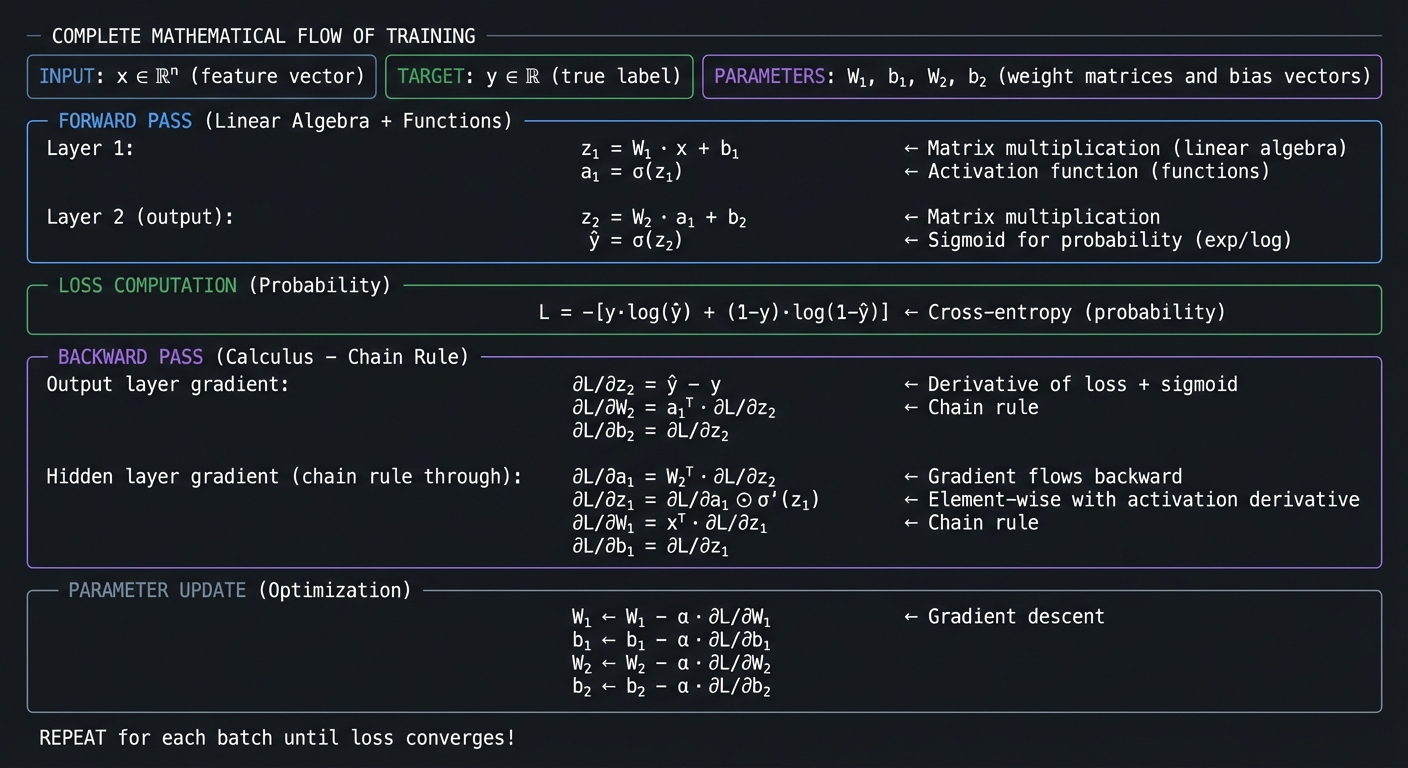

COMPLETE MATHEMATICAL FLOW OF TRAINING

INPUT: x ∈ ℝⁿ (feature vector)

TARGET: y ∈ ℝ (true label)

PARAMETERS: W₁, b₁, W₂, b₂ (weight matrices and bias vectors)

═══════════════════════════════════════════════════════════════════

FORWARD PASS (Linear Algebra + Functions)

──────────────────────────────────────────

Layer 1:

z₁ = W₁ · x + b₁ ← Matrix multiplication (linear algebra)

a₁ = σ(z₁) ← Activation function (functions)

Layer 2 (output):

z₂ = W₂ · a₁ + b₂ ← Matrix multiplication

ŷ = σ(z₂) ← Sigmoid for probability (exp/log)

═══════════════════════════════════════════════════════════════════

LOSS COMPUTATION (Probability)

───────────────────────────────

L = -[y·log(ŷ) + (1-y)·log(1-ŷ)] ← Cross-entropy (probability)

═══════════════════════════════════════════════════════════════════

BACKWARD PASS (Calculus - Chain Rule)

──────────────────────────────────────

Output layer gradient:

∂L/∂z₂ = ŷ - y ← Derivative of loss + sigmoid

∂L/∂W₂ = a₁ᵀ · ∂L/∂z₂ ← Chain rule

∂L/∂b₂ = ∂L/∂z₂

Hidden layer gradient (chain rule through):

∂L/∂a₁ = W₂ᵀ · ∂L/∂z₂ ← Gradient flows backward

∂L/∂z₁ = ∂L/∂a₁ ⊙ σ'(z₁) ← Element-wise with activation derivative

∂L/∂W₁ = xᵀ · ∂L/∂z₁ ← Chain rule

∂L/∂b₁ = ∂L/∂z₁

═══════════════════════════════════════════════════════════════════

PARAMETER UPDATE (Optimization)

─────────────────────────────────

W₁ ← W₁ - α · ∂L/∂W₁ ← Gradient descent

b₁ ← b₁ - α · ∂L/∂b₁

W₂ ← W₂ - α · ∂L/∂W₂

b₂ ← b₂ - α · ∂L/∂b₂

═══════════════════════════════════════════════════════════════════

REPEAT for each batch until loss converges!

This is what happens inside model.fit(). Every concept we’ve covered—algebra, functions, exponents, linear algebra, calculus, probability, and optimization—comes together in this elegant mathematical dance.

When you complete these 31 projects, you won’t just understand this diagram—you’ll have built every component yourself.

Glossary

- Feature: A measurable input variable used by a model to make predictions.

- Parameter: A learnable value (for example, a weight or bias) updated during training.

- Loss Function: A numeric score that measures prediction error; training minimizes this value.

- Gradient: Vector of partial derivatives indicating the direction of steepest increase in loss.

- Convexity: A shape property where any local minimum is also a global minimum.

- Overfitting: When a model memorizes training data patterns that do not generalize to new data.

- Regularization: A constraint added to reduce overfitting by penalizing complex models.

- Likelihood: Probability of observing the data under a chosen model and parameter set.

- Covariance: Measure of how two variables vary together around their means.

- Condition Number: A measure of how sensitive a numerical problem is to small input perturbations.

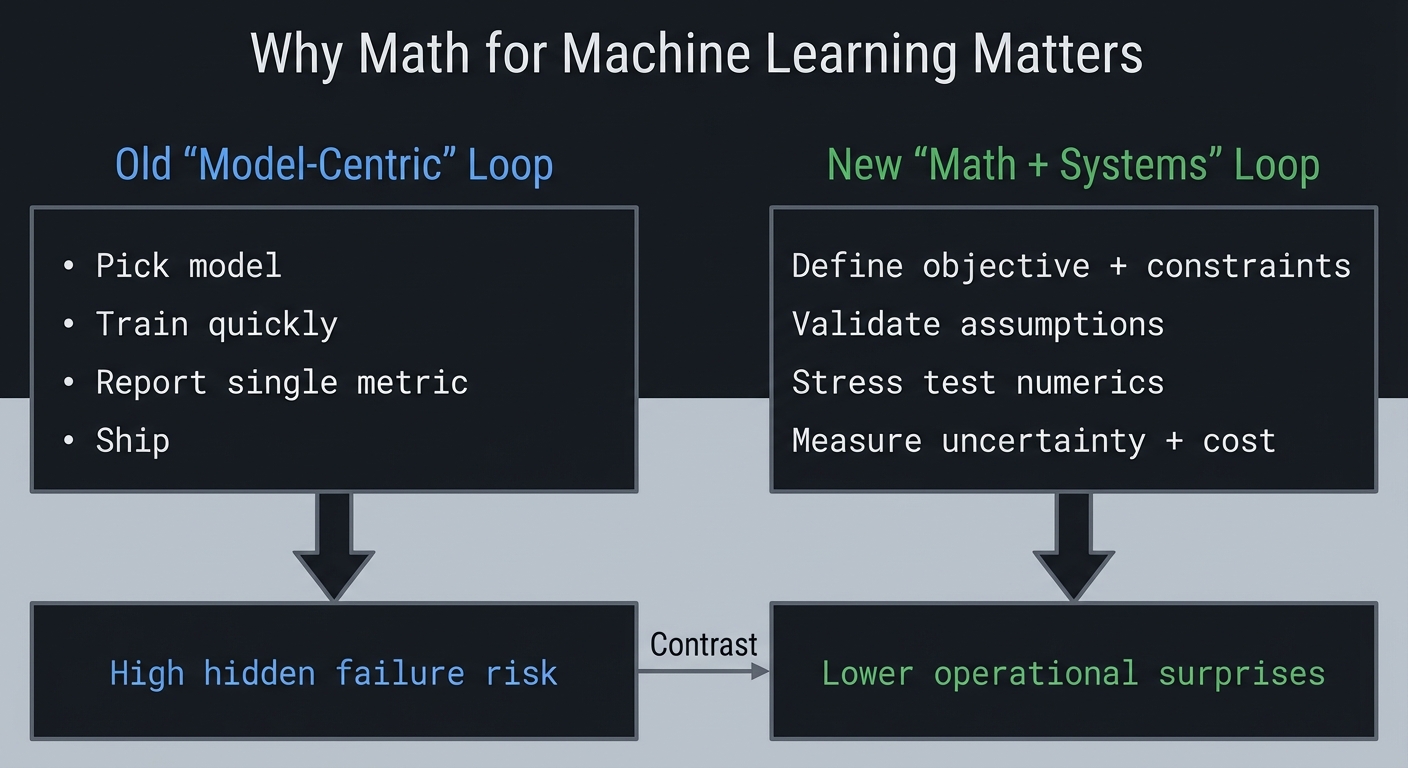

Why Math for Machine Learning Matters

Modern ML systems are not bottlenecked by model APIs; they are bottlenecked by data quality, objective design, numerical behavior, and evaluation rigor.

- In 2024, 78% of organizations reported using AI, up from 55% in 2023 (Stanford AI Index 2025).

- The same report notes inference-cost declines for GPT-3.5-class systems of more than 280x between November 2022 and October 2024, which increases pressure to ship faster but safely (Stanford AI Index 2025).

- U.S. labor demand is also strong: Data Scientist employment is projected to grow 36% from 2023 to 2033 (U.S. Bureau of Labor Statistics).

- Developer workflow impact is now mainstream: 84.6% of developers are using or planning to use AI tools (Stack Overflow Developer Survey 2025).

Old "Model-Centric" Loop New "Math + Systems" Loop

+-----------------------+ +------------------------------+

| Pick model | | Define objective + constraints|

| Train quickly | | Validate assumptions |

| Report single metric | | Stress test numerics |

| Ship | | Measure uncertainty + cost |

+-----------------------+ +------------------------------+

| |

v v

High hidden failure risk Lower operational surprises

Context and evolution: early ML education emphasized algorithms and libraries; current practice increasingly emphasizes data/metric realism, failure analysis, and reproducibility discipline.

Concept Summary Table

| Concept Cluster | What You Need to Internalize | |||

|---|---|---|---|---|

| Arithmetic & Order of Operations | PEMDAS is not arbitrary - it reflects how mathematical expressions compose. Parentheses group, exponents bind tightest, multiplication/division before addition/subtraction. This hierarchy appears everywhere: in code parsing, in how neural networks process layer-by-layer, in how we decompose complex problems. | |||

| Variables & Algebraic Expressions | Variables are placeholders that let you express relationships abstractly. The power of algebra is generalization - once you solve ax + b = c for x, you’ve solved infinitely many specific problems. In ML, model parameters (weights) are variables we solve for. |

|||

| Equations & Solving Systems | An equation is a constraint that must be satisfied. Solving means finding values that satisfy all constraints simultaneously. Linear systems (Ax = b) are the foundation of regression. The key insight: transforming equations preserves solutions - you can manipulate freely as long as you apply operations to both sides. |

|||

| Functions (Domain, Range, Mapping) | Functions are deterministic input-output machines: each input maps to exactly one output. Domain = valid inputs, Range = possible outputs. ML models ARE functions: they take features as input and produce predictions as output. Understanding functions means understanding that f(x) is a recipe, not a number. |

|||

| Function Composition & Inverse | Composing functions (f(g(x))) chains transformations - this is exactly what neural network layers do. Inverse functions “undo” each other (f(f^{-1}(x)) = x). Log and exp are inverses. Understanding composition is essential for backpropagation - gradients flow backward through composed functions via the chain rule. |

|||

| Exponents & Exponential Functions | Exponents represent repeated multiplication: a^n means “multiply a by itself n times.” Exponential functions (e^x) grow explosively and appear everywhere: compound interest, population growth, neural network activations (softmax, sigmoid). The number e (2.718…) is special because d/dx(e^x) = e^x. |

|||

| Logarithms | Logarithms are inverse of exponentiation: log_b(x) = y means b^y = x. They convert multiplication to addition (log(ab) = log(a) + log(b)), making them invaluable for numerical stability. In ML: log-probabilities prevent underflow, cross-entropy loss uses logs, and we often work in log-space for numerical reasons. |

|||

| Trigonometry (Sine, Cosine, Unit Circle) | Trig functions encode circular relationships: given an angle, sin/cos return coordinates on the unit circle. They’re periodic (repeat every 2pi), which makes them useful for modeling cycles. In ML: positional encodings in transformers use sin/cos, rotation matrices use them, and Fourier transforms decompose signals into sines and cosines. | |||

| Vectors & Vector Spaces | Vectors are ordered lists of numbers that represent points or directions in space. They can be added and scaled. A vector space is the set of all vectors you can create through these operations. In ML, every data point is a vector (feature vector), every word is a vector (embedding), every image is a vector (flattened pixels). | |||

| Vector Operations (Dot Product, Norm) | The dot product a.b = sum(a_i * b_i) measures similarity and alignment between vectors. The norm ||a|| measures vector length. Dot products are everywhere in ML: computing weighted sums, measuring cosine similarity, the forward pass of neurons. The norm appears in regularization and normalization. |

|||

| Matrices & Matrix Operations | Matrices are 2D arrays of numbers that represent linear transformations. Matrix multiplication applies one transformation after another. In ML, weight matrices transform inputs, batch computations use matrices, and images are matrices. Understanding matrix multiplication as “row-column dot products” is essential. | |||

| Linear Transformations | A linear transformation preserves addition and scaling: T(a+b) = T(a) + T(b) and T(ca) = cT(a). Every matrix represents a linear transformation. Neural network layers (before activation) are linear transformations. Understanding this geometric view - matrices rotate, scale, shear, project - builds deep intuition. |

|||

| Gaussian Elimination & Solving Linear Systems | Gaussian elimination systematically solves Ax = b by reducing to row echelon form. It reveals whether solutions exist (consistency), how many (uniqueness vs infinite), and finds them. This is the foundation of understanding when linear systems have solutions - critical for regression and optimization. |

|||

| Determinants | The determinant measures how a matrix transformation scales volume. If det(A) = 0, the matrix squashes space to a lower dimension (singular/non-invertible). Non-zero determinant means the transformation is reversible. In ML, determinants appear in Gaussian distributions, change of variables, and checking matrix invertibility. | |||

| Matrix Inverse | The inverse A^{-1} “undoes” matrix A: A * A^{-1} = I. Only square matrices with non-zero determinant have inverses. The normal equation for linear regression uses matrix inverse: w = (X^T X)^{-1} X^T y. Understanding when inverses exist and how to compute them is fundamental. |

|||

| Eigenvalues & Eigenvectors | Eigenvectors are special directions that don’t change orientation under transformation - they only scale by the eigenvalue: Av = lambda*v. They reveal the “natural axes” of a transformation. In ML: PCA finds eigenvectors of covariance, PageRank is an eigenvector problem, stability analysis uses eigenvalues. This is arguably THE most important linear algebra concept for ML. |

|||

| Eigendecomposition & Diagonalization | When a matrix can be written as A = PDP^{-1} where D is diagonal, we’ve diagonalized it. The columns of P are eigenvectors, diagonal of D are eigenvalues. This simplifies matrix powers: A^n = PD^nP^{-1}. Understanding diagonalization makes PCA, spectral clustering, and matrix analysis tractable. |

|||

| Singular Value Decomposition (SVD) | SVD generalizes eigendecomposition to non-square matrices: A = U*Sigma*V^T. It reveals the fundamental structure of any matrix. In ML: recommendation systems, image compression, noise reduction, and understanding what neural networks learn. SVD shows the “most important” directions in data. |

|||

| Derivatives & Rates of Change | The derivative measures instantaneous rate of change: how fast f(x) changes as x changes. It’s the slope of the tangent line. In ML, derivatives tell us how loss changes as we adjust parameters - the foundation of learning. Without derivatives, we couldn’t train neural networks. | |||

| Differentiation Rules (Power, Product, Quotient) | Rules that make differentiation mechanical: power rule (d/dx(x^n) = nx^{n-1}), product rule (d/dx(fg) = f'g + fg'), quotient rule. Knowing these rules means you can differentiate any algebraic expression. They appear constantly when deriving gradients for optimization. |

|||

| Chain Rule | The chain rule handles function composition: d/dx[f(g(x))] = f'(g(x)) * g'(x). This is THE most important derivative rule for ML because backpropagation IS the chain rule. Every gradient that flows backward through a neural network uses the chain rule. Master this and you understand backprop. |

|||

| Partial Derivatives | When functions have multiple inputs, partial derivatives measure change with respect to one variable while holding others fixed: df/dx. In ML, loss depends on many parameters, and we need to know how changing each one affects the loss. Partial derivatives give us this sensitivity. |

|||

| Gradients | The gradient grad(f) = [df/dx1, df/dx2, ...] collects all partial derivatives into a vector pointing in the direction of steepest increase. In ML, we subtract the gradient to descend toward minima. The gradient is how we navigate loss landscapes. |

|||

| Optimization & Finding Extrema | Optimization finds inputs that minimize or maximize functions. Critical points occur where gradient = 0. Second derivatives tell us if it’s a min, max, or saddle point. All of ML training is optimization: finding parameters that minimize loss. | |||

| Numerical Differentiation | When we can’t derive formulas, we approximate: f'(x) ~ (f(x+h) - f(x-h))/(2h). Understanding numerical differentiation helps you debug gradient implementations and understand autodiff. It’s also how we check if our analytical gradients are correct (gradient checking). |

|||

| Integration & Area Under Curve | Integration is the inverse of differentiation and computes accumulated quantities (area under curve). In ML: computing expected values, normalizing probability distributions, understanding cumulative distributions. Numerical integration (Riemann sums, Simpson’s rule) approximates integrals computationally. | |||

| Probability Fundamentals | Probability quantifies uncertainty: P(A) is between 0 and 1, P(certain) = 1, P(impossible) = 0. Probabilities of mutually exclusive events add. In ML, we model uncertainty in predictions, quantify confidence, and make decisions under uncertainty. | |||

| Conditional Probability | P(A | B) is probability of A given that B occurred. It’s fundamental for updating beliefs with evidence. In ML: P(class | features) for classification, P(next_word | previous_words) for language models. Understanding conditioning is essential for probabilistic reasoning. |

| Bayes’ Theorem | P(A|B) = P(B|A) * P(A) / P(B) - relates forward and backward conditional probabilities. It’s how we update beliefs with evidence: prior belief P(A) becomes posterior P(A |

B) after observing B. Foundation of Bayesian ML, spam filters, medical diagnosis, and probabilistic inference. | ||

| Independence & Conditional Independence | Events are independent if P(A,B) = P(A)P(B). Conditional independence: P(A,B | C) = P(A | C)P(B | C). The “naive” in Naive Bayes assumes feature independence given class. Understanding independence simplifies many probability calculations. |

| Random Variables & Probability Distributions | Random variables map outcomes to numbers. Distributions describe the probability of each value: discrete (PMF: P(X=x)) or continuous (PDF: area under curve gives probability). In ML: model outputs as distributions, understanding data, sampling from distributions. | |||

| Uniform Distribution | Every value in a range is equally likely: P(x) = 1/(b-a) for x in [a,b]. The starting point for random number generation. Understanding uniform distribution is foundational - we transform uniform samples to generate other distributions. | |||

| Normal (Gaussian) Distribution | The bell curve: N(mu, sigma^2) with mean mu and variance sigma^2. Appears everywhere due to Central Limit Theorem (sum of many independent effects -> normal). In ML: weight initialization, regularization (L2 = Gaussian prior), Gaussian processes, maximum likelihood often assumes normality. |

|||

| Exponential & Poisson Distributions | Exponential: time between events, memoryless property. Poisson: count of events in fixed interval. Both model “random arrivals” and appear in survival analysis, queueing, count data. Understanding these connects probability to real-world phenomena. | |||

| Binomial Distribution | Number of successes in n independent trials, each with probability p. The discrete analog of normal for large n. Foundation for A/B testing, understanding conversion rates, and modeling binary outcomes. | |||

| Expectation (Expected Value) | E[X] = sum of x*P(x) - the “average” value weighted by probability. In ML: expected loss is what we minimize, expected reward in reinforcement learning, making decisions under uncertainty. Expectation is the bridge between probability and optimization. | |||

| Variance & Standard Deviation | Var(X) = E[(X - E[X])^2] measures spread around the mean. Standard deviation sigma = sqrt(Var) is in same units as X. In ML: understanding data spread, batch normalization, uncertainty quantification. Low variance = consistent, high variance = spread out. | |||

| Covariance & Correlation | Cov(X,Y) measures how two variables move together. Correlation normalizes to [-1, 1]. Positive = move together, negative = move opposite, zero = no linear relationship. Foundation of PCA, understanding feature relationships, and multivariate analysis. | |||

| Law of Large Numbers | Sample average converges to true mean as sample size increases. This is why Monte Carlo methods work: enough samples -> accurate estimates. In ML: training loss converges to expected loss, sampling-based methods become accurate with enough samples. | |||

| Central Limit Theorem | Sum/average of many independent random variables -> normal distribution, regardless of original distribution. This is why normal is everywhere and why we can use normal-based statistics on averages. Foundation of confidence intervals and hypothesis testing. | |||

| Maximum Likelihood Estimation | Find parameters that maximize probability of observed data: argmax_theta P(data|theta). The principled way to fit models to data. Many ML methods (logistic regression, neural networks) optimize log-likelihood. Understanding MLE connects probability to optimization. |

|||

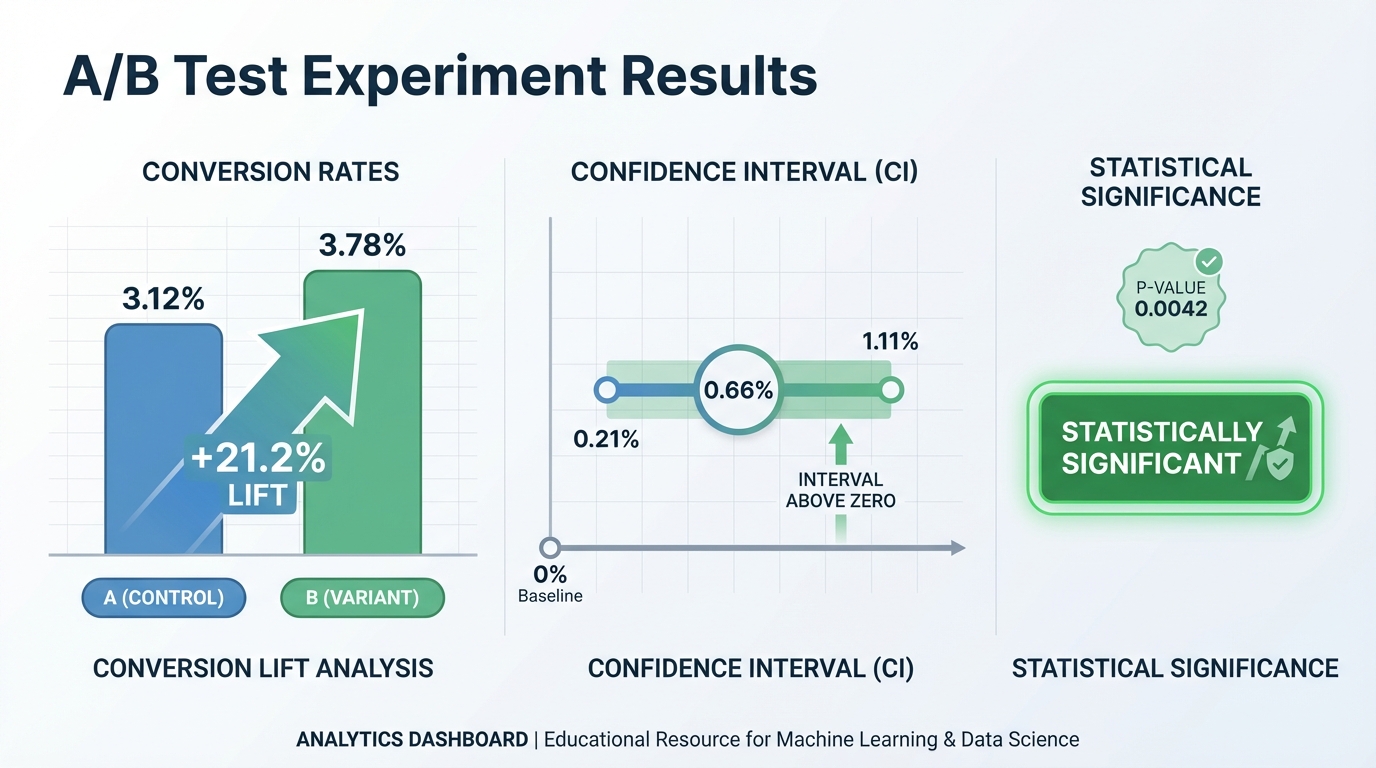

| Hypothesis Testing & p-values | Test whether observed effect is statistically significant vs random chance. p-value = probability of seeing result this extreme if null hypothesis is true. In ML: A/B testing, model comparison, determining if improvements are real. Critical for scientific validity. | |||

| Confidence Intervals | Range of plausible values for a parameter, with stated confidence (e.g., 95%). Wider = more uncertainty. In ML: uncertainty in predictions, model comparison, communicating results. Confidence intervals quantify what we don’t know. | |||

| Loss Functions | Functions that measure prediction error: MSE = mean squared error for regression, cross-entropy for classification. The loss is what we minimize during training. Understanding loss functions means understanding what the model is optimizing for. | |||

| Mean Squared Error (MSE) | MSE = (1/n) * sum((predicted - actual)^2) - penalizes large errors quadratically. Used in regression. Minimizing MSE = finding mean of conditional distribution. Understanding MSE geometrically (distance in output space) builds intuition. |

|||

| Cross-Entropy Loss | -sum(y * log(p)) - measures difference between true distribution y and predicted distribution p. Used for classification. Cross-entropy = KL divergence + constant, so minimizing cross-entropy = matching distributions. Foundation of probabilistic classification. |

|||

| Gradient Descent | Iterative optimization: theta_new = theta_old - alpha * grad(L(theta)). Move in direction opposite to gradient (downhill). Learning rate alpha controls step size. This is how neural networks learn. Variations: SGD (stochastic), momentum, Adam. Understanding GD = understanding training. |

|||

| Learning Rate | Step size in gradient descent. Too large = overshooting/divergence, too small = slow convergence. Finding good learning rates is crucial for training. Learning rate schedules (decay) help navigate loss landscapes. | |||

| Stochastic & Mini-batch Gradient Descent | Instead of computing gradient on all data, use random subsets (batches). Trades accuracy for speed, adds noise that helps escape local minima. Batch size affects training dynamics. Standard practice in modern ML. | |||

| Convexity | A function is convex if line segment between any two points lies above the curve. Convex optimization has no local minima - any local minimum is global. Linear regression loss is convex; neural network loss is not. Understanding convexity predicts optimization difficulty. | |||

| Local vs Global Minima | Local minimum: lower than nearby points. Global minimum: lowest overall. Non-convex functions have local minima that gradient descent can get stuck in. Neural network training navigates complex landscapes with many local minima (though empirically this works). | |||

| Regularization | Adding penalty to loss to prevent overfitting: L2 (sum of squared weights) encourages small weights, L1 (sum of absolute weights) encourages sparsity. Regularization trades training accuracy for generalization. Mathematically: adding prior beliefs in Bayesian view. |

Project-to-Concept Map

| Project | Concepts Applied |

|---|---|

| Projects 1-3 | Algebraic modeling, function composition, exponentials/logs, numerical parsing |

| Projects 4-7 | Vector spaces, matrix operations, eigendecomposition, SVD intuition |

| Projects 8-11 | Derivatives, chain rule, gradients, numerical integration, backprop mechanics |

| Projects 12-16 | Probability distributions, Bayes theorem, sampling, hypothesis testing, stochastic processes |

| Projects 17-20 | Loss design, optimization dynamics, regularization, model selection, full ML pipeline decisions |

| Projects 21-25 | Sequences/series convergence, matrix calculus, information-theoretic losses, numerical stability, convex optimization and KKT conditions |

| Projects 26-31 | Measure-theoretic foundations, real/functional analysis intuition, graph/spectral methods, and random matrix effects in high dimensions |

Stage-to-Project Coverage Matrix

This table explicitly maps the requested Stage 0–8 roadmap topics to at least one project in this guide.

| Stage | Topic | Covered By |

|---|---|---|

| Stage 0 | Algebra Mastery | Project 1, Project 3 |

| Stage 0 | Function Thinking | Project 2 |

| Stage 0 | Trigonometry | Project 1, Project 2 |

| Stage 0 | Basic Probability | Project 12, Project 14 |

| Stage 0 | Sequences & Series | Project 21 |

| Stage 1 | Vectors | Project 4, Project 5 |

| Stage 1 | Matrices | Project 4 |

| Stage 1 | Vector Spaces | Project 5, Project 6 |

| Stage 1 | Eigenvalues & Eigenvectors | Project 6 |

| Stage 1 | Matrix Decompositions (SVD) | Project 7 |

| Stage 2 | Single Variable Calculus | Project 8 |

| Stage 2 | Multivariable Calculus | Project 9, Project 11 |

| Stage 2 | Optimization | Project 9, Project 17, Project 18 |

| Stage 3 | Random Variables | Project 13 |

| Stage 3 | Common Distributions | Project 13 |

| Stage 3 | Statistical Inference | Project 15 |

| Stage 3 | Bayesian Thinking | Project 14 |

| Stage 4 | Matrix Calculus | Project 22 |

| Stage 5 | Information Theory | Project 23 |

| Stage 6 | Numerical Methods & Stability | Project 24 |

| Stage 7 | Convex Optimization | Project 25 |

| Stage 8 (Research) | Measure Theory | Project 26 |

| Stage 8 (Research) | Real Analysis | Project 27 |

| Stage 8 (Research) | Functional Analysis | Project 28 |

| Stage 8 (Applied) | Graph Theory | Project 29 |

| Stage 8 (Applied) | Spectral Methods | Project 30 |

| Stage 8 (Applied) | Random Matrix Theory | Project 31 |

Deep Dive Reading by Concept

This table maps every mathematical concept to specific chapters in recommended books for deeper understanding.

\1

| Concept | Book | Chapter(s) | Notes |

|---|---|---|---|

| Order of Operations (PEMDAS) | “C Programming: A Modern Approach” - K.N. King | Ch 4: Expressions | How operators bind in code |

| Order of Operations | “Math for Programmers” - Paul Orland | Ch 2 | Mathematical foundations |

| Variables & Expressions | “Math for Programmers” - Paul Orland | Ch 1-2 | Programming perspective on algebra |

| Solving Equations | “Math for Programmers” - Paul Orland | Ch 2 | From algebra to code |

| Functions (concept) | “Math for Programmers” - Paul Orland | Ch 2-3 | Functions as transformations |

| Functions & Graphs | “Math for Programmers” - Paul Orland | Ch 3 | Visualizing function behavior |

| Function Composition | “Math for Programmers” - Paul Orland | Ch 3 | Building complex from simple |

| Inverse Functions | “Math for Programmers” - Paul Orland | Ch 3 | Undoing transformations |

| Exponents & Powers | “Math for Programmers” - Paul Orland | Ch 2 | Exponential growth patterns |

| Logarithms | “Math for Programmers” - Paul Orland | Ch 2 | Log as inverse of exp |

| Logarithms (numerical) | “Computer Systems: A Programmer’s Perspective” - Bryant & O’Hallaron | Ch 2.4 | Floating point representation |

| Trigonometry Basics | “Math for Programmers” - Paul Orland | Ch 2, 4 | Sin, cos, and the unit circle |

| Complex Numbers | “Math for Programmers” - Paul Orland | Ch 9 | Beyond real numbers |

| Polynomials | “Algorithms” - Sedgewick & Wayne | Ch 4.2 | Root finding, numerical methods |

\1

| Concept | Book | Chapter(s) | Notes |

|---|---|---|---|

| Vectors Introduction | “Math for Programmers” - Paul Orland | Ch 3-4 | Vectors as arrows and lists |

| Vector Operations | “Math for Programmers” - Paul Orland | Ch 3-4 | Addition, scaling, dot product |

| Dot Product | “Math for Programmers” - Paul Orland | Ch 3 | Geometric and algebraic views |

| Vector Norms | “Math for Programmers” - Paul Orland | Ch 3 | Measuring vector length |

| Matrices Introduction | “Math for Programmers” - Paul Orland | Ch 5 | Matrices as data structures |

| Matrix Operations | “Math for Programmers” - Paul Orland | Ch 5 | Add, multiply, transpose |

| Matrix Multiplication | “Math for Programmers” - Paul Orland | Ch 5 | Row-column dot products |

| Matrix Multiplication | “Algorithms” - Sedgewick & Wayne | Ch 5.1 | Algorithmic perspective |

| Linear Transformations | “Math for Programmers” - Paul Orland | Ch 4-5 | Matrices as transformations |

| 2D/3D Transformations | “Computer Graphics from Scratch” - Gabriel Gambetta | Ch 11 | Rotation, scaling, shear |

| Gaussian Elimination | “Algorithms” - Sedgewick & Wayne | Ch 5.1 | Solving linear systems |

| Determinants | “Linear Algebra Done Right” - Sheldon Axler | Ch 4 | Volume scaling interpretation |

| Matrix Inverse | “Math for Programmers” - Paul Orland | Ch 5 | When and how matrices invert |

| Matrix Inverse | “Algorithms” - Sedgewick & Wayne | Ch 5.1 | Computational methods |

| Eigenvalues & Eigenvectors | “Linear Algebra Done Right” - Sheldon Axler | Ch 5 | The definitive treatment |

| Eigenvalues & Eigenvectors | “Math for Programmers” - Paul Orland | Ch 7 | Practical perspective |

| Power Iteration | “Algorithms” - Sedgewick & Wayne | Ch 5.6 | Finding dominant eigenvector |

| Eigendecomposition | “Linear Algebra Done Right” - Sheldon Axler | Ch 5-7 | Diagonalization theory |

| SVD (Singular Value Decomposition) | “Numerical Linear Algebra” - Trefethen & Bau | Ch 4 | Complete mathematical treatment |

| Numerical Stability | “Computer Systems: A Programmer’s Perspective” - Bryant & O’Hallaron | Ch 2.4 | Floating point pitfalls |

| Numerical Linear Algebra | “Computer Systems: A Programmer’s Perspective” - Bryant & O’Hallaron | Ch 2 | Machine representation |

\1

| Concept | Book | Chapter(s) | Notes |

|---|---|---|---|

| Limits & Continuity | “Calculus” - James Stewart | Ch 1-2 | Foundation of calculus |

| Derivatives Introduction | “Calculus” - James Stewart | Ch 2-3 | Rates of change |

| Derivatives | “Math for Programmers” - Paul Orland | Ch 8 | Programmer’s perspective |

| Power Rule | “Calculus” - James Stewart | Ch 3 | d/dx(x^n) = nx^{n-1} |

| Product Rule | “Calculus” - James Stewart | Ch 3 | d/dx(fg) = f’g + fg’ |

| Quotient Rule | “Calculus” - James Stewart | Ch 3 | d/dx(f/g) |

| Chain Rule | “Calculus” - James Stewart | Ch 3 | Composition of functions |

| Chain Rule | “Math for Programmers” - Paul Orland | Ch 8 | Connection to backpropagation |

| Transcendental Derivatives | “Calculus” - James Stewart | Ch 3 | sin, cos, exp, log derivatives |

| Partial Derivatives | “Calculus” - James Stewart | Ch 14 | Multivariable calculus |

| Partial Derivatives | “Math for Programmers” - Paul Orland | Ch 12 | Multiple inputs |

| Gradients | “Math for Programmers” - Paul Orland | Ch 12 | Vector of partials |

| Gradients | “Hands-On Machine Learning” - Aurelien Geron | Ch 4 | ML context |

| Optimization (finding extrema) | “Calculus” - James Stewart | Ch 4, 14 | Min/max problems |

| Definite Integrals | “Calculus” - James Stewart | Ch 5 | Area under curve |

| Numerical Integration | “Numerical Recipes” - Press et al. | Ch 4 | Computational methods |

| Riemann Sums | “Math for Programmers” - Paul Orland | Ch 8 | Approximating integrals |

| Taylor Series | “Calculus” - James Stewart | Ch 11 | Function approximation |

\1

| Concept | Book | Chapter(s) | Notes |

|---|---|---|---|

| Probability Fundamentals | “Think Stats” - Allen Downey | Ch 1-2 | Computational approach |

| Probability | “All of Statistics” - Larry Wasserman | Ch 1-2 | Rigorous treatment |

| Conditional Probability | “Think Bayes” - Allen Downey | Ch 1-2 | Bayesian perspective |

| Bayes’ Theorem | “Think Bayes” - Allen Downey | Ch 1-2 | Foundation of Bayesian inference |

| Bayes’ Theorem | “Data Science for Business” - Provost & Fawcett | Ch 5 | Business applications |

| Independence | “All of Statistics” - Larry Wasserman | Ch 2 | Statistical independence |

| Random Variables | “Think Stats” - Allen Downey | Ch 2-3 | Distributions introduction |

| Uniform Distribution | “Math for Programmers” - Paul Orland | Ch 15 | Equal probability |

| Normal Distribution | “All of Statistics” - Larry Wasserman | Ch 3 | The bell curve |

| Normal Distribution | “Think Stats” - Allen Downey | Ch 3-4 | Practical perspective |

| Exponential Distribution | “All of Statistics” - Larry Wasserman | Ch 3 | Time between events |

| Poisson Distribution | “All of Statistics” - Larry Wasserman | Ch 3 | Count data |

| Binomial Distribution | “Think Stats” - Allen Downey | Ch 3 | Binary outcomes |

| Expected Value | “All of Statistics” - Larry Wasserman | Ch 3 | Weighted average |

| Expected Value | “Data Science for Business” - Provost & Fawcett | Ch 6 | Business decisions |

| Variance & Standard Deviation | “Think Stats” - Allen Downey | Ch 4 | Measuring spread |

| Covariance & Correlation | “Data Science for Business” - Provost & Fawcett | Ch 5 | Relationships between variables |

| Covariance | “All of Statistics” - Larry Wasserman | Ch 3 | Mathematical definition |

| Law of Large Numbers | “All of Statistics” - Larry Wasserman | Ch 5 | Convergence of averages |

| Central Limit Theorem | “Data Science for Business” - Provost & Fawcett | Ch 6 | Why normal is everywhere |

| Central Limit Theorem | “All of Statistics” - Larry Wasserman | Ch 5 | Formal statement and proof |

| Maximum Likelihood | “All of Statistics” - Larry Wasserman | Ch 9 | Parameter estimation |

| Hypothesis Testing | “Think Stats” - Allen Downey | Ch 7 | Testing significance |

| Hypothesis Testing | “All of Statistics” - Larry Wasserman | Ch 10 | Rigorous framework |

| p-values | “Think Stats” - Allen Downey | Ch 7 | Interpreting significance |

| Confidence Intervals | “Data Science for Business” - Provost & Fawcett | Ch 6 | Uncertainty quantification |

| Confidence Intervals | “All of Statistics” - Larry Wasserman | Ch 6 | Construction and interpretation |

| Sample Size & Power | “Statistics Done Wrong” - Alex Reinhart | Ch 4 | Planning experiments |

| Monte Carlo Methods | “Grokking Algorithms” - Aditya Bhargava | Ch 10 | Random sampling approaches |

\1

| Concept | Book | Chapter(s) | Notes |

|---|---|---|---|

| Loss Functions Overview | “Hands-On Machine Learning” - Aurelien Geron | Ch 4 | What we optimize |

| Mean Squared Error | “Hands-On Machine Learning” - Aurelien Geron | Ch 4 | Regression loss |

| Cross-Entropy Loss | “Deep Learning” - Goodfellow et al. | Ch 3, 6 | Classification loss |

| Cross-Entropy | “Hands-On Machine Learning” - Aurelien Geron | Ch 4 | Practical perspective |

| Gradient Descent | “Hands-On Machine Learning” - Aurelien Geron | Ch 4 | The optimization workhorse |

| Gradient Descent | “Deep Learning” - Goodfellow et al. | Ch 4, 8 | Theory and practice |

| Learning Rate | “Neural Networks and Deep Learning” - Michael Nielsen | Ch 3 | Tuning optimization |

| Learning Rate | “Hands-On Machine Learning” - Aurelien Geron | Ch 4 | Practical guidance |

| SGD & Mini-batch | “Deep Learning” - Goodfellow et al. | Ch 8 | Stochastic optimization |

| Mini-batch Gradient Descent | “Hands-On Machine Learning” - Aurelien Geron | Ch 4 | Batch size effects |

| Momentum | “Deep Learning” - Goodfellow et al. | Ch 8 | Accelerating convergence |

| Adam Optimizer | “Deep Learning” - Goodfellow et al. | Ch 8 | Adaptive learning rates |

| Convexity | “Deep Learning” - Goodfellow et al. | Ch 4 | Optimization landscape |

| Local vs Global Minima | “Deep Learning” - Goodfellow et al. | Ch 4 | Non-convex optimization |

| Local Minima | “Hands-On Machine Learning” - Aurelien Geron | Ch 11 | Practical implications |

| Regularization (L1, L2) | “Hands-On Machine Learning” - Aurelien Geron | Ch 4 | Preventing overfitting |

| Regularization | “Deep Learning” - Goodfellow et al. | Ch 7 | Theory of regularization |

| Linear Regression | “Hands-On Machine Learning” - Aurelien Geron | Ch 4 | Foundation ML algorithm |

| Normal Equation | “Machine Learning” (Coursera) - Andrew Ng | Week 2 | Closed-form solution |

| Logistic Regression | “Hands-On Machine Learning” - Aurelien Geron | Ch 4 | Classification with probabilities |

| Sigmoid Function | “Neural Networks and Deep Learning” - Michael Nielsen | Ch 1 | Squashing to [0,1] |

| Softmax Function | “Deep Learning” - Goodfellow et al. | Ch 6 | Multi-class probabilities |

| Backpropagation | “Neural Networks and Deep Learning” - Michael Nielsen | Ch 2 | How gradients flow |

| Backpropagation | “Deep Learning” - Goodfellow et al. | Ch 6 | Mathematical derivation |

| Computational Graphs | “Deep Learning” - Goodfellow et al. | Ch 6 | Representing computation |

| PCA (Principal Component Analysis) | “Hands-On Machine Learning” - Aurelien Geron | Ch 8 | Dimensionality reduction |

| PCA Mathematics | “Math for Programmers” - Paul Orland | Ch 10 | Eigenvalue perspective |

| Naive Bayes | “Hands-On Machine Learning” - Aurelien Geron | Ch 3 | Probabilistic classification |

| Text Classification | “Speech and Language Processing” - Jurafsky & Martin | Ch 4 | NLP fundamentals |

| N-gram Models | “Speech and Language Processing” - Jurafsky & Martin | Ch 3 | Language modeling |

| Markov Chains | “All of Statistics” - Larry Wasserman | Ch 21 | Sequential probability |

| Neural Network Architecture | “Neural Networks and Deep Learning” - Michael Nielsen | Ch 1-2 | Building blocks |

| Weight Initialization | “Hands-On Machine Learning” - Aurelien Geron | Ch 11 | Starting right |

| Activation Functions | “Deep Learning” - Goodfellow et al. | Ch 6 | Non-linearity |

| Cross-Validation | “Hands-On Machine Learning” - Aurelien Geron | Ch 2 | Proper evaluation |

| Bias-Variance Tradeoff | “Machine Learning” (Coursera) - Andrew Ng | Week 6 | Underfitting vs overfitting |

| Hyperparameter Tuning | “Deep Learning” - Goodfellow et al. | Ch 11 | Optimization over hyperparameters |

| ML Pipeline Design | “Designing Machine Learning Systems” - Chip Huyen | Ch 2 | End-to-end systems |

| Feature Engineering | “Data Science for Business” - Provost & Fawcett | Ch 4 | Creating useful inputs |

| Feature Scaling | “Data Science for Business” - Provost & Fawcett | Ch 4 | Normalization for optimization |

\1

| Concept | Book | Chapter(s) | Notes |

|---|---|---|---|

| Floating Point Numbers | “Computer Systems: A Programmer’s Perspective” - Bryant & O’Hallaron | Ch 2.4 | How computers represent reals |

| Numerical Precision | “Computer Systems: A Programmer’s Perspective” - Bryant & O’Hallaron | Ch 2.4 | Avoiding numerical errors |

| Expression Parsing | “Compilers: Principles and Practice” - Parag H. Dave | Ch 4 | Precedence and parsing |

| Symbolic Computation | “SICP” - Abelson & Sussman | Section 2.3.2 | Manipulating expressions |

| Coordinate Systems | “Computer Graphics from Scratch” - Gabriel Gambetta | Ch 1 | Mapping math to pixels |

| Homogeneous Coordinates | “Computer Graphics: Principles and Practice” - Hughes et al. | Ch 7 | Translations as matrices |

| Algorithm Analysis | “Algorithms” - Sedgewick & Wayne | Ch 1-2 | Complexity and efficiency |

| Big-O Notation | “Grokking Algorithms” - Aditya Bhargava | Ch 1 | Algorithmic complexity |

| Binary Search | “Grokking Algorithms” - Aditya Bhargava | Ch 1 | Foundation algorithm |

| Root Finding | “Algorithms” - Sedgewick & Wayne | Ch 4.2 | Newton-Raphson and bisection |

Quick Start: Your First 48 Hours

Day 1:

- Read the sections on functions/logs and linear algebra in the Theory Primer.

- Start Project 1 and finish tokenization + precedence handling.

- Run 10 manual edge-case tests and log failures.

Day 2:

- Finish Project 1 Definition of Done.

- Start Project 2 and produce at least one plotted function with marked roots.

- Write a one-page reflection: where symbolic math diverged from numerical behavior.

Recommended Learning Paths

Path 1: The Career Switcher (Math Rusty)

- Projects 1 -> 2 -> 4 -> 8 -> 12 -> 17 -> 20

Path 2: The Practitioner (Can Train Models, Wants Depth)

- Projects 4 -> 6 -> 7 -> 11 -> 14 -> 18 -> 19 -> 20

Path 3: The Research-Oriented Builder

- Projects 3 -> 6 -> 7 -> 9 -> 11 -> 13 -> 16 -> 19 -> 20

Path 4: The Advanced Math Extension Track

- Projects 21 -> 22 -> 23 -> 24 -> 25 -> 26 -> 27 -> 28 -> 29 -> 30 -> 31

Success Metrics

- You can derive and explain gradients used in your own implementation without framework autograd.

- You can diagnose whether errors are due to model class, data quality, numerical instability, or metric mismatch.

- You can design and defend an evaluation protocol (validation/test split, uncertainty, significance) for a new task.

- You can reproduce project outcomes from a clean environment using only saved configs and fixed seeds.

- You can explain where finite-dimensional ML intuition breaks and how advanced math (measure/functional/random matrix theory) restores rigor.

Project Overview Table

| # | Project | Concept Cluster |

|---|---|---|

| 1 | Scientific Calculator from Scratch | Foundations |

| 2 | Function Grapher and Analyzer | Foundations |

| 3 | Polynomial Root Finder | Foundations |

| 4 | Matrix Calculator with Visualizations | Linear Algebra |

| 5 | 2D/3D Transformation Visualizer | Linear Algebra |

| 6 | Eigenvalue/Eigenvector Explorer | Linear Algebra |

| 7 | PCA Image Compressor | Linear Algebra |

| 8 | Symbolic Derivative Calculator | Calculus |

| 9 | Gradient Descent Visualizer | Calculus |

| 10 | Numerical Integration Visualizer | Calculus |

| 11 | Backpropagation from Scratch (Single Neuron) | Calculus |

| 12 | Monte Carlo Pi Estimator | Probability |

| 13 | Distribution Sampler and Visualizer | Probability |

| 14 | Naive Bayes Spam Filter | Probability |

| 15 | A/B Testing Framework | Probability |

| 16 | Markov Chain Text Generator | Probability |

| 17 | Linear Regression from Scratch | Optimization |

| 18 | Logistic Regression Classifier | Optimization |

| 19 | Neural Network from First Principles | Optimization |

| 20 | Complete ML Pipeline from Scratch | Optimization |

| 21 | Sequence and Series Convergence Lab | Stage 0 Completion |

| 22 | Matrix Calculus Backpropagation Workbench | Stage 4 |

| 23 | Information Theory Loss Engineering Lab | Stage 5 |

| 24 | Numerical Stability and Conditioning Stress Lab | Stage 6 |

| 25 | Convex Optimization and KKT Constraint Solver | Stage 7 |

| 26 | Measure-Theoretic Probability Sandbox | Stage 8 (Research) |

| 27 | Real Analysis Generalization Bounds Lab | Stage 8 (Research) |

| 28 | Functional Analysis and RKHS Kernel Lab | Stage 8 (Research) |

| 29 | Graph Theory Message Passing Playground | Stage 8 (Applied) |

| 30 | Spectral Methods and Graph Laplacian Clusterer | Stage 8 (Applied) |

| 31 | Random Matrix Theory in High-Dimensional ML | Stage 8 (Applied) |

Project List

The following projects guide you from high-school-level symbolic comfort to production-minded ML mathematical reasoning.

Part 1: High School Math Foundations (Review)

These projects help you rebuild your intuition for fundamental mathematical concepts.

Project 1: Scientific Calculator from Scratch

- File: P01-scientific-calculator-from-scratch.md

- Main Programming Language: Python

- Alternative Programming Languages: C, JavaScript, Rust

- Coolness Level: Level 2: Practical but Forgettable

- Business Potential: 1. The “Resume Gold” (Educational/Personal Brand)

- Difficulty: Level 1: Beginner (The Tinkerer)

- Knowledge Area: Expression Parsing / Numerical Computing

- Software or Tool: Calculator Engine

- Main Book: “C Programming: A Modern Approach” by K. N. King (Chapter 7: Basic Types)

What you’ll build: A command-line calculator that parses mathematical expressions like 3 + 4 * (2 - 1) ^ 2 and evaluates them correctly, handling operator precedence, parentheses, and mathematical functions (sin, cos, log, exp, sqrt).

Why it teaches foundational math: You cannot build a calculator without understanding the order of operations (PEMDAS), how functions transform inputs to outputs, and the relationship between exponents and logarithms. Implementing log(exp(x)) = x forces you to understand these as inverse operations.

Core challenges you’ll face:

- Expression parsing with precedence → maps to order of operations (PEMDAS)

- Implementing exponentiation → maps to understanding powers and roots

- Implementing log/exp functions → maps to logarithmic and exponential relationships

- Handling trigonometric functions → maps to unit circle and angle concepts

- Error handling (division by zero, log of negative) → maps to domain restrictions

Key Concepts:

- Order of Operations: “C Programming: A Modern Approach” Chapter 4 - K. N. King

- Operator Precedence Parsing: “Compilers: Principles and Practice” Chapter 4 - Parag H. Dave

- Mathematical Functions: “Math for Programmers” Chapter 2 - Paul Orland

- Floating Point Representation: “Computer Systems: A Programmer’s Perspective” Chapter 2 - Bryant & O’Hallaron

Difficulty: Beginner Time estimate: Weekend Prerequisites: Basic programming knowledge

Real World Outcome

$ ./calculator

> 3 + 4 * 2

11

> (3 + 4) * 2

14

> sqrt(16) + log(exp(5))

9.0

> sin(3.14159/2)

0.9999999999

> 2^10

1024

Implementation Hints:

The key insight is that mathematical expressions have a grammar. The Shunting Yard algorithm (by Dijkstra) converts infix notation to postfix (Reverse Polish Notation), which is trivial to evaluate with a stack. For functions like sin, cos, treat them as unary operators with highest precedence.

For the math itself:

- Exponentiation:

a^bmeans “multiply a by itself b times” - Logarithm:

log_b(x) = ymeans “b raised to y equals x” (inverse of exponentiation) - Trigonometry: Implement using Taylor series:

sin(x) = x - x³/3! + x⁵/5! - ...

Learning milestones:

- Basic arithmetic works with correct precedence → You understand PEMDAS deeply

- Parentheses and nested expressions work → You understand expression trees

- Transcendental functions (sin, log, exp) work → You understand these fundamental relationships

The Core Question You Are Answering

“What does it really mean for a computer to ‘understand’ mathematics?”

When you type 3 + 4 * 2 into any calculator, it returns 11, not 14. But how does the machine know that multiplication comes before addition? How does it know that sin(3.14159/2) should return approximately 1? This project forces you to confront a deep question: mathematical notation is a human invention with implicit rules that we’ve internalized since childhood. A computer has no such intuition—you must teach it every rule explicitly. By building a calculator from scratch, you discover that mathematics is not about numbers but about structure—and that structure can be represented as a tree.

Concepts You Must Understand First

Stop and research these before coding:

- Operator Precedence (PEMDAS/BODMAS)

- Why does multiplication happen before addition?

- What happens when operators have equal precedence (like

+and-)? - How do parentheses override the natural order?

- Book Reference: “C Programming: A Modern Approach” Chapter 4 - K. N. King

- Expression Trees (Abstract Syntax Trees)

- How can an equation be represented as a tree structure?

- What does “parsing” mean and why is it necessary?

- How do you traverse a tree to evaluate an expression?

- Book Reference: “Compilers: Principles, Techniques, and Tools” Chapter 2 - Aho et al.

- The Shunting Yard Algorithm

- How does Dijkstra’s algorithm convert infix to postfix notation?

- What is a stack and why is it essential here?

- How do you handle both left-associative and right-associative operators?

- Book Reference: “Algorithms” Chapter 4.3 - Sedgewick & Wayne

- Transcendental Functions and Their Domains

- Why can’t you take the logarithm of a negative number (in the reals)?

- What is the unit circle and how does it define sine and cosine?

- Why is

log(exp(x)) = xbutexp(log(x)) = xonly forx > 0? - Book Reference: “Calculus: Early Transcendentals” Chapter 1 - James Stewart

- Floating-Point Representation

- Why does

0.1 + 0.2not equal0.3exactly? - What are precision limits and how do they affect calculations?

- When should you worry about floating-point errors?

- Book Reference: “Computer Systems: A Programmer’s Perspective” Chapter 2.4 - Bryant & O’Hallaron

- Why does

- Taylor Series Expansions

- How can you compute

sin(x)from just addition and multiplication? - What is convergence and how many terms do you need?

- Why do Taylor series work for some functions but not others?

- Book Reference: “Calculus” Chapter 11 - James Stewart

- How can you compute

Questions to Guide Your Design

Before implementing, think through these:

- How will you represent mathematical expressions internally? As strings? As trees? As a list of tokens?

- What happens when the user enters invalid input like

3 + + 5orsin()? - How will you distinguish between the subtraction operator

-and a negative number? - Should your calculator support variables like

x = 5thenx + 3? - How will you handle functions that take multiple arguments, like

max(3, 5)? - What precision should your calculator use? How will you display results?

Thinking Exercise

Hand-trace the Shunting Yard algorithm before coding:

Take the expression: 3 + 4 * 2 ^ 2 - 1

Using a piece of paper, maintain two data structures:

- Output queue (will hold the result in postfix notation)

- Operator stack (temporary holding for operators)

Rules to apply:

- Numbers go directly to output queue

- Operators go to stack, BUT first pop higher-precedence operators from stack to output

^(exponentiation) is right-associative; others are left-associative- At the end, pop all remaining operators to output

After hand-tracing, your output queue should contain: 3 4 2 2 ^ * + 1 -

Now evaluate this postfix expression using a single stack:

- Numbers push to stack

- Operators pop two numbers, compute, push result

Verify you get 18 (since 2^2 = 4, then 4*4 = 16, then 3+16 = 19, then 19-1 = 18).

Did you catch all the steps? This is exactly why you need to implement and test carefully.

The Interview Questions They Will Ask

- “Explain how you would parse and evaluate the expression

2 + 3 * 4without usingeval()or a library.”- Expected: Describe tokenization, operator precedence, and either recursive descent parsing or Shunting Yard algorithm.

- “What data structure would you use to represent a mathematical expression, and why?”

- Expected: Expression tree (AST), because it naturally represents the hierarchical structure and makes evaluation recursive and clean.

- “How would you add support for user-defined functions like

f(x) = x^2?”- Expected: Store function definitions in a symbol table, substitute values when function is called.

- “What’s the difference between a syntax error and a semantic error in expression parsing?”

- Expected: Syntax error = malformed expression (

3 + + 5); semantic error = valid syntax but meaningless (sqrt(-1)in reals).

- Expected: Syntax error = malformed expression (

- “How would you implement implicit multiplication, like

2(3+4)instead of2*(3+4)?”- Expected: In tokenizer, insert implicit

*when a number is followed by(or a function name.

- Expected: In tokenizer, insert implicit

- “What are the trade-offs between recursive descent parsing and the Shunting Yard algorithm?”

- Expected: Recursive descent is more flexible and handles complex grammars; Shunting Yard is simpler for arithmetic expressions and easily handles precedence.

- “How would you test a calculator to ensure it handles edge cases correctly?”

- Expected: Test negative numbers, division by zero, very large/small numbers, deeply nested parentheses, and operator associativity.

Hints in Layers

Hint 1: Start with a simple grammar

Begin by only supporting +, -, *, / on integers with no parentheses. Get this working perfectly before adding complexity. Tokenize first (split "3+4" into ["3", "+", "4"]), then parse.

Hint 2: Use two stacks for simple evaluation For basic expressions, you can use the “two-stack algorithm”: one stack for numbers, one for operators. When you see a higher-precedence operator, push it. When you see a lower-precedence operator, pop and evaluate first.

Hint 3: For parentheses, use recursion or markers