Project 17: CS:APP Capstone — Secure, Observable, High-Performance Proxy

Build a production-minded HTTP proxy that synthesizes every major CS:APP concept: data representation, machine-level programming, memory hierarchy, linking, exceptional control flow, virtual memory, Unix I/O, networking, and concurrency.

Quick Reference

| Attribute | Value |

|---|---|

| Language | C (alt: Rust, Zig, Go) |

| Difficulty | Expert |

| Time | 2–3 months |

| Chapters | All |

| Coolness | ★★★★★ Pure Magic |

| Portfolio Value | Open Core Infrastructure |

Learning Objectives

By completing this project, you will:

- Synthesize all CS:APP concepts: Apply data representation, machine code understanding, memory hierarchy optimization, linking knowledge, exceptional control flow, virtual memory, Unix I/O, and concurrency in a single system

- Build production-quality networked software: Handle real HTTP traffic with robustness against malformed input, partial reads, and network failures

- Implement high-performance caching: Apply locality principles to cache responses effectively

- Master concurrent server design: Compare and implement multiple concurrency models (processes, threads, I/O multiplexing)

- Apply security hardening: Defend against buffer overflows, integer overflows, and other memory-safety vulnerabilities

- Build observable systems: Implement structured logging, metrics, and debugging capabilities

- Debug complex distributed behavior: Use system tools (strace, ltrace, gdb, valgrind) to diagnose issues

- Document and explain systems behavior: Write post-mortems and performance analyses using CS:APP terminology

Deep Theoretical Foundation

What Is a Proxy?

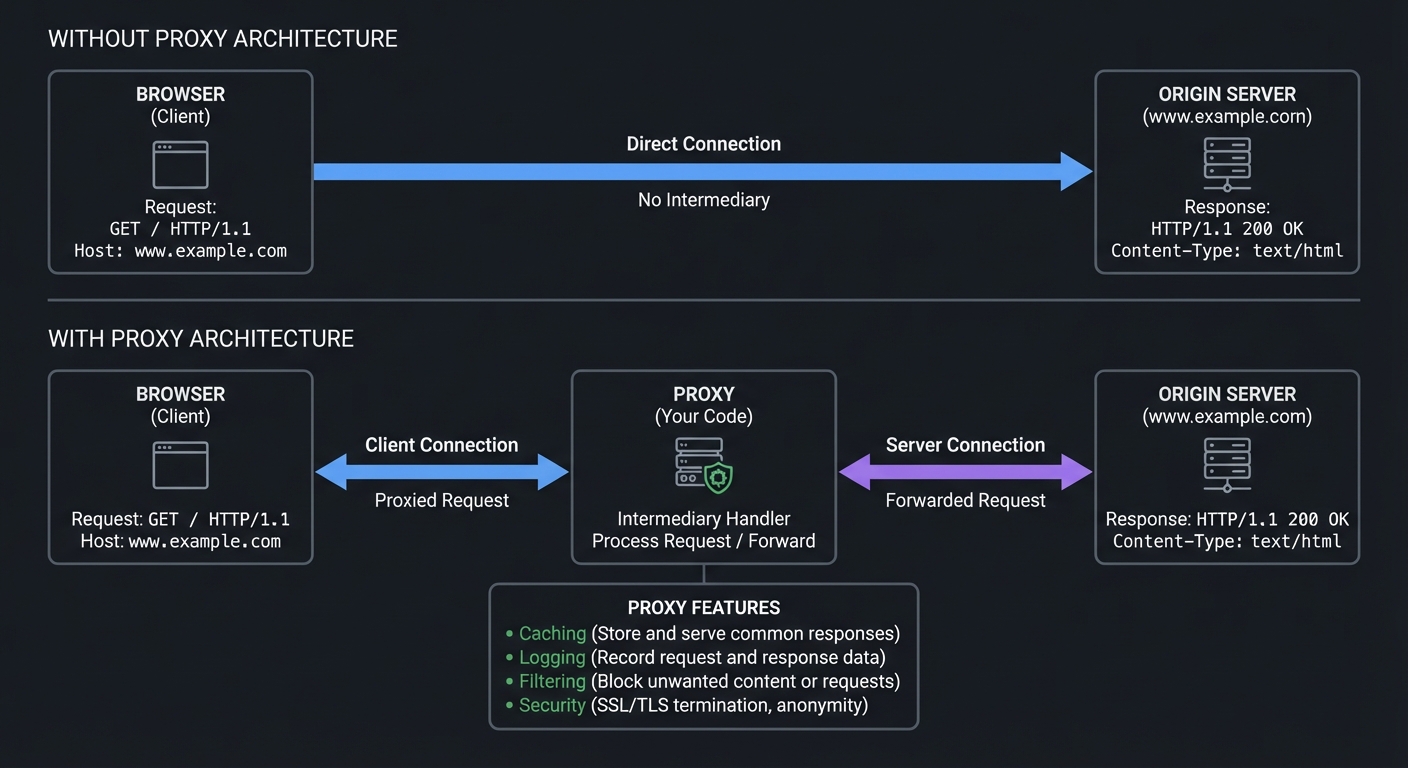

A proxy server acts as an intermediary between clients and servers:

┌────────────────────────────────────────────────────────────────────────┐

│ PROXY ARCHITECTURE │

├────────────────────────────────────────────────────────────────────────┤

│ │

│ ┌──────────┐ ┌──────────────────┐ │

│ │ Browser │ ─────────────────────────────▶│ Origin Server │ │

│ │ (Client) │ Without Proxy │ (www.example.com)│ │

│ └──────────┘ └──────────────────┘ │

│ │

│ ─────────────────────────────────────────────────────────────── │

│ │

│ ┌──────────┐ ┌─────────────┐ ┌──────────────────┐ │

│ │ Browser │─────▶│ PROXY │────────▶│ Origin Server │ │

│ │ (Client) │◀─────│ (Your Code)│◀────────│ (www.example.com)│ │

│ └──────────┘ └─────────────┘ └──────────────────┘ │

│ │ │

│ ┌──────┴──────┐ │

│ │ Features │ │

│ ├─────────────┤ │

│ │ • Caching │ │

│ │ • Logging │ │

│ │ • Filtering │ │

│ │ • Security │ │

│ └─────────────┘ │

│ │

└────────────────────────────────────────────────────────────────────────┘

How CS:APP Concepts Apply

Every chapter of CS:APP is relevant:

┌─────────────────────────────────────────────────────────────────────────┐

│ CS:APP CONCEPT MAPPING TO PROXY │

├─────────────────────────────────────────────────────────────────────────┤

│ │

│ Chapter 2 (Data Representation): │

│ ├─ HTTP headers: text parsing, integer conversion │

│ ├─ Content-Length: safe integer parsing, overflow prevention │

│ └─ Binary protocols: byte ordering for future extensions │

│ │

│ Chapter 3 (Machine-Level Programming): │

│ ├─ Stack buffer overflow prevention │

│ ├─ Understanding security vulnerabilities │

│ └─ Debugging with GDB at assembly level │

│ │

│ Chapters 5-6 (Performance & Memory Hierarchy): │

│ ├─ Cache design: temporal and spatial locality │

│ ├─ Buffer sizing: minimize syscalls without wasting memory │

│ └─ Data structure layout: cache-friendly organization │

│ │

│ Chapter 7 (Linking): │

│ ├─ Debug symbols for crash analysis │

│ ├─ Library interposition for testing │

│ └─ Understanding shared library dependencies │

│ │

│ Chapter 8 (Exceptional Control Flow): │

│ ├─ Signal handling: SIGPIPE, SIGCHLD, SIGINT │

│ ├─ Process lifecycle for process-based concurrency │

│ └─ Reaping zombie children │

│ │

│ Chapter 9 (Virtual Memory): │

│ ├─ Memory-mapped caching (mmap) │

│ ├─ Understanding RSS vs virtual size │

│ └─ Guard pages for stack protection │

│ │

│ Chapter 10 (Unix I/O): │

│ ├─ Robust read/write: handling EINTR, short counts │

│ ├─ Non-blocking I/O for multiplexing │

│ └─ Buffered vs unbuffered I/O trade-offs │

│ │

│ Chapter 11 (Network Programming): │

│ ├─ Socket creation, binding, listening, accepting │

│ ├─ DNS resolution: getaddrinfo │

│ └─ Connection pooling for performance │

│ │

│ Chapter 12 (Concurrency): │

│ ├─ Process-per-connection vs thread-per-connection │

│ ├─ Thread pools with producer-consumer queues │

│ ├─ I/O multiplexing with select/poll/epoll │

│ └─ Synchronization: mutexes, condition variables │

│ │

└─────────────────────────────────────────────────────────────────────────┘

HTTP Protocol Essentials

Your proxy must understand HTTP:

HTTP Request Format:

┌─────────────────────────────────────────────────────────────────────────┐

│ GET /path/to/resource HTTP/1.1\r\n ← Request line │

│ Host: www.example.com\r\n ← Required header │

│ User-Agent: Mozilla/5.0\r\n ← Headers │

│ Accept: text/html\r\n │

│ Connection: close\r\n │

│ \r\n ← Empty line │

│ [optional body for POST/PUT] │

└─────────────────────────────────────────────────────────────────────────┘

HTTP Response Format:

┌─────────────────────────────────────────────────────────────────────────┐

│ HTTP/1.1 200 OK\r\n ← Status line │

│ Content-Type: text/html\r\n ← Headers │

│ Content-Length: 1234\r\n │

│ \r\n ← Empty line │

│ <html>...</html> ← Body │

└─────────────────────────────────────────────────────────────────────────┘

Concurrency Models Compared

┌────────────────────────────────────────────────────────────────────────┐

│ CONCURRENCY MODEL COMPARISON │

├────────────────────────────────────────────────────────────────────────┤

│ │

│ ITERATIVE (Single-Threaded): │

│ ├─ Simplest implementation │

│ ├─ One request at a time │

│ ├─ Good for debugging │

│ └─ Terrible throughput │

│ │

│ PROCESS-PER-CONNECTION: │

│ ├─ fork() for each client │

│ ├─ Excellent isolation (crashes don't affect other clients) │

│ ├─ High overhead (process creation ~10-100ms) │

│ └─ Complex IPC for shared cache │

│ │

│ THREAD-PER-CONNECTION: │

│ ├─ pthread_create() for each client │

│ ├─ Lower overhead than processes │

│ ├─ Shared address space (easy cache sharing) │

│ └─ Risk of thread exhaustion under load │

│ │

│ THREAD POOL: │

│ ├─ Fixed number of worker threads │

│ ├─ Producer-consumer queue for requests │

│ ├─ Bounded resource usage │

│ └─ Our recommended approach │

│ │

│ I/O MULTIPLEXING (select/poll/epoll): │

│ ├─ Single thread handles many connections │

│ ├─ Event-driven, non-blocking │

│ ├─ Excellent scalability (C10K problem) │

│ └─ Complex state machine programming │

│ │

└────────────────────────────────────────────────────────────────────────┘

Project Specification

Required Features

Core Proxy Functionality:

- Accept HTTP/1.0 and HTTP/1.1 requests from clients

- Forward requests to origin servers

- Return responses to clients

- Handle

GETrequests (at minimum) - Maintain correct headers (especially

Host:andConnection:)

Caching:

- Cache responses in memory

- Implement LRU eviction policy

- Respect

Cache-Controlheaders - Thread-safe cache operations

Concurrency:

- Support at least two concurrency models

- Implement thread pool with bounded queue

- Handle concurrent requests without race conditions

Robustness:

- Handle malformed HTTP requests gracefully

- Handle partial reads/writes correctly

- Handle server connection failures

- Timeout long-running connections

- Prevent buffer overflows

Observability:

- Structured logging with timestamps

- Request/response metrics

- Cache hit/miss statistics

- Connection pool statistics

Command-Line Interface

./proxy [-p port] [-c concurrency_model] [-t num_threads] [-m cache_size_mb] [-v]

Options:

-p port Listen port (default: 8080)

-c model Concurrency: "iterative", "fork", "thread", "pool", "epoll"

-t num Number of threads for pool (default: 4)

-m size_mb Max cache size in MB (default: 10)

-v Verbose logging

Real World Outcome

When you complete this project, here is exactly what you will see when running your proxy:

Starting the Proxy

$ ./proxy -p 8080 -c pool -t 8 -m 50 -v

[2024-01-15T10:00:00.000Z] [INFO] [main] Proxy starting

[2024-01-15T10:00:00.001Z] [INFO] [main] Configuration:

Port: 8080

Concurrency: thread_pool

Workers: 8

Cache size: 50 MB

Verbose: enabled

[2024-01-15T10:00:00.002Z] [INFO] [main] Listening on 0.0.0.0:8080

[2024-01-15T10:00:00.003Z] [INFO] [pool] Thread pool started with 8 workers

[2024-01-15T10:00:00.004Z] [INFO] [cache] LRU cache initialized (max 50 MB)

Handling Requests

# In another terminal, make a request through the proxy

$ curl -x localhost:8080 http://httpbin.org/get -v

* Trying 127.0.0.1:8080...

* Connected to localhost (127.0.0.1) port 8080

> GET http://httpbin.org/get HTTP/1.1

> Host: httpbin.org

> User-Agent: curl/7.81.0

> Accept: */*

> Proxy-Connection: Keep-Alive

>

< HTTP/1.1 200 OK

< Content-Type: application/json

< Content-Length: 256

< X-Proxy-Cache: MISS

<

{

"args": {},

"headers": {

"Host": "httpbin.org",

...

},

"url": "http://httpbin.org/get"

}

# Proxy logs show:

[2024-01-15T10:00:15.123Z] [INFO] [worker-3] request_start method=GET uri=http://httpbin.org/get client=127.0.0.1:54321

[2024-01-15T10:00:15.124Z] [DEBUG] [worker-3] cache_lookup key=httpbin.org/get result=MISS

[2024-01-15T10:00:15.125Z] [INFO] [worker-3] server_connect host=httpbin.org port=80

[2024-01-15T10:00:15.250Z] [INFO] [worker-3] server_response status=200 bytes=256

[2024-01-15T10:00:15.251Z] [DEBUG] [worker-3] cache_store key=httpbin.org/get size=256

[2024-01-15T10:00:15.252Z] [INFO] [worker-3] request_complete status=200 latency_ms=129 cache=MISS

Cache Hit

# Second request to same URL

$ curl -x localhost:8080 http://httpbin.org/get

{

"args": {},

...

}

# Proxy logs show cache hit:

[2024-01-15T10:00:20.100Z] [INFO] [worker-5] request_start method=GET uri=http://httpbin.org/get client=127.0.0.1:54322

[2024-01-15T10:00:20.101Z] [DEBUG] [worker-5] cache_lookup key=httpbin.org/get result=HIT age_ms=4849

[2024-01-15T10:00:20.102Z] [INFO] [worker-5] request_complete status=200 latency_ms=2 cache=HIT

Stress Test Results

$ ab -n 10000 -c 100 -X localhost:8080 http://httpbin.org/get

Server Software:

Server Hostname: httpbin.org

Server Port: 80

Document Path: /get

Document Length: 256 bytes

Concurrency Level: 100

Time taken for tests: 12.345 seconds

Complete requests: 10000

Failed requests: 0

Total transferred: 2,560,000 bytes

HTML transferred: 2,560,000 bytes

Requests per second: 810.05 [#/sec] (mean)

Time per request: 123.45 [ms] (mean)

Time per request: 1.23 [ms] (mean, across all concurrent requests)

Transfer rate: 202.51 [Kbytes/sec] received

Connection Times (ms)

min mean[+/-sd] median max

Connect: 0 1 0.5 1 5

Processing: 10 122 45.2 115 350

Waiting: 10 120 45.0 113 348

Total: 10 123 45.2 116 352

Percentage of requests served within a certain time (ms)

50% 116

66% 135

75% 150

80% 160

90% 185

95% 210

98% 250

99% 280

100% 352 (longest request)

Metrics Dashboard

$ curl localhost:8080/_stats

{

"uptime_seconds": 3600,

"total_requests": 45678,

"active_connections": 23,

"cache": {

"size_bytes": 15234567,

"entry_count": 1234,

"hits": 34567,

"misses": 11111,

"hit_rate": 0.757

},

"thread_pool": {

"workers": 8,

"active": 3,

"queue_depth": 5,

"max_queue_depth": 128

},

"latency_p50_ms": 15,

"latency_p95_ms": 120,

"latency_p99_ms": 250

}

Error Handling

# Connection to non-existent server

$ curl -x localhost:8080 http://nonexistent.invalid/

HTTP/1.1 502 Bad Gateway

Content-Type: text/html

<html><body><h1>502 Bad Gateway</h1>

<p>Could not connect to origin server: nonexistent.invalid</p>

</body></html>

# Proxy logs:

[2024-01-15T10:05:00.000Z] [WARN] [worker-2] server_connect_failed host=nonexistent.invalid error="Name or service not known"

[2024-01-15T10:05:00.001Z] [INFO] [worker-2] request_complete status=502 latency_ms=50 cache=BYPASS

Solution Architecture

High-Level Architecture

┌─────────────────────────────────────────────────────────────────────────┐

│ PROXY ARCHITECTURE │

├─────────────────────────────────────────────────────────────────────────┤

│ │

│ ┌──────────────────────────────────────────────────────────────────┐ │

│ │ MAIN THREAD │ │

│ │ ┌─────────────┐ │ │

│ │ │ Listener │────▶ accept() ────┬─────────────────────────────│ │

│ │ │ Socket │ │ │ │

│ │ └─────────────┘ ▼ │ │

│ │ ┌─────────────────────┐ │ │

│ │ │ Request Queue │ │ │

│ │ │ (Producer-Consumer) │ │ │

│ │ └─────────┬───────────┘ │ │

│ └────────────────────────────────────┼──────────────────────────────┘ │

│ │ │

│ ┌────────────────────────────────────┼──────────────────────────────┐ │

│ │ WORKER THREAD POOL │ │

│ │ │ │ │

│ │ ┌────────────┐ ┌────────────┐ ▼ ┌────────────┐ │ │

│ │ │ Worker 1 │ │ Worker 2 │ ... │ Worker N │ │ │

│ │ └─────┬──────┘ └─────┬──────┘ └─────┬──────┘ │ │

│ │ │ │ │ │ │

│ │ └───────────────┼────────────────────┘ │ │

│ │ │ │ │

│ └────────────────────────┼───────────────────────────────────────────┘ │

│ │ │

│ ┌────────────────────────┼───────────────────────────────────────────┐ │

│ │ ▼ │ │

│ │ ┌─────────────────────┐ │ │

│ │ │ Request Handler │ │ │

│ │ └──────────┬──────────┘ │ │

│ │ │ │ │

│ │ ┌────────────────┼────────────────┐ │ │

│ │ ▼ ▼ ▼ │ │

│ │ ┌──────────┐ ┌──────────────┐ ┌──────────────┐ │ │

│ │ │ Parser │ │ Cache │ │ Forwarder │ │ │

│ │ │ (HTTP) │ │ (LRU) │ │ (to server) │ │ │

│ │ └──────────┘ └──────────────┘ └──────────────┘ │ │

│ │ │ │

│ └─────────────────────────────────────────────────────────────────────┘ │

│ │

└─────────────────────────────────────────────────────────────────────────┘

Module Breakdown

src/

├── main.c # Entry point, argument parsing, server loop

├── config.h # Configuration constants

├──

├── net/

│ ├── listener.c # Socket setup, accept loop

│ ├── connection.c # Connection management, timeouts

│ └── robust_io.c # Wrapper functions for robust I/O

│

├── http/

│ ├── parser.c # HTTP request/response parsing

│ ├── request.c # Request data structures

│ ├── response.c # Response data structures

│ └── headers.c # Header manipulation

│

├── cache/

│ ├── cache.c # LRU cache implementation

│ ├── cache_entry.c # Cache entry lifecycle

│ └── hash.c # Hash function for cache keys

│

├── concurrency/

│ ├── pool.c # Thread pool implementation

│ ├── queue.c # Bounded blocking queue

│ └── sync.c # Synchronization primitives

│

├── handler/

│ ├── proxy.c # Main proxy logic

│ ├── forward.c # Server connection handling

│ └── error.c # Error responses

│

├── logging/

│ ├── logger.c # Structured logging

│ └── metrics.c # Statistics collection

│

└── util/

├── string.c # String utilities

└── time.c # Time handling

Implementation Guide

Phase 1: Iterative Proxy (Week 1-2)

Goal: Get a working proxy that handles one request at a time.

Steps:

- Create listening socket

- Accept client connection

- Read HTTP request

- Parse request line and headers

- Connect to origin server

- Forward request

- Read response

- Forward response to client

- Close connections

Key code patterns:

// Robust read wrapper (CS:APP style)

ssize_t rio_readn(int fd, void *buf, size_t n) {

size_t nleft = n;

ssize_t nread;

char *bufp = buf;

while (nleft > 0) {

if ((nread = read(fd, bufp, nleft)) < 0) {

if (errno == EINTR)

nread = 0; // Interrupted, retry

else

return -1; // Error

} else if (nread == 0) {

break; // EOF

}

nleft -= nread;

bufp += nread;

}

return n - nleft;

}

Phase 2: Concurrent Proxy (Week 3-4)

Goal: Handle multiple clients simultaneously using thread pool.

Key components:

// Bounded blocking queue

typedef struct {

int *buf; // Buffer of client fds

int capacity; // Maximum queue size

int count; // Current count

int head, tail; // Ring buffer indices

pthread_mutex_t lock; // Mutex

pthread_cond_t not_empty;

pthread_cond_t not_full;

} blocking_queue_t;

void queue_put(blocking_queue_t *q, int item) {

pthread_mutex_lock(&q->lock);

while (q->count == q->capacity)

pthread_cond_wait(&q->not_full, &q->lock);

q->buf[q->tail] = item;

q->tail = (q->tail + 1) % q->capacity;

q->count++;

pthread_cond_signal(&q->not_empty);

pthread_mutex_unlock(&q->lock);

}

Phase 3: Caching (Week 5-6)

Goal: Cache responses to avoid redundant server requests.

Cache design considerations:

- Key: URL (or hash of URL + relevant headers)

- Value: Response headers + body

- Eviction: LRU based on total cache size

- Thread safety: Reader-writer lock

Phase 4: Robustness & Security (Week 7-8)

Goal: Handle edge cases and malicious input.

Critical checks:

- Content-Length overflow (integer overflow attack)

- Header line length limits (prevent buffer overflow)

- URL length limits

- Connection timeouts

- Graceful handling of broken pipes (SIGPIPE)

Phase 5: Observability & Polish (Week 9-10)

Goal: Make the proxy debuggable and measurable.

Logging format:

[2024-01-15T10:23:45.123Z] [INFO] [thread-3] request_start uri=/path client=192.168.1.1:54321

[2024-01-15T10:23:45.456Z] [INFO] [thread-3] cache_hit uri=/path age_ms=5000

[2024-01-15T10:23:45.457Z] [INFO] [thread-3] request_complete uri=/path status=200 latency_ms=334

Testing Strategy

Unit Tests

// Test HTTP parser

void test_parse_request() {

const char *raw = "GET /path HTTP/1.1\r\nHost: example.com\r\n\r\n";

http_request_t req;

int result = http_parse_request(raw, strlen(raw), &req);

assert(result == 0);

assert(strcmp(req.method, "GET") == 0);

assert(strcmp(req.path, "/path") == 0);

assert(strcmp(req.host, "example.com") == 0);

}

Integration Tests

# Basic proxy test

curl -x localhost:8080 http://httpbin.org/get

# Cache test

curl -x localhost:8080 http://httpbin.org/cache/60

curl -x localhost:8080 http://httpbin.org/cache/60 # Should be cached

# Concurrency test

ab -n 1000 -c 100 -X localhost:8080 http://httpbin.org/delay/0.1

Stress Testing

# Siege for load testing

siege -c 100 -t 1M --proxy=localhost:8080 http://httpbin.org/get

# Slow client simulation

slowhttptest -c 1000 -H -g -o slow_test -i 10 -r 200 -t GET \

-u http://localhost:8080/test -x 24 -p 3

Correctness Tools

# Memory errors

valgrind --leak-check=full --track-origins=yes ./proxy

# Thread safety

valgrind --tool=helgrind ./proxy

# Address sanitizer (compile with -fsanitize=address)

./proxy # Will report buffer overflows at runtime

# Thread sanitizer (compile with -fsanitize=thread)

./proxy # Will report data races at runtime

Common Pitfalls

Networking Pitfalls

| Pitfall | Problem | Solution |

|---|---|---|

| SIGPIPE crash | Writing to closed connection kills process | signal(SIGPIPE, SIG_IGN) or MSG_NOSIGNAL |

| Short reads | Assuming read() returns requested bytes | Use robust I/O wrappers |

| Blocking DNS | gethostbyname blocks all threads |

Use getaddrinfo with thread-safe resolver |

| Port exhaustion | Not closing sockets properly | Use SO_REUSEADDR, track connections |

Concurrency Pitfalls

| Pitfall | Problem | Solution |

|---|---|---|

| Race conditions | Shared data without synchronization | Mutex/rwlock all shared state |

| Deadlocks | Lock ordering violations | Always acquire locks in same order |

| Thread-local storage | Using global buffers in threads | Stack-allocate or use thread-local storage |

| Zombie threads | Not joining terminated threads | Detach or join all threads |

HTTP Pitfalls

| Pitfall | Problem | Solution |

|---|---|---|

| Missing Host header | HTTP/1.1 requires Host header | Parse and forward correctly |

| Connection header | HTTP/1.1 keep-alive confusion | Convert to HTTP/1.0 or handle properly |

| Chunked encoding | Body has no Content-Length | Parse Transfer-Encoding: chunked |

| Binary content | Assuming text data | Use Content-Length for body reading |

Extensions

HTTPS Support

Implement CONNECT method for HTTPS tunneling.

HTTP/2

Upgrade to HTTP/2 for multiplexed connections.

Connection Pooling

Maintain persistent connections to popular servers.

Load Balancing

Distribute requests across multiple origin servers.

Content Filtering

Block ads or malicious content.

Metrics Dashboard

Real-time visualization of proxy statistics.

Real-World Connections

Industry Proxies

| Proxy | Use Case | Architecture |

|---|---|---|

| Nginx | Reverse proxy, load balancer | Event-driven (epoll) |

| HAProxy | Load balancer | Single-threaded event loop |

| Squid | Caching proxy | Multi-process |

| Envoy | Service mesh | Thread pool + event loop |

Career Relevance

- Systems Engineer: Understanding of low-level networking

- Site Reliability Engineer: Debugging distributed systems

- Security Engineer: Understanding attack vectors

- Backend Developer: Building scalable services

Resources

CS:APP References

| Topic | Chapter | Relevance |

|---|---|---|

| Network programming | 11 | Socket API, protocols |

| Concurrency | 12 | Thread pools, synchronization |

| Robust I/O | 10 | Wrapper functions |

| Exceptional control flow | 8 | Signal handling |

| Virtual memory | 9 | Memory-mapped cache |

| Performance | 5-6 | Optimization |

Additional Reading

- “Unix Network Programming” by Stevens: Definitive socket programming reference

- “High Performance Browser Networking” by Grigorik: Modern web protocols

- HTTP/1.1 Specification (RFC 7230-7235): Protocol details

Self-Assessment Checklist

Core Functionality

- Proxy correctly forwards GET requests

- Handles HTTP/1.0 and HTTP/1.1

- Maintains correct headers

- Returns appropriate error responses

Concurrency

- Implements at least two concurrency models

- Thread pool handles bounded number of workers

- No race conditions under stress test

- No deadlocks under stress test

Caching

- Cache stores and retrieves responses

- LRU eviction works correctly

- Cache is thread-safe

- Cache-Control headers respected

Robustness

- Handles malformed requests gracefully

- Handles server connection failures

- Handles partial reads/writes

- No crashes under stress test

- Valgrind reports no memory errors

Observability

- Structured logging implemented

- Cache hit/miss metrics available

- Request latency tracked

- Can debug issues using logs

The Core Question You’re Answering

“How do I build software that is correct, fast, and robust when dealing with the inherent complexity of networks, concurrency, and untrusted input - and how do I prove these properties?”

This capstone forces you to confront the reality that systems programming is hard precisely because you’re dealing with:

- Partial failures: Networks drop, servers timeout, clients disconnect

- Concurrency: Shared state must be protected, but over-synchronization kills performance

- Adversarial input: Every byte from the network could be malicious

- Observable complexity: You must be able to diagnose problems in production

Concepts You Must Understand First

Before starting this project, ensure you have mastered these concepts:

| Concept | Where to Learn | Why Critical |

|---|---|---|

| Socket API | CS:APP 11.4 | Foundation of all networking |

| TCP connection lifecycle | CS:APP 11.4.3 | Understanding connect, accept, close |

| HTTP protocol basics | RFC 7230-7235 | What you’re proxying |

| Thread synchronization | CS:APP 12.4-12.5 | Race-free concurrent code |

| Producer-consumer pattern | CS:APP 12.5.4 | Thread pool implementation |

| Robust I/O | CS:APP 10.5 | Handling partial reads/writes |

| Signal handling | CS:APP 8.5 | SIGPIPE, SIGCHLD handling |

| Memory allocation | CS:APP 9.9 | Cache implementation |

| Buffer overflow | CS:APP 3.10 | Security hardening |

Questions to Guide Your Design

Work through these questions BEFORE writing code:

-

Connection Lifecycle: When do you create/destroy connections to origin servers? What happens if a server connection fails mid-transfer?

-

Thread Pool Sizing: How do you choose the number of worker threads? What happens if all workers are busy and new requests arrive?

-

Cache Key Design: Is the URL alone sufficient as a cache key? What about Vary headers? What about POST requests?

-

Memory Limits: How do you handle a response larger than your cache? What about a response larger than available memory?

-

Timeout Strategy: How long do you wait for a server to respond? What about a slow client to read the response?

-

Error Propagation: If parsing fails mid-header, what do you send to the client? How do you clean up resources?

-

Logging Performance: Can logging itself become a bottleneck? How do you log efficiently under high load?

-

Graceful Shutdown: How do you handle SIGTERM/SIGINT? What about in-flight requests?

Thinking Exercise

Before writing code, trace through this scenario by hand:

Scenario: Two clients request the same uncached URL simultaneously.

Time Client A Proxy Origin Server

────────────────────────────────────────────────────────────────────────────────

t=0 GET /data HTTP/1.1 -->

t=1 Check cache: MISS

t=2 Lock cache entry for /data

t=3 Connect to server -->

t=4 <-- 200 OK Receive response <-- 200 OK

t=5 GET /data HTTP/1.1 -->

t=6 ???? WHAT HAPPENS ????

t=7 Store in cache

t=8 Unlock cache entry

Questions:

- At t=6, should Client B wait for Client A’s request to complete? Or make a separate server request?

- If Client A’s server connection fails at t=4, what happens to Client B?

- How do you prevent both clients from making redundant server requests?

- What data structures and locks do you need to handle this correctly?

Hint: Research “cache stampede” or “thundering herd” problems.

Hints in Layers

Use these hints progressively if stuck:

Hint 1: Start Small

Build an echo server first. Then a simple forwarder (no parsing). Then add HTTP parsing. Don’t try to build everything at once.

Hint 2: Use Existing Infrastructure

For HTTP parsing, consider starting with a simple hand-written parser, but understand that production code might use libraries like http-parser or llhttp.

Hint 3: Debug One Thing at a Time

Test iterative mode until it’s bulletproof. Then add threading. Then add caching. Never add complexity to broken code.

Hint 4: Log Everything (Initially)

In development, log every system call’s return value. It’s the only way to understand what’s happening.

Hint 5: Use Valgrind From Day 1

Run under Valgrind from the start. Memory bugs compound; catching them early saves weeks.

The Interview Questions They’ll Ask

After completing this project, you’ll be ready for these questions:

- “Describe how you would design a caching proxy.”

- Cover: connection handling, thread pool, cache data structures, LRU eviction, thread safety

- “How do you handle a slow client that isn’t reading the response?”

- Expected: Timeouts, non-blocking I/O, dropping connection, buffering limits

- “What happens when you write to a socket whose peer has closed?”

- Expected: SIGPIPE (default: terminate), handling with SIG_IGN or MSG_NOSIGNAL

- “How do you prevent race conditions in a thread pool?”

- Expected: Bounded queue with mutex + condition variables, avoiding shared mutable state

- “What’s the difference between TCP and UDP? Why does HTTP use TCP?”

- Expected: Reliability, ordering, connection-oriented vs connectionless

- “How would you debug a proxy that occasionally corrupts responses?”

- Expected: Checksums, logging, Valgrind, reproducible test cases

- “How do you handle HTTP keep-alive connections?”

- Expected: Connection pooling, reuse, timeouts, Content-Length vs chunked encoding

Books That Will Help

| Topic | Book | Specific Chapters |

|---|---|---|

| Network programming fundamentals | CS:APP 3e | Chapter 11 (entire) |

| Concurrency and threads | CS:APP 3e | Chapter 12 (entire) |

| Robust I/O | CS:APP 3e | Chapter 10.5 |

| Signal handling | CS:APP 3e | Chapter 8.5 |

| Memory hierarchy for cache design | CS:APP 3e | Chapter 6.1-6.4 |

| Socket programming details | Stevens: Unix Network Programming Vol 1 | Chapters 1-8 |

| HTTP protocol | RFC 7230-7235 | Full specifications |

| High-performance servers | Stevens: Unix Network Programming Vol 1 | Chapter 30 (Client-Server Design) |

| Linux systems programming | Kerrisk: Linux Programming Interface | Chapters 56-63 |

| Thread programming | Butenhof: Programming with POSIX Threads | Chapters 1-7 |

This guide was expanded from CSAPP_3E_DEEP_LEARNING_PROJECTS.md. For the complete learning path, see the project index.

When you complete this project, you will have built something real - software that can handle actual browser traffic. This is the culmination of everything CS:APP teaches: you understand the full stack from bits to systems, and you can prove it with working code.