Learning the AI SDK (Vercel) Deeply

Goal: Master the Vercel AI SDK through hands-on projects that teach its core concepts by building real applications. By the end of these projects, you will understand how to generate text and structured data from LLMs, implement real-time streaming interfaces, build autonomous agents that use tools, and create production-ready AI systems with proper error handling, cost tracking, and multi-provider support.

Why the AI SDK Matters

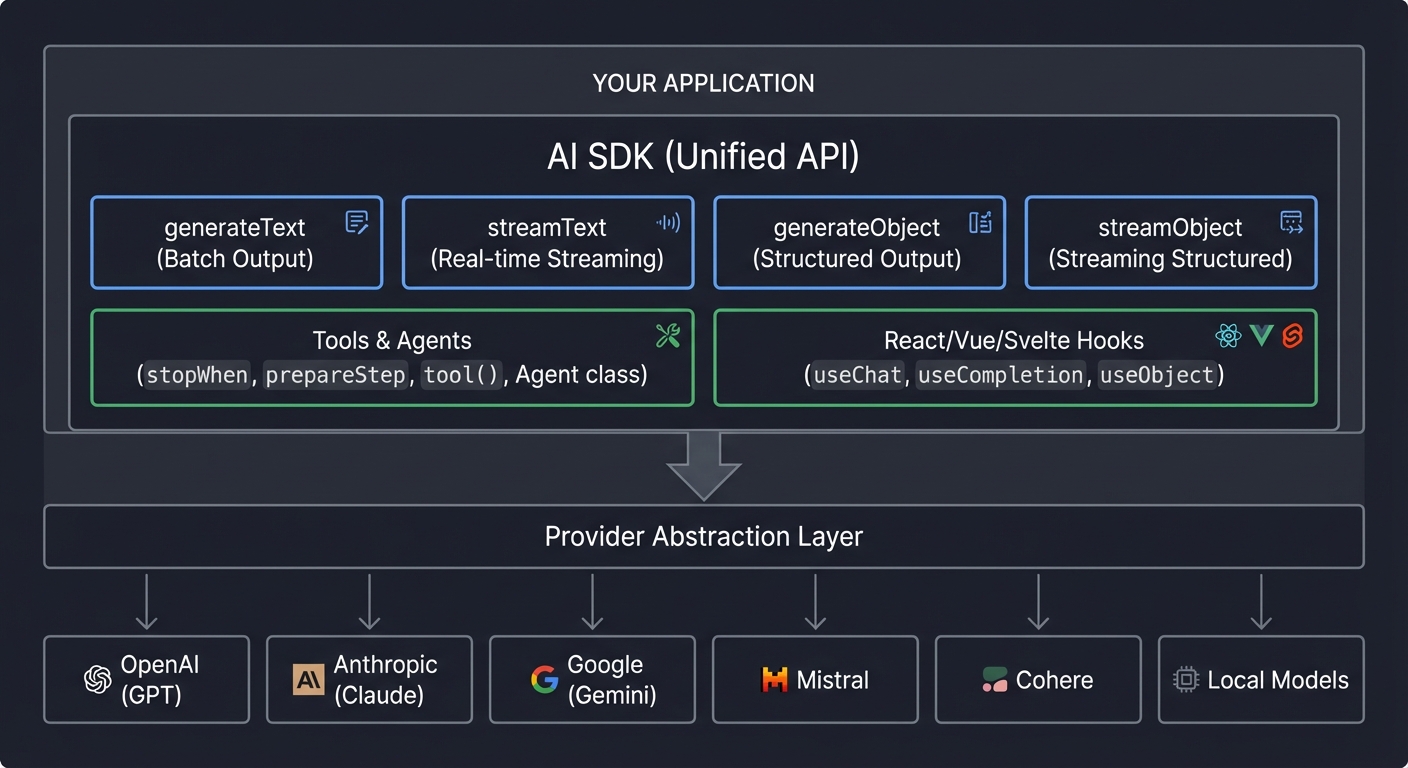

In 2023, when ChatGPT exploded onto the scene, developers scrambled to build AI-powered applications. The problem? Every LLM provider had a different API. OpenAI used one format, Anthropic another, Google yet another. Code written for one provider couldn’t be ported to another without significant rewrites.

Vercel’s AI SDK solved this problem with a radical idea: a unified TypeScript interface that abstracts provider differences. Write once, run on any model. But it’s not just about abstraction—the SDK provides:

- Type-safe structured output with Zod schemas

- First-class streaming with Server-Sent Events and React hooks

- Tool calling that lets LLMs take actions, not just generate text

- Agent loops that run autonomously until tasks complete

Today, the AI SDK powers thousands of production applications. Understanding it deeply means understanding how modern AI applications are built.

The AI SDK in the Ecosystem

┌─────────────────────────────────────────────────────────────────────────────┐

│ YOUR APPLICATION │

│ │

│ ┌───────────────────────────────────────────────────────────────────────┐ │

│ │ AI SDK (Unified API) │ │

│ │ │ │

│ │ ┌─────────────┐ ┌─────────────┐ ┌─────────────┐ ┌─────────────┐ │ │

│ │ │ generateText │ │ streamText │ │generateObject│ │ streamObject│ │ │

│ │ │ Batch │ │ Real-time │ │ Structured │ │ Streaming │ │ │

│ │ │ Output │ │ Streaming │ │ Output │ │ Structured │ │ │

│ │ └─────────────┘ └─────────────┘ └─────────────┘ └─────────────┘ │ │

│ │ │ │

│ │ ┌──────────────────────────────┐ ┌────────────────────────────────┐│ │

│ │ │ Tools & Agents │ │ React/Vue/Svelte Hooks ││ │

│ │ │ stopWhen, prepareStep, │ │ useChat, useCompletion, ││ │

│ │ │ tool(), Agent class │ │ useObject ││ │

│ │ └──────────────────────────────┘ └────────────────────────────────┘│ │

│ │ │ │

│ └────────────────────────────────┬──────────────────────────────────────┘ │

│ │ │

│ Provider Abstraction Layer │

│ │ │

│ ┌────────────┬──────────────┬────┴─────┬──────────────┬────────────────┐ │

│ │ │ │ │ │ │ │

│ ▼ ▼ ▼ ▼ ▼ ▼ │

│ ┌──────┐ ┌──────────┐ ┌───────┐ ┌───────┐ ┌──────────┐ ┌───────┐ │

│ │OpenAI│ │Anthropic │ │Google │ │Mistral│ │ Cohere │ │ Local │ │

│ │ GPT │ │ Claude │ │Gemini │ │ │ │ │ │Models │ │

│ └──────┘ └──────────┘ └───────┘ └───────┘ └──────────┘ └───────┘ │

└─────────────────────────────────────────────────────────────────────────────┘

Core Concepts Deep Dive

Before diving into projects, you must understand the fundamental concepts that make the AI SDK powerful. Each concept builds on the previous one—don’t skip ahead.

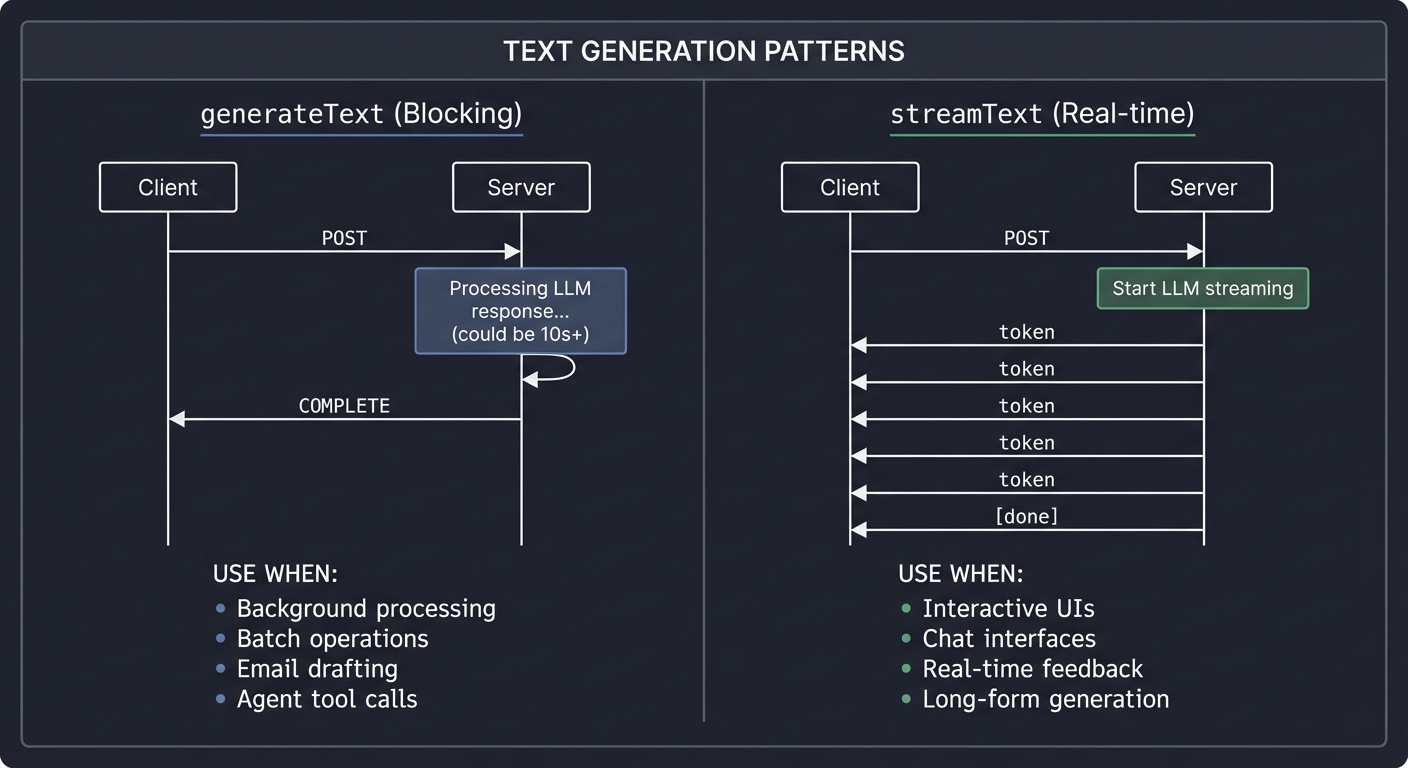

1. Text Generation: The Foundation

At its core, the AI SDK does one thing: sends prompts to LLMs and gets responses back. But HOW you get those responses matters enormously.

┌────────────────────────────────────────────────────────────────────────────┐

│ TEXT GENERATION PATTERNS │

├────────────────────────────────────────────────────────────────────────────┤

│ │

│ generateText (Blocking) streamText (Real-time) │

│ ───────────────────────── ────────────────────── │

│ │

│ Client Server Client Server │

│ │ │ │ │ │

│ │──── POST ────►│ │──── POST ────►│ │

│ │ │ │ │ │

│ │ (waiting) │ ◄─────────────────┐ │ (waiting) │ ◄──────────┐ │

│ │ │ Processing LLM │ │ │ Start LLM │ │

│ │ │ response... │ │◄── token ─────│ │ │

│ │ │ (could be 10s+) │ │◄── token ─────│ streaming │ │

│ │ │ │ │◄── token ─────│ │ │

│ │◄─ COMPLETE ───│ ──────────────────┘ │◄── token ─────│ │ │

│ │ │ │◄── [done] ────│ ───────────┘ │

│ │ │ │ │ │

│ │

│ USE WHEN: USE WHEN: │

│ • Background processing • Interactive UIs │

│ • Batch operations • Chat interfaces │

│ • Email drafting • Real-time feedback │

│ • Agent tool calls • Long-form generation │

│ │

└────────────────────────────────────────────────────────────────────────────┘

Key Insight: generateText blocks until the full response is ready. streamText returns an async iterator that yields tokens as they’re generated. For a 500-word response, generateText makes the user wait 5-10 seconds for anything to appear; streamText shows the first word in milliseconds.

// Blocking - waits for complete response

const { text } = await generateText({

model: openai('gpt-4'),

prompt: 'Explain quantum computing in 500 words'

});

console.log(text); // Full response after ~10 seconds

// Streaming - yields tokens as they arrive

const { textStream } = await streamText({

model: openai('gpt-4'),

prompt: 'Explain quantum computing in 500 words'

});

for await (const chunk of textStream) {

process.stdout.write(chunk); // Each word appears immediately

}

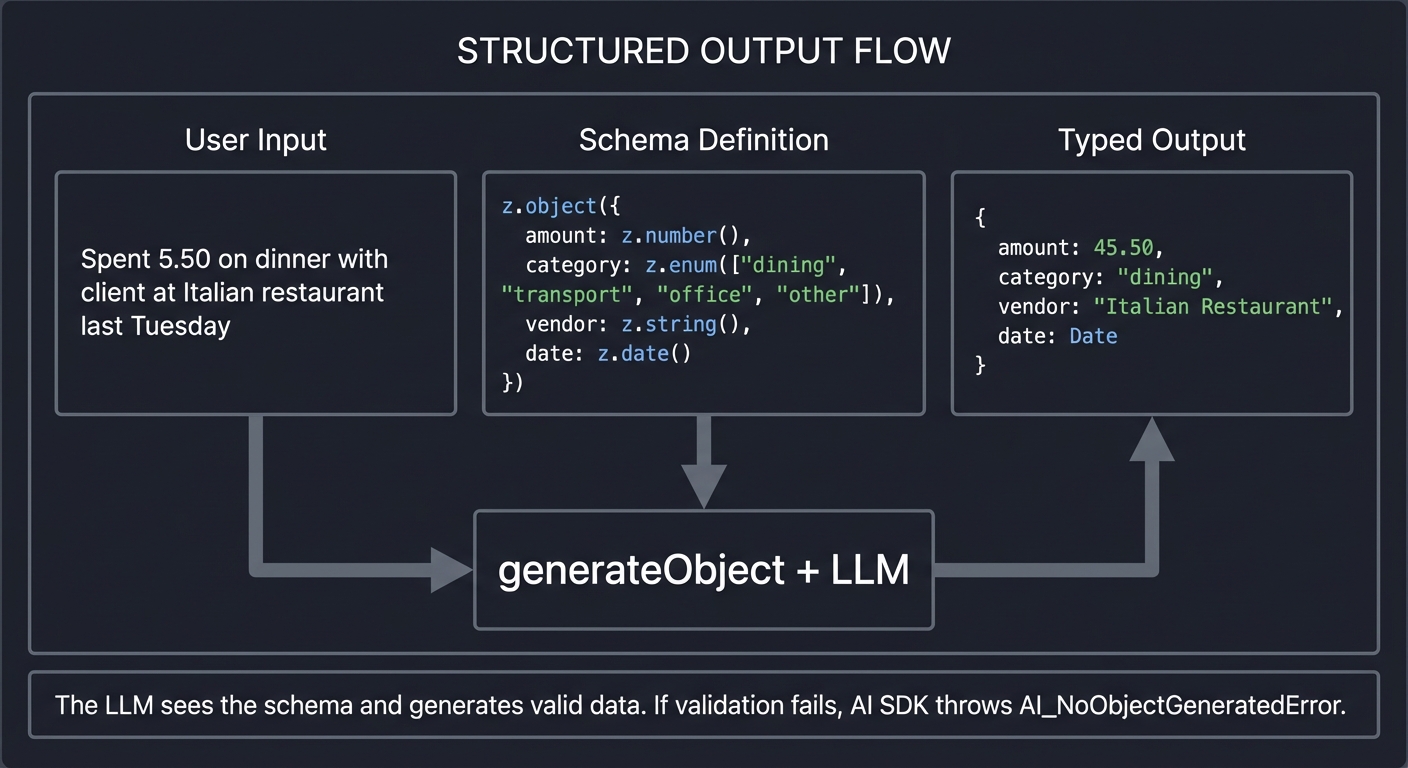

2. Structured Output: Type-Safe AI

Raw text from LLMs is messy. You ask for JSON, you might get markdown. You ask for a number, you might get “approximately 42.” generateObject solves this by enforcing Zod schemas:

┌────────────────────────────────────────────────────────────────────────────┐

│ STRUCTURED OUTPUT FLOW │

├────────────────────────────────────────────────────────────────────────────┤

│ │

│ User Input Schema Definition Typed Output │

│ ────────── ───────────────── ──────────── │

│ │

│ "Spent $45.50 on ┌─────────────────────┐ { │

│ dinner with client │ z.object({ │ amount: 45.50, │

│ at Italian │ amount: z.number │ category: │

│ restaurant │ category: z.enum │ "dining", │

│ last Tuesday" │ vendor: z.string │ vendor: "Italian │

│ │ date: z.date() │ Restaurant", │

│ │ │ }) │ date: Date │

│ │ └──────────┬──────────┘ } │

│ │ │ ▲ │

│ │ │ │ │

│ └───────────────────────────┼───────────────────────┘ │

│ │ │

│ ┌──────┴──────┐ │

│ │ generateObject│ │

│ │ + LLM │ │

│ └─────────────┘ │

│ │

│ The LLM "sees" the schema and generates valid data. │

│ If validation fails, AI SDK throws AI_NoObjectGeneratedError. │

│ │

└────────────────────────────────────────────────────────────────────────────┘

Key Insight: Schema descriptions are prompt engineering. The LLM reads your schema including field descriptions to understand what you want. Better descriptions = better extraction.

const expenseSchema = z.object({

amount: z.number().describe('The monetary amount spent in dollars'),

category: z.enum(['dining', 'travel', 'office', 'entertainment'])

.describe('The expense category for accounting'),

vendor: z.string().describe('The business name where money was spent'),

date: z.date().describe('When the expense occurred')

});

const { object } = await generateObject({

model: openai('gpt-4'),

schema: expenseSchema,

prompt: 'Spent $45.50 on dinner with client at Italian restaurant last Tuesday'

});

// object is fully typed: { amount: number, category: "dining" | ..., ... }

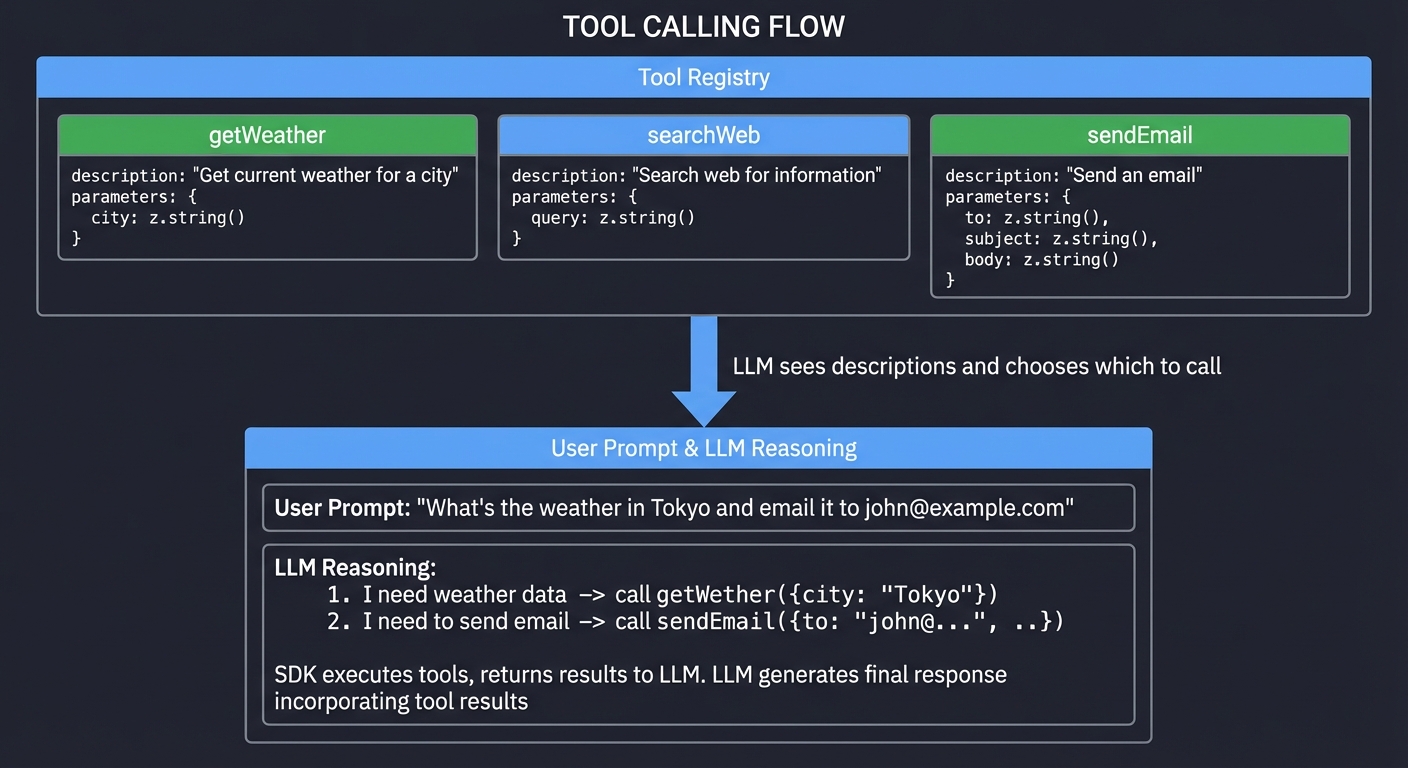

3. Tools: AI That Takes Action

Text generation is passive—the AI talks, you listen. Tools make AI active—the AI can DO things.

┌────────────────────────────────────────────────────────────────────────────┐

│ TOOL CALLING FLOW │

├────────────────────────────────────────────────────────────────────────────┤

│ │

│ ┌──────────────────────────────────────────────────────────────────────┐ │

│ │ Tool Registry │ │

│ │ │ │

│ │ ┌─────────────┐ ┌─────────────┐ ┌─────────────┐ │ │

│ │ │ getWeather │ │ searchWeb │ │ sendEmail │ │ │

│ │ │ │ │ │ │ │ │ │

│ │ │ description:│ │ description:│ │ description:│ │ │

│ │ │ "Get current│ │ "Search the │ │ "Send an │ │ │

│ │ │ weather │ │ web for │ │ email to │ │ │

│ │ │ for city" │ │ information│ │ a recipient│ │ │

│ │ │ │ │ " │ │ " │ │ │

│ │ │ input: │ │ input: │ │ input: │ │ │

│ │ │ {city} │ │ {query} │ │ {to,subj, │ │ │

│ │ │ │ │ │ │ body} │ │ │

│ │ └─────────────┘ └─────────────┘ └─────────────┘ │ │

│ └──────────────────────────────────────────────────────────────────────┘ │

│ │ │

│ │ LLM sees descriptions │

│ │ and chooses which to call │

│ ▼ │

│ ┌──────────────────────────────────────────────────────────────────────┐ │

│ │ User: "What's the weather in Tokyo and email it to john@example.com" │ │

│ │ │ │

│ │ LLM Reasoning: │ │

│ │ 1. I need weather data → call getWeather({city: "Tokyo"}) │ │

│ │ 2. I need to send email → call sendEmail({to: "john@...", ...}) │ │

│ │ │ │

│ │ SDK executes tools, returns results to LLM │ │

│ │ LLM generates final response incorporating tool results │ │

│ └──────────────────────────────────────────────────────────────────────┘ │

│ │

└────────────────────────────────────────────────────────────────────────────┘

Key Insight: The LLM decides WHEN and WHICH tools to call based on your descriptions. You don’t control the flow—you define capabilities and let the LLM orchestrate.

const tools = {

getWeather: tool({

description: 'Get current weather for a city',

parameters: z.object({

city: z.string().describe('City name')

}),

execute: async ({ city }) => {

const response = await fetch(`https://api.weather.com/${city}`);

return response.json();

}

})

};

const { text, toolCalls } = await generateText({

model: openai('gpt-4'),

tools,

prompt: 'What is the weather in Tokyo?'

});

// LLM called getWeather, got result, and incorporated it into response

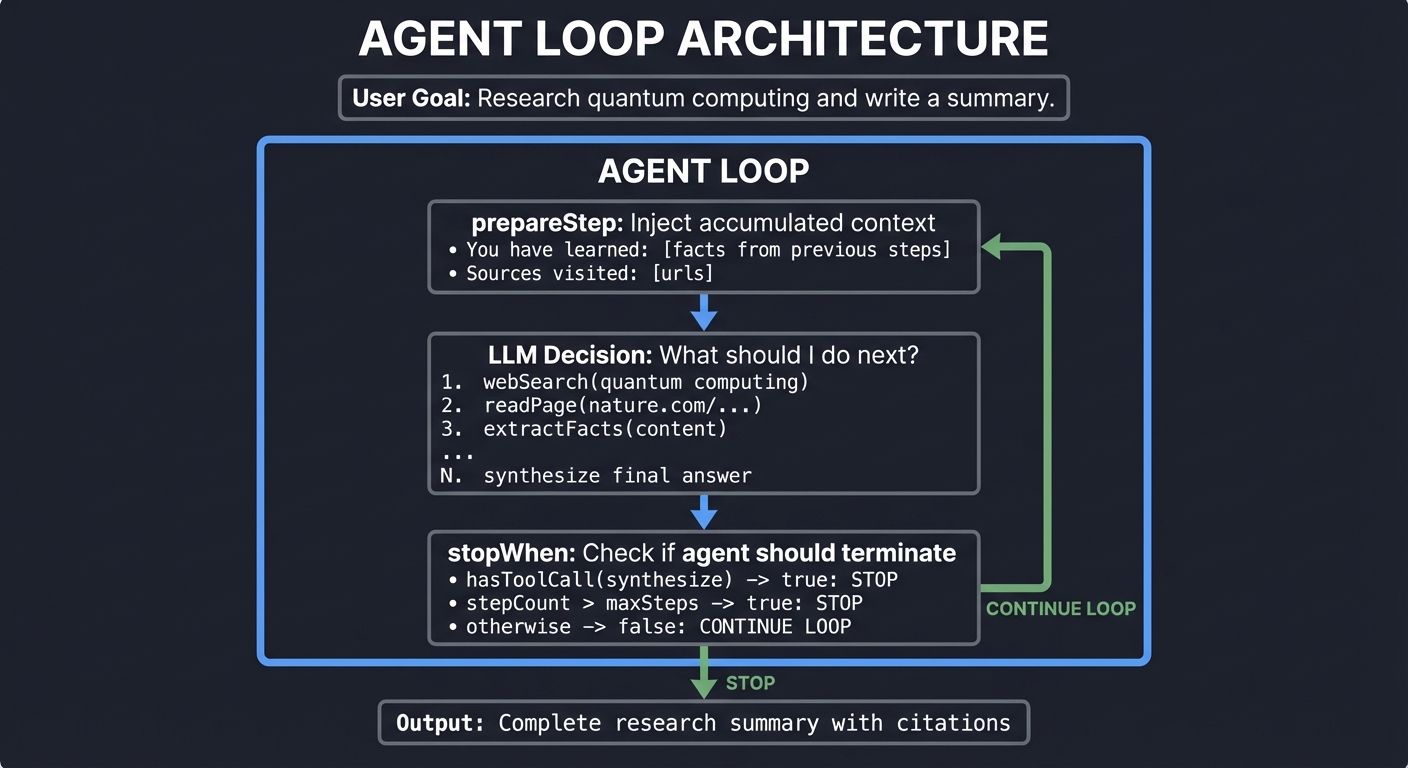

4. Agents: Autonomous AI

A tool call is a single action. An agent is an LLM in a loop, calling tools repeatedly until a task is complete.

┌────────────────────────────────────────────────────────────────────────────┐

│ AGENT LOOP ARCHITECTURE │

├────────────────────────────────────────────────────────────────────────────┤

│ │

│ User Goal: "Research quantum computing and write a summary" │

│ │

│ ┌────────────────────────────────────────────────────────────────────┐ │

│ │ AGENT LOOP │ │

│ │ │ │

│ │ ┌─────────────────────────────────────────────────────────────┐ │ │

│ │ │ prepareStep: Inject accumulated context │ │ │

│ │ │ • "You have learned: [facts from previous steps]" │ │ │

│ │ │ • "Sources visited: [urls]" │ │ │

│ │ └──────────────────────────┬──────────────────────────────────┘ │ │

│ │ │ │ │

│ │ ▼ │ │

│ │ ┌─────────────────────────────────────────────────────────────┐ │ │

│ │ │ LLM Decision: What should I do next? │ │ │

│ │ │ │ │ │

│ │ │ Step 1: "I need to search" → webSearch("quantum computing")│ │ │

│ │ │ Step 2: "I should read this" → readPage("nature.com/...") │ │ │

│ │ │ Step 3: "I found facts" → extractFacts(content) │ │ │

│ │ │ Step 4: "Need more info" → webSearch("quantum error...") │ │ │

│ │ │ ... │ │ │

│ │ │ Step N: "I have enough" → synthesize final answer │ │ │

│ │ └──────────────────────────┬──────────────────────────────────┘ │ │

│ │ │ │ │

│ │ ▼ │ │

│ │ ┌─────────────────────────────────────────────────────────────┐ │ │

│ │ │ stopWhen: Check if agent should terminate │ │ │

│ │ │ • hasToolCall('synthesize') → true: STOP │ │ │

│ │ │ • stepCount > maxSteps → true: STOP │ │ │

│ │ │ • otherwise → false: CONTINUE LOOP │ │ │

│ │ └─────────────────────────────────────────────────────────────┘ │ │

│ │ │ │

│ └────────────────────────────────────────────────────────────────────┘ │

│ │

│ Output: Complete research summary with citations │

│ │

└────────────────────────────────────────────────────────────────────────────┘

Key Insight: stopWhen and prepareStep are your control mechanisms. prepareStep injects state before each iteration; stopWhen decides when to stop. The agent is autonomous between these boundaries.

const { text, steps } = await generateText({

model: openai('gpt-4'),

tools: { search, readPage, synthesize },

stopWhen: hasToolCall('synthesize'), // Stop when synthesis tool is called

prepareStep: async ({ previousSteps }) => {

// Inject accumulated knowledge before each step

const facts = extractFacts(previousSteps);

return {

system: `You are a research agent. Facts learned so far: ${facts}`

};

},

prompt: 'Research quantum computing and write a summary'

});

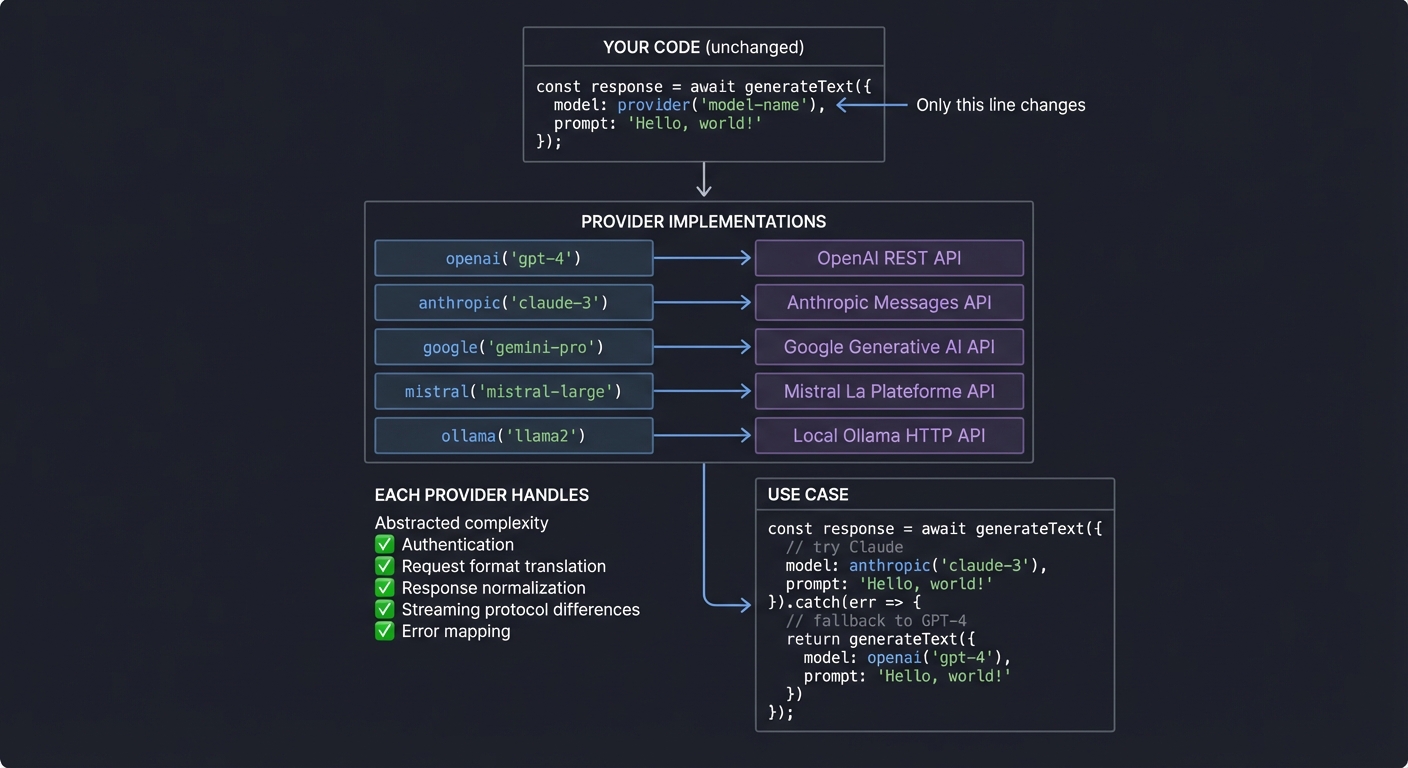

5. Provider Abstraction: Write Once, Run Anywhere

Different LLM providers have different APIs, capabilities, and quirks. The AI SDK normalizes them:

┌────────────────────────────────────────────────────────────────────────────┐

│ PROVIDER ABSTRACTION │

├────────────────────────────────────────────────────────────────────────────┤

│ │

│ YOUR CODE (unchanged) │

│ ───────────────────── │

│ │

│ const result = await generateText({ │

│ model: provider('model-name'), ◄── Only this line changes │

│ prompt: 'Your prompt here' │

│ }); │

│ │

│ ┌────────────────────────────────────────────────────────────────────┐ │

│ │ Provider Implementations │ │

│ │ │ │

│ │ openai('gpt-4') → OpenAI REST API │ │

│ │ anthropic('claude-3') → Anthropic Messages API │ │

│ │ google('gemini-pro') → Google Generative AI API │ │

│ │ mistral('mistral-large') → Mistral La Plateforme API │ │

│ │ ollama('llama2') → Local Ollama HTTP API │ │

│ │ │ │

│ │ Each provider handles: │ │

│ │ • Authentication (API keys, tokens) │ │

│ │ • Request format translation │ │

│ │ • Response normalization │ │

│ │ • Streaming protocol differences │ │

│ │ • Error mapping to AI SDK error types │ │

│ │ │ │

│ └────────────────────────────────────────────────────────────────────┘ │

│ │

│ USE CASE: Fallback chains, cost optimization, capability routing │

│ │

│ // Try Claude for reasoning, fall back to GPT-4 │

│ try { │

│ return await generateText({ model: anthropic('claude-3-opus') }); │

│ } catch { │

│ return await generateText({ model: openai('gpt-4') }); │

│ } │

│ │

└────────────────────────────────────────────────────────────────────────────┘

6. Streaming Architecture: Server-Sent Events

Understanding HOW streaming works is crucial for building real-time AI interfaces:

┌────────────────────────────────────────────────────────────────────────────┐

│ STREAMING DATA FLOW │

├────────────────────────────────────────────────────────────────────────────┤

│ │

│ Browser Next.js API Route LLM Provider │

│ │ │ │ │

│ │── POST /api/chat ───────►│ │ │

│ │ │── streamText() ─────────────►│ │

│ │ │ │ │

│ │ │◄─ AsyncIterableStream ───────│ │

│ │ │ (yields token by token) │ │

│ │ │ │ │

│ │ ┌──────┴──────┐ │ │

│ │ │ toDataStream│ │ │

│ │ │ Response() │ │ │

│ │ └──────┬──────┘ │ │

│ │ │ │ │

│ │◄─ SSE: data: {"type":"text","value":"The"} ─────────────│ │

│ │◄─ SSE: data: {"type":"text","value":" quantum"} ────────│ │

│ │◄─ SSE: data: {"type":"text","value":" computer"} ───────│ │

│ │◄─ SSE: data: {"type":"finish"} ─────────────────────────│ │

│ │ │ │ │

│ ┌─┴─┐ │ │ │

│ │useChat hook │ │ │

│ │processes SSE │ │ │

│ │updates React state │ │ │

│ │triggers re-render │ │ │

│ └───┘ │ │ │

│ │

│ SSE Format: │

│ ─────────── │

│ event: message │

│ data: {"type":"text-delta","textDelta":"The"} │

│ │

│ data: {"type":"text-delta","textDelta":" answer"} │

│ │

│ data: {"type":"finish","finishReason":"stop"} │

│ │

└────────────────────────────────────────────────────────────────────────────┘

Key Insight: Server-Sent Events are unidirectional (server → client), simpler than WebSockets, and perfect for LLM streaming. The AI SDK handles all the serialization and React state management.

Concept Summary Table

| Concept Cluster | What You Need to Internalize |

|---|---|

| Text Generation | generateText is blocking, streamText is real-time. Both are the foundation for all LLM interactions. |

| Structured Output | generateObject transforms unstructured text into typed, validated data. Zod schemas guide LLM output. Schema descriptions are prompt engineering. |

| Tool Calling | Tools are functions the LLM can invoke. The LLM decides WHEN and WHICH tool to call based on descriptions. You define capabilities; the LLM orchestrates. |

| Agent Loop | An agent is an LLM in a loop, calling tools until a task is complete. stopWhen and prepareStep are your control mechanisms. |

| Provider Abstraction | Switch between OpenAI, Anthropic, Google with one line. The SDK normalizes API differences, auth, streaming protocols. |

| Streaming Architecture | SSE transport, AsyncIterableStream, token-by-token delivery. React hooks (useChat, useCompletion) handle client-side state. |

| Error Handling | AI_NoObjectGeneratedError, provider failures, stream errors. Production AI needs graceful degradation and retry logic. |

| Telemetry | Track tokens, costs, latency per request. Essential for production AI systems and cost optimization. |

Deep Dive Reading By Concept

| Concept | Book Chapters & Resources |

|---|---|

| Text Generation | • “JavaScript: The Definitive Guide” by David Flanagan - Ch. 13 (Asynchronous JavaScript, Promises, async/await) • AI SDK generateText docs • AI SDK streamText docs |

| Structured Output | • “Programming TypeScript” by Boris Cherny - Ch. 3 (Types), Ch. 6 (Advanced Types) • AI SDK generateObject docs • Zod documentation - Schema validation patterns |

| Tool Calling | • “Building LLM Apps” by Harrison Chase (LangChain blog series) • AI SDK Tools and Tool Calling • How to build AI Agents with Vercel |

| Agent Loop | • “ReAct: Synergizing Reasoning and Acting” (Yao et al.) - The academic foundation • AI SDK Agents docs • “Artificial Intelligence: A Modern Approach” by Russell & Norvig - Ch. 2 (Intelligent Agents) |

| Provider Abstraction | • “Design Patterns” by Gang of Four - Adapter pattern • AI SDK Providers docs • “Designing Data-Intensive Applications” by Martin Kleppmann - Ch. 4 (Encoding and Evolution) |

| Streaming Architecture | • “JavaScript: The Definitive Guide” by David Flanagan - Ch. 13 (Async Iteration), Ch. 15.11 (Server-Sent Events) • “Node.js Design Patterns” by Mario Casciaro - Ch. 6 (Streams) • MDN Server-Sent Events • AI SDK UI hooks docs |

| Error Handling | • “Programming TypeScript” by Boris Cherny - Ch. 7 (Handling Errors) • “Release It!, 2nd Edition” by Michael Nygard - Ch. 5 (Stability Patterns) • AI SDK Error Handling docs |

| Telemetry | • AI SDK Telemetry docs • “Designing Data-Intensive Applications” by Martin Kleppmann - Ch. 1 (Reliability, Observability) • OpenTelemetry documentation for observability patterns |

Project 1: AI-Powered Expense Tracker CLI

- File: AI_SDK_LEARNING_PROJECTS.md

- Programming Language: TypeScript

- Coolness Level: Level 2: Practical but Forgettable

- Business Potential: 2. The “Micro-SaaS / Pro Tool”

- Difficulty: Level 1: Beginner

- Knowledge Area: Generative AI / CLI Tools

- Software or Tool: AI SDK / Zod

- Main Book: “Programming TypeScript” by Boris Cherny

What you’ll build: A command-line tool where you describe expenses in natural language (“Spent $45.50 on dinner with client at Italian restaurant”) and it extracts, categorizes, and stores structured expense records.

Why it teaches AI SDK: This forces you to understand generateObject and Zod schemas at their core. You’ll see how the LLM transforms unstructured human text into validated, typed data—the bread and butter of real AI applications.

Core challenges you’ll face:

- Designing Zod schemas that guide LLM output effectively (maps to structured output)

- Handling validation errors when the LLM produces invalid data (maps to error handling)

- Adding schema descriptions to improve extraction accuracy (maps to prompt engineering)

- Supporting multiple categories and edge cases (maps to schema design)

Key Concepts:

- Zod Schema Design: AI SDK Generating Structured Data Docs

- TypeScript Type Inference: “Programming TypeScript” by Boris Cherny - Ch. 3

- CLI Development: “Command-Line Rust” by Ken Youens-Clark (patterns apply to TS too)

Difficulty: Beginner Time estimate: Weekend Prerequisites: Basic TypeScript, npm/pnpm

Learning milestones:

- First

generateObjectcall returns parsed expense → you understand schema-to-output mapping - Adding descriptions to schema fields improves extraction → you grasp how LLMs consume schemas

- Handling

AI_NoObjectGeneratedErrorgracefully → you understand AI SDK error patterns

Real World Outcome

When you run the CLI, here’s exactly what you’ll see in your terminal:

$ expense "Coffee with team $23.40 at Starbucks this morning"

✓ Expense recorded

┌─────────────────────────────────────────────────────────────────┐

│ EXPENSE RECORD │

├─────────────────────────────────────────────────────────────────┤

│ Amount: $23.40 │

│ Category: dining │

│ Vendor: Starbucks │

│ Date: 2025-12-22 │

│ Notes: Coffee with team │

├─────────────────────────────────────────────────────────────────┤

│ ID: exp_a7f3b2c1 │

│ Created: 2025-12-22T10:34:12Z │

└─────────────────────────────────────────────────────────────────┘

Saved to ~/.expenses/2025-12.json

Try more complex natural language inputs:

$ expense "Took an Uber from airport to hotel, $67.80, for the Chicago conference trip"

✓ Expense recorded

┌─────────────────────────────────────────────────────────────────┐

│ EXPENSE RECORD │

├─────────────────────────────────────────────────────────────────┤

│ Amount: $67.80 │

│ Category: travel │

│ Vendor: Uber │

│ Date: 2025-12-22 │

│ Notes: Airport to hotel, Chicago conference │

├─────────────────────────────────────────────────────────────────┤

│ ID: exp_b8e4c3d2 │

│ Created: 2025-12-22T10:35:45Z │

└─────────────────────────────────────────────────────────────────┘

Generate reports:

$ expense report --month 2025-12

┌─────────────────────────────────────────────────────────────────┐

│ EXPENSE REPORT: December 2025 │

├─────────────────────────────────────────────────────────────────┤

│ │

│ SUMMARY BY CATEGORY │

│ ─────────────────── │

│ dining │████████████████ │ $234.50 (12 expenses) │

│ travel │████████████ │ $567.80 (5 expenses) │

│ office │████ │ $89.20 (3 expenses) │

│ entertainment │██ │ $45.00 (2 expenses) │

│ ───────────────────────────────────────────────────────────── │

│ TOTAL $936.50 (22 expenses) │

│ │

└─────────────────────────────────────────────────────────────────┘

Exported to ~/.expenses/report-2025-12.csv

Handle errors gracefully:

$ expense "bought something"

⚠ Could not extract expense details

Missing information:

• Amount: No monetary value found

• Vendor: No vendor/merchant identified

Please include at least an amount, e.g.:

expense "bought lunch $15 at Chipotle"

The Core Question You’re Answering

“How do I transform messy, unstructured human text into clean, typed, validated data structures using AI?”

This is THE fundamental pattern of modern AI applications. Every chatbot that fills out forms, every assistant that creates calendar events, every tool that extracts data from documents—they all use this pattern. You describe something in plain English, and the AI SDK + LLM extracts structured data.

Before you write code, understand: generateObject is not just “LLM call with schema.” The schema itself is part of the prompt. The LLM sees your Zod schema including field names, types, and descriptions. Better schemas = better extraction.

Concepts You Must Understand First

Stop and research these before coding:

- Zod Schemas as LLM Instructions

- What is a Zod schema and how does TypeScript infer types from it?

- How does

generateObjectsend the schema to the LLM? - Why do

.describe()methods on schema fields improve extraction? - Reference: Zod documentation - Start here

- generateObject vs generateText

- When would you use

generateTextvsgenerateObject? - What happens internally when you call

generateObject? - What is

AI_NoObjectGeneratedErrorand when does it occur? - Reference: AI SDK generateObject docs

- When would you use

- TypeScript Type Inference

- How does

z.infer<typeof schema>work? - Why is this important for type-safe AI applications?

- Book Reference: “Programming TypeScript” by Boris Cherny - Ch. 3 (Types)

- How does

- Error Handling in AI Systems

- What happens when the LLM generates data that doesn’t match the schema?

- How do you handle partial matches or missing fields?

- What’s the difference between validation errors and generation errors?

- Book Reference: “Programming TypeScript” by Boris Cherny - Ch. 7 (Handling Errors)

- CLI Design Patterns

- How do you parse command-line arguments in Node.js?

- What makes a good CLI user experience?

- Book Reference: “Command-Line Rust” by Ken Youens-Clark - Ch. 1-2 (patterns apply to TypeScript)

Questions to Guide Your Design

Before implementing, think through these:

- Schema Design

- What fields does an expense record need? (amount, category, vendor, date, notes?)

- What data types should each field be? (number, enum, string, Date?)

- Which fields are required vs optional?

- How do you handle ambiguous categories? (Is “Uber” travel or transportation?)

- Natural Language Parsing

- How many ways can someone describe “$45.50”? (“45.50”, “$45.50”, “forty-five fifty”, “about 45 bucks”)

- How do you handle relative dates? (“yesterday”, “last Tuesday”, “this morning”)

- What if the vendor is implied but not stated? (“got coffee” → Starbucks?)

- Storage and Persistence

- Where do you store expenses? (JSON file, SQLite, in-memory?)

- How do you organize by month/year for reporting?

- How do you handle concurrent writes?

- Error Recovery

- What do you do when extraction fails completely?

- How do you handle partial extraction (got amount but no vendor)?

- Should you prompt the user for missing information?

- CLI Interface

- What commands do you need? (

add,list,report,export?) - How do you handle interactive vs non-interactive modes?

- What output formats do you support? (JSON, table, CSV?)

- What commands do you need? (

Thinking Exercise

Before coding, design your schema on paper:

// Start with this skeleton and fill in the blanks:

const expenseSchema = z.object({

// What fields do you need?

// What types should they be?

// What descriptions will help the LLM understand what you want?

amount: z.number().describe('???'),

category: z.enum(['???']).describe('???'),

vendor: z.string().describe('???'),

date: z.string().describe('???'), // or z.date()?

notes: z.string().optional().describe('???'),

});

// Now trace through these inputs:

// 1. "Coffee $4.50 at Starbucks"

// 2. "Spent around 50 bucks on office supplies at Amazon yesterday"

// 3. "Uber to airport" ← No amount! What happens?

// 4. "Bought stuff" ← Very ambiguous! What happens?

Questions while tracing:

- Which inputs will extract cleanly?

- Which will cause validation errors?

- How would you modify your schema to handle more edge cases?

- What descriptions would help the LLM interpret “around 50 bucks”?

The Interview Questions They’ll Ask

Prepare to answer these:

- “What is the difference between generateText and generateObject?”

generateTextreturns unstructured text.generateObjectreturns a typed object validated against a Zod schema. UsegenerateObjectwhen you need structured, validated data.

- “How does Zod work with the AI SDK?”

- Zod schemas define the expected structure. The AI SDK serializes the schema (including descriptions) and sends it to the LLM. The LLM generates JSON matching the schema. The SDK validates the response and returns a typed object.

- “What happens if the LLM generates invalid data?”

- The SDK throws

AI_NoObjectGeneratedError. You can catch this and retry, prompt for more information, or fall back gracefully.

- The SDK throws

- “How do schema descriptions affect LLM output quality?”

- Descriptions are essentially prompt engineering embedded in your type definitions. Clear descriptions with examples dramatically improve extraction accuracy.

- “How would you handle partial extraction?”

- Use optional fields (

z.optional()) for non-critical data. For required fields, validate the error and prompt the user for missing information.

- Use optional fields (

- “What are the tradeoffs of different expense categories?”

z.enum()limits categories but ensures consistency.z.string()is flexible but may result in inconsistent categorization. A middle ground: usez.enum()with a catch-all “other” category.

Hints in Layers

Hint 1: Basic Setup Start with the simplest possible schema and a single command:

import { generateObject } from 'ai';

import { openai } from '@ai-sdk/openai';

import { z } from 'zod';

const expenseSchema = z.object({

amount: z.number(),

vendor: z.string(),

});

const { object } = await generateObject({

model: openai('gpt-4o-mini'),

schema: expenseSchema,

prompt: process.argv[2], // "Coffee $5 at Starbucks"

});

console.log(object);

Run it and see what you get. Does it work? What’s missing?

Hint 2: Add Descriptions Descriptions dramatically improve extraction:

const expenseSchema = z.object({

amount: z.number()

.describe('The monetary amount spent in US dollars. Extract from phrases like "$45.50", "45 dollars", "about 50 bucks".'),

vendor: z.string()

.describe('The business or merchant name where the purchase was made.'),

category: z.enum(['dining', 'travel', 'office', 'entertainment', 'other'])

.describe('The expense category. Use "dining" for restaurants and coffee shops, "travel" for transportation and hotels.'),

});

Hint 3: Handle Errors Wrap your call in try/catch:

import { AI_NoObjectGeneratedError } from 'ai';

try {

const { object } = await generateObject({ ... });

console.log('✓ Expense recorded');

console.log(object);

} catch (error) {

if (error instanceof AI_NoObjectGeneratedError) {

console.log('⚠ Could not extract expense details');

console.log('Please include an amount and vendor.');

} else {

throw error;

}

}

Hint 4: Add Persistence Store expenses in a JSON file:

import { readFileSync, writeFileSync, existsSync } from 'fs';

const EXPENSES_FILE = './expenses.json';

function loadExpenses(): Expense[] {

if (!existsSync(EXPENSES_FILE)) return [];

return JSON.parse(readFileSync(EXPENSES_FILE, 'utf-8'));

}

function saveExpense(expense: Expense) {

const expenses = loadExpenses();

expenses.push({ ...expense, id: crypto.randomUUID(), createdAt: new Date() });

writeFileSync(EXPENSES_FILE, JSON.stringify(expenses, null, 2));

}

Hint 5: Build the Report Command Group expenses by category:

const expenses = loadExpenses();

const byCategory = Object.groupBy(expenses, (e) => e.category);

for (const [category, items] of Object.entries(byCategory)) {

const total = items.reduce((sum, e) => sum + e.amount, 0);

console.log(`${category}: $${total.toFixed(2)} (${items.length} expenses)`);

}

Books That Will Help

| Topic | Book | Chapter |

|---|---|---|

| TypeScript fundamentals | “Programming TypeScript” by Boris Cherny | Ch. 3 (Types), Ch. 6 (Advanced Types) |

| Error handling patterns | “Programming TypeScript” by Boris Cherny | Ch. 7 (Handling Errors) |

| Zod and validation | Zod documentation | Entire guide |

| CLI design patterns | “Command-Line Rust” by Ken Youens-Clark | Ch. 1-2 (patterns apply to TS) |

| Async/await patterns | “JavaScript: The Definitive Guide” by David Flanagan | Ch. 13 (Asynchronous JavaScript) |

| AI SDK structured output | AI SDK Docs | Generating Structured Data |

Recommended reading order:

- Zod documentation (30 min) - Understand schema basics

- AI SDK generateObject docs (30 min) - Understand the API

- Boris Cherny Ch. 3 (1 hour) - Deep TypeScript types

- Then start coding!

Project 2: Real-Time Document Summarizer with Streaming UI

- File: AI_SDK_LEARNING_PROJECTS.md

- Main Programming Language: TypeScript

- Alternative Programming Languages: JavaScript, Python, Go

- Coolness Level: Level 3: Genuinely Clever

- Business Potential: Level 2: The “Micro-SaaS / Pro Tool”

- Difficulty: Level 2: Intermediate (The Developer)

- Knowledge Area: Web Streaming, AI Integration

- Software or Tool: Next.js, AI SDK, React

- Main Book: “JavaScript: The Definitive Guide” by David Flanagan

What you’ll build: A web application where users paste long documents (articles, papers, transcripts) and watch summaries generate in real-time, character by character, with a progress indicator and section-by-section breakdown.

Why it teaches AI SDK: streamText is what makes AI apps feel alive. You’ll implement the streaming pipeline end-to-end: from the SDK’s async iterators through Server-Sent Events to React state updates. This is how ChatGPT-style UIs work.

Core challenges you’ll face:

- Implementing SSE streaming from Next.js API routes (maps to streaming architecture)

- Consuming streams on the client with proper cleanup (maps to async iteration)

- Handling partial updates and rendering in-progress text (maps to state management)

- Graceful error handling mid-stream (maps to error boundaries)

Resources for key challenges:

- “The AI SDK UI docs on useChat/useCompletion” - Shows the React hooks that handle streaming

- “MDN Server-Sent Events guide” - Foundation for understanding the transport layer

Key Concepts:

- Streaming Responses: AI SDK streamText Docs

- React Server Components: “Learning React, 2nd Edition” by Eve Porcello - Ch. 12

- Async Iterators: “JavaScript: The Definitive Guide” by David Flanagan - Ch. 13

Difficulty: Beginner-Intermediate Time estimate: 1 week Prerequisites: React/Next.js basics, TypeScript

Real world outcome:

- Paste a 5,000-word article and watch the summary stream in real-time

- See a “Summarizing…” indicator with word count progress

- Final output shows key points, main themes, and a one-paragraph summary

- Copy button to grab the summary for use elsewhere

Learning milestones:

- First stream renders tokens in real-time → you understand async iteration

- Implementing abort controller cancels mid-stream → you grasp cleanup patterns

- Adding streaming structured output with

streamObject→ you combine both patterns

Real World Outcome

When you open the web app in your browser, here’s exactly what you’ll see and experience:

Initial State:

┌─────────────────────────────────────────────────────────────────────┐

│ 📄 Document Summarizer │

├─────────────────────────────────────────────────────────────────────┤

│ │

│ Paste your document here: │

│ ┌────────────────────────────────────────────────────────────────┐ │

│ │ │ │

│ │ Paste or type your document text... │ │

│ │ │ │

│ │ │ │

│ │ │ │

│ └────────────────────────────────────────────────────────────────┘ │

│ │

│ Document length: 0 words [✨ Summarize] │

│ │

└─────────────────────────────────────────────────────────────────────┘

After Pasting a Document (5,000+ words):

┌─────────────────────────────────────────────────────────────────────┐

│ 📄 Document Summarizer │

├─────────────────────────────────────────────────────────────────────┤

│ │

│ Paste your document here: │

│ ┌────────────────────────────────────────────────────────────────┐ │

│ │ The field of quantum computing has seen remarkable progress │ │

│ │ over the past decade. Recent breakthroughs in error │ │

│ │ correction, qubit stability, and algorithmic development │ │

│ │ have brought us closer than ever to practical quantum │ │

│ │ advantage. This comprehensive analysis examines... │ │

│ │ [... 5,234 more words ...] │ │

│ └────────────────────────────────────────────────────────────────┘ │

│ │

│ Document length: 5,847 words [✨ Summarize] │

│ │

└─────────────────────────────────────────────────────────────────────┘

While Streaming (the magic happens!):

┌─────────────────────────────────────────────────────────────────────┐

│ 📄 Document Summarizer │

├─────────────────────────────────────────────────────────────────────┤

│ │

│ 📝 Summary │

│ ───────────────────────────────────────────────────────────────── │

│ ⏳ Generating... Progress: 234 words │

│ ┌────────────────────────────────────────────────────────────────┐ │

│ │ │ │

│ │ ## Key Points │ │

│ │ │ │

│ │ The article examines recent quantum computing breakthroughs, │ │

│ │ focusing on three critical areas: │ │

│ │ │ │

│ │ 1. **Error Correction**: IBM's new surface code approach │ │

│ │ achieves 99.5% fidelity, a significant improvement over │ │

│ │ previous methods. This breakthrough addresses one of the█ │ │

│ │ │ │

│ └────────────────────────────────────────────────────────────────┘ │

│ │

│ [⏹ Cancel] │

│ │

└─────────────────────────────────────────────────────────────────────┘

The cursor (█) moves in real-time as each token arrives from the LLM. The user watches the summary build word by word—this is the “ChatGPT effect” that makes AI feel alive.

Completed Summary:

┌─────────────────────────────────────────────────────────────────────┐

│ 📄 Document Summarizer │

├─────────────────────────────────────────────────────────────────────┤

│ │

│ 📝 Summary ✓ Complete │

│ ───────────────────────────────────────────────────────────────── │

│ Generated in 4.2s Total: 312 words │

│ ┌────────────────────────────────────────────────────────────────┐ │

│ │ │ │

│ │ ## Key Points │ │

│ │ │ │

│ │ The article examines recent quantum computing breakthroughs, │ │

│ │ focusing on three critical areas: │ │

│ │ │ │

│ │ 1. **Error Correction**: IBM's new surface code approach │ │

│ │ achieves 99.5% fidelity, a significant improvement... │ │

│ │ │ │

│ │ 2. **Qubit Scaling**: Google's 1,000-qubit processor │ │

│ │ demonstrates exponential progress in hardware capacity... │ │

│ │ │ │

│ │ 3. **Commercial Applications**: First production deployments │ │

│ │ in drug discovery and financial modeling show... │ │

│ │ │ │

│ │ ## Main Themes │ │

│ │ - Race between IBM, Google, and emerging startups │ │

│ │ - Shift from theoretical to practical quantum advantage │ │

│ │ - Growing investment from pharmaceutical and finance sectors │ │

│ │ │ │

│ │ ## One-Paragraph Summary │ │

│ │ Quantum computing is transitioning from experimental to │ │

│ │ practical, with major players achieving key milestones in │ │

│ │ error correction and scaling that enable real-world use cases. │ │

│ │ │ │

│ └────────────────────────────────────────────────────────────────┘ │

│ │

│ [📋 Copy to Clipboard] [🔄 Summarize Again] [📄 New Doc] │

│ │

└─────────────────────────────────────────────────────────────────────┘

Error State (mid-stream failure):

┌─────────────────────────────────────────────────────────────────────┐

│ 📄 Document Summarizer │

├─────────────────────────────────────────────────────────────────────┤

│ │

│ 📝 Summary ⚠️ Error │

│ ───────────────────────────────────────────────────────────────── │

│ Stopped after 2.1s Partial: 156 words │

│ ┌────────────────────────────────────────────────────────────────┐ │

│ │ │ │

│ │ ## Key Points │ │

│ │ │ │

│ │ The article examines recent quantum computing breakthroughs, │ │

│ │ focusing on three critical areas: │ │

│ │ │ │

│ │ 1. **Error Correction**: IBM's new surface code approach │ │

│ │ achieves 99.5% fidelity... │ │

│ │ │ │

│ │ ───────────────────────────────────────────────────────────── │ │

│ │ ⚠️ Stream interrupted: Connection timeout │ │

│ │ Showing partial results above. │ │

│ │ │ │

│ └────────────────────────────────────────────────────────────────┘ │

│ │

│ [🔄 Retry] [📋 Copy Partial] [📄 New Doc] │

│ │

└─────────────────────────────────────────────────────────────────────┘

Key UX behaviors to implement:

- The text area scrolls automatically to keep the cursor visible

- Word count updates in real-time as tokens arrive

- “Cancel” button appears only during streaming

- Partial results are preserved even on error

- Copy button works even during streaming (copies current content)

The Core Question You’re Answering

“How do I stream LLM responses in real-time to create responsive, interactive UIs?”

This is about understanding the entire streaming pipeline from the AI SDK’s async iterators through Server-Sent Events to React state updates. You’re not just calling an API—you’re building a real-time data flow that makes AI feel alive and responsive.

Concepts You Must Understand First

- Server-Sent Events (SSE) - The transport layer, how events flow from server to client over HTTP

- Async Iterators - The

for await...ofpattern, AsyncIterableStream in JavaScript - React State with Streams - Updating state incrementally as chunks arrive without causing excessive re-renders

- AbortController - Cancellation patterns for stopping streams mid-flight

- Next.js API Routes - Server-side streaming setup with proper headers and response handling

Questions to Guide Your Design

- How do you send streaming responses from Next.js API routes?

- How do you consume Server-Sent Events on the client side?

- What happens if the user navigates away mid-stream? (Memory leaks, cleanup)

- How do you show a loading state vs partial content? (UX considerations)

- What do you do when the stream errors halfway through?

- How do you handle backpressure if the client can’t keep up with the stream?

Thinking Exercise

Draw a diagram of the data flow:

- User pastes text and clicks “Summarize”

- Client sends POST request to

/api/summarizewith document text - API route calls

streamText()from AI SDK - AI SDK returns an AsyncIterableStream

- Next.js converts this to Server-Sent Events (SSE) via

toDataStreamResponse() - Browser EventSource/fetch receives SSE chunks

- React hook (useChat/useCompletion) processes each chunk

- State updates trigger re-renders

- UI shows progressive text with cursor indicator

- Stream completes or user cancels with AbortController

Now trace what happens when:

- The network connection drops mid-stream

- The user clicks “Cancel”

- Two requests are made simultaneously

- The LLM returns an error after 50 tokens

The Interview Questions They’ll Ask

- “Explain the difference between WebSockets and Server-Sent Events”

- Expected answer: SSE is unidirectional (server → client), simpler, built on HTTP, auto-reconnects. WebSockets are bidirectional, require protocol upgrade, more complex but better for chat-like interactions.

- “How would you implement cancellation for a streaming request?”

- Expected answer: Use AbortController on the client, pass signal to fetch, clean up EventSource. On server, handle abort signals in the stream processing.

- “What happens if the stream errors mid-response?”

- Expected answer: Partial data is already rendered, need error boundary to catch and display error state, possibly implement retry logic, show user what was received + error message.

- “How do you handle back-pressure in streaming?”

- Expected answer: Browser EventSource buffers automatically, but you need to consider state update batching in React, potentially throttle/debounce updates, use React 18 transitions for non-urgent updates.

- “Why use Server-Sent Events instead of polling?”

- Expected answer: Lower latency, less server load, real-time updates, no missed messages between polls, built-in reconnection.

Hints in Layers

Hint 1 (Basic Setup): Use the AI SDK’s toDataStreamResponse() helper to convert the stream into a format Next.js can send via SSE.

Hint 2 (Client Integration): The AI SDK provides useChat or useCompletion hooks that handle SSE consumption, state management, and cleanup automatically.

Hint 3 (Cancellation): Implement AbortController on the client side and pass the signal to your fetch request. The AI SDK hooks support this with the abort() function they return.

Hint 4 (Error Handling): Add React Error Boundaries around your streaming component, and handle errors in the onError callback of the AI SDK hooks. Consider showing partial results even when errors occur.

Hint 5 (Progress Tracking): The streamText response includes token counts and metadata. Use onFinish callback to track completion, and parse the streaming chunks to count words/tokens for progress indicators.

Hint 6 (Performance): Use React 18’s useTransition for non-urgent state updates to prevent janky UI. Consider useDeferredValue for the streaming text to keep the UI responsive.

Books That Will Help

| Topic | Book | Chapter/Section |

|---|---|---|

| Async JavaScript & Iterators | “JavaScript: The Definitive Guide” by David Flanagan | Ch. 13 (Asynchronous JavaScript) |

| Server-Sent Events | “JavaScript: The Definitive Guide” by David Flanagan | Ch. 15.11 (Server-Sent Events) |

| React State Management | “Learning React, 2nd Edition” by Eve Porcello | Ch. 8 (Hooks), Ch. 12 (React and Server) |

| Streaming in Node.js | “Node.js Design Patterns, 3rd Edition” by Mario Casciaro | Ch. 6 (Streams) |

| Error Handling Patterns | “Release It!, 2nd Edition” by Michael Nygard | Ch. 5 (Stability Patterns) |

| Web APIs & Fetch | “JavaScript: The Definitive Guide” by David Flanagan | Ch. 15 (Web APIs) |

| React 18 Concurrent Features | “Learning React, 2nd Edition” by Eve Porcello | Ch. 8 (useTransition, useDeferredValue) |

Recommended reading order:

- Start with Flanagan Ch. 13 to understand async/await and async iterators

- Read Flanagan Ch. 15.11 for SSE fundamentals

- Move to Porcello Ch. 8 for React hooks patterns

- Then tackle the AI SDK documentation with this foundation

Online Resources:

- MDN Server-Sent Events

- AI SDK streamText Documentation

- AI SDK UI Hooks

- React 18 Working Group: useTransition

Project 3: Code Review Agent with Tool Calling

- File: AI_SDK_LEARNING_PROJECTS.md

- Main Programming Language: TypeScript

- Alternative Programming Languages: Python, Go, JavaScript

- Coolness Level: Level 3: Genuinely Clever

- Business Potential: Level 2: The “Micro-SaaS / Pro Tool”

- Difficulty: Level 2: Intermediate (The Developer)

- Knowledge Area: AI Agents, Tool Calling

- Software or Tool: AI SDK, GitHub API, CLI

- Main Book: “Building LLM Agents” by Harrison Chase (LangChain blog series)

What you’ll build: A CLI agent that takes a GitHub PR URL or local diff, then autonomously reads files, analyzes code patterns, checks for issues, and generates a structured code review with specific line-by-line feedback.

Why it teaches AI SDK: This is your first real agent—an LLM in a loop calling tools. You’ll define tools for file reading, pattern searching, and issue tracking. The LLM decides which tools to call and when, not you. This is where AI SDK becomes powerful.

Core challenges you’ll face:

- Defining tool schemas that the LLM can understand and invoke correctly (maps to tool definition)

- Implementing the agent loop with

maxStepsorstopWhen(maps to agent architecture) - Managing context as tools return data back to the LLM (maps to conversation state)

- Handling tool execution failures gracefully (maps to error recovery)

Resources for key challenges:

- “AI SDK Agents documentation” - The canonical reference for agentic patterns

- “Building LLM Agents” by Harrison Chase (LangChain blog series) - Mental models for agent design

Key Concepts:

- Tool Definition: AI SDK Tools and Tool Calling

- Agent Loop: AI SDK Agents

- Git/GitHub API: GitHub REST API documentation for PR data

Difficulty: Intermediate Time estimate: 1-2 weeks Prerequisites: Completed Projects 1-2, Git basics

Learning milestones:

- LLM calls your

readFiletool → you understand tool invocation flow - Agent makes multiple tool calls in sequence → you grasp the agentic loop

- Using

onStepFinishto log agent progress → you understand observability patterns - Agent decides it’s “done” autonomously → you understand completion conditions

Real World Outcome

When you run the CLI, here’s exactly what you’ll see in your terminal:

$ review-agent https://github.com/acme/widget-api/pull/47

🔍 Code Review Agent initialized

📋 Fetching PR #47 from acme/widget-api...

[Step 1] 🔧 Tool: fetchPRMetadata

Title: "Add user authentication middleware"

Files changed: 5

Additions: 234, Deletions: 12

[Step 2] 🔧 Tool: readFile("src/middleware/auth.ts")

Reading 89 lines...

[Step 3] 🔧 Tool: readFile("src/routes/users.ts")

Reading 156 lines...

[Step 4] 🔧 Tool: searchPattern("password", "*.ts")

Found 3 matches across 2 files

[Step 5] 🔧 Tool: readFile("src/utils/crypto.ts")

Reading 45 lines...

[Step 6] 🤔 Agent reasoning: "I've reviewed the authentication

implementation. I noticed potential security issues with

password handling and a missing rate limiter. Let me

generate the review."

[Step 7] 🔧 Tool: generateReview

═══════════════════════════════════════════════════════════════════════

CODE REVIEW: PR #47

"Add user authentication middleware"

═══════════════════════════════════════════════════════════════════════

## 🔴 Security Issues (2)

### Plaintext Password Logging

**File:** `src/middleware/auth.ts:34`

**Severity:** Critical

```typescript

console.log(`Login attempt for user: ${email}, password: ${password}`);

^^^^^^^^

Issue: Password is logged in plaintext. This exposes credentials in logs. Fix: Remove password from log statement or mask it.

Missing Rate Limiting

File: src/routes/users.ts:12-45

Severity: High

The /login endpoint has no rate limiting, making it vulnerable to

brute-force attacks.

Recommended: Add rate limiting middleware (e.g., express-rate-limit).

🟡 Code Quality (3)

Magic Number

File: src/middleware/auth.ts:56

const TOKEN_EXPIRY = 86400; // What is this number?

Suggestion: Use named constant: const ONE_DAY_IN_SECONDS = 86400;

Missing Error Type

File: src/utils/crypto.ts:23

} catch (e) {

throw e; // No type narrowing

}

Suggestion: Use catch (e: unknown) and proper error handling.

Inconsistent Async Pattern

File: src/routes/users.ts:78

Mixing .then() and async/await. Prefer consistent async/await.

🟢 Suggestions (2)

Add Input Validation

File: src/routes/users.ts:15

Consider adding Zod schema validation for the login request body.

Extract JWT Secret

File: src/middleware/auth.ts:8

JWT_SECRET should come from environment variables, not hardcoded.

Summary

| Category | Count |

|---|---|

| 🔴 Security Issues | 2 |

| 🟡 Code Quality | 3 |

| 🟢 Suggestions | 2 |

Overall: This PR introduces authentication but has critical security issues that must be addressed before merging.

Recommendation: Request changes

═══════════════════════════════════════════════════════════════════════

📁 Full review saved to: review-pr-47.md 🔗 Ready to post as PR comment? [y/N]

If the user confirms, the agent posts the review as a GitHub comment:

```bash

$ y

📤 Posting review to GitHub...

✓ Review posted: https://github.com/acme/widget-api/pull/47#issuecomment-1234567

Done! Agent completed in 12.3s (7 steps, 3 files analyzed)

The Core Question You’re Answering

“How do I build an AI that autonomously takes actions, not just generates text?”

This is the paradigm shift from AI as a “fancy autocomplete” to AI as an “autonomous agent.” You’re not just asking the LLM to write a review—you’re giving it tools to fetch PRs, read files, search patterns, and letting it decide what to do next.

The LLM is now in control of the flow. It chooses which files to read. It decides when it has enough information. It determines when to stop. Your job is to define the tools and constraints, then let the agent work.

Concepts You Must Understand First

Stop and research these before coding:

- Tool Definition with the AI SDK

- What is the

tool()function and how do you define a tool? - How does the LLM “see” your tool? (description + parameters schema)

- What’s the difference between

executeandgeneratein tools? - Reference: AI SDK Tools and Tool Calling

- What is the

- Agent Loop with stopWhen

- What does

stopWhendo ingenerateText? - How does the agent loop work internally?

- What is

hasToolCall()and how do you use it? - Reference: AI SDK Agents

- What does

- Context Management

- How do tool results get fed back to the LLM?

- What happens if the context gets too long?

- How do you use

onStepFinishfor observability? - Reference: AI SDK Agent Events

- GitHub API Basics

- How do you fetch PR metadata with the GitHub REST API?

- How do you get the list of changed files in a PR?

- How do you read file contents from a specific commit?

- Reference: GitHub REST API - Pull Requests

- Error Handling in Agents

- What happens if a tool fails mid-execution?

- How do you implement retry logic for transient failures?

- How do you handle LLM errors vs tool errors?

- Book Reference: “Release It!, 2nd Edition” by Michael Nygard - Ch. 5

Questions to Guide Your Design

Before implementing, think through these:

- What tools does a code review agent need?

fetchPRMetadata: Get PR title, description, files changedreadFile: Read a specific file’s contentssearchPattern: Search for patterns across files (likegrep)getDiff: Get the diff for a specific filegenerateReview: Final tool that triggers review synthesis

- How does the agent know what to review?

- Start with the list of changed files from the PR

- Agent decides which files are important to read

- Agent searches for patterns that indicate issues (e.g., “TODO”, “password”, “console.log”)

- How does the agent know when to stop?

- Use

stopWhen: hasToolCall('generateReview') - Agent calls

generateReviewwhen it has gathered enough information - Add

maxStepsas a safety limit

- Use

- How do you structure the review output?

- Use

generateObjectwith a schema for the review - Categories: security issues, code quality, suggestions

- Each issue has: file, line, description, severity, suggested fix

- Use

- How do you handle large PRs?

- Limit the number of files to analyze

- Summarize file contents if too long

- Prioritize files by extension (

.ts>.md)

Thinking Exercise

Design your tools on paper before implementing:

// Define your tool schemas:

const tools = {

fetchPRMetadata: tool({

description: '???', // What should this say?

parameters: z.object({

prUrl: z.string().describe('???')

}),

execute: async ({ prUrl }) => {

// What does this return?

// { title, description, filesChanged, additions, deletions }

}

}),

readFile: tool({

description: '???',

parameters: z.object({

path: z.string().describe('???')

}),

execute: async ({ path }) => {

// Return file contents as string

}

}),

searchPattern: tool({

description: '???',

parameters: z.object({

pattern: z.string(),

glob: z.string().optional()

}),

execute: async ({ pattern, glob }) => {

// Return matches: [{ file, line, match }]

}

}),

generateReview: tool({

description: 'Generate the final code review. Call this when you have gathered enough information.',

parameters: z.object({

summary: z.string(),

issues: z.array(issueSchema),

recommendation: z.enum(['approve', 'request-changes', 'comment'])

}),

execute: async (review) => review // Just return the structured review

})

};

// Trace through a simple PR with 2 files changed:

// 1. What tool does the agent call first?

// 2. How does it decide which file to read?

// 3. When does it decide it has enough information?

// 4. What triggers the generateReview call?

The Interview Questions They’ll Ask

Prepare to answer these:

- “What is an AI agent and how is it different from a simple LLM call?”

- An agent is an LLM in a loop that can call tools. Unlike a single LLM call that just generates text, an agent can take actions (read files, make API calls) and iterate until a task is complete. The agent autonomously decides which actions to take.

- “How do you define a tool for the AI SDK?”

- Use the

tool()function with a description (tells LLM when to use it), a Zod parameters schema (defines the input), and an execute function (performs the action). The description is critical—it’s prompt engineering for tool selection.

- Use the

- “What is stopWhen and how does it work?”

stopWhenis a condition that determines when the agent loop terminates. Common patterns:hasToolCall('finalTool')stops when a specific tool is called, or a custom function that checks step count or context.

- “How do you handle context growth in agents?”

- Use

prepareStepto summarize or filter previous steps. Limit tool output size. Implement context windowing. For code review: only include relevant file snippets, not entire files.

- Use

- “What happens if a tool fails during agent execution?”

- The error is returned to the LLM as a tool result. The LLM can decide to retry, try a different approach, or handle the error gracefully. You can also implement retry logic in the tool’s execute function.

- “How would you test an AI agent?”

- Mock the LLM responses to test tool orchestration. Test tools in isolation. Use deterministic prompts for reproducible behavior. Log all steps for debugging. Implement integration tests with real LLM calls for end-to-end validation.

Hints in Layers

Hint 1: Start with a single tool Get the agent loop working with just one tool:

import { generateText, tool } from 'ai';

import { openai } from '@ai-sdk/openai';

import { z } from 'zod';

const tools = {

readFile: tool({

description: 'Read a file from the repository',

parameters: z.object({

path: z.string().describe('Path to the file')

}),

execute: async ({ path }) => {

// For now, just return mock content

return `Contents of ${path}: // TODO: implement`;

}

})

};

const { text, steps } = await generateText({

model: openai('gpt-4'),

tools,

prompt: 'Read the file src/index.ts and tell me what it does.'

});

console.log('Steps:', steps.length);

console.log('Result:', text);

Run this and observe how the LLM calls your tool.

Hint 2: Add the agent loop with stopWhen

import { hasToolCall } from 'ai';

const tools = {

readFile: tool({ ... }),

generateSummary: tool({

description: 'Generate the final summary. Call this when done.',

parameters: z.object({

summary: z.string()

}),

execute: async ({ summary }) => summary

})

};

const { text, steps } = await generateText({

model: openai('gpt-4'),

tools,

stopWhen: hasToolCall('generateSummary'),

prompt: 'Read src/index.ts and src/utils.ts, then generate a summary.'

});

Hint 3: Add observability with onStepFinish

const { text, steps } = await generateText({

model: openai('gpt-4'),

tools,

stopWhen: hasToolCall('generateSummary'),

onStepFinish: ({ stepType, toolCalls }) => {

console.log(`[Step] Type: ${stepType}`);

for (const call of toolCalls || []) {

console.log(` Tool: ${call.toolName}(${JSON.stringify(call.args)})`);

}

},

prompt: 'Review the PR...'

});

Hint 4: Connect to real GitHub API

const fetchPRMetadata = tool({

description: 'Fetch metadata for a GitHub Pull Request',

parameters: z.object({

owner: z.string(),

repo: z.string(),

prNumber: z.number()

}),

execute: async ({ owner, repo, prNumber }) => {

const response = await fetch(

`https://api.github.com/repos/${owner}/${repo}/pulls/${prNumber}`,

{ headers: { Authorization: `token ${process.env.GITHUB_TOKEN}` } }

);

const pr = await response.json();

return {

title: pr.title,

body: pr.body,

changedFiles: pr.changed_files,

additions: pr.additions,

deletions: pr.deletions

};

}

});

Hint 5: Structure the review output

const reviewSchema = z.object({

securityIssues: z.array(z.object({

file: z.string(),

line: z.number(),

severity: z.enum(['critical', 'high', 'medium', 'low']),

description: z.string(),

suggestedFix: z.string()

})),

codeQuality: z.array(z.object({

file: z.string(),

line: z.number(),

description: z.string(),

suggestion: z.string()

})),

recommendation: z.enum(['approve', 'request-changes', 'comment']),

summary: z.string()

});

const generateReview = tool({

description: 'Generate the final structured code review',

parameters: reviewSchema,

execute: async (review) => review

});

Books That Will Help

| Topic | Book | Chapter |

|---|---|---|

| Agent mental models | “Artificial Intelligence: A Modern Approach” by Russell & Norvig | Ch. 2 (Intelligent Agents) |

| ReAct pattern | “ReAct: Synergizing Reasoning and Acting” (Yao et al.) | The academic paper |

| Error handling | “Release It!, 2nd Edition” by Michael Nygard | Ch. 5 (Stability Patterns) |

| Tool design | AI SDK Tools Docs | Entire section |

| Agent loops | AI SDK Agents Docs | stopWhen, prepareStep |

| TypeScript patterns | “Programming TypeScript” by Boris Cherny | Ch. 4 (Functions), Ch. 7 (Error Handling) |

| GitHub API | GitHub REST API Docs | Pull Requests, Contents |

| CLI development | “Command-Line Rust” by Ken Youens-Clark | Ch. 1-3 (patterns apply) |

Recommended reading order:

- AI SDK Tools and Tool Calling docs (30 min) - Understand tool definition

- AI SDK Agents docs (30 min) - Understand stopWhen and loop control

- Russell & Norvig Ch. 2 (1 hour) - Deep mental model for agents

- GitHub Pull Requests API (30 min) - Understand the data you’ll work with

- Then start coding!

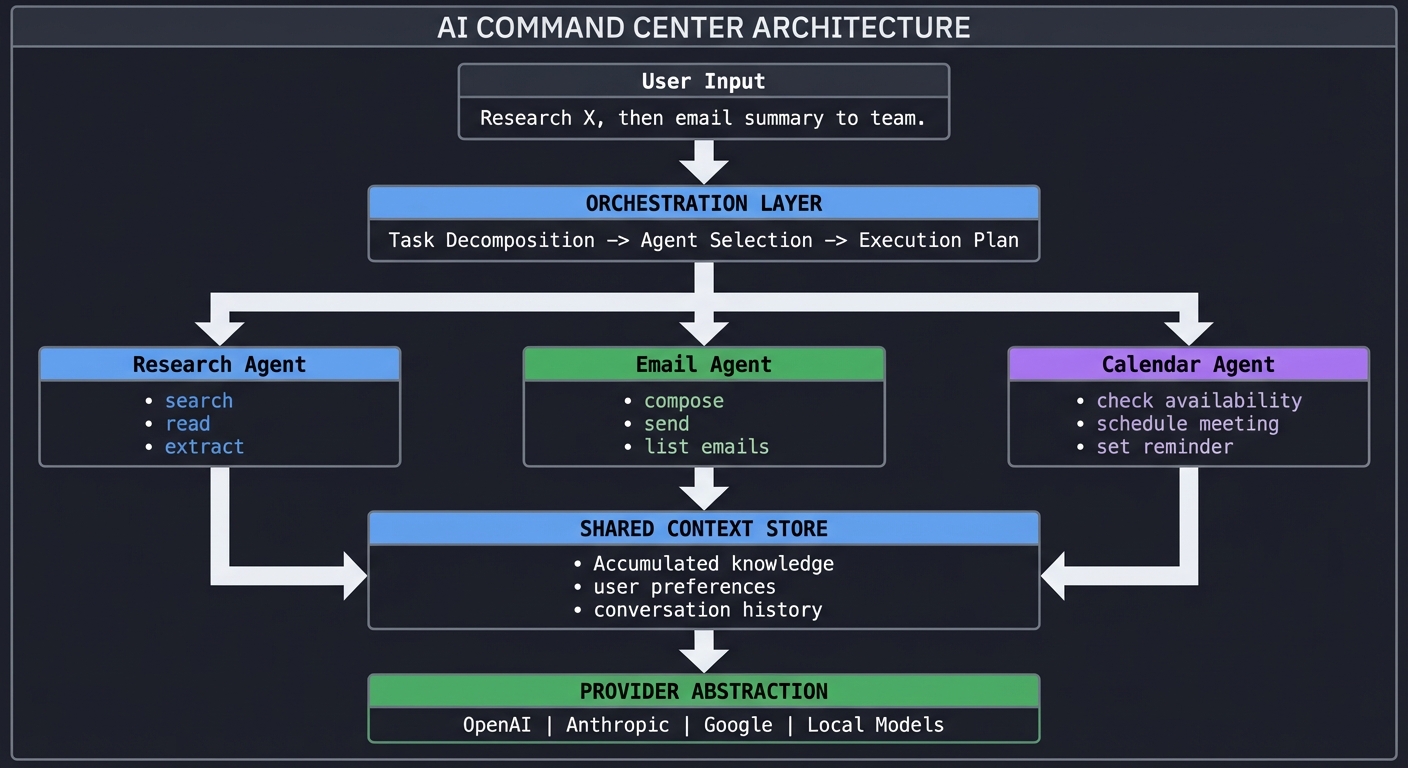

Project 4: Multi-Provider Model Router

- File: AI_SDK_LEARNING_PROJECTS.md

- Main Programming Language: TypeScript

- Alternative Programming Languages: Python, Go, JavaScript

- Coolness Level: Level 3: Genuinely Clever

- Business Potential: Level 3: The “Service & Support” Model

- Difficulty: Level 2: Intermediate (The Developer)

- Knowledge Area: API Gateway, AI Integration

- Software or Tool: AI SDK, OpenAI, Anthropic, Google AI

- Main Book: “Designing Data-Intensive Applications” by Martin Kleppmann

What you’ll build: A smart API gateway that accepts prompts and dynamically routes them to the optimal model (GPT-4 for reasoning, Claude for long context, Gemini for vision) based on task analysis, with fallback handling and cost tracking.

Why it teaches AI SDK: The SDK’s provider abstraction is its killer feature. You’ll implement a system that uses generateObject to classify tasks, then routes to different providers—all through the unified API. You’ll deeply understand how the SDK normalizes provider differences.

Core challenges you’ll face:

- Configuring multiple providers with their API keys and settings (maps to provider setup)

- Building a task classifier that determines optimal model (maps to structured output)

- Implementing fallback logic when primary provider fails (maps to error handling)

- Tracking token usage and costs across providers (maps to telemetry)

Key Concepts:

- Provider Configuration: AI SDK Providers

- Error Handling: AI SDK Error Handling

- Usage Tracking: AI SDK Telemetry

- API Gateway Patterns: “Designing Data-Intensive Applications” by Martin Kleppmann - Ch. 4

Difficulty: Intermediate Time estimate: 1-2 weeks Prerequisites: Multiple API keys (OpenAI, Anthropic, Google), completed Projects 1-3

Real world outcome:

- REST API endpoint that accepts

{ prompt, preferredCapability: "reasoning" | "vision" | "long-context" } - Automatically selects the best model, falls back on failure

- Dashboard showing requests per provider, costs, latency, and success rates

- Cost savings visible when cheaper models handle simple tasks

Learning milestones:

- Swapping providers with one line change → you understand the abstraction value

- Fallback chain executes on provider error → you grasp resilience patterns

- Telemetry shows cost per request → you understand production observability

Real World Outcome

When you run your Multi-Provider Model Router, here’s exactly what you’ll see and experience:

Testing the Router via HTTP:

$ curl -X POST http://localhost:3000/api/route \

-H "Content-Type: application/json" \

-d '{

"prompt": "Explain quantum entanglement in simple terms",

"preferredCapability": "reasoning"

}'

{

"provider": "openai",

"model": "gpt-4-turbo",

"response": "Quantum entanglement is a phenomenon where two particles...",

"metadata": {

"latency_ms": 1247,

"tokens_used": 156,

"cost_usd": 0.00468,

"fallback_attempted": false,

"routing_reason": "capability_match"

}

}

Vision Task Automatically Routes to Gemini:

$ curl -X POST http://localhost:3000/api/route \

-H "Content-Type: application/json" \

-d '{

"prompt": "What objects are in this image?",

"image_url": "https://example.com/photo.jpg",

"preferredCapability": "vision"

}'

{

"provider": "google",

"model": "gemini-2.0-flash-001",

"response": "The image contains: a wooden table, a laptop computer...",

"metadata": {

"latency_ms": 892,

"tokens_used": 89,

"cost_usd": 0.00089,

"fallback_attempted": false,

"routing_reason": "vision_capability"

}

}

Fallback Chain in Action (Primary Provider Down):

$ curl -X POST http://localhost:3000/api/route \

-H "Content-Type: application/json" \

-d '{

"prompt": "Summarize this 50-page legal document...",

"preferredCapability": "long-context"

}'

{

"provider": "anthropic",

"model": "claude-3-7-sonnet-20250219",

"response": "This legal document outlines a commercial lease agreement...",

"metadata": {

"latency_ms": 3421,

"tokens_used": 1247,

"cost_usd": 0.03741,

"fallback_attempted": true,

"fallback_chain": [

{

"provider": "openai",

"model": "gpt-4-turbo",

"error": "Rate limit exceeded (429)",

"timestamp": "2025-12-27T10:23:41Z"

},

{

"provider": "anthropic",

"model": "claude-3-7-sonnet-20250219",

"status": "success",

"timestamp": "2025-12-27T10:23:44Z"

}

],

"routing_reason": "fallback_success"

}

}

Dashboard View (Running at http://localhost:3000/dashboard):

┌──────────────────────────────────────────────────────────────────────────┐

│ 🎯 Multi-Provider Router Dashboard │

│ Last updated: 2025-12-27 10:25:43 │

├──────────────────────────────────────────────────────────────────────────┤

│ │

│ 📊 PROVIDER STATISTICS (Last 24 Hours) │

│ ──────────────────────────────────────────────────────────────────── │

│ │

│ Provider │ Requests │ Success │ Avg Latency │ Cost │ Uptime │

│ ──────────────────────────────────────────────────────────────────────│

│ OpenAI │ 1,247 │ 98.2% │ 1.2s │ $12.34 │ 99.8% │

│ Anthropic │ 834 │ 99.7% │ 2.1s │ $28.91 │ 100.0% │

│ Google │ 423 │ 97.4% │ 0.9s │ $4.23 │ 98.1% │

│ ──────────────────────────────────────────────────────────────────────│

│ TOTAL │ 2,504 │ 98.6% │ 1.5s │ $45.48 │ 99.3% │

│ │

│ 💰 COST SAVINGS │

│ ──────────────────────────────────────────────────────────────────── │

│ │

│ If all requests used GPT-4: $89.23 │

│ Actual cost with routing: $45.48 │

│ Savings: $43.75 (49.0%) │

│ │

│ 🔄 ROUTING BREAKDOWN │

│ ──────────────────────────────────────────────────────────────────── │

│ │

│ reasoning ████████████████░░░░ 62% → OpenAI GPT-4 │

│ vision ████████░░░░░░░░░░░░ 27% → Google Gemini │

│ long-context ████░░░░░░░░░░░░░░░░ 11% → Anthropic Claude │

│ │

│ ⚠️ RECENT FALLBACKS (Last 2 Hours) │

│ ──────────────────────────────────────────────────────────────────── │

│ │

│ 10:23:41 │ OpenAI → Anthropic │ Rate limit (429) │

│ 09:47:12 │ Google → OpenAI │ Timeout (>5s) │

│ 09:12:34 │ OpenAI → Anthropic │ Model unavailable (503) │

│ │

│ 📈 LIVE REQUEST RATE │

│ ──────────────────────────────────────────────────────────────────── │

│ │

│ 10:20 ▂▄▆█▆▄▂ │

│ 10:21 ▄▆█▆▄▂▁ │

│ 10:22 ▆█▆▄▂▁▂ │

│ 10:23 █▆▄▂▁▂▄ │

│ 10:24 ▆▄▂▁▂▄▆ │

│ 10:25 ▄▂▁▂▄▆█ ← Current │

│ │

└──────────────────────────────────────────────────────────────────────────┘

CLI Tool Output:

$ ai-router stats --provider openai

OpenAI Provider Statistics

─────────────────────────────────────────────────

Status: ✅ Healthy

Last request: 23 seconds ago

Requests today: 1,247

Success rate: 98.2% (1,225 successful)

Average latency: 1.24s

P50 latency: 1.12s

P95 latency: 2.34s

P99 latency: 4.56s

Cost today: $12.34

Total tokens: 1,247,834

- Input tokens: 847,234 ($8.47)

- Output tokens: 400,600 ($3.87)

Models used:

- gpt-4-turbo: 89% (1,110 requests)

- gpt-3.5-turbo: 11% (137 requests)

Recent errors:

[10:23:41] Rate limit exceeded (429)

[08:15:23] Timeout after 30s

[07:42:11] Invalid API key (401)

Config File (router.config.json):

{

"providers": {

"openai": {

"apiKey": "${OPENAI_API_KEY}",

"models": {

"reasoning": "gpt-4-turbo",

"fallback": "gpt-3.5-turbo"

},

"timeout": 30000,

"maxRetries": 2,

"circuitBreaker": {

"failureThreshold": 5,

"resetTimeout": 60000

}

},

"anthropic": {

"apiKey": "${ANTHROPIC_API_KEY}",

"models": {

"long-context": "claude-3-7-sonnet-20250219",

"reasoning": "claude-3-5-sonnet-20241022"

},

"timeout": 60000,

"maxRetries": 2

},

"google": {

"apiKey": "${GOOGLE_AI_API_KEY}",

"models": {

"vision": "gemini-2.0-flash-001",

"reasoning": "gemini-2.0-pro-001"

},

"timeout": 20000,

"maxRetries": 3

}

},

"routing": {

"defaultProvider": "openai",

"fallbackChain": ["openai", "anthropic", "google"],

"capabilityMapping": {

"reasoning": ["openai", "anthropic"],

"vision": ["google", "openai"],

"long-context": ["anthropic", "google"]

},

"costOptimization": {

"enabled": true,

"preferCheaperModels": true,

"costThreshold": 0.05

}

},

"telemetry": {

"enabled": true,

"logLevel": "info",

"metricsRetention": "7d",

"exportFormat": "prometheus"

}

}

Key behaviors you’ll implement:

- Request classifier analyzes the prompt and determines optimal provider

- Primary provider is attempted first based on capability match

- If primary fails, automatic fallback to secondary providers in chain

- All requests logged with timing, cost, and routing decisions

- Dashboard updates in real-time showing provider health and costs

- Circuit breaker pattern prevents cascading failures

- Cost tracking per request, per provider, and aggregate

The Core Question You’re Answering

“How do I build resilient, production-grade AI systems that don’t go down when a single provider fails?”

In production, relying on a single LLM provider is like having a single point of failure in your infrastructure. OpenAI, Anthropic, and Google have all experienced downtime in 2025. When your primary provider hits rate limits, experiences an outage, or becomes slow, what happens to your users?

This project teaches you the production architecture pattern that 78% of enterprises use: multi-provider routing with automatic fallback. You’re not just calling an LLM—you’re building an intelligent gateway that:

- Routes requests to the optimal model based on task requirements

- Automatically fails over when providers are down

- Tracks costs across all providers to optimize spend

- Provides observability into your AI infrastructure

The AI SDK’s provider abstraction makes this possible without writing provider-specific code. Change one configuration line, and you’ve switched from OpenAI to Anthropic. This is the power of abstraction.

Concepts You Must Understand First

Stop and research these before coding:

- API Gateway Pattern

- What is an API gateway and why do you need one?

- How does a gateway differ from a simple proxy?

- What responsibilities belong in the gateway layer vs application layer?

- Book Reference: “Designing Data-Intensive Applications” by Martin Kleppmann — Ch. 4 (Encoding and Evolution) & Ch. 12 (The Future of Data Systems)

- Circuit Breaker Pattern

- What is a circuit breaker and how does it prevent cascading failures?

- What are the three states: Closed, Open, Half-Open?

- How do you determine failure thresholds and reset timeouts?

- When should you open the circuit vs retry?

- Book Reference: “Release It!, 2nd Edition” by Michael Nygard — Ch. 5 (Stability Patterns)

- Fallback Chains and Resilience

- What’s the difference between retrying the same provider vs falling back to another?

- How do you design a fallback hierarchy?

- What happens if all providers in the chain fail?

- How do you avoid infinite loops in fallback logic?

- Book Reference: “Building Microservices, 2nd Edition” by Sam Newman — Ch. 11 (Resiliency)

- Provider Abstraction in AI SDK

- How does the AI SDK normalize differences between OpenAI, Anthropic, and Google?

- What is the unified interface that all providers implement?

- How do you configure multiple providers in one application?

- What provider-specific features can’t be abstracted?

- Reference: AI SDK Providers Documentation

- Structured Output with

generateObject- How do you use

generateObjectto classify tasks? - What’s the difference between

generateObjectandgenerateText? - How do you define a Zod schema for structured output?

- Why is structured output better than parsing text for classification?

- Reference: AI SDK Structured Outputs

- How do you use

- Telemetry and Observability

- What metrics should you track for LLM requests? (latency, tokens, cost, errors)