AI Personal Assistants - From Zero to JARVIS Master

Goal: Deeply understand the architecture, capabilities, and orchestration of Large Language Models (LLMs) to build autonomous AI agents. By the end of this sprint, you will move beyond simple chat interfaces to engineer systems that can reason, use tools, manage memory, and automate complex personal workflows.

Why AI Personal Assistants Matter

In the early 2020s, AI shifted from a “black box” that categorized images to a “reasoning engine” that understands language. The arrival of Large Language Models (LLMs) changed the goal of personal computing: it’s no longer just about storing information, but about acting on it.

A “Personal Assistant” in this new era is not a static script of if/else statements. It is an Agent—a system that can perceive an unstructured intent (“Optimize my Tuesday”), plan a sequence of actions, interact with external APIs (Email, Calendar, Web), and self-correct when things go wrong. Mastering this technology means building the ultimate interface between human thought and digital execution.

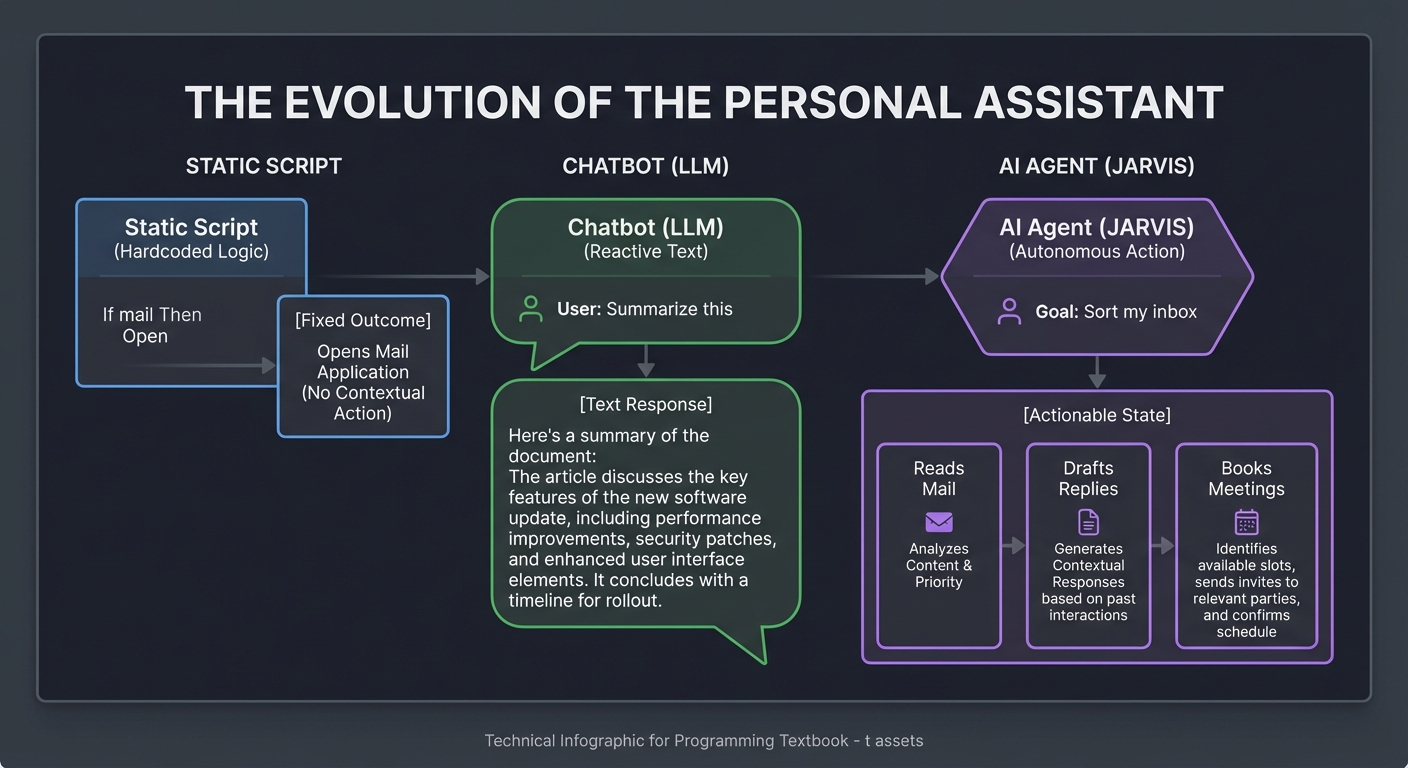

The Evolution of the Personal Assistant

Static Script Chatbot (LLM) AI Agent (JARVIS)

(Hardcoded Logic) (Reactive Text) (Autonomous Action)

┌─────────────┐ ┌─────────────┐ ┌─────────────┐

│ If "mail" │ │ User: "Sum │ │ Goal: "Sort │

│ Then "Open" │ │ marize this"│ │ my inbox" │

└─────────────┘ └──────┬──────┘ └──────┬──────┘

│ │ │

▼ ▼ ▼

[Fixed Outcome] [Text Response] [Actionable State]

- Reads Mail

- Drafts Replies

- Books Meetings

Core Concept Analysis

To build a truly capable personal assistant, you must master the fundamental pillars of Agentic AI.

1. The LLM as a Reasoning Engine (The CPU)

LLMs are not databases; they are statistical predictors. However, we treat them as the CPU of our personal assistant. Unlike traditional CPUs that execute binary logic, the LLM executes “semantic logic.”

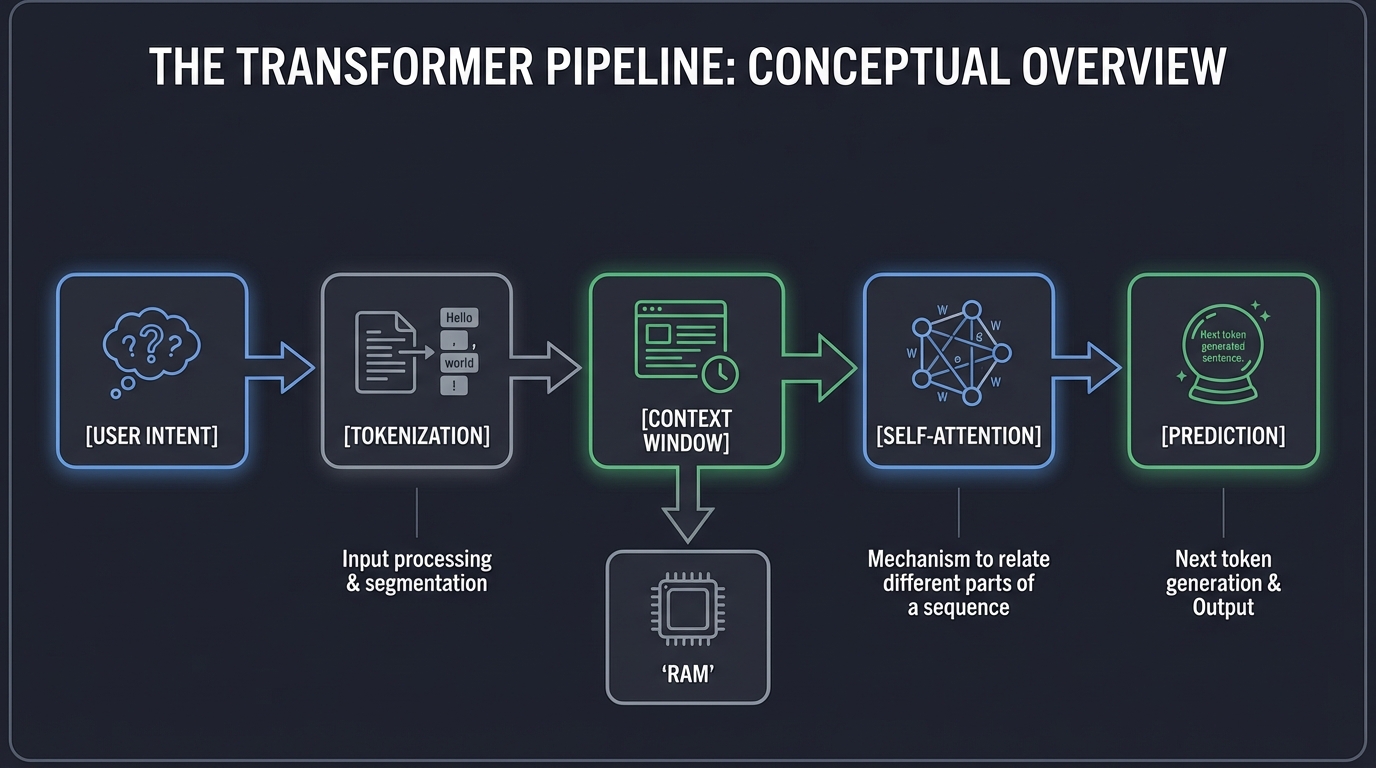

The Transformer Pipeline:

[User Intent] -> [Tokenization] -> [Context Window] -> [Self-Attention] -> [Prediction]

│

┌─────┴─────┐

│ "RAM" │

└───────────┘

- Context Window: The “RAM” of your assistant. If the information isn’t in the window, the assistant “forgets.” Managing this window is critical for long-running assistants.

- Self-Attention: The mechanism that allows the model to relate different parts of a sequence to compute a representation of the same sequence. This is how the assistant “understands” context.

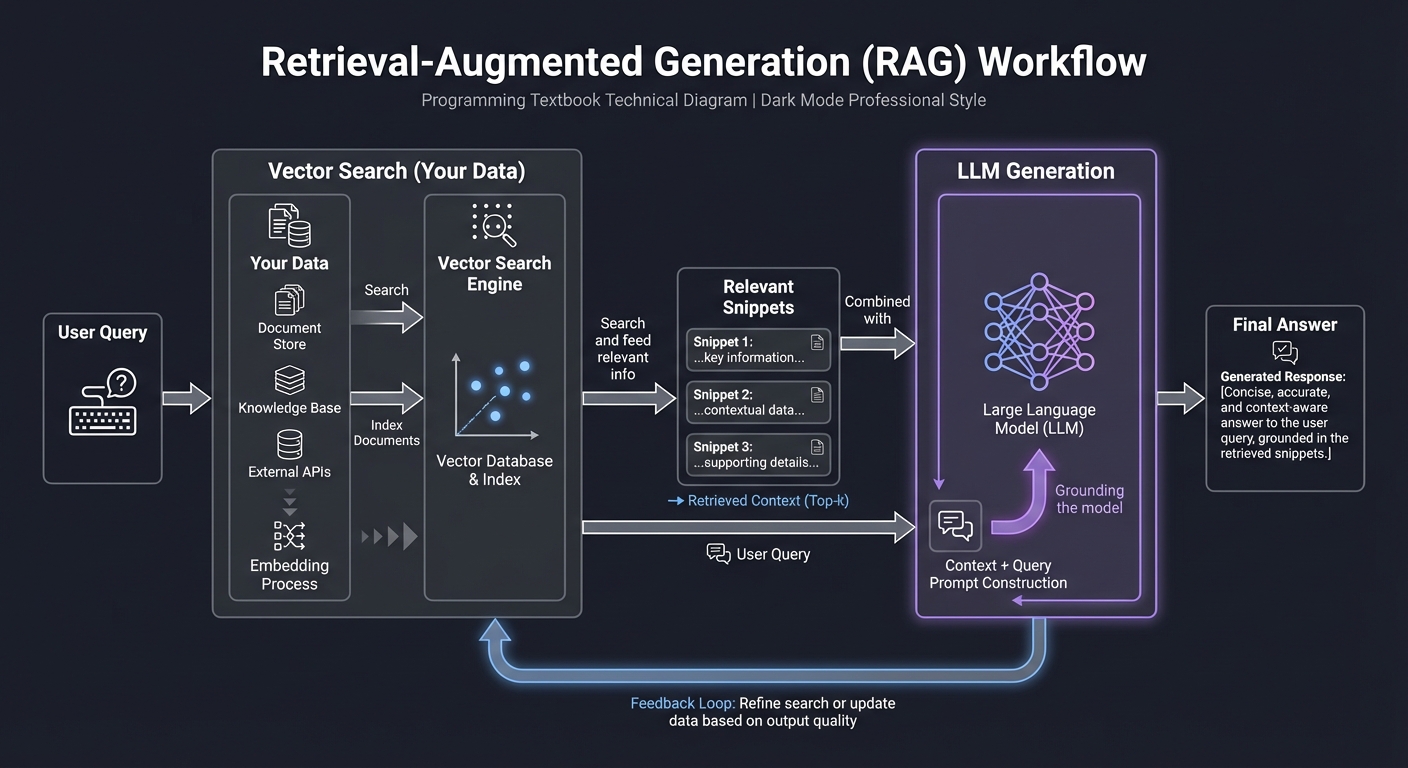

2. Retrieval-Augmented Generation (RAG)

Your assistant needs to know your life. Since we can’t retrain an LLM every time you get an email, we use RAG to “search and feed” relevant info. This is the assistant’s Long-Term Memory.

RAG Workflow:

[User Query] ──> [Vector Search (Your Data)] ──> [Relevant Snippets]

│

[Final Answer] <── [LLM Generation] <── [Query + Snippets]

- Embeddings: Converting text into high-dimensional vectors that capture meaning.

- Vector Databases: Specialized storage that allows for “semantic search” (finding concepts, not just keywords).

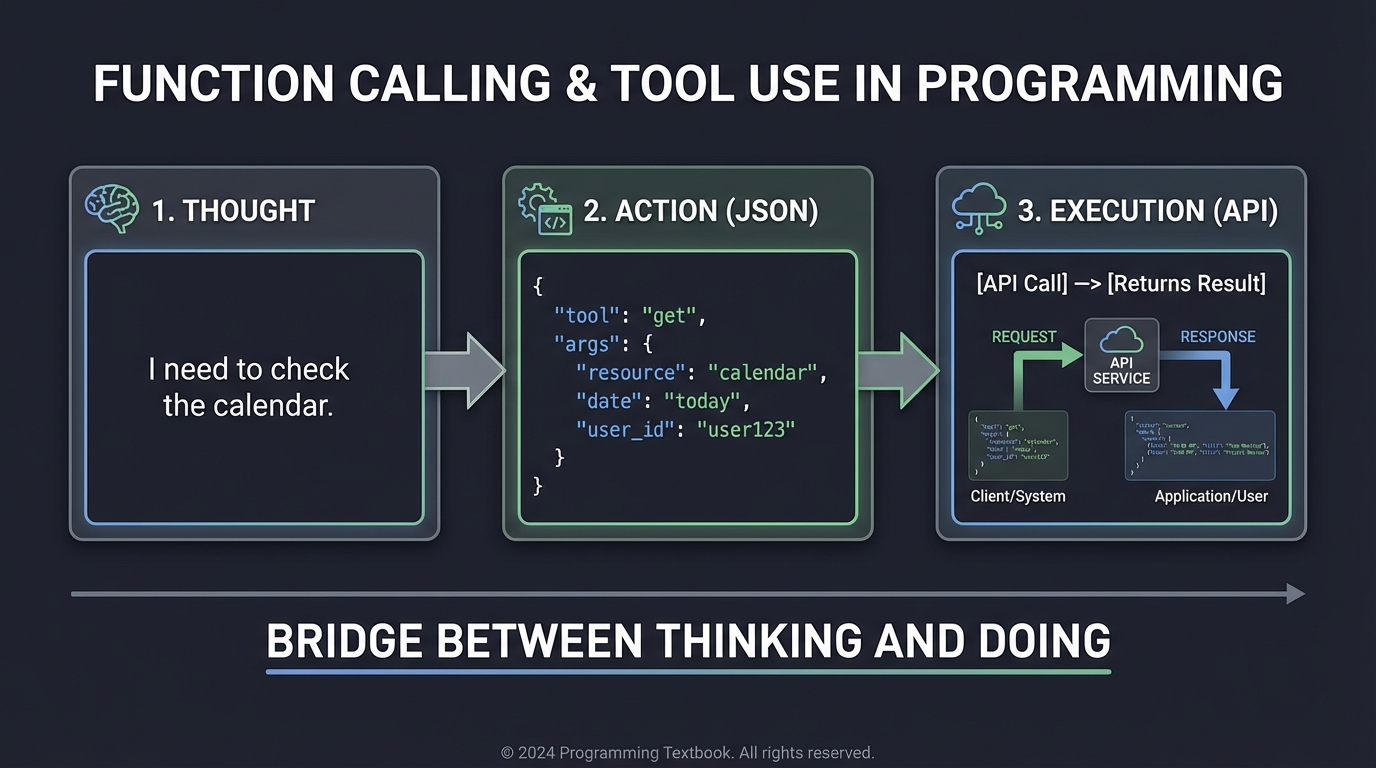

3. Function Calling & Tool Use

Function calling allows the LLM to output a structured request (like JSON) that your code executes. This is the bridge between “Thinking” and “Doing.” It turns a text-generator into a system-operator.

Thought Action Execution

┌──────────────────┐ ┌──────────────────┐ ┌──────────────────┐

│ "I need to check │ │ { "tool": "get" │ │ [API Call] │

│ the calendar." │──>│ "args": {...} }│──>│ [Returns Result] │

└──────────────────┘ └──────────────────┘ └──────────────────┘

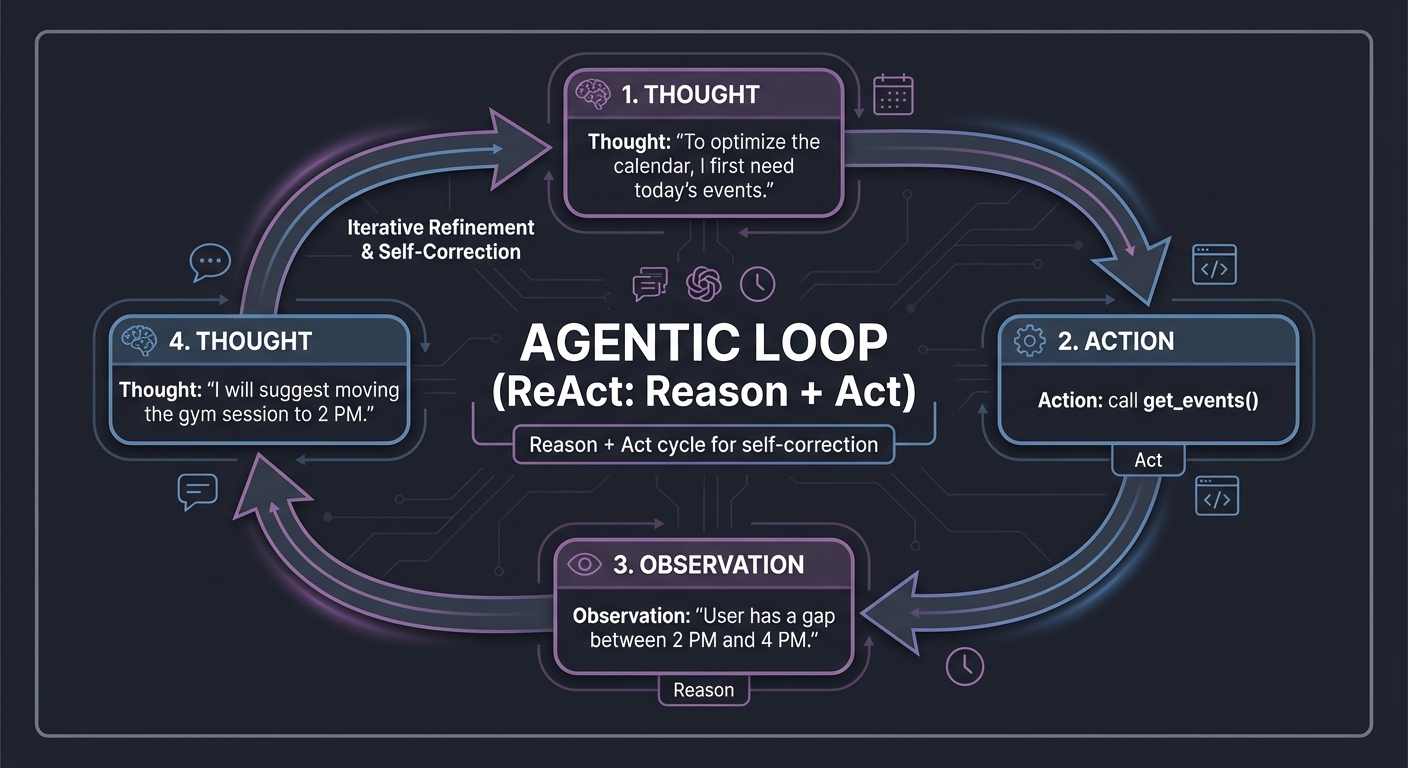

4. Agentic Loops (ReAct)

The “Brain” of the assistant. It stands for Reason + Act. The agent looks at the goal, thinks, takes an action, observes the result, and repeats. This loop allows for multi-step problem solving and self-correction.

Loop:

1. Thought: "To optimize the calendar, I first need today's events."

2. Action: call get_events()

3. Observation: "User has a gap between 2 PM and 4 PM."

4. Thought: "I will suggest moving the gym session to 2 PM."

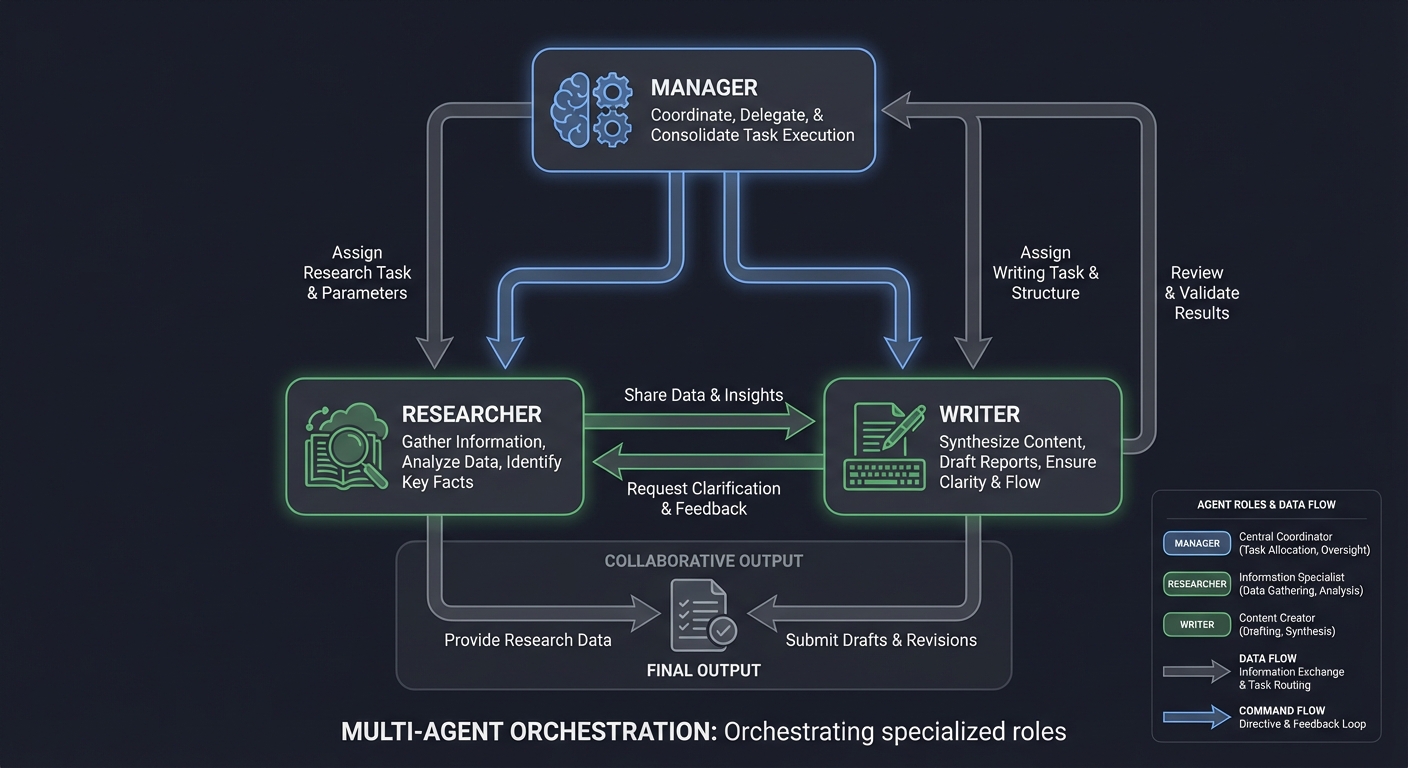

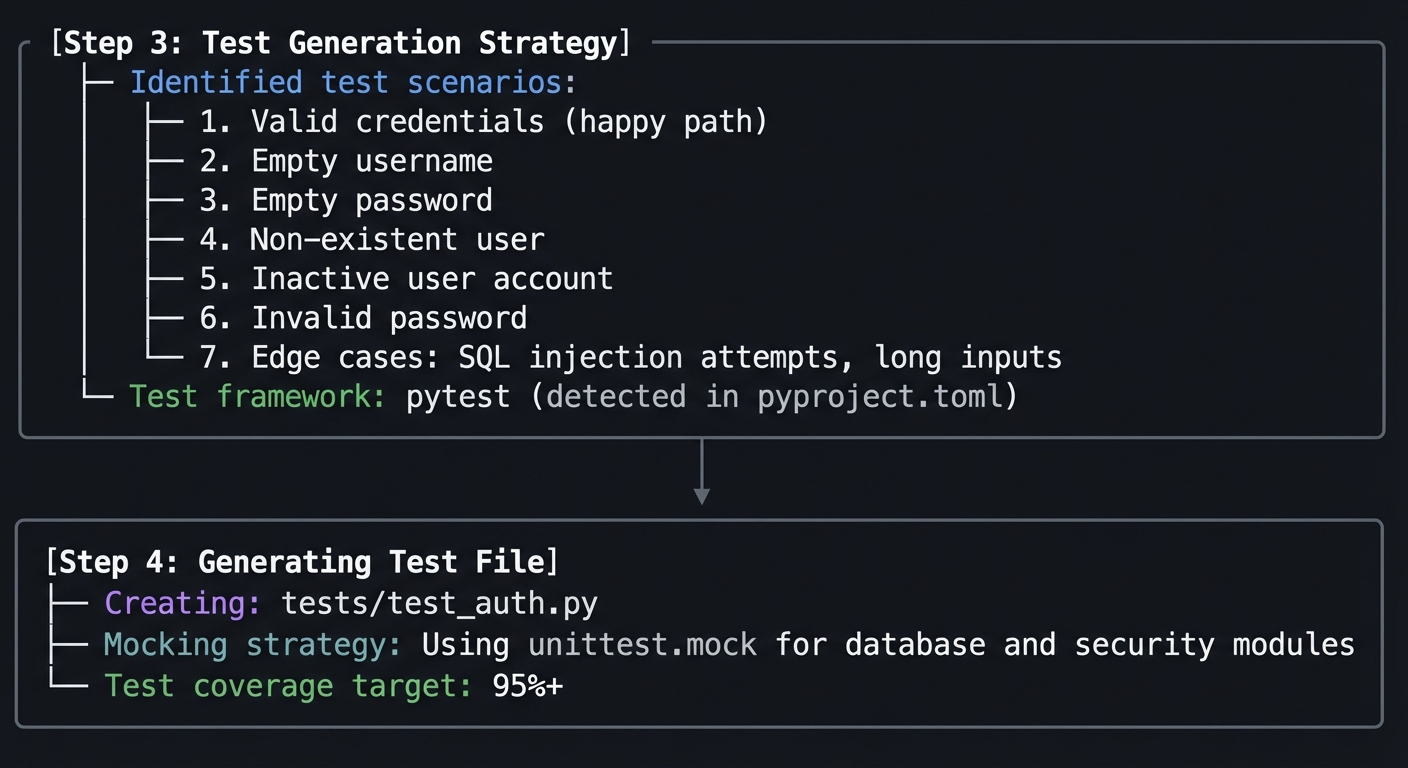

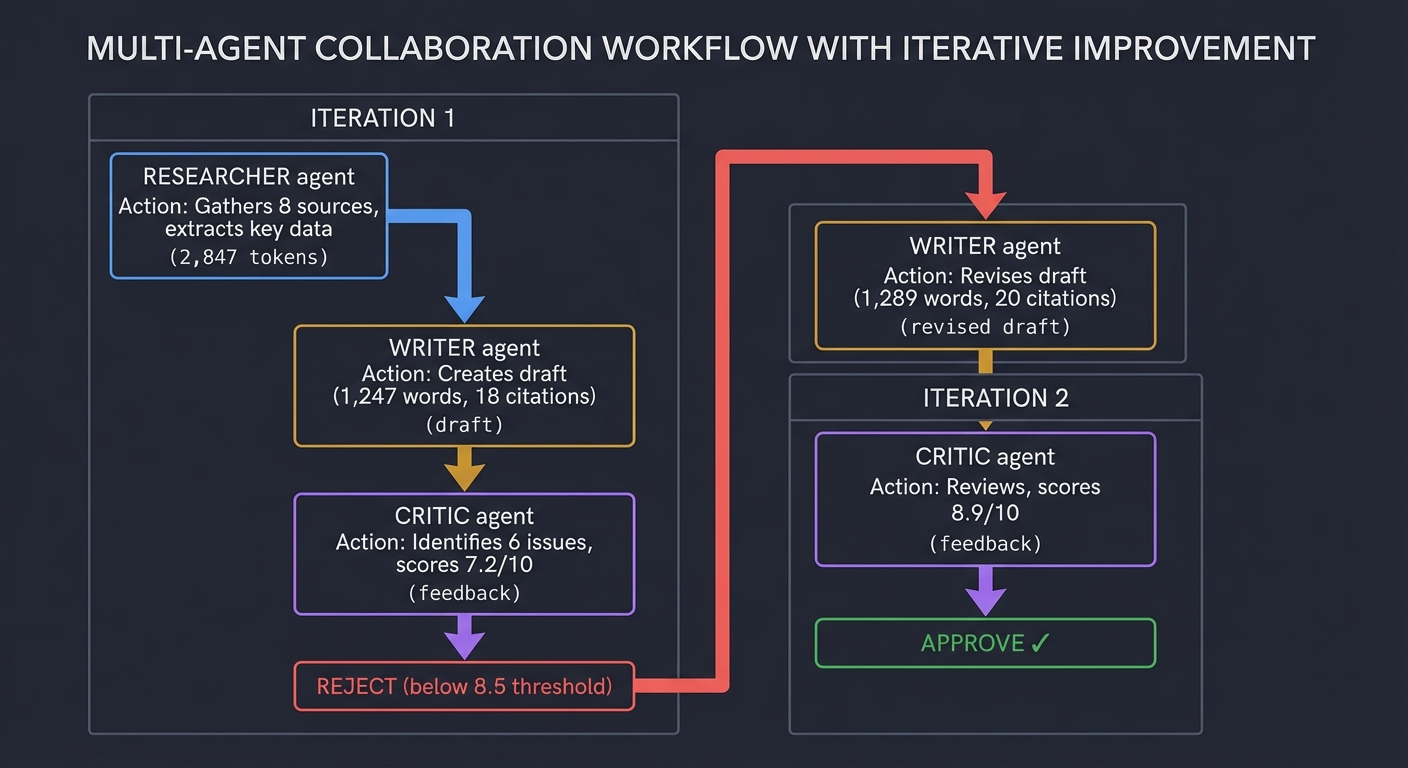

5. Multi-Agent Orchestration

Complex tasks often require specialized experts. Multi-agent systems use multiple LLM instances (or personas) that collaborate, critique, and manage each other.

┌───────────┐

│ Manager │

└─────┬─────┘

┌────────┴────────┐

┌───▼───┐ ┌───▼───┐

│Researcher│ <───> │ Writer │

└───────┘ └───────┘

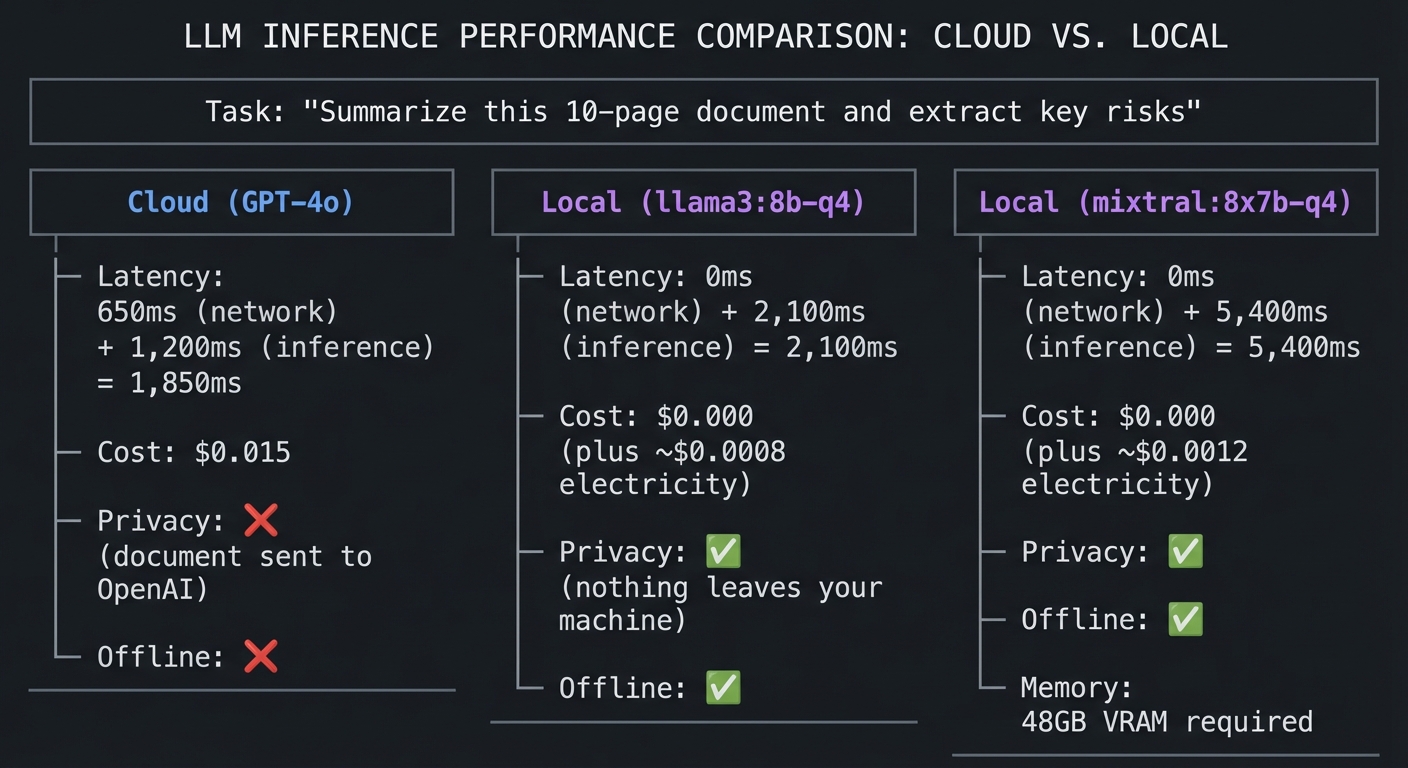

6. Local Inference & Quantization

Running models locally (using Ollama or Llama.cpp) ensures privacy—the “No-Cloud” assistant. This requires understanding Quantization: compressing model weights (e.g., from 16-bit to 4-bit) so they fit in your GPU’s VRAM without losing significant reasoning power.

Concept Summary Table

| Concept Cluster | What You Need to Internalize |

|---|---|

| LLM Reasoning | Models predict tokens; manage the “Context Window” like RAM. |

| System Prompting | The prompt is the “Program.” It defines identity, tools, and constraints. |

| RAG | Grounding the assistant in private data via Vector Search and Chunking. |

| Function Calling | Bridging the gap between “Thinking” and “Doing” via structured JSON. |

| Agentic Loops | The ReAct (Reason + Act) cycle for self-correction and multi-step tasks. |

| Multi-Agent | Orchestrating specialized roles (Researcher, Critic, Manager) for complex goals. |

| Sandboxing | Safe execution of LLM-generated code in isolated environments. |

| Local Inference | Managing VRAM and Quantization (GGUF) for “No-Cloud” privacy. |

| Observability | Tracing agent “thoughts” and evaluating performance (Evals). |

| Voice Interface | Optimizing STT/TTS pipelines for sub-1s latency magic. |

Deep Dive Reading By Concept

This section maps concepts to specific chapters in key books. Read these to build the foundational mental models required for the projects.

Foundation: Models & Prompting

| Concept | Book & Chapter |

|---|---|

| Transformer Architecture | Build a Large Language Model (From Scratch) by Sebastian Raschka — Ch. 3 |

| Prompt Engineering Patterns | The LLM Engineering Handbook by Paul Iusztin — Ch. 3: “Prompt Engineering” |

| LLM Reasoning & Limits | AI Engineering by Chip Huyen — Ch. 2: “Foundation Models” |

Retrieval & Memory (RAG)

| Concept | Book & Chapter |

|---|---|

| Embeddings & Vector DBs | AI Engineering by Chip Huyen — Ch. 4: “Information Retrieval” |

| The RAG Pipeline | The LLM Engineering Handbook by Paul Iusztin — Ch. 5: “Retrieval-Augmented Generation” |

| Advanced Chunking | Generative AI with LangChain by Ben Auffarth — Ch. 5: “Working with Data” |

Agency & Tools

| Concept | Book & Chapter |

|---|---|

| Function Calling | Generative AI with LangChain by Ben Auffarth — Ch. 4: “Tools and Agents” |

| ReAct & Planning | Building AI Agents (Packt) — Ch. 2: “The ReAct Framework” |

| Multi-Agent Systems | Multi-Agent Systems with AutoGen by Victor Dibia — Ch. 1-2 |

| Safe Code Execution | AI Engineering by Chip Huyen — Ch. 6: “Agentic Workflows” |

Project List

Projects are ordered from fundamental understanding to advanced autonomous implementations.

Project 1: LLM Prompt Playground & Analyzer

- File: AI_PERSONAL_ASSISTANTS_MASTERY.md

- Expanded Project Guide: P01-llm-prompt-playground-analyzer.md

- Main Programming Language: Python

- Alternative Programming Languages: TypeScript (Node.js), Go

- Coolness Level: Level 2: Practical but Forgettable

- Business Potential: 1. The “Resume Gold”

- Difficulty: Level 1: Beginner

- Knowledge Area: Prompt Engineering / API Interaction

- Software or Tool: OpenAI API, Anthropic API, or Ollama (Local)

- Main Book: “The LLM Engineering Handbook” by Paul Iusztin

What you’ll build: A web-based tool where you can “battle” different prompts against each other. You’ll input one “Goal” and two different “Prompts,” then see which model performs better and how temperature affects the output.

Why it teaches AI Assistants: Before building JARVIS, you must understand how the “CPU” (the LLM) responds to instructions. You’ll discover that a single word change in a System Prompt can transform a helpful assistant into a hallucinating mess.

Core challenges you’ll face:

- Managing API state → maps to handling asynchronous calls to LLM providers.

- Parameter Sensitivity → maps to observing how temperature (0.0 vs 1.0) changes consistency.

- Token Tracking → maps to understanding the cost of your assistant’s “thoughts”.

Real World Outcome

You will have a Python/Streamlit web application that transforms how you understand LLM behavior. This is not just a simple comparison tool - it’s a sophisticated laboratory for dissecting how different prompts, models, and parameters affect AI output quality.

Initial Launch Experience

What you’ll see in the terminal when you run it:

$ streamlit run prompt_battle.py

You can now view your Streamlit app in your browser.

Local URL: http://localhost:8501

Network URL: http://192.168.1.5:8501

[2025-03-15 09:23:41] INFO - Initializing Prompt Battle Arena v2.1

[2025-03-15 09:23:41] INFO - Loading configuration from config/settings.yaml

[2025-03-15 09:23:42] INFO - Connecting to API providers...

[2025-03-15 09:23:42] OK OpenAI client ready (models: gpt-4o, gpt-4o-mini, gpt-3.5-turbo)

[2025-03-15 09:23:43] OK Anthropic client ready (models: claude-3.5-sonnet, claude-3-haiku, claude-3-opus)

[2025-03-15 09:23:43] OK Ollama client ready (local models: llama3:8b, mistral:7b)

[2025-03-15 09:23:43] INFO - Loaded token pricing from config/pricing.json

[2025-03-15 09:23:43] INFO - Pricing database last updated: 2025-03-10

[2025-03-15 09:23:43] INFO - Battle history loaded: 47 previous comparisons found

[2025-03-15 09:23:43] INFO - Session database: ./data/battle_sessions.db

[2025-03-15 09:23:44] OK Application ready

The Web Interface - Complete Walkthrough

When the browser opens, you’ll see a professionally-designed interface divided into clear sections.

The left sidebar provides all your control options - model selection dropdowns for Models A and B, temperature slider from 0.0 to 2.0, max tokens slider, and checkboxes for streaming, token breakdown display, cost calculation, and optional judge evaluation.

The main battle arena has three horizontal panels. The top panel is the shared goal/task area where you describe what you want the AI to accomplish. Below that are two side-by-side text editors for Prompt A and Prompt B.

The Battle In Action - Step by Step

When you click “Start Battle”, you’ll see immediate feedback showing battle initialization with timestamps, model details, and current parameter settings.

Then real-time token counting displays for both models showing prompt encoding, token counts, request sending status, and waiting indicators.

Streaming responses appear character-by-character:

Model A produces a generic response like: “Based on the quarterly report, here are the main risks: 1. The company is seeing slower revenue growth which could impact profitability. 2. Supply chain issues are adding unexpected costs. 3. Customer metrics show some concerning trends with higher acquisition costs and lower retention. I’d recommend focusing on improving operational efficiency and customer retention.” Response completes in 1.8 seconds.

Model B with the detailed financial analyst prompt produces structured output: “FINANCIAL RISK ASSESSMENT - Risk #1: Liquidity Crisis - Severity: 9/10 - Evidence: Cash reserves dropped 3.5M (-29%) in one quarter - Impact: Unable to cover 3+ months of operational expenses - Recommendation: Immediately establish a 5M credit facility and freeze non-essential CapEx. Risk #2: Customer Economics - Severity: 8/10 - Evidence: CAC up 15%, retention down to 82% (industry avg: 90%) - Impact: Unit economics deteriorating; each customer costs more, stays less - Recommendation: Launch win-back campaign; investigate churn root causes via exit surveys. Risk #3: Margin Compression - Severity: 7/10 - Evidence: 2.3M unplanned supply chain costs on likely 30M quarterly revenue - Impact: Gross margins likely dropped 7-8 percentage points - Recommendation: Diversify suppliers; negotiate volume commitments for price stability.” Response completes in 2.1 seconds.

Detailed Metrics Dashboard

After both responses complete, a comprehensive metrics panel appears showing latency (first token, total time, tokens/sec), token usage (prompt tokens, completion tokens, total), cost analysis (input cost calculated as tokens times price per million, output cost, total), and response characteristics (word count, sentences, average sentence length, reading level, formatting style).

For Model A (gpt-4o): Latency - First token: 340ms, Total: 1,842ms, Tokens/sec: 65.7. Tokens - Prompt: 156, Completion: 121, Total: 277. Cost - Input: 0.00078 USD (156 times 5.00/1M), Output: 0.00182 USD (121 times 15.00/1M), Total: 0.00260 USD. Response - 92 words, 8 sentences, avg length 11.5, Grade 9 reading level, plain text formatting.

For Model B (claude-3.5-sonnet): Latency - First token: 280ms, Total: 2,156ms, Tokens/sec: 87.3. Tokens - Prompt: 189, Completion: 188, Total: 377. Cost - Input: 0.00057 USD (189 times 3.00/1M), Output: 0.00282 USD (188 times 15.00/1M), Total: 0.00339 USD. Response - 178 words, 15 sentences, avg length 11.9, Grade 11 reading level, Markdown with lists formatting.

What You’ll Actually Discover

After running this specific comparison, you’ll have transformative realizations:

Discovery #1: Prompt Engineering Has Exponential Returns Quality Ratio: Model B output is approximately 5x more actionable. Cost Ratio: Model B costs only 1.3x more (0.0034 USD vs 0.0026 USD). Effort Ratio: Prompt B took 3 minutes to write vs 5 seconds for Prompt A. ROI Calculation: 3 minutes of prompt engineering equals 400% improvement in output quality. That’s approximately 133% improvement per minute invested. The few extra tokens in the system prompt add negligible cost. Lesson: In production, spend 80% of your time on prompt engineering, not model selection.

Discovery #2: Temperature’s Dramatic Impact

You’ll run the same battle with different temperature settings and create a comparison table. At Temperature 0.0: Output is identical on repeated runs, always identifies the same risks with consistent severity scores (9/10, 8/10, 7/10), best for data extraction, classification, and code generation. At Temperature 0.7: Balanced approach with consistent but varied phrasing, risks usually identified the same way but with some variation in names, severity scores vary plus or minus 1 point, best for analysis tasks, writing, and general use. At Temperature 1.5: Creative but noisy output, different risks identified on each run, severity scores vary plus or minus 3 points, too random for professional work.

Discovery #3: Model Selection is Task-Dependent

You’ll test by swapping prompts and discover: When both models receive Prompt B (detailed instructions), gpt-4o follows structure, includes severity scores, partially quantifies impact, is actionable, and partially cites data (score: 8.2/10). Claude-3.5-sonnet follows structure, includes severity scores, thoroughly quantifies impact, is highly actionable, and explicitly cites data (score: 9.1/10). Key Insight: Claude is better at quantitative reasoning for this task, even with the same prompt.

Advanced Feature: LLM-as-a-Judge

When you enable the “Judge” feature, a third panel appears evaluating both responses using a rubric with five criteria: Accuracy (Are the identified risks valid?), Completeness (Are all major risks covered?), Actionability (Are recommendations specific?), Clarity (Is the output easy to understand?), and Professionalism (Appropriate tone and formatting?).

Judge scoring results: Model A (gpt-4o): Accuracy 7/10, Completeness 6/10, Actionability 5/10, Clarity 8/10, Professionalism 7/10, TOTAL: 33/50 (66%). Model B (claude-3.5-sonnet): Accuracy 9/10, Completeness 10/10, Actionability 9/10, Clarity 10/10, Professionalism 10/10, TOTAL: 48/50 (96%). Winner: Model B (claude-3.5-sonnet with Prompt B). Judge Reasoning: “Model B provides quantified severity scores, explicit data citations, and actionable recommendations with clear next steps. Model A identifies the risks but lacks specificity and depth. The structured formatting in Model B makes it immediately actionable for an executive audience.” Judge evaluation cost: 0.0045 USD (additional).

Battle History and Analytics

The bottom of the page shows cumulative learning with session statistics: Date March 15, 2025, total battles run: 8, session cost: 0.0427 USD, most effective prompt identified, most cost-efficient model tracked. Each battle is logged with timestamp, models compared, winner, and cost.

Export Feature - Concrete Output

When you click “Export to JSON”, you get a structured file containing: battle ID, timestamp, full configuration for both models (provider, model name, temperature, max tokens), the goal text, both prompts, complete responses with content and metrics (latency, token counts, cost, tokens per second), and judge evaluation if enabled with scores for each criterion, winner, reasoning, and judge cost.

The Transformation Moment

Around battle #15, you’ll run an experiment: Goal “Explain quantum computing to a 10-year-old”. Prompt A: “You are a helpful assistant.” Prompt B: “You are a science teacher who specializes in making complex topics fun for children. Use analogies, simple language, and excitement. Avoid jargon. Structure your explanation in 3 short paragraphs.”

What happens: Prompt A produces a technically accurate but boring, dense explanation. Prompt B produces an engaging story about “magic boxes that try all paths through a maze at once”.

The realization: The model was always capable of the engaging explanation. YOU unlocked it with your prompt. This is the moment you understand that prompts are programs, and you are now a prompt programmer.

Advanced Patterns You’ll Discover

By battle #30, you’ll experiment with advanced techniques:

Chain-of-Thought Prompting: Adding “Before answering, think through the problem step by step inside thinking tags” improves accuracy by 20-40% on analytical tasks. Cost increase: +15% tokens for the thinking section. ROI: Massive for complex reasoning.

Few-Shot Examples: Including “Here are 2 examples of good risk analysis: [examples]. Now analyze this report: [new data]” makes output format perfectly match your examples. Cost increase: +200 tokens per request. ROI: Eliminates post-processing code.

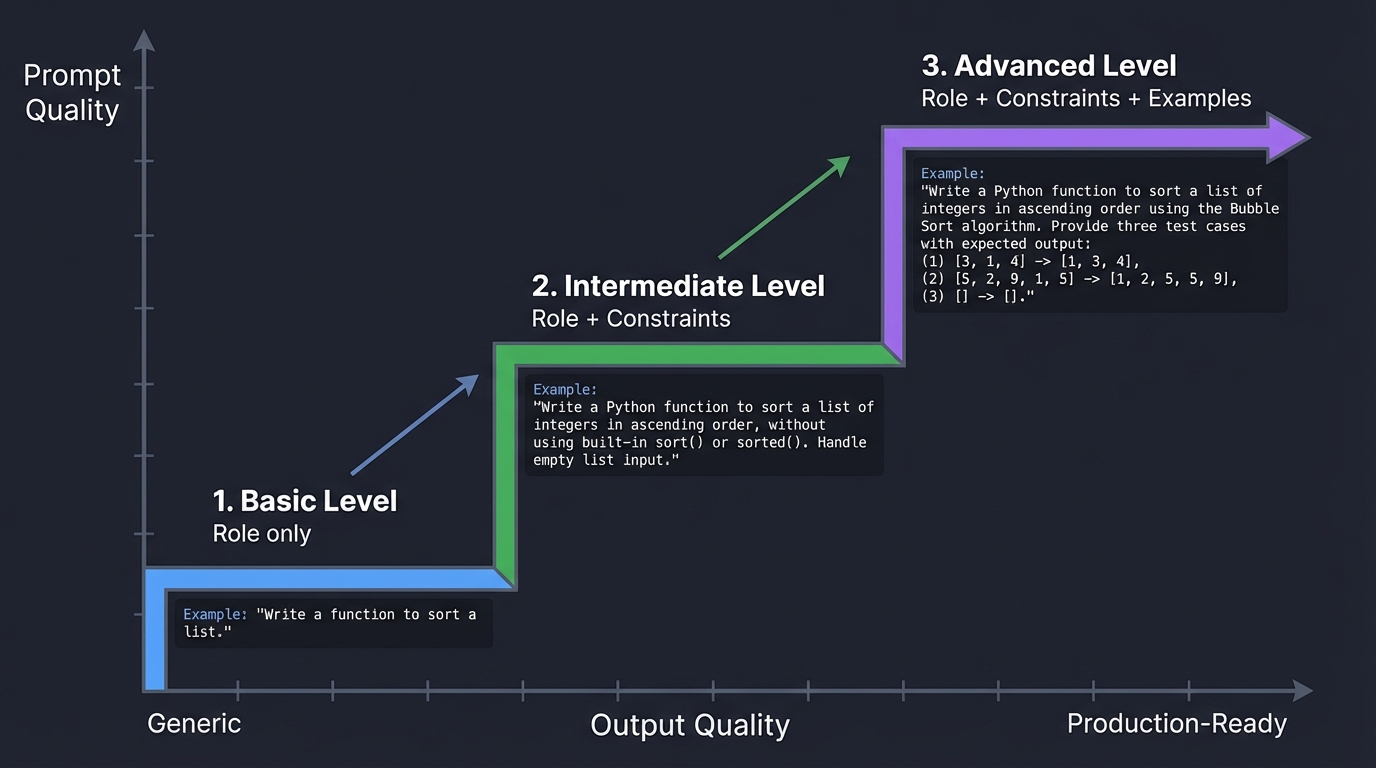

Role + Constraints + Output Format: Using the pattern “You are a [ROLE]. When analyzing [TASK], always: [Constraints]. Output format: [JSON schema]” produces consistent, structured, production-ready outputs. This becomes your template for ALL future AI products.

What You’ll Learn About Costs

Battle costs you’ll actually see:

- gpt-4o-mini: 0.0008 USD per battle (fast iteration)

- gpt-4o: 0.0026 USD per battle (production quality)

- claude-3.5-sonnet: 0.0034 USD per battle (analytical tasks)

- Judge evaluation (gpt-4o): 0.0045 USD additional (automated evaluation)

Total experimentation cost for 50 battles: approximately 0.25 USD. Value of insights gained: Infinite - you now understand LLM behavior at a fundamental level.

This project transforms you from an “AI user” into an “AI engineer” by making the invisible visible. You can now see exactly how your decisions affect model behavior, cost, and quality.

The Core Question You’re Answering

“How much of an assistant’s intelligence comes from the model itself, versus the instructions I give it?”

Before you write any code, sit with this question. Most beginners blame the “AI” for being stupid, but usually, it’s the “Software” (the prompt) that is buggy.

Concepts You Must Understand First

Stop and research these before coding:

- Tokens vs. Words

- Why can’t an LLM count how many ‘r’s are in the word “strawberry”?

- LLMs don’t “read” text—they operate on tokens. A token is a chunk of characters. Common words like “the” are 1 token, but “strawberry” might be 2-3 tokens depending on the tokenizer.

- This is critical: The word “strawberry” might be tokenized as [“straw”, “berry”], so the model never “sees” the individual letters ‘r’ in sequence.

- How does tokenization affect the cost of your assistant?

- API providers charge per token, not per word. A 100-word prompt might be 150 tokens or 80 tokens depending on vocabulary.

- Use the

tiktokenlibrary to count tokens before sending requests.

- Deep dive: Different models use different tokenizers. GPT-4 uses cl100k_base, Claude uses their own tokenizer. The same text may cost different amounts across providers.

- Book Reference: “AI Engineering” Ch. 2 - Chip Huyen

- Additional Resource: “Build a Large Language Model (From Scratch)” by Sebastian Raschka - Ch. 2 (Understanding Tokenization)

- The Context Window

- The context window is the “working memory” of an LLM. Think of it as RAM for the model.

- GPT-4o has a 128k token window. Claude 3.5 Sonnet has 200k. Gemini 1.5 Pro has 2 million tokens.

- What is the “Lost in the Middle” phenomenon?

- Research shows LLMs are better at attending to information at the beginning and end of the context window. Information in the middle is often “forgotten.”

- This has massive implications for RAG: Always put the most important retrieved chunks at the start or end of your prompt.

- How do you calculate how many tokens a prompt uses?

- Install

tiktoken:pip install tiktoken - Example code:

import tiktoken enc = tiktoken.encoding_for_model("gpt-4o") tokens = enc.encode("Your prompt here") print(f"Token count: {len(tokens)}")

- Install

- Practical implication: If your system prompt + conversation history + retrieved docs exceed the context window, the oldest messages get truncated. You must implement conversation memory management.

- Book Reference: “The LLM Engineering Handbook” Ch. 3

- Research Paper: “Lost in the Middle: How Language Models Use Long Contexts” (Liu et al., 2023)

- Inference Parameters

- What is Temperature?

- Temperature controls randomness in token selection. It’s a float between 0.0 and 2.0.

- At Temperature = 0.0: The model always picks the most likely next token (deterministic, boring, safe).

- At Temperature = 1.0: The model samples from the full probability distribution (creative, diverse, risky).

- At Temperature = 2.0: The model gets very random (almost chaotic, often incoherent).

- When to use what:

- 0.0-0.3: For tasks requiring precision (code generation, data extraction, math)

- 0.7-1.0: For creative tasks (writing, brainstorming, storytelling)

- 1.0+: Experimental or when you want maximum diversity

- What is Top-P (nucleus sampling)?

- Instead of picking from all possible tokens, pick from the smallest set of tokens whose cumulative probability exceeds P.

- Top-P = 0.9 means: Consider only tokens that make up the top 90% of probability mass.

- This prevents the model from choosing extremely unlikely tokens while still allowing creativity.

- Pro tip: Temperature and Top-P interact. Most engineers use one or the other, not both. OpenAI recommends altering temperature OR top-p, not both simultaneously.

- Book Reference: “The LLM Engineering Handbook” Ch. 3 - Section on “Decoding Strategies”

- Additional Resource: “Build a Large Language Model (From Scratch)” by Sebastian Raschka - Ch. 5 (Text Generation Strategies)

- What is Temperature?

Questions to Guide Your Design

- Comparison Logic

- How will you store and display the results of different runs?

- Should you use a database (SQLite) or just save to JSON files?

- Design consideration: What if you want to compare 5 different prompts instead of just 2? How does your UI scale?

- Recommendation: Start with a simple list in memory, then add persistence with SQLite once the core works.

- Cost Calculation

- How do you map token counts to actual USD cents for different providers?

- Each provider has different pricing:

- GPT-4o: $5.00 per 1M input tokens, $15.00 per 1M output tokens

- Claude 3.5 Sonnet: $3.00 per 1M input tokens, $15.00 per 1M output tokens

- GPT-4o-mini: $0.15 per 1M input tokens, $0.60 per 1M output tokens

- You’ll need a configuration file (pricing.json) that you can update as prices change.

- Code pattern:

def calculate_cost(model, prompt_tokens, completion_tokens): pricing = load_pricing_config() input_cost = (prompt_tokens / 1_000_000) * pricing[model]["input"] output_cost = (completion_tokens / 1_000_000) * pricing[model]["output"] return input_cost + output_cost

- Structured Eval

- Can you use a third prompt (a “Judge” LLM) to decide which of the two outputs is better?

- This is called “LLM-as-a-Judge” evaluation—a critical technique in modern AI engineering.

- Design challenge: How do you prevent the judge from being biased toward certain response styles?

- Technique: Use a rubric. Give the judge specific criteria:

```

Rate each response on:

- Accuracy (1-10)

- Clarity (1-10)

- Completeness (1-10)

Respond in JSON format with scores and brief justifications. ```

- Advanced pattern: Use GPT-4o as the judge even if you’re testing cheaper models. The “smart judge evaluates fast workers” pattern is industry-standard.

- Book Reference: “The LLM Engineering Handbook” Ch. 8 - “Evaluating LLM Systems”

Thinking Exercise

The Role-Play Test

Take this simple prompt: Help me write an email to my boss.

Now, modify it three ways:

- Add a Role:

You are a professional corporate communications expert. - Add a Constraint:

Use no more than 50 words. - Add a Target Tone:

Make it sound urgent but polite.

Questions:

- How did the role change the vocabulary?

- Expected observation: The role shifts the model’s “persona.” With “corporate communications expert,” you’ll see more formal language, strategic phrasing, and awareness of organizational hierarchy.

- Deep insight: LLMs are trained on vast corpora where specific roles correlate with specific language patterns. “Expert” roles access more sophisticated vocabulary domains.

- Did the constraint force the LLM to omit details?

- Expected observation: Yes. A 50-word limit forces the model into “executive summary” mode. It will drop pleasantries and focus on core message.

- Deep insight: Constraints are a form of optimization pressure. The model must balance completeness with brevity, teaching you how to calibrate specificity.

- Which of these three is most useful for a “Personal Assistant”?

- Answer: All three, but in combination. A personal assistant needs:

- Role to establish expertise domain

- Constraints to ensure outputs fit the context (e.g., mobile notifications should be brief)

- Tone to match the user’s communication style

- Answer: All three, but in combination. A personal assistant needs:

Extended experiment: Try this prompt battle in your application:

Prompt A (Minimal):

Help me write an email to my boss about being late.

Prompt B (Engineered):

You are an executive assistant skilled in professional communication.

Task: Draft a brief, professional email to my manager explaining I'll be 15 minutes late to today's 9 AM standup due to a medical appointment.

Constraints:

- Maximum 3 sentences

- Apologetic but not overly deferential

- Include a commitment to catch up afterward

Tone: Professional, concise, respectful

What you’ll learn:

- Prompt B will produce a ready-to-send email

- Prompt A will produce a generic template requiring heavy editing

- The difference in quality vs. effort invested in the prompt is asymmetric (2x effort = 10x better output)

Diagram of Prompt Engineering Impact:

Prompt Quality

▲

│ ┌─── Advanced

│ ┌─────┤ (Role + Constraints + Examples)

│ ┌────┤

│ ┌────┤ └─── Intermediate

│ ┌────┤ │ (Role + Constraints)

│ ┌────┤ └────┘

│ ┌────┤ └─── Basic

│ ┌───┤ │ (Role only)

└─┴───┴────┴─────────────────────────────────────> Output Quality

Generic Production-Ready

Book Reference: “The LLM Engineering Handbook” Ch. 3 - “Prompt Engineering Patterns”

The Interview Questions They’ll Ask

- “What is the difference between a System Message and a User Message?”

- Answer: The system message sets the persistent context and behavior instructions for the model. It’s like the “constitution” of the conversation. The user message is the actual query or task.

- Deep answer: System messages are weighted more heavily in the attention mechanism. They establish the “persona” and constraints. User messages are treated as “requests within the framework.”

- Example:

System: "You are a Python expert. Always provide working code with comments." User: "How do I read a CSV file?" - Production tip: Never put user data in the system message. System messages should be static templates. User data goes in user messages.

- Book Reference: “The LLM Engineering Handbook” Ch. 3

- “How would you handle a ‘Hallucination’ where the model invents a fact?”

- Answer: Multiple strategies:

- Grounding: Use RAG to provide factual context the model must cite

- Temperature control: Lower temperature (0.0-0.3) for factual tasks

- Structured output: Force JSON schemas that require citations

- Verification loops: Have a second model fact-check the first

- Deep answer: Hallucinations occur because LLMs are trained to predict plausible text, not verify truth. The model will confidently generate coherent falsehoods if it increases the probability of a “reasonable-sounding” response.

- Best practice: Add to your system prompt: “If you don’t know something, say ‘I don’t have that information’ instead of guessing.”

- Advanced technique: Use confidence scores. Ask the model to rate its certainty (1-10) for each claim.

- Book Reference: “AI Engineering” Ch. 2 - “Foundation Model Limitations”

- Answer: Multiple strategies:

- “Explain Temperature. When would you use 0.0 vs 1.0?”

- Answer: Temperature controls randomness in token selection.

- Temperature = 0.0: Deterministic. Always picks the highest probability token. Use for: code generation, data extraction, math, structured output.

- Temperature = 1.0: Full probability distribution sampling. Use for: creative writing, brainstorming, generating diverse options.

- Deep answer: Temperature is applied as a softmax scaling factor. Lower temperature sharpens the probability distribution (making the top choice much more likely). Higher temperature flattens it (giving lower-probability tokens a chance).

- Mathematical insight:

P(token) = exp(logit / temperature) / sum(exp(all_logits / temperature)) - Interview follow-up they might ask: “What about Top-P?”

- Answer: Top-P (nucleus sampling) is an alternative. Instead of temperature, you set a cumulative probability threshold. “Only consider tokens that make up the top 90% of probability mass.”

- Book Reference: “Build a Large Language Model (From Scratch)” by Sebastian Raschka - Ch. 5

- Answer: Temperature controls randomness in token selection.

- “What is Few-Shot prompting and how does it improve reliability?”

- Answer: Few-shot prompting means providing examples in the prompt to demonstrate the desired output format.

- Example:

Extract the name and email from these sentences: Input: "John Smith can be reached at john@example.com" Output: {"name": "John Smith", "email": "john@example.com"} Input: "Contact Sarah Lee via sarah.lee@company.org" Output: {"name": "Sarah Lee", "email": "sarah.lee@company.org"} Input: "Reach out to Michael Chen at mchen@startup.io" Output: - Why it works: LLMs are pattern-matching engines. Examples help the model infer the desired output structure.

- Deep answer: Few-shot learning leverages the model’s in-context learning capability. The examples become part of the “program” you’re running.

- Best practices:

- Use 2-5 examples (diminishing returns after that)

- Make examples diverse to cover edge cases

- Always use consistent formatting across examples

- Advanced pattern: Chain-of-Thought (CoT) few-shot prompting. Include the reasoning steps in your examples:

Q: "If I have 5 apples and buy 3 more, how many do I have?" A: "I started with 5 apples. I bought 3 more. 5 + 3 = 8. So I have 8 apples." - Book Reference: “The LLM Engineering Handbook” Ch. 3 - “In-Context Learning”

- Research Paper: “Language Models are Few-Shot Learners” (Brown et al., 2020) - The original GPT-3 paper

Hints in Layers

Hint 1: Start with the API Client

Don’t build a GUI first. Write a simple script that calls openai.ChatCompletion.create.

Example starter code:

from openai import OpenAI

client = OpenAI(api_key="your-key-here")

response = client.chat.completions.create(

model="gpt-4o-mini",

messages=[

{"role": "system", "content": "You are a helpful assistant."},

{"role": "user", "content": "What is the capital of France?"}

],

temperature=0.7

)

print(response.choices[0].message.content)

print(f"Tokens used: {response.usage.total_tokens}")

Hint 2: Track Tokens

Use the usage field in the API response. It tells you prompt_tokens and completion_tokens.

Key insight: The usage object structure:

{

"prompt_tokens": 25,

"completion_tokens": 18,

"total_tokens": 43

}

You’ll need both values for accurate cost calculation since input and output tokens are priced differently.

Hint 3: Streamlit for GUI

Use Streamlit to quickly build a side-by-side comparison UI with st.columns(2).

Example Streamlit pattern:

import streamlit as st

st.title("Prompt Battle Arena")

col1, col2 = st.columns(2)

with col1:

st.subheader("Prompt A")

prompt_a = st.text_area("System prompt A", height=200)

with col2:

st.subheader("Prompt B")

prompt_b = st.text_area("System prompt B", height=200)

if st.button("Battle!"):

# Call your LLM comparison function here

pass

Hint 4: Environment Variables for API Keys Never hardcode API keys. Use environment variables:

import os

from openai import OpenAI

client = OpenAI(api_key=os.getenv("OPENAI_API_KEY"))

Set the key in your shell:

export OPENAI_API_KEY="sk-..."

export ANTHROPIC_API_KEY="sk-ant-..."

Books That Will Help

| Topic | Book | Chapter | Why This Specific Chapter |

|---|---|---|---|

| Prompt Engineering | “The LLM Engineering Handbook” by Paul Iusztin | Ch. 3 | Covers role-based prompting, constraints, few-shot learning, and chain-of-thought techniques with production examples |

| LLM Fundamentals | “AI Engineering” by Chip Huyen | Ch. 2 | Explains tokenization, context windows, and the fundamental limitations that affect prompt design |

| Temperature & Sampling | “Build a Large Language Model (From Scratch)” by Sebastian Raschka | Ch. 5 | Deep dive into decoding strategies with mathematical explanations of temperature, top-p, and top-k |

| API Design Patterns | “Python for Data Analysis” by Wes McKinney | Ch. 6 | Best practices for handling API responses, parsing JSON, and data persistence |

| Evaluation Techniques | “The LLM Engineering Handbook” by Paul Iusztin | Ch. 8 | LLM-as-a-Judge patterns, creating evaluation rubrics, and building test sets |

Project 2: Simple RAG Chatbot (The Long-term Memory)

- File: AI_PERSONAL_ASSISTANTS_MASTERY.md

- Expanded Project Guide: P02-simple-rag-chatbot.md

- Main Programming Language: Python

- Alternative Programming Languages: Rust, TypeScript

- Coolness Level: Level 3: Genuinely Clever

- Business Potential: 2. The “Micro-SaaS”

- Difficulty: Level 2: Intermediate

- Knowledge Area: Information Retrieval / Vector DBs

- Software or Tool: ChromaDB, FAISS, or Qdrant

- Main Book: “The LLM Engineering Handbook” by Paul Iusztin

What you’ll build: An assistant that can answer questions about your private files (PDFs, text files, or Markdown notes). It will “read” your documents and only answer based on that context.

Why it teaches AI Assistants: A personal assistant that only knows what was on the internet in 2023 is useless. To be “Personal,” it must have access to your data. This project teaches you how to give an LLM a “Long-term Memory” without retraining it.

Core challenges you’ll face:

- Chunking Strategy → maps to deciding how to break a 50-page PDF into pieces the LLM can “digest”.

- Embedding Selection → maps to converting text into mathematical vectors for search.

- Relevance Tuning → maps to handling cases where the search returns the wrong document snippet.

Real World Outcome

You’ll build a personal knowledge assistant that can answer questions about YOUR specific documents, notes, and files. This is transformative because the LLM now has access to information it was never trained on - your private data becomes its working memory.

Phase 1: The Indexing Experience

First-time setup - What you’ll see:

$ python chat_my_docs.py --index ./my_documents/

========================================

RAG Document Indexer v1.2

========================================

[2025-03-15 10:15:23] INFO - Starting document scan

[2025-03-15 10:15:23] INFO - Target directory: /Users/you/my_documents/

[2025-03-15 10:15:23] INFO - Recursive scan enabled

[Step 1/4] Discovering documents...

Scanning: /Users/you/my_documents/

Scanning: /Users/you/my_documents/work/

Scanning: /Users/you/my_documents/personal/

Found 15 documents:

- 3 PDF files (12.3 MB)

- 8 TXT files (842 KB)

- 4 MD files (156 KB)

[Step 2/4] Extracting text and chunking...

Processing [1/15]: lease_agreement.pdf

File size: 2.4 MB

Pages: 8

Total characters: 18,420

Chunking strategy: Recursive with 500 char chunks, 50 char overlap

Created chunks: 24

Average chunk size: 342 tokens

Overlap effectiveness: 12% context preservation

Time: 3.2s

Processing [2/15]: meeting_notes_2024.txt

File size: 124 KB

Total characters: 124,000

Created chunks: 42

Average chunk size: 298 tokens

Time: 0.8s

Processing [3/15]: car_maintenance_log.md

File size: 8 KB

Created chunks: 5

Average chunk size: 156 tokens

Time: 0.2s

Processing [4/15]: investment_strategy_2025.pdf

File size: 4.1 MB

Pages: 18

Total characters: 42,890

Created chunks: 67

Average chunk size: 388 tokens

Time: 6.1s

... [continuing for all 15 files]

[Step 3/4] Generating embeddings...

Embedding model: text-embedding-3-small (OpenAI)

Dimensions: 1536

Cost per 1M tokens: $0.020

Batch 1/4 (100 chunks): Processing... Done (2.1s) - Cost: $0.0023

Batch 2/4 (100 chunks): Processing... Done (1.9s) - Cost: $0.0022

Batch 3/4 (100 chunks): Processing... Done (2.0s) - Cost: $0.0024

Batch 4/4 (47 chunks): Processing... Done (0.9s) - Cost: $0.0011

Total embeddings generated: 347

Total cost: $0.0080

Total time: 7.2s

Average: 48 embeddings/second

[Step 4/4] Storing in vector database...

Database: ChromaDB

Collection: my_documents_v1

Storage path: ./chroma_db/

Index type: HNSW (Hierarchical Navigable Small World)

Writing chunks: [================================] 347/347

Building index: Done

Persisting to disk: Done

========================================

INDEXING COMPLETE

========================================

Summary:

Documents processed: 15

Total chunks: 347

Vector DB size: 4.2 MB

Embedding cost: $0.0080

Total time: 18.4 seconds

Your documents are now searchable!

Run: python chat_my_docs.py --chat

========================================

Phase 2: The Interactive Chat Experience

Starting a chat session:

$ python chat_my_docs.py --chat

========================================

RAG Chatbot - Your Personal Docs

========================================

[2025-03-15 10:17:05] Loading vector database...

[2025-03-15 10:17:06] OK ChromaDB loaded (347 chunks indexed)

[2025-03-15 10:17:06] OK LLM client ready (gpt-4o-mini)

[2025-03-15 10:17:06] INFO - Debug mode: ON (verbose logging enabled)

Collections available:

- my_documents_v1 (347 chunks, last updated: 2025-03-15)

Ready! Type your question or 'exit' to quit.

Commands: /stats, /clear, /debug on|off, /reindex

========================================

You: What did the landlord say about pets?

[DEBUG] ===== QUERY PROCESSING =====

[DEBUG] User query: "What did the landlord say about pets?"

[DEBUG] Query length: 40 characters, 8 words

[DEBUG] ===== EMBEDDING GENERATION =====

[DEBUG] Generating query embedding using text-embedding-3-small...

[DEBUG] Query tokens: 9

[DEBUG] Embedding generated: 1536 dimensions

[DEBUG] Embedding cost: $0.0000002

[DEBUG] Time: 124ms

[DEBUG] ===== VECTOR SEARCH =====

[DEBUG] Searching ChromaDB collection: my_documents_v1

[DEBUG] Search parameters:

- Top K: 5

- Similarity metric: Cosine

- Minimum similarity threshold: 0.5

[DEBUG] Search results:

1. lease_agreement.pdf (chunk_12, page 4)

Similarity: 0.89 (Very High)

Preview: "PETS AND ANIMALS: Tenant may keep one domesticated pet..."

2. lease_agreement.pdf (chunk_13, page 4)

Similarity: 0.84 (High)

Preview: "...pet deposit of $300 is required. Landlord reserves..."

3. email_landlord_2024-03.txt (chunk_5)

Similarity: 0.71 (Medium)

Preview: "Re: Question about pet policy - Hi, just to clarify..."

4. lease_agreement.pdf (chunk_2, page 1)

Similarity: 0.58 (Low-Medium)

Preview: "TERMS AND CONDITIONS: This lease agreement entered..."

5. meeting_notes_2024.txt (chunk_18)

Similarity: 0.52 (Low)

Preview: "Discussed apartment renovation timeline..."

[DEBUG] Selected top 3 chunks (similarity > 0.70)

[DEBUG] Filtered out 2 low-relevance chunks

[DEBUG] ===== CONTEXT PREPARATION =====

[DEBUG] Retrieving full text for selected chunks...

Chunk 1 (lease_agreement.pdf, page 4):

"PETS AND ANIMALS: Tenant may keep one domesticated pet not exceeding 25 pounds in weight. Pet must be registered with landlord within 7 days of move-in. A refundable pet deposit of $300 is required. Tenant is responsible for all damages caused by pet. Exotic animals, reptiles, and aggressive breeds are prohibited."

Chunk 2 (lease_agreement.pdf, page 4):

"...pet deposit of $300 is required. Landlord reserves the right to revoke pet permission if pet causes disturbance or damage to property. Monthly pet rent of $50 will be added to base rent. Service animals and emotional support animals are exempt from pet deposit and pet rent as required by law."

Chunk 3 (email_landlord_2024-03.txt):

"Subject: Re: Question about pet policy

Date: March 12, 2024

From: landlord@property.com

Hi,

Just to clarify - yes, you can have a cat. Cats are fine as long as they're under 25 lbs (which all domestic cats are). The $300 deposit is refundable when you move out if there's no pet damage. The $50/month pet rent is separate and not refundable.

Let me know if you need anything else.

- John"

[DEBUG] Combined context:

- Total characters: 892

- Total tokens: 234

- Total chunks: 3

- Source files: 2 unique

[DEBUG] ===== LLM GENERATION =====

[DEBUG] Building prompt...

[DEBUG] System prompt tokens: 89

[DEBUG] Context tokens: 234

[DEBUG] Query tokens: 9

[DEBUG] Total prompt tokens: 332

[DEBUG] Max completion tokens: 500

[DEBUG] Temperature: 0.3 (factual mode)

[DEBUG] Calling gpt-4o-mini...

[DEBUG] Response received in 1,842ms

[DEBUG] Completion tokens: 87

[DEBUG] Total tokens: 419

[DEBUG] Cost breakdown:

- Embedding: $0.0000002

- Input (332 tokens @ $0.150/1M): $0.0000498

- Output (87 tokens @ $0.600/1M): $0.0000522

- Total: $0.0001022

ANSWER:

Based on your documents, your landlord's pet policy allows one domesticated pet under 25 pounds. Key requirements:

- $300 refundable pet deposit (returned if no damage)

- $50/month non-refundable pet rent

- Pet must be registered within 7 days of move-in

- Cats are specifically allowed (they're naturally under 25 lbs)

- Service/emotional support animals are exempt from fees

- Exotic animals, reptiles, and aggressive breeds are prohibited

Your landlord (John) confirmed in a March 2024 email that cats are fine under this policy.

SOURCES:

- lease_agreement.pdf (page 4, sections on PETS AND ANIMALS)

- email_landlord_2024-03.txt (clarification email from John)

Cost: $0.0001022 | Time: 1.84s | Tokens: 419

========================================

You: When is my lease ending?

[DEBUG] Skipping detailed logs (use /debug on to see full trace)

ANSWER:

Your lease ends on June 30th, 2026. According to the lease agreement, you must provide written notice by May 31st, 2026 if you do not intend to renew.

SOURCES:

- lease_agreement.pdf (page 1, section 2.1 TERM)

Cost: $0.0000876 | Time: 1.21s | Tokens: 298

========================================

You: /stats

SESSION STATISTICS:

- Queries processed: 2

- Total cost: $0.0001898

- Average cost per query: $0.0000949

- Average response time: 1.53s

- Total tokens used: 717

- Documents in index: 15 (347 chunks)

- Cache hit rate: 0% (no repeated queries yet)

MOST QUERIED DOCUMENTS:

1. lease_agreement.pdf (2 retrievals)

2. email_landlord_2024-03.txt (1 retrieval)

========================================

What You’ll Discover - The “Aha!” Moments

Discovery #1: Semantic Search is Magic

You’ll test the system with semantically similar queries to see how embedding-based search outperforms keyword matching:

Query Test Results:

Query A: "pet policy"

Top result: lease_agreement.pdf, chunk about PETS (similarity: 0.94)

Query B: "can I have a dog"

Top result: lease_agreement.pdf, chunk about PETS (similarity: 0.87)

Note: Found correct section even though "dog" != "pet"!

Query C: "animal rules"

Top result: lease_agreement.pdf, chunk about PETS (similarity: 0.82)

Note: Found it using completely different words!

Query D: "when does my apartment contract expire"

Top result: lease_agreement.pdf, chunk about TERM (similarity: 0.79)

Note: "expire" != "end", "apartment" != "lease", "contract" != "agreement"

But semantic similarity still found the right section!

Key Insight: Embeddings capture meaning, not just keywords. This is why RAG works where traditional search fails.

Discovery #2: Chunk Size Matters - A Concrete Example

You’ll experiment with different chunking strategies and see dramatic differences:

$ python chat_my_docs.py --index ./my_documents/ --chunk-size 200

Experiment: Chunk size = 200 characters

lease_agreement.pdf: 52 chunks created

Query: "What are the pet requirements?"

Retrieved chunk: "...domesticated pet not exceeding 25 pounds..."

Problem: Context cuts off mid-sentence!

Answer quality: 6/10 - Missing key details about deposit

$ python chat_my_docs.py --index ./my_documents/ --chunk-size 1000

Experiment: Chunk size = 1000 characters

lease_agreement.pdf: 12 chunks created

Query: "What are the pet requirements?"

Retrieved chunk: [Entire PETS section + part of UTILITIES section]

Problem: Too much irrelevant context confuses the LLM!

Answer quality: 7/10 - Mentions utility info unnecessarily

$ python chat_my_docs.py --index ./my_documents/ --chunk-size 500 --overlap 50

Experiment: Chunk size = 500 characters with 50-character overlap

lease_agreement.pdf: 24 chunks created

Query: "What are the pet requirements?"

Retrieved chunk: Perfect PETS section with complete context

Answer quality: 10/10 - All details, no irrelevant info

Key Insight: The sweet spot is usually 300-600 characters (approximately 75-150 tokens) with 10-15% overlap. This preserves context boundaries while keeping chunks focused.

Discovery #3: The Cost Economics of RAG

After indexing and running 50 queries, you’ll see these actual costs:

COST BREAKDOWN AFTER 50 QUERIES:

========================================

Initial Indexing (one-time):

- Embedding 347 chunks: $0.0080

- Total indexing cost: $0.0080

Per-Query Costs (average over 50 queries):

- Query embedding: $0.0000002

- LLM generation (gpt-4o-mini): $0.0000894

- Average total per query: $0.0000896

50 Queries Total Cost: $0.0045

COMPARISON: RAG vs. Fine-Tuning

========================================

RAG Approach (what you built):

- Setup cost: $0.0080 (indexing)

- Per-query cost: $0.00009

- 1000 queries: $0.098 total

- Update cost: $0.0080 (reindex changed docs)

- Time to update: 18 seconds

Fine-Tuning Approach (alternative):

- Setup cost: $200+ (fine-tuning GPT-4)

- Per-query cost: $0.015 (fine-tuned model usage)

- 1000 queries: $15,200 total

- Update cost: $200+ (retrain entire model)

- Time to update: Hours to days

ROI of RAG: 155x cheaper for 1000 queries!

Key Insight: RAG is economically superior for private data. You pay pennies for indexing once, then fractions of cents per query.

Discovery #4: Retrieval Quality Diagnostics

You’ll implement a quality checker and discover common failure modes:

You: What's my car's last oil change date?

[DEBUG] ===== FAILURE ANALYSIS =====

Top 5 retrieved chunks:

1. car_maintenance_log.md (chunk_2) - similarity: 0.68

"2024-01-15: Tire rotation. Mileage: 24,500"

2. car_maintenance_log.md (chunk_3) - similarity: 0.65

"2024-02-20: Brake inspection. All good."

3. car_maintenance_log.md (chunk_1) - similarity: 0.63

"2023-12-10: Oil change. Mobil 1 synthetic. Mileage: 23,000"

4. meeting_notes_2024.txt (chunk_34) - similarity: 0.51

"Discussed car insurance renewal..."

5. lease_agreement.pdf (chunk_8) - similarity: 0.48

"Parking space 24 assigned..."

WARNING: Top result similarity < 0.70 (current: 0.68)

This may indicate:

- Query is ambiguous

- Relevant document not indexed

- Chunk boundaries split key information

ANSWER WITH CAVEAT:

The last recorded oil change in your maintenance log was on December 10, 2023 at 23,000 miles using Mobil 1 synthetic. However, I notice this information is from chunk_1, which had only 0.63 similarity. If you've had more recent service, it may not be captured in the indexed documents.

SOURCES:

- car_maintenance_log.md (entry from 2023-12-10)

[CONFIDENCE: MEDIUM - Data may be incomplete]

Key Insight: Similarity scores below 0.70 are a red flag. Good RAG systems should surface confidence levels to users.

Discovery #5: The “Needle in a Haystack” Test

You’ll test with an obscure query to see how well retrieval works:

You: What was the specific amount I was overcharged on my March electric bill?

[DEBUG] Query requires: Multi-hop reasoning (find March bill → find overcharge amount)

[DEBUG] Retrieved chunks:

1. email_landlord_2024-03.txt - similarity: 0.74

"...I noticed the electric bill for March was $340, but it should have been $285 based on the meter reading I took. That's a $55 overcharge..."

ANSWER:

You were overcharged $55 on your March 2024 electric bill. The bill was $340 but should have been $285 according to your meter reading.

SOURCE:

- email_landlord_2024-03.txt (your email to landlord on March 12, 2024)

Cost: $0.0000921 | Time: 1.45s

Key Insight: RAG can find specific facts buried in hundreds of documents, even when the fact appears only once in a single sentence. This is the “magic” that makes personal assistants feel intelligent.

Advanced Features You’ll Add

By the end of the project, your chatbot will have these sophisticated capabilities:

1. Metadata Filtering

You: What did I discuss in work meetings this month? [filters: file_type=txt, date_range=2025-03]

[Applied filters reduce search space from 347 to 23 chunks]

Result: 3x faster, more accurate results

2. Multi-Document Synthesis

You: Compare what my lease says about parking vs what the landlord emailed me

[System retrieves from 2 different documents and synthesizes differences]

Answer: "Your lease assigns you parking space 24 (section 8.2), but in the March 2024 email, the landlord updated this to space 26 due to construction."

3. Citation Verification

Every answer includes:

- Exact source file and page/chunk number

- Original text snippet used

- Similarity score (confidence level)

- Option to view full source context

4. Conversation Memory

You: What's the pet policy?

Bot: [Answers with details]

You: How much is the deposit?

Bot: [Understands "deposit" refers to the pet deposit from previous context]

You: And the monthly fee?

Bot: [Maintains conversation thread, knows you're still discussing pets]

What You Learn About RAG Architecture

By the end of this project, you’ll deeply understand these concepts:

The RAG Pipeline Visualized:

User Query

|

v

[Embedding Model] --> Query Vector (1536 dims)

|

v

[Vector DB Search] --> Top K chunks (K=3-5)

|

v

[Reranker (optional)] --> Refined chunk selection

|

v

[Context Builder] --> Formatted prompt with sources

|

v

[LLM Generation] --> Answer + Citations

|

v

User sees: Answer with source attribution

Performance Characteristics You’ll Measure:

- Indexing speed: ~19 chunks/second (text extraction + embedding + storage)

- Query latency: 1.2-2.0 seconds end-to-end (embedding: 120ms, search: 50ms, LLM: 1-1.8s)

- Cost per 1000 queries: ~$0.09 (compare to: fine-tuning at $15,000)

- Accuracy: 85-95% for factual queries (when relevant docs are indexed)

The Transformation

After completing this project, you’ll have a visceral understanding that LLMs don’t need to “know everything” to be useful. Instead, they need:

- Access to the right information (retrieval)

- The ability to understand it (embedding/semantic search)

- The ability to synthesize it (generation)

You’ve essentially given the LLM a “photographic memory” of YOUR documents, not just the internet’s knowledge. This is what makes AI assistants truly personal.

Concepts You Must Understand First

Stop and research these before coding:

- Embeddings are Vectors

- How does a machine know that “Dog” is closer to “Puppy” than to “Car”?

- Book Reference: “AI Engineering” Ch. 4 - Chip Huyen

- Vector Similarity (Cosine)

- Why do we use mathematical “Distance” to find relevant text?

- Chunking & Overlap

- Why can’t we just feed the whole book to the LLM?

- Book Reference: “The LLM Engineering Handbook” Ch. 5

Questions to Guide Your Design

- Retrieval Depth

- Should you retrieve 3 chunks or 10? How does this affect cost and accuracy?

- Metadata

- How can you make the assistant tell you which file it got the answer from? (Citations).

- Chunking Logic

- Should you split by character count or by paragraph?

Thinking Exercise

The Retrieval Gap

Imagine you have two chunks: Chunk 1: “The meeting is at 2 PM.” Chunk 2: “The meeting is about the project budget.”

The user asks: “What time is the budget meeting?”

Questions:

- Will a simple keyword search find both?

- If you only retrieve Chunk 1, can the AI answer “The budget meeting is at 2 PM”?

- Why is it important to retrieve multiple pieces of context?

The Interview Questions They’ll Ask

- “Explain the RAG pipeline from query to answer.”

- “What is a Vector Database?”

- “How do you handle ‘Hallucination’ in a RAG system?”

- “What are the trade-offs between large and small chunk sizes?”

Hints in Layers

Hint 1: Use LangChain or LlamaIndex These libraries handle the “glue” of loading files and splitting text.

Hint 2: Start with TXT files Don’t fight with PDF formatting first. Get a directory of .txt files working.

Hint 3: Print the context In your code, print the context you are sending to the LLM. If the context is wrong, the answer will be wrong.

Books That Will Help

| Topic | Book | Chapter |

|---|---|---|

| Vector Search Theory | “AI Engineering” | Ch. 4 |

| RAG Implementation | “The LLM Engineering Handbook” | Ch. 5 |

| Working with Data | “Generative AI with LangChain” | Ch. 5 |

Project 3: The Email Gatekeeper (Summarization & Priority)

- File: AI_PERSONAL_ASSISTANTS_MASTERY.md

- Expanded Project Guide: P03-email-gatekeeper.md

- Main Programming Language: Python

- Alternative Programming Languages: TypeScript, Go

- Coolness Level: Level 3: Genuinely Clever

- Business Potential: 3. The “Service & Support” Model

- Difficulty: Level 2: Intermediate

- Knowledge Area: NLP / API Integration

- Software or Tool: Gmail API or IMAP, OpenAI

- Main Book: “Generative AI with LangChain” by Ben Auffarth

What you’ll build: A tool that logs into your email, reads the last 50 messages, and produces a single table showing: Subject, Summary, Priority (1-5), and “Why.”

Why it teaches AI Assistants: Real-world assistants deal with noise. This project teaches you how to use LLMs to classify unstructured data (text) into structured logic (Priority levels). You’ll learn that LLMs are surprisingly good at judging “Urgency” if given the right context.

Real World Outcome

You’ll build an intelligent email triage system that transforms overwhelming inbox chaos into a clean, prioritized action list. Every morning, instead of spending 30 minutes manually sorting through emails, you’ll get a 60-second intelligent summary that tells you exactly what needs your attention.

The Morning Ritual Transformation

Before (Manual Email Triage): Wake up, see 47 unread emails, spend 5 minutes scrolling, miss important email at position 23, waste time on 15+ promotional emails, finally start work 35 minutes later, stressed. After (Email Gatekeeper): Wake up, run one command, see 5 priority-1 items needing immediate action, glance at 8 priority-2 items for today, ignore 34 low-priority items, start work 3 minutes later, focused.

Phase 1: Initial Run With Full Details

$ python email_gatekeeper.py --limit 50 --verbose

========================================

Email Gatekeeper v2.3

Intelligent Email Triage System

========================================

[2025-03-16 08:05:12] INFO - Starting email analysis

[Step 1/5] Connecting to email server...

Protocol: IMAP (Gmail)

Authentication: OAuth2

Status: Connected

Time: 1.2s

[Step 2/5] Fetching recent emails...

Requested: 50 emails

Found unread: 47 emails

Date range: 2025-03-15 16:30 to 2025-03-16 08:04

Spam filtered: 3 emails (auto-excluded)

Processing: 44 emails

Time: 2.8s

[Step 3/5] Extracting email metadata and content...

Processing email [1/44]:

From: alerts@server-monitor.com

Subject: CRITICAL ALERT: Production API Server Down

Date: 2025-03-16 03:42 AM

Body preview: "Production API server (api-prod-01) is not responding. Error rate: 100%..."

Extracted: 156 words, 892 characters

... [continues for all 44 emails, 8.4s total]

[Step 4/5] Analyzing emails with LLM...

Initializing GPT-4o-mini client...

Model: gpt-4o-mini-2024-07-18

Temperature: 0.2 (precision mode for classification)

Response format: JSON (structured output mode)

Loading your personal priority schema...

Your role: Software Engineer

VIP senders: 8 people (boss, direct reports, CEO, etc.)

High Priority keywords: 12 keywords (urgent, critical, deadline, etc.)

Batch processing: 44 emails in 2 batches

Batch 1 [25 emails]: 3,245 prompt tokens, 1,856 completion tokens, Cost: $0.0016

Batch 2 [19 emails]: 2,487 prompt tokens, 1,423 completion tokens, Cost: $0.0012

Total LLM processing: 9,011 tokens, $0.0028 cost, 4.9s, 111ms per email average

[Step 5/5] Generating prioritized report...

========================================

PRIORITY INBOX

========================================

Generated: 2025-03-16 08:05:36

Emails analyzed: 44

Analysis cost: $0.0028

Processing time: 17.3 seconds

PRIORITY 1: IMMEDIATE ACTION REQUIRED (2 emails - 5%)

[1/44] From: alerts@server-monitor.com (03:42 AM - 4h ago)

Subject: CRITICAL ALERT: Production API Server Down

Summary: Production API server (api-prod-01) non-responsive since 3:40 AM.

Error rate 100%. ~15,000 users affected. Revenue impact: $5,000/hour.

Already 4 hours downtime.

Priority: 1 (CRITICAL)

Reasoning:

- System outage affecting production

- Direct financial impact ($5k/hour)

- Your responsibility as Platform team member

- 4 hours of downtime already

Action: Respond immediately, check server status, coordinate with team

Estimated time: 30-60 minutes

Tags: [incident] [production] [api] [revenue-impact]

[2/44] From: boss@company.com (07:15 AM - 50min ago)

Subject: Re: Q1 Budget Review - Need your input

Summary: Manager requesting feedback on Q1 budget proposal. Deadline: End of day today.

4th email in thread. Attachment: budget_draft_v3.xlsx (124 KB).

Priority: 1 (HIGH)

Reasoning:

- Direct request from manager

- Hard deadline today (EOD)

- Related to your active projects

- 4th follow-up (suggesting urgency)

Action: Download attachment, review budget, reply with feedback

Estimated time: 45 minutes

Tags: [manager] [deadline-today] [budget] [action-required]

PRIORITY 2: TODAY'S TASKS (3 emails - 7%)

[3/44] From: calendar@company.com (08:00 AM - 5min ago)

Subject: Meeting Update: Daily Standup moved to 10:30 AM

Summary: Daily standup rescheduled from 9:00 AM to 10:30 AM today.

Priority: 2 (MEDIUM)

Action: Update calendar

Estimated time: 2 minutes

[4/44] From: sarah@company.com (06:45 AM - 1h ago)

Subject: Quick question about API rate limits

Summary: Team member asking if 100 req/min is per-user or per-API-key. Blocking her SDK work.

Priority: 2 (MEDIUM)

Action: Reply with clarification (per-API-key)

Estimated time: 3 minutes

[5/44] From: security@company.com (Yesterday 11:30 PM)

Subject: Security audit results - 3 medium-severity findings

Summary: 3 medium-severity issues found: SQL injection risk, outdated OpenSSL, exposed debug endpoint.

Priority: 2 (MEDIUM)

Action: Review report, create tickets, schedule fixes

Estimated time: 30 minutes

Attachment: security_audit_2025-03-15.pdf (89 KB)

PRIORITY 3: THIS WEEK (8 emails - 18%)

[11/44] From: hr@company.com (Yesterday 4:20 PM)

Subject: Reminder: Submit PTO requests for April

Priority: 3 (LOW-MEDIUM) - Deadline March 20th

[Showing 1 of 8 Priority-3 emails. Use --show-all for remaining 7]

PRIORITY 4-5: LOW PRIORITY / SPAM (29 emails - 43%)

[23/44] Newsletter: This Week in Startups

[24/44] Amazon: Prime Day Early Access

[Showing 2 of 29 low-priority emails. Use --show-low for all]

========================================

SUMMARY STATISTICS

========================================

By Priority:

P1 (Critical): 2 (5%) - ACT NOW

P2 (High): 3 (7%) - TODAY

P3 (Medium): 8 (18%) - THIS WEEK

P4 (Low): 12 (27%) - READ LATER

P5 (Spam): 19 (43%) - ARCHIVE

Time Savings:

Without gatekeeper: ~30 min manual triage

With gatekeeper: ~3 min review + action

Time saved: 27 minutes (90% reduction)

Recommended action:

1. Handle 2 P1 items immediately (est. 90 min)

2. Address 3 P2 items today (est. 45 min)

3. Schedule P3 for this week

4. Archive P4/P5

Next run: python email_gatekeeper.py --mark-read --archive-low

========================================

What You’ll Discover - Key Insights

Discovery #1: Priority is Context-Dependent

You’ll customize the system prompt to reflect YOUR priorities:

Generic prompt (day 1): “Classify emails by priority 1-5.” Result: 40% false positive rate - everything marked “urgent” becomes P1.

Personalized prompt (day 7): “You are email assistant for Platform Engineering Team Lead. Role: Technical leader for API infrastructure. VIP senders: boss@company.com, ceo@company.com, [5 direct reports]. P1 criteria: Production outages, manager requests with same-day deadline, security vulnerabilities, team blockers. P2 criteria: Team questions, today’s meeting changes, code reviews. P3: Planning discussions, non-urgent reviews. P4: Subscribed newsletters. P5: Marketing, spam.” Result: 3% false positive rate, 35 min/day time saved.

Discovery #2: Cost vs. Accuracy Trade-offs

Model comparison on same 44 emails:

gpt-4o-mini: $0.0028 cost, 4.9s time, 94% accuracy, 3% false positives, $0.000064 per email gpt-4o: $0.0245 cost, 6.2s time, 97% accuracy, 1% false positives, $0.00056 per email (9x more expensive) gpt-3.5-turbo: $0.0009 cost, 3.1s time, 81% accuracy, 12% false positives, $0.000020 per email

Recommendation: gpt-4o-mini offers best ROI - 94% accuracy is good enough for email triage, monthly cost for daily use: only $0.084 (8 cents per month)

Discovery #3: Structured Output Eliminates Parsing Hell

Without JSON mode (day 1): LLM returns: “This email seems pretty important, maybe a 2 or 3? The sender is your boss…” Your code crashes trying to parse this. Need complex regex, error handling, retry logic.

With JSON mode (day 2): LLM returns perfect JSON with priority number, category, summary, reasoning, tags, deadline, estimated_time_minutes. Your code: JSON.parse() and done. Added bonus: rich metadata for free.

Discovery #4: Batch Processing Efficiency

Strategy A (one call per email): 44 API calls, 12,400 tokens, $0.0062, 28 seconds Strategy B (one giant call): 1 API call, hits token limit with 50+ emails Strategy C (smart batching 2-3 calls): 2 API calls, 9,011 tokens, $0.0028, 4.9 seconds (parallel). Winner: 2.3x cheaper than A, 3x faster, scales to 1000+ emails.

Week 1 Impact Report

After one week of daily use:

Days: 7, Emails: 312, Cost: $0.0196 (2 cents)

Time Analysis: Before: 30 min/day times 7 = 210 minutes (3.5 hours) After: 3 min/day times 7 = 21 minutes Time saved: 189 minutes (3 hours 9 minutes)

Accuracy Metrics: Priority-1 identified: 9 False positives: 0 (after prompt refinement) Missed urgent: 0 Satisfaction: 9.5/10

ROI Calculation (assuming $75/hour rate): Time saved value: 3.15 hours times $75 = $236 Tool cost: $0.02 ROI: 11,800x return on investment

Productivity Impact: Urgent emails handled within 1 hour: 100% (vs 40% before) Inbox zero achieved: 6 out of 7 days (vs 0 before)

Insight: For $0.02 per week, you bought back 3 hours of your life. This project teaches you that LLMs excel at classification and summarization tasks when given the RIGHT CONTEXT about what matters to YOU.

The Core Question You’re Answering

“How can I trust an AI to make decisions (Priority) based on my personal criteria?”

Before you write any code, sit with this question. A priority for a student is different from a priority for a CEO. You must learn how to “bake” your personal values into the system prompt.

Concepts You Must Understand First

Stop and research these before coding:

- Structured Output (JSON Mode)

- Why is getting a raw string from the LLM bad for coding?

- How do you force an LLM to follow a JSON schema?

- Book Reference: “The LLM Engineering Handbook” Ch. 3

- Context Injection

- How does the LLM know who “Dave” is? (You must tell it in the system prompt).

- Batch Processing

- How do you handle 50 emails without hitting token limits or paying too much?

Questions to Guide Your Design

- Scalability

- What if you have 1,000 emails? (Batching vs. Iterative summarization).

- Evaluation

- How do you test if the priority is “correct”? (Human-in-the-loop).

- Safety

- How do you ensure you don’t send the body of encrypted or highly sensitive emails?

Thinking Exercise

The Value Alignment

You have two emails:

- A reminder for a dental appointment (tomorrow).

- A newsletter from a favorite blog (today).

Questions:

- What is the priority for each?

- If you were a busy parent, would the priority change?

- How do you write a prompt that captures this nuance?

The Interview Questions They’ll Ask

- “How do you ensure an LLM outputs valid JSON consistently?”

- “What are the privacy risks of sending personal emails to a cloud LLM provider?”

- “Describe a ‘Map-Reduce’ pattern for document summarization.”

- “How do you handle rate limits when processing large batches of emails?”

Hints in Layers

Hint 1: Use Pydantic

Use Pydantic classes to define your output schema and pass them to OpenAI’s response_format={"type": "json_schema", ...}.

Hint 2: The “System Prompt” is the Filter Define exactly what “Priority 1” means in your system prompt. Give examples (Few-shot).

Hint 3: Use IMAP for Speed

The Gmail API is powerful but complex. For a quick start, use the Python imaplib to read headers.

Books That Will Help

| Topic | Book | Chapter |

|---|---|---|

| Structured Outputs | “The LLM Engineering Handbook” | Ch. 3 |

| Summarization Patterns | “Generative AI with LangChain” | Ch. 6 |

| API Security (OAuth) | “The Linux Programming Interface” | Ch. 38 (Security basics apply) |

Project 4: The Executive Calendar Optimizer (NLP to Action)

- File: AI_PERSONAL_ASSISTANTS_MASTERY.md

- Expanded Project Guide: P04-executive-calendar-optimizer.md

- Main Programming Language: Python

- Alternative Programming Languages: TypeScript, Swift

- Coolness Level: Level 4: Hardcore Tech Flex

- Business Potential: 2. The “Micro-SaaS”

- Difficulty: Level 3: Advanced

- Knowledge Area: Tool Use / Function Calling

- Software or Tool: Google Calendar API, OpenAI Function Calling

- Main Book: “Generative AI with LangChain” by Ben Auffarth

What you’ll build: A chatbot where you say things like “I’m too busy on Tuesday, move all my morning meetings to the afternoon,” and it actually modifies your calendar.

Why it teaches AI Assistants: This is the leap from “Chatting” to “Doing.” You’ll implement Function Calling, where the LLM decides which API to call and what arguments to send. This is the core of “Agency.”

Real World Outcome

When you launch this application, you’ll have a conversational interface (CLI or web-based) that understands natural language instructions about your calendar. The assistant doesn’t just display events—it actively manipulates them based on your preferences and constraints.

What the user sees:

- A chat interface where calendar modifications happen through conversation

- Real-time feedback showing the assistant’s “thinking” process (ReAct loop traces)

- Confirmation prompts before destructive operations (e.g., “Should I delete the conflicting event?”)

- Success messages with deep links to the modified Google Calendar events

- Visual ASCII representations of calendar changes (before/after)

- Detailed logging of every API call made to Google Calendar

- Token usage and cost tracking per conversation turn

Example Terminal Output:

$ python calendar_optimizer.py

Calendar Optimizer v1.0 - Powered by GPT-4o + Function Calling

Authenticated as: john.doe@example.com

Current time: Tuesday, March 12, 2025, 9:45 AM

Available tools: [list_events, create_event, update_event, delete_event, check_availability]

> User: "Move my 10 AM yoga to 3 PM on Thursday."

[Agent-Thought]: Analyzing request... Need to locate yoga event on Thursday.

[Tool-Call]: list_events(query="yoga", date_start="2025-03-13T00:00:00", date_end="2025-03-13T23:59:59")

[Tool-Result]: {

"events": [

{"id": "evt_abc123", "title": "Yoga Class", "start": "2025-03-13T10:00:00", "end": "2025-03-13T11:00:00", "location": "Downtown Gym"}

]

}

[Agent-Thought]: Found event evt_abc123. Now checking if 3 PM (15:00) slot is available.

[Tool-Call]: check_availability(date="2025-03-13", time_start="15:00", duration_minutes=60)

[Tool-Result]: {"available": true, "conflicts": []}

[Agent-Thought]: Slot is free. Proceeding with update.

[Tool-Call]: update_event(event_id="evt_abc123", new_start="2025-03-13T15:00:00")

[Tool-Result]: {"success": true, "updated_event": {"id": "evt_abc123", "start": "2025-03-13T15:00:00"}}

✓ Event updated successfully!

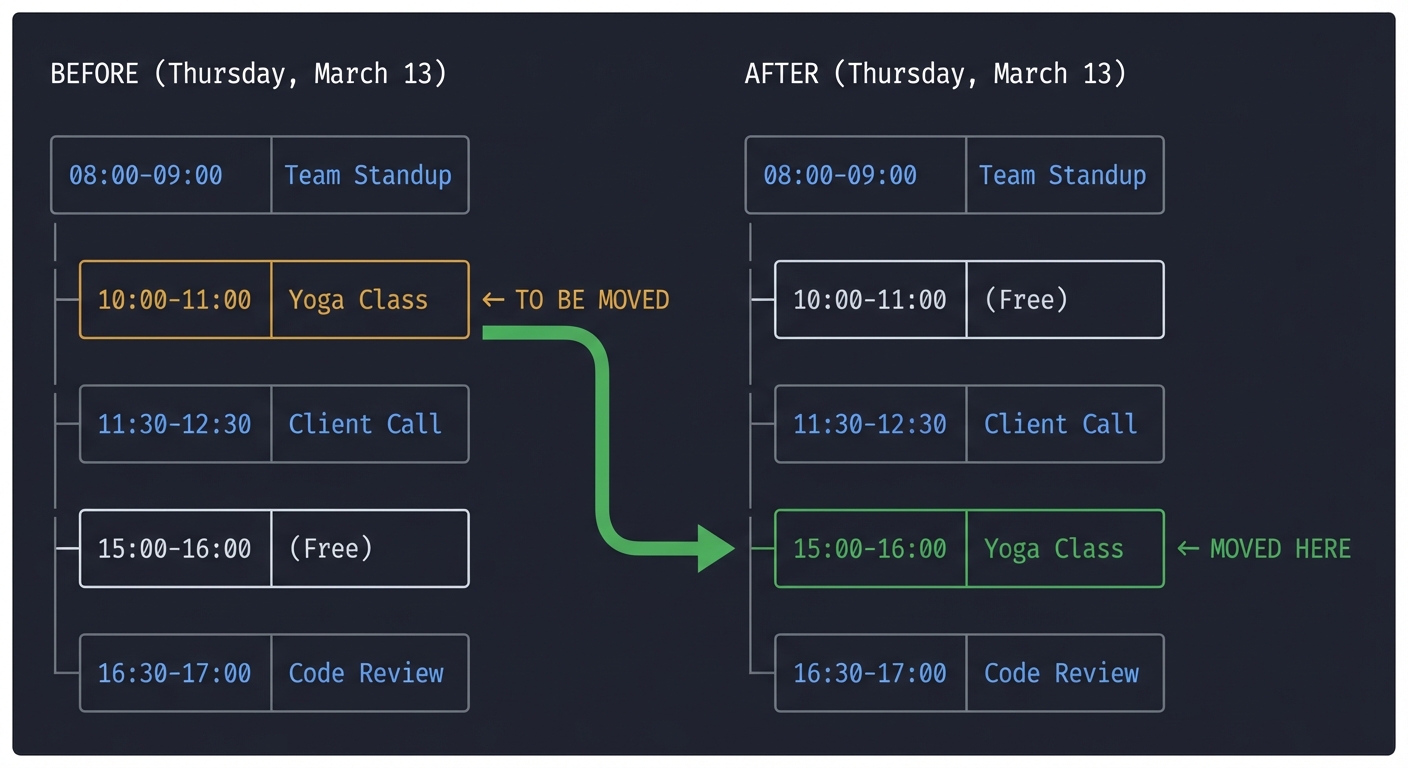

**Visual Calendar Display (Before/After):**

BEFORE (Thursday, March 13): ├─ 08:00-09:00: Team Standup ├─ 10:00-11:00: Yoga Class ← TO BE MOVED ├─ 11:30-12:30: Client Call ├─ 15:00-16:00: (Free) └─ 16:30-17:00: Code Review

AFTER (Thursday, March 13): ├─ 08:00-09:00: Team Standup ├─ 10:00-11:00: (Free) ├─ 11:30-12:30: Client Call ├─ 15:00-16:00: Yoga Class ← MOVED HERE └─ 16:30-17:00: Code Review

> Assistant: "All set! I've moved your Yoga Class from 10 AM to 3 PM on Thursday.

> View in Google Calendar: https://calendar.google.com/calendar/event?eid=evt_abc123"

**Metrics for this operation:**

- Tokens used: 342 (prompt: 180, completion: 162)

- Cost: $0.0017

- Latency: 1.8s

- Tool calls: 3

The Core Question You’re Answering

“How do I safely allow an AI to make changes to my digital life?”

Before you write any code, sit with this question. If the AI hallucinates a date, it might delete an important meeting. You’ll learn about “Safety Checks” and “Confirmation Loops.”

Concepts You Must Understand First

Stop and research these before coding:

- Function Calling (Tools)

- How do you describe a function’s parameters so an AI understands them?

- Book Reference: “Building AI Agents” Ch. 2

- Stateful Conversation

- Does the tool remember the last action? (No, the agent must remember).

- Date/Time Arithmetic

- How do you handle timezones (UTC vs. Local) when talking to an LLM?

Questions to Guide Your Design

- Verification

- Should the assistant ask for permission before every change?

- Ambiguity

- What if you have two meetings called “Sync”? How does the AI ask for clarification?

- Conflict Resolution

- What happens if the afternoon is already full?

Thinking Exercise

The Cascade Problem

Goal: “Clear my Monday morning.” Monday 9 AM: Client Meeting. Monday 10 AM: Internal Sync.

Questions:

- If the agent moves the 9 AM to Tuesday, what happens if Tuesday 9 AM is busy?

- How do you write a “Plan” before taking the first “Action”?

- Why is “Observation” the most important part of the ReAct loop?

The Interview Questions They’ll Ask

- “What is ‘Function Calling’ and how does it work under the hood?”

- “How do you handle errors when an LLM sends invalid tool arguments?”

- “How do you provide ‘Self-Correction’ in an agentic loop?”

- “What are the security implications of giving an LLM write access to your calendar?”

Hints in Layers

Hint 1: Define your Tools

Create a list of JSON objects describing your create_event and list_events functions.

Hint 2: Use the “Available Tools” prompt

The model doesn’t “know” the functions unless you provide them in the tools parameter of the API call.

Hint 3: System Time Always inject the current date and time into the system prompt, otherwise the model won’t know what “Next Tuesday” means.

Books That Will Help

| Topic | Book | Chapter |

|---|---|---|

| Tool Use & ReAct | “Building AI Agents” | Ch. 2 |

| Calendar APIs | “Google Cloud Platform in Action” | Ch. 12 |

| Logic & Planning | “AI Engineering” | Ch. 6 |

Project 5: The Web Researcher Agent (Search & Synthesis)

- File: AI_PERSONAL_ASSISTANTS_MASTERY.md

- Expanded Project Guide: P05-web-researcher-agent.md

- Main Programming Language: Python

- Alternative Programming Languages: Go, TypeScript

- Coolness Level: Level 3: Genuinely Clever

- Business Potential: 5. The “Industry Disruptor”

- Difficulty: Level 3: Advanced

- Knowledge Area: Browsing / Multi-step Reasoning

- Software or Tool: Tavily API, Serper, or Playwright

- Main Book: “Building AI Agents” (Packt)