AI Agents - From Black Boxes to Autonomous Systems

Goal: Deeply understand the architecture of AI agents—not just how to prompt them, but how to design robust, closed-loop control systems that reason, act, remember, and fail predictably. You will move from “magic black box” thinking to engineering autonomous systems with verifiable invariants, mastering the transition from transaction to iterative process.

Why AI Agents Matter

In 2023, we used LLMs as Zero-Shot or Few-Shot engines: you ask, the model answers. This was the “Mainframe” era of AI—one-way transactions. Then came Tool Calling, allowing models to interact with the world. But a single tool call is still just a “stateless” transaction.

AI Agents represent the shift from transaction to process.

According to Andrew Ng, agentic workflows—where the model iterates on a solution—can make a smaller model outperform a much larger model on complex tasks. This is because agents introduce iteration, critique, and correction.

However, the “Billion Dollar Loop” risk is real. In a world where agents can write code, access bank APIs, and manage infrastructure, the cost of a “hallucination” is no longer just a wrong word—it’s a production outage or a security breach.

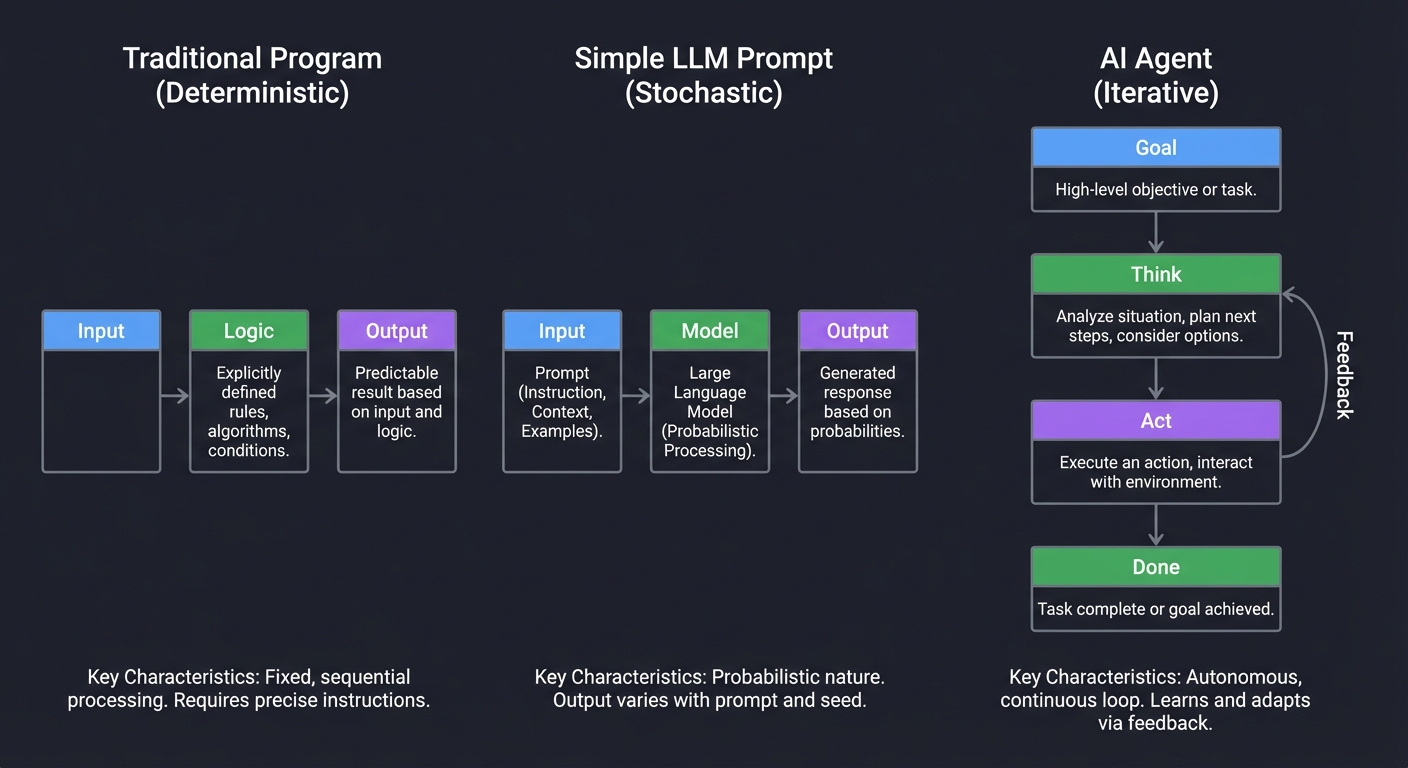

The Agentic Shift: From Pipeline to Loop

Traditional Program Simple LLM Prompt AI Agent

(Deterministic) (Stochastic) (Iterative)

↓ ↓ ↓

[Input] → [Logic] → [Output] [Input] → [Model] → [Output] [Goal]

[ ↓ ]

[Think] ← Feedback

[ ↓ ] ↑

[ Act ] ────┘

[ ↓ ]

[ Done]

Every major tech company is now pivoting from “Chatbots” to “Agents.” Understanding how to build them is understanding the future of software engineering where code doesn’t just process data—it makes decisions.

Enterprise Adoption in 2025

The AI agent revolution is not hypothetical—it’s happening now:

- Market Growth: The global AI agent market reached $7.38 billion in 2025, nearly doubling from $3.7 billion in 2023, and is projected to hit $103.6 billion by 2032 (45.3% CAGR).

- Enterprise Penetration: 85% of enterprises are expected to implement AI agents by end of 2025, with 79% already having deployed them at some level.

- ROI Reality: Organizations report an average ROI of 171% from agentic AI deployments, with U.S. enterprises forecasting 192% returns.

- Operational Impact: Businesses using AI agents report 55% higher operational efficiency and 35% cost reductions.

- Workflow Integration: 64% of AI agent deployments focus on automating workflows across support, HR, sales ops, and admin tasks.

- Future Momentum: 96% of IT leaders plan to expand their AI agent implementations during 2025.

Sources: AI Agents Statistics 2025, Enterprise State of AI, Agentic AI Adoption Trends

This isn’t “future tech”—it’s infrastructure being deployed in production right now. Understanding agent architecture is understanding the next 10 years of software engineering.

Prerequisites & Background Knowledge

Essential Prerequisites (Must Have)

Before starting these projects, you should be comfortable with:

- Programming Fundamentals

- Strong proficiency in Python or JavaScript/TypeScript

- Experience with async/await patterns and concurrent execution

- Understanding of object-oriented programming and design patterns

- Familiarity with JSON schema and data validation

- API Integration Experience

- Making HTTP requests and handling responses

- Working with REST APIs

- Understanding authentication (API keys, OAuth)

- Basic error handling and retry logic

- Large Language Model Basics

- Basic understanding of LLM prompting

- Familiarity with at least one LLM API (OpenAI, Anthropic, etc.)

- Understanding of temperature, tokens, and context windows

- Awareness of hallucination risks

- Version Control & Development Environment

- Git basics (commit, branch, merge)

- Command-line comfort

- Environment variables and secrets management

- Package managers (pip, npm)

Helpful But Not Required

You’ll learn these concepts through the projects:

- Advanced prompt engineering techniques

- Vector databases and embeddings

- Graph databases and knowledge representation

- Formal verification and invariant checking

- Distributed systems concepts

- Testing strategies for stochastic systems

Self-Assessment Questions

Check your readiness:

- Can you write a Python script that calls an API and handles errors gracefully?

- Do you understand what JSON Schema is and why validation matters?

- Have you used an LLM API programmatically (not just ChatGPT web interface)?

- Can you explain the difference between deterministic and stochastic systems?

- Are you comfortable reading technical papers and extracting key concepts?

- Do you understand what a feedback loop is in a control system?

If you answered “no” to 3+ questions: Start with Project 1 and proceed slowly. Spend extra time on the “Concepts You Must Understand First” sections.

If you answered “yes” to all: You’re ready. Consider starting with Project 2 (Minimal ReAct Agent) and referencing Project 1 only if needed.

Development Environment Setup

Required Tools:

# Python environment (recommended: Python 3.10+)

pip install openai anthropic pydantic python-dotenv requests

# Or JavaScript/TypeScript

npm install openai @anthropic-ai/sdk zod dotenv axios

Recommended Tools:

- IDE: VS Code with Python/JavaScript extensions

- API Key Management:

.envfile withpython-dotenvor equivalent - Database (for later projects): SQLite (built-in) or PostgreSQL

- Vector Store (Project 4+): Chroma, Pinecone, or Weaviate

- Observability (Project 9+): LangSmith, LangFuse, or custom logging

API Costs:

Most projects can be completed for $5-20 in API costs using GPT-4o-mini or Claude 3.5 Haiku. Budget $50-100 if using GPT-4 or Claude 3.5 Opus extensively.

Time Investment

Per-project time estimates:

| Project Level | Time Investment | Complexity |

|---|---|---|

| Projects 1-3 | 4-8 hours each | Foundation - implement core loop |

| Projects 4-6 | 8-16 hours each | Intermediate - add memory, planning, safety |

| Projects 7-9 | 12-24 hours each | Advanced - self-correction, multi-agent, eval |

| Project 10 | 20-40 hours | Capstone - integrate everything |

Total sprint time: 100-200 hours for all 10 projects (3-6 months part-time).

Important Reality Check

What these projects are NOT:

- ❌ Copy-paste tutorials with complete solutions

- ❌ “Build ChatGPT in 50 lines” type projects

- ❌ Production-ready systems you can deploy immediately

- ❌ Shortcuts to avoid reading papers and documentation

What these projects ARE:

- ✅ Deep explorations that force you to grapple with core challenges

- ✅ Learning vehicles that build mental models through struggle

- ✅ Foundations for understanding production agent frameworks (LangGraph, CrewAI, etc.)

- ✅ Preparation for building real-world agent systems professionally

Expected difficulty curve:

- Projects 1-2: You’ll feel confident—”This is easier than I thought!”

- Projects 3-5: You’ll struggle with edge cases and debugging stochastic systems

- Projects 6-8: You’ll have multiple “aha!” moments as patterns click

- Projects 9-10: You’ll understand why production frameworks exist and what they’re solving

This is normal. The struggle is the learning.

Core Concept Analysis

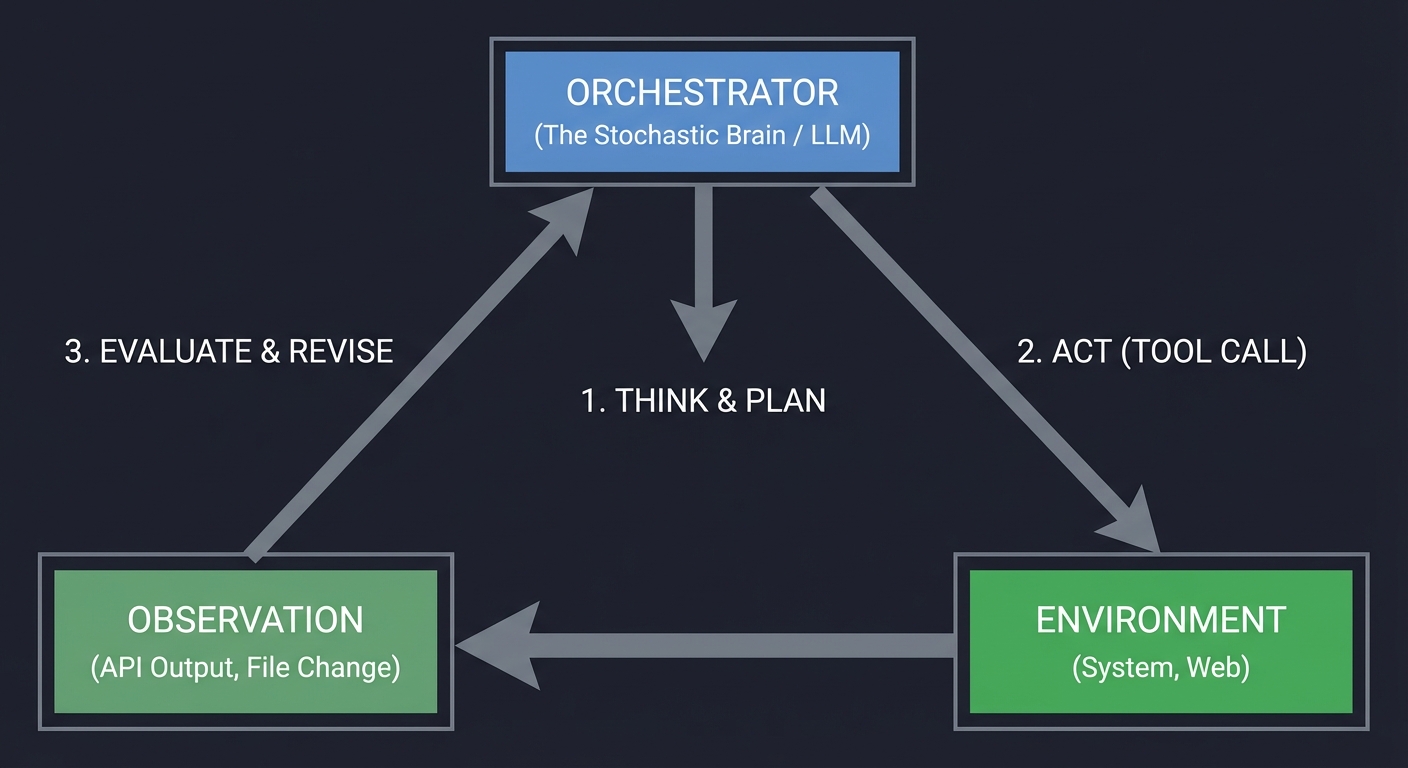

1. The Agent Loop: A Closed-Loop Control System

An agent is fundamentally a control loop, similar to a PID controller or a kernel scheduler. Unlike a simple script, it observes the environment and adjusts its next action based on feedback.

┌────────────────────────────────┐

│ ORCHESTRATOR │

│ (The Stochastic Brain / LLM) │

└───────────────┬────────────────┘

│

1. THINK & PLAN

│

▼

┌────────────────┐ 2. ACT (TOOL CALL)

│ OBSERVATION │ ┌───────────────┐

│ (API Output, │◄─────────────────┤ ENVIRONMENT │

│ File Change) │ │ (System, Web) │

└───────┬────────┘ └───────────────┘

│

3. EVALUATE & REVISE

│

└───────────────────────────────────┘

Key insight: The loop is the agent. If you don’t have a loop that processes feedback, you don’t have an agent; you have a pipeline. Book Reference: “AI Agents in Action” Ch. 3: “Building your first agent”.

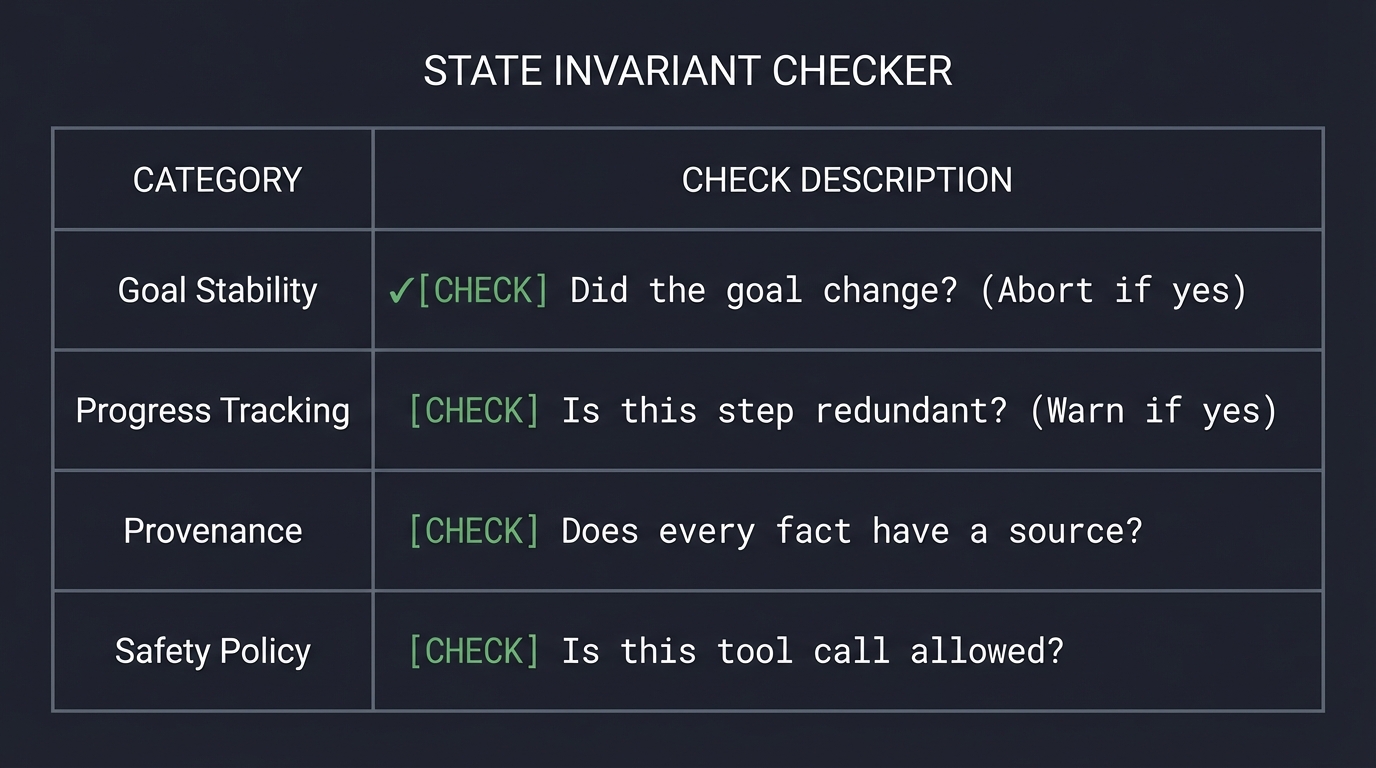

2. State Invariants: The Guardrails of Correctness

In traditional programming, an invariant is a condition that is always true. In AI agents, we must enforce “State Invariants” to prevent the model from drifting into hallucination. We treat the Agent’s state as a contract.

STATE INVARIANT CHECKER

─────────────────────────────────────────────────────────────

Goal Stability | [CHECK] Did the goal change? (Abort if yes)

─────────────────────────────────────────────────────────────

Progress Tracking | [CHECK] Is this step redundant? (Warn if yes)

─────────────────────────────────────────────────────────────

Provenance | [CHECK] Does every fact have a source?

─────────────────────────────────────────────────────────────

Safety Policy | [CHECK] Is this tool call allowed?

─────────────────────────────────────────────────────────────

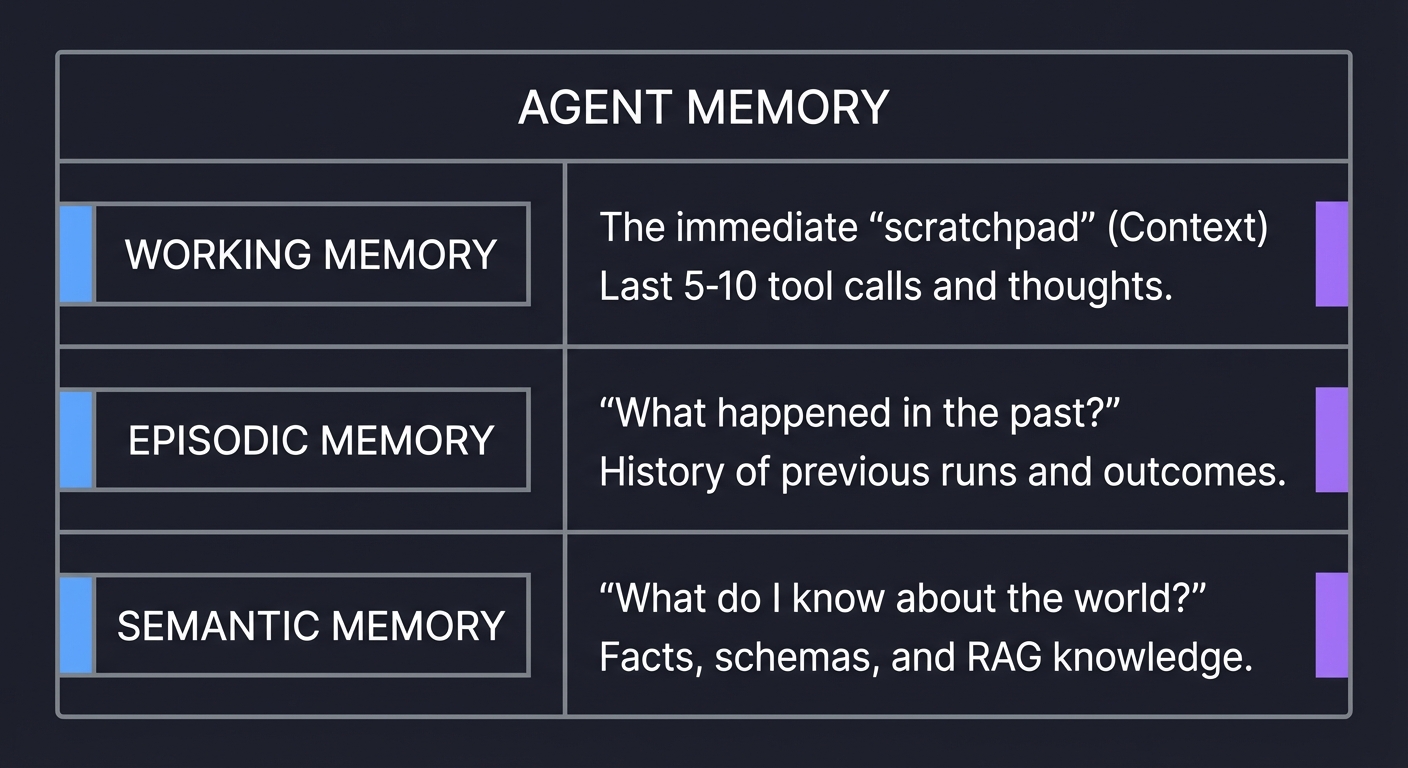

3. Memory Hierarchy: Episodic vs. Semantic

Agents need to remember what they’ve done. We model this after human cognitive architecture, moving from volatile “Working Memory” to persistent “Semantic Memory.”

┌─────────────────────────────────────────────────────────────┐

│ AGENT MEMORY │

├─────────────────────────────────────────────────────────────┤

│ WORKING MEMORY │ The immediate "scratchpad" (Context) │

│ │ Last 5-10 tool calls and thoughts. │

├──────────────────┼──────────────────────────────────────────┤

│ EPISODIC MEMORY │ "What happened in the past?" │

│ │ History of previous runs and outcomes. │

├──────────────────┼──────────────────────────────────────────┤

│ SEMANTIC MEMORY │ "What do I know about the world?" │

│ │ Facts, schemas, and RAG knowledge. │

└──────────────────┴─────────────────────────────────────────────┘

Book Reference: “Building AI Agents with LLMs, RAG, and Knowledge Graphs” Ch. 7.

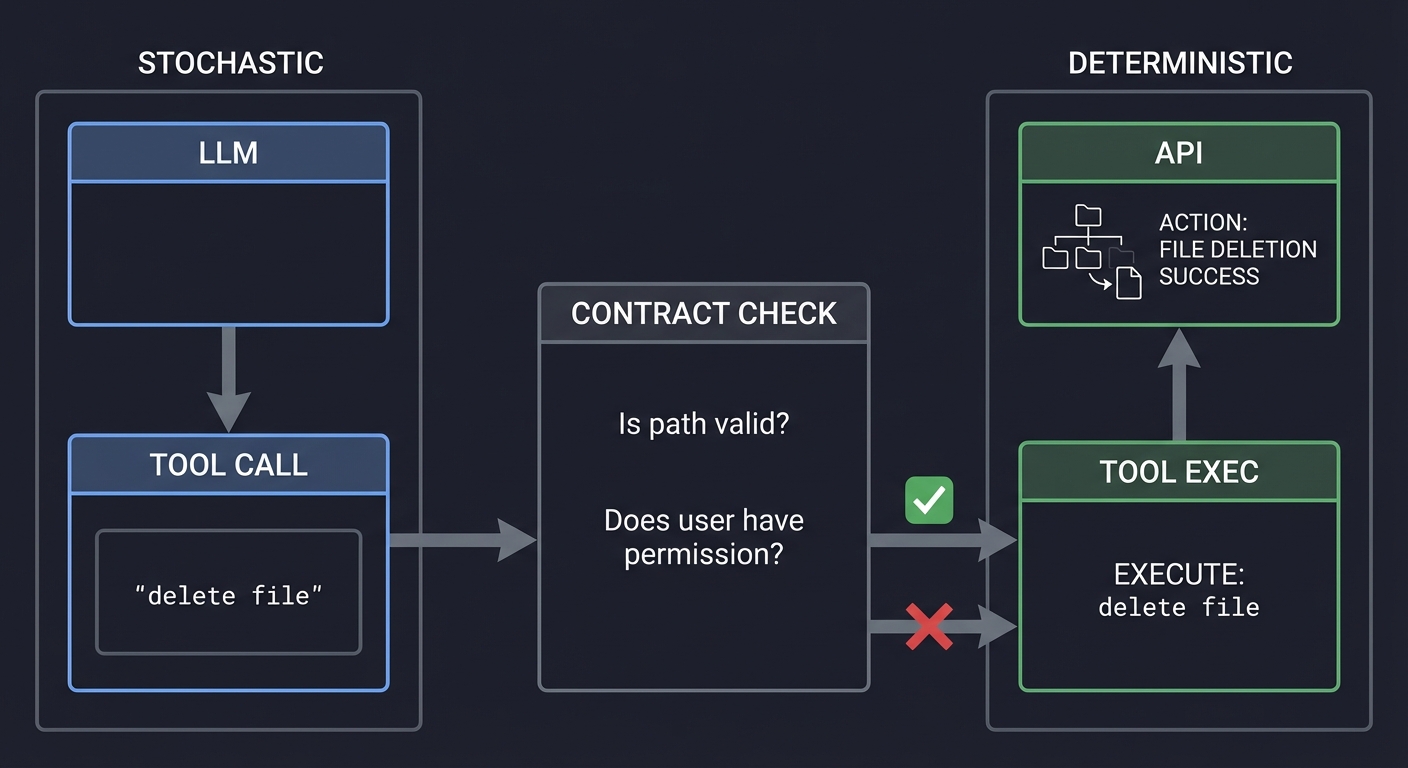

4. Tool Contracts: Deterministic Interfaces

You cannot trust an LLM to call a tool correctly 100% of the time. You must enforce Tool Contracts using JSON Schema. This acts as a firewall between the stochastic LLM and the deterministic API.

STOCHASTIC DETERMINISTIC

[ LLM ] [ API ]

│ ↑

▼ │

[ TOOL CALL ] ───────────┐ [ TOOL EXEC ]

"delete file" │ ↑

▼ │

[ CONTRACT CHECK ] ─┘

"Is path valid?"

"Does user have permission?"

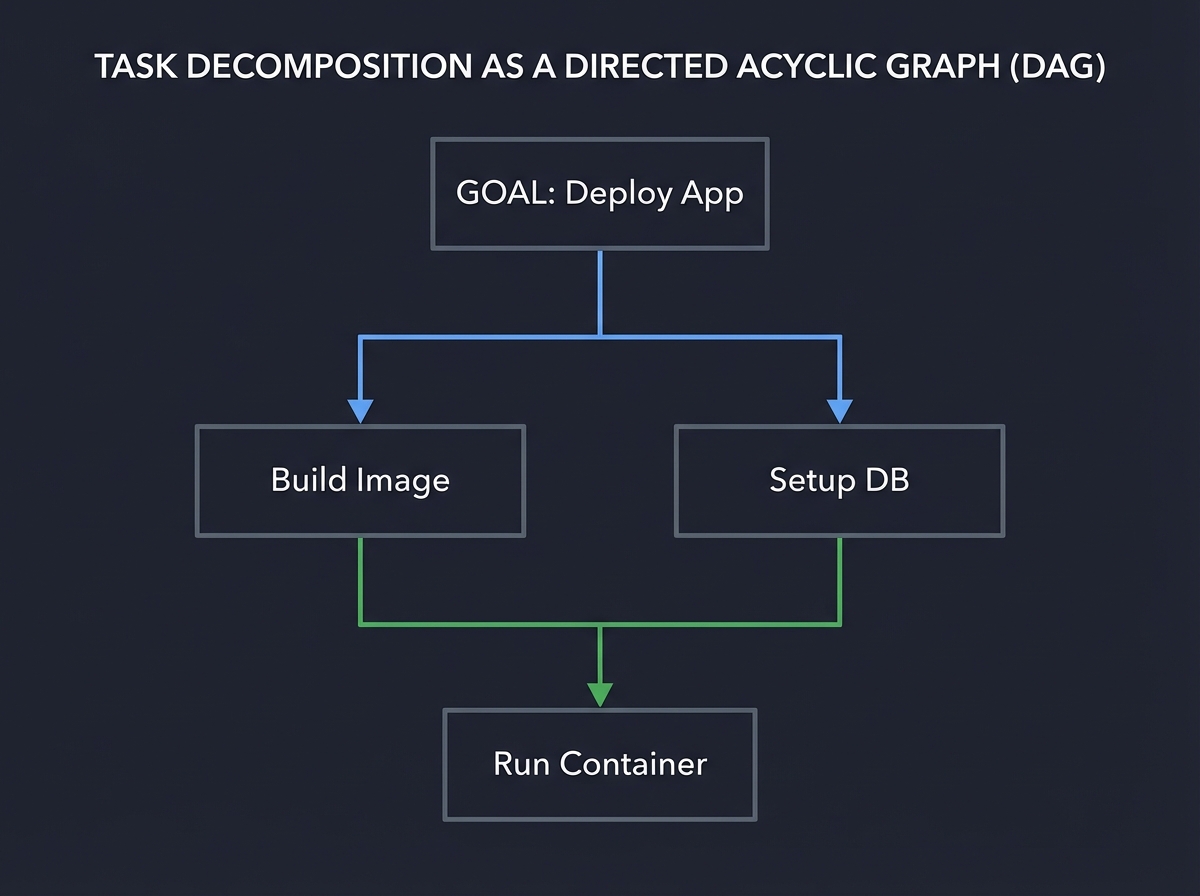

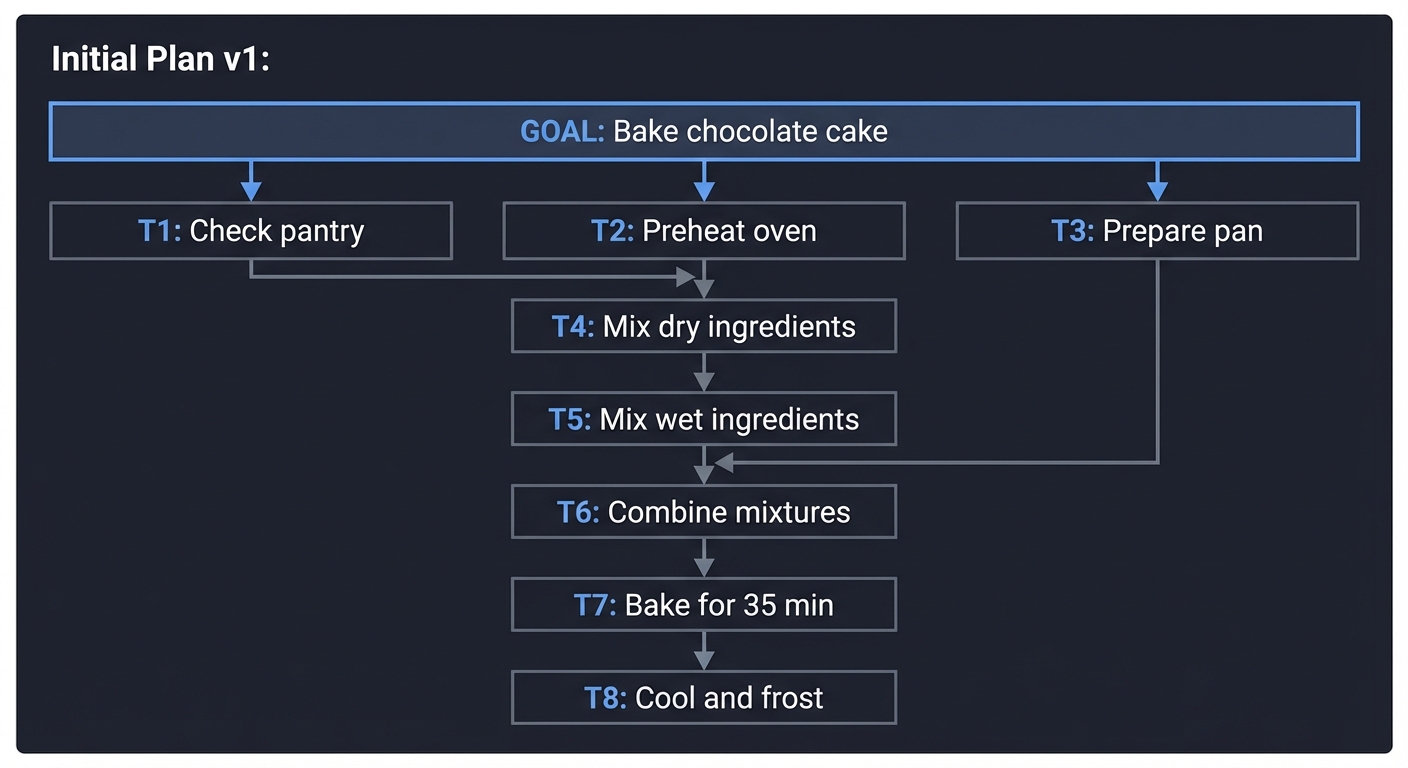

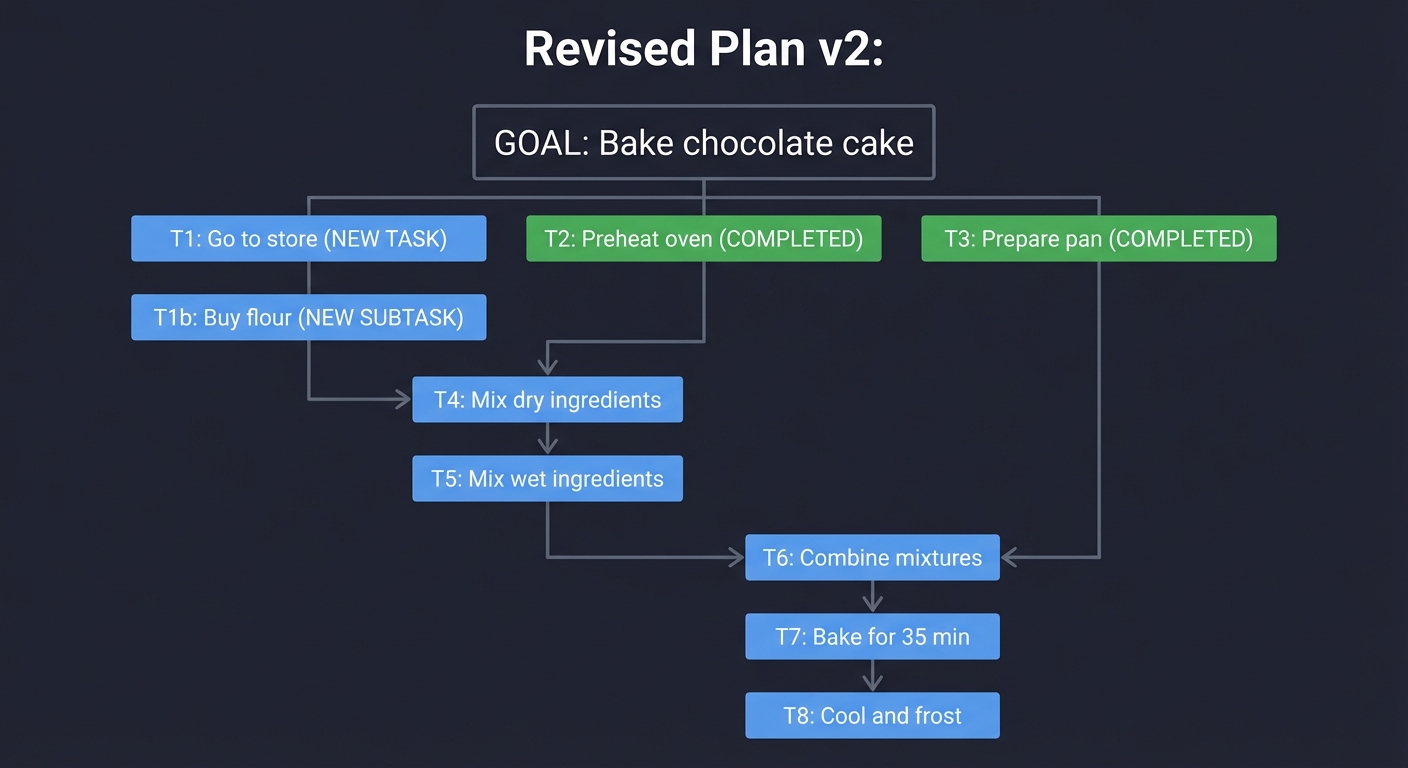

5. Task Decomposition: The Engine of Reasoning

Reasoning in agents is often just decomposition. A complex goal is broken into a Directed Acyclic Graph (DAG) of smaller, manageable tasks.

[ GOAL: Deploy App ]

│

┌─────────┴─────────┐

▼ ▼

[ Build Image ] [ Setup DB ]

│ │

└─────────┬─────────┘

▼

[ Run Container ]

Key insight: Failure in agents often happens at the decomposition stage. If the plan is wrong, the execution will fail. Book Reference: “AI Agents in Action” Ch. 5: “Planning and Reasoning”.

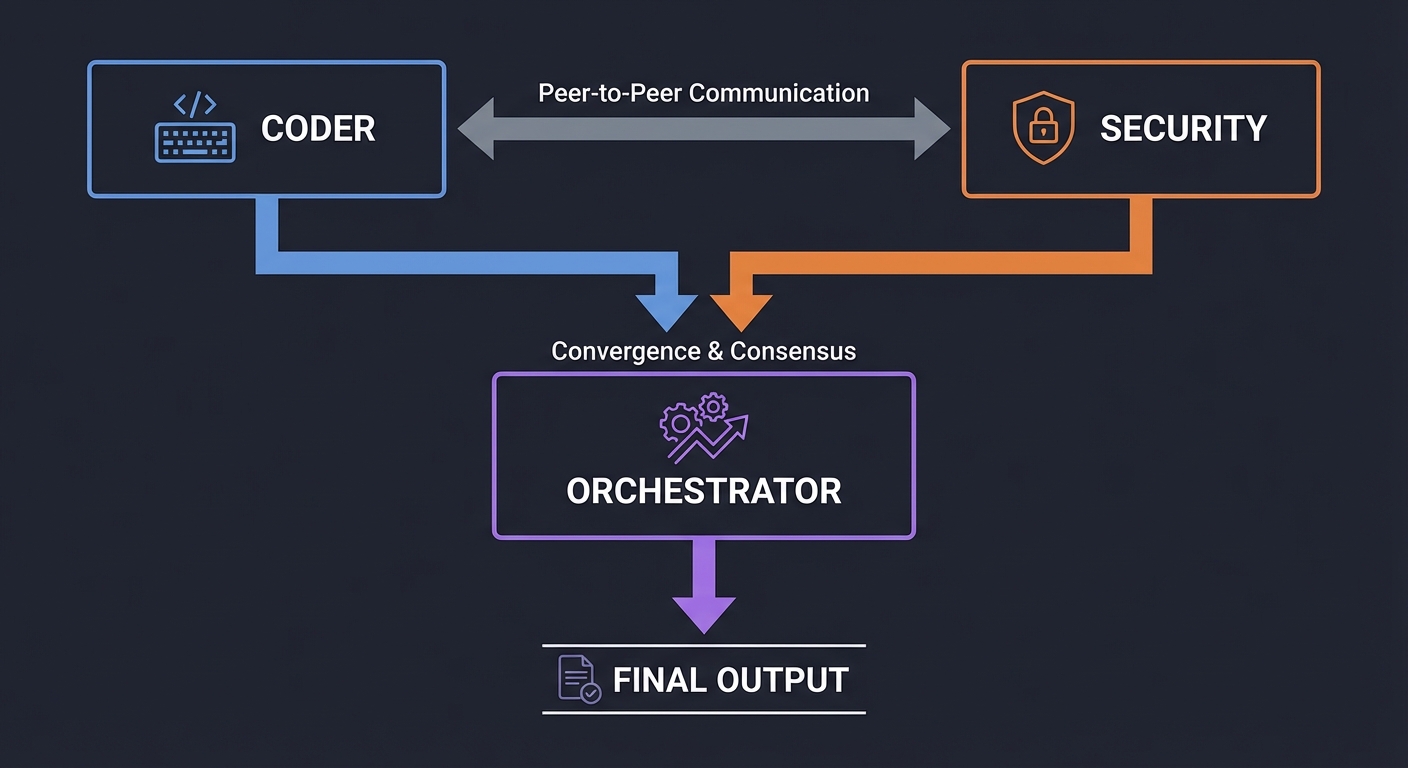

6. Multi-Agent Orchestration: Emergent Intelligence

When a task is too complex for one persona, we use Multi-Agent Systems (MAS). This follows the “Separation of Concerns” principle from software engineering. You have a specialized “Security Agent,” a “Coder Agent,” and a “QA Agent” debating the solution.

┌──────────┐ ┌──────────┐

│ CODER │ ◄───► │ SECURITY │

└────┬─────┘ └────┬─────┘

│ │

└────────┬─────────┘

▼

[ ORCHESTRATOR ]

│

▼

FINAL OUTPUT

Key insight: Conflict is a feature, not a bug. By forcing agents with different goals to reach consensus, we reduce the rate of “silent hallucinations.” Book Reference: “Multi-Agent Systems” by Michael Wooldridge.

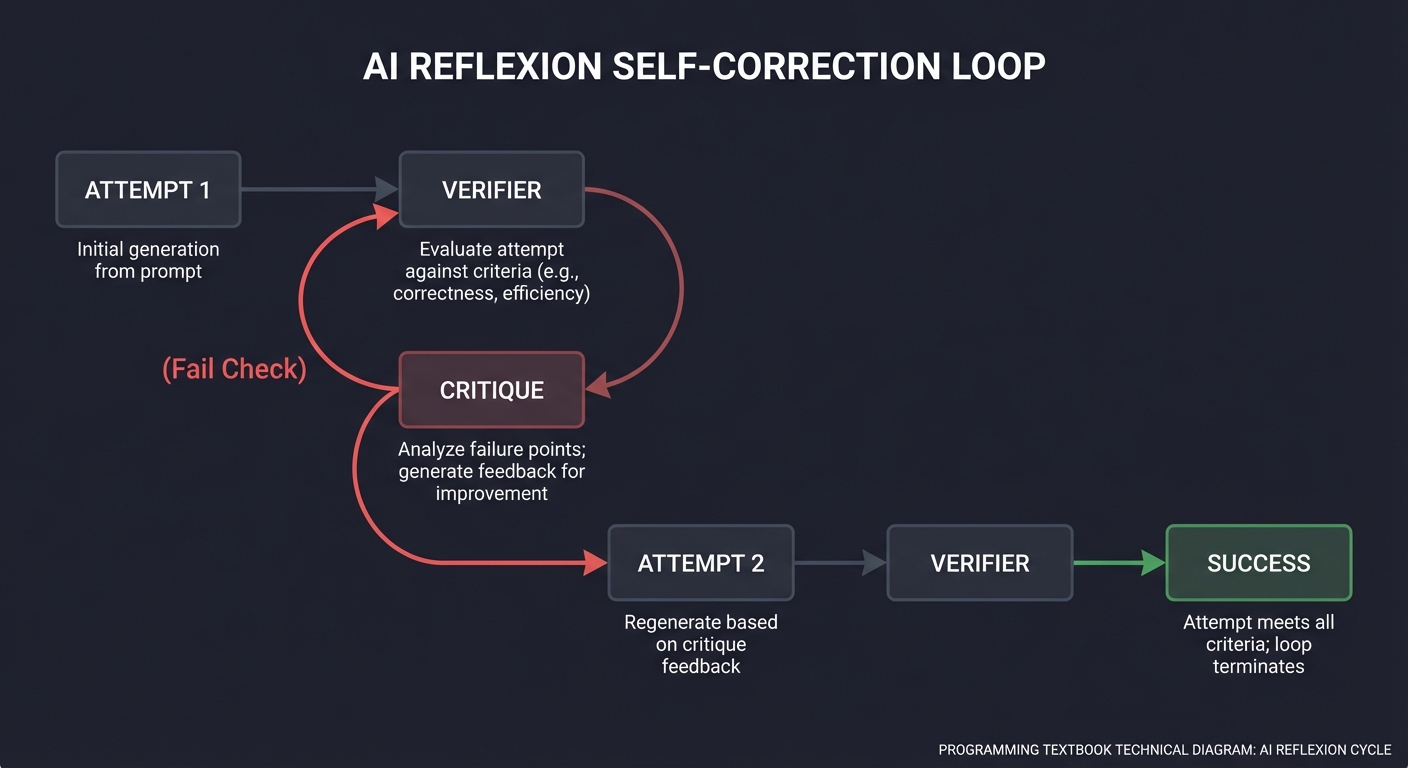

7. Self-Critique & Reflexion: The Feedback Loop

The highest form of agentic behavior is Reflexion. The agent doesn’t just act; it critiques its own performance and iterates until a verification condition is met.

[ ATTEMPT 1 ] ───▶ [ VERIFIER ] ───▶ [ CRITIQUE ]

│ │

(Fail Check) ◄──────────┘

│

[ ATTEMPT 2 ] ───▶ [ SUCCESS ]

Book Reference: “Reflexion: Language Agents with Iterative Self-Correction” (Shinn et al.).

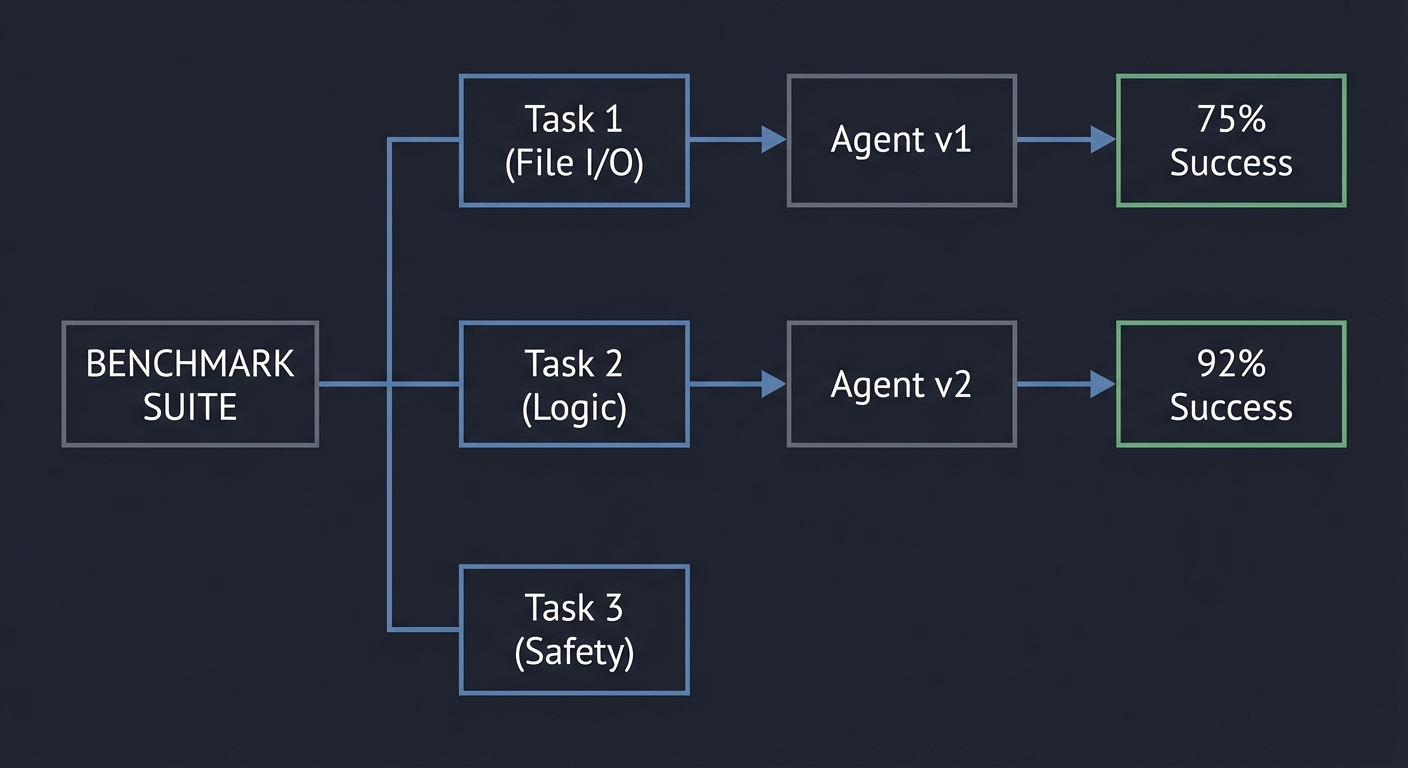

8. Agent Evaluation: Measuring the Stochastic

You cannot improve what you cannot measure. Agent evaluation moves from “vibes-based” testing to quantitative benchmarks, measuring success rate, cost, and latency.

[ BENCHMARK SUITE ]

├─ Task 1 (File I/O) ──▶ [ Agent v1 ] ──▶ 75% Success

├─ Task 2 (Logic) ──▶ [ Agent v2 ] ──▶ 92% Success

└─ Task 3 (Safety)

Book Reference: “Evaluation and Benchmarking of LLM Agents” (Mohammadi et al.).

Deep Dive Reading by Concept

This section maps each concept from above to specific book chapters or papers for deeper understanding. Read these before or alongside the projects to build strong mental models.

Agent Loops & Architectures

| Concept | Book & Chapter / Paper |

|---|---|

| The ReAct Pattern | “ReAct: Synergizing Reasoning and Acting” by Yao et al. (Full Paper) |

| Agentic Design Patterns | “Agentic Design Patterns” (Andrew Ng’s series / DeepLearning.AI) |

| Control Loop Fundamentals | “AI Agents in Action” by Manning — Ch. 3: “Building your first agent” |

| Multi-Agent Coordination | “Building Agentic AI Systems” by Packt — Ch. 4: “Multi-Agent Collaboration” |

State, Memory & Context

| Concept | Book & Chapter |

|---|---|

| Memory Architectures | “AI Agents in Action” by Manning — Ch. 8: “Understanding agent memory” |

| Knowledge Graphs as Memory | “Building AI Agents with LLMs, RAG, and Knowledge Graphs” by Raieli & Iuculano — Ch. 7 |

| Generative Agents | “Generative Agents: Interactive Simulacra of Human Behavior” by Park et al. (Full Paper) |

Safety, Guardrails & Policy

| Concept | Book & Chapter |

|---|---|

| Tool Calling Safety | “Function Calling and Tool Use” by Michael Brenndoerfer — Ch. 3: “Security and Reliability” |

| Alignment & Control | “Human Compatible” by Stuart Russell — Ch. 7: “The Problem of Control” |

| AI Ethics | “Introduction to AI Safety, Ethics, and Society” by Dan Hendrycks — Ch. 4 |

Essential Reading Order

For maximum comprehension, read in this order:

- Foundation (Week 1)

- ReAct paper (agent loop)

- Plan-and-Execute pattern notes (decomposition)

- Memory and State (Week 2)

- Generative Agents paper (memory)

- Agent survey (patterns)

- Safety and Tooling (Week 3)

- Tool calling docs (contracts)

- Agent eval tutorials (measurement)

Concept Summary Table

| Concept Cluster | What You Need to Internalize |

|---|---|

| Agent Loop | The loop is the agent; each step updates state and goals based on feedback. |

| State Invariants | Define what “valid” means and check it every step to prevent hallucination drift. |

| Memory Systems | Episodic (events) vs Semantic (facts). Provenance is mandatory for trust. |

| Tool Contracts | Never trust tool output without structure, validation, and error boundaries. |

| Planning & DAGs | Complex goals require decomposition into dependencies. Plans must be revisable. |

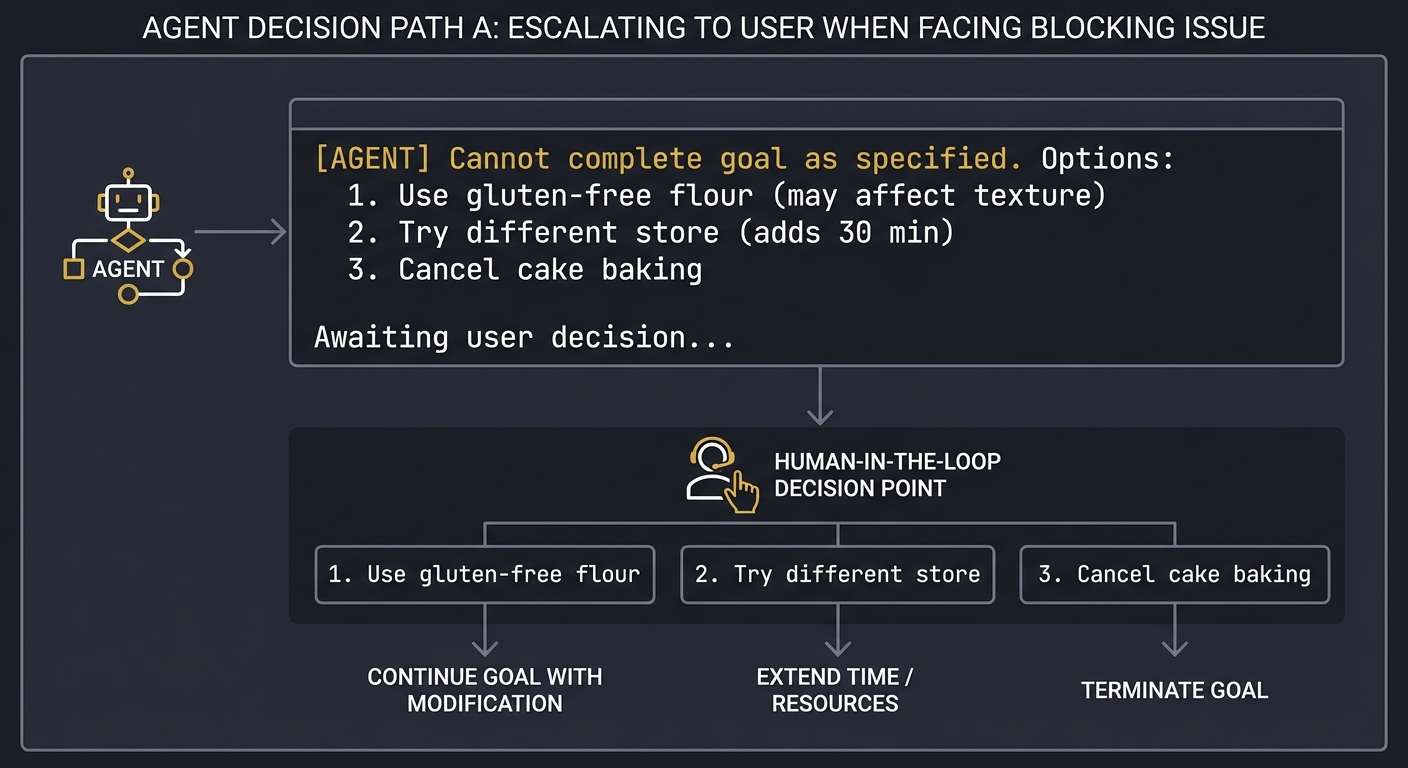

| Safety & Policy | Autonomy requires strict guardrails and human-in-the-loop triggers. |

Quick Start: Your First 48 Hours

Feeling overwhelmed? Start here. This is your practical entry point.

Day 1: Foundation (4-6 hours)

Morning: Understand the paradigm shift

- Read the ReAct paper introduction (30 minutes): ReAct: Synergizing Reasoning and Acting

- Watch Andrew Ng’s agentic patterns overview (20 minutes): Agentic Design Patterns

- Review the “Why AI Agents Matter” and “Core Concept Analysis” sections above (45 minutes)

Afternoon: Build your first tool caller

- Set up your development environment with API keys (30 minutes)

- Start Project 1: Tool Caller Baseline (3-4 hours)

- Don’t aim for perfection—aim for a working prototype

- Focus on: schema definition, one tool call, JSON validation

- Success = CLI that parses a log file and returns structured output

Evening reflection:

- Can you explain the difference between tool calling and an agent loop?

- Did your tool handle errors predictably?

Day 2: The Agent Loop (4-6 hours)

Morning: Understand iteration

- Re-read the ReAct paper Section 3 (implementation details) (45 minutes)

- Review “The Agent Loop” concept section above (30 minutes)

- Study the ReAct pattern in practice: Simon Willison’s implementation (30 minutes)

Afternoon: Build your first agent

- Start Project 2: Minimal ReAct Agent (3-4 hours)

- Implement: Think → Act → Observe → Repeat

- Add a max iteration limit (start with 5)

- Test with: “Find all ERROR logs from the last hour and count them”

- Success = Agent that iterates based on observations

Evening reflection:

- What happened when the agent got stuck in a loop?

- How did you implement termination?

- Can you trace the difference between Project 1 and Project 2?

After 48 Hours: Next Steps

If you found Projects 1-2 manageable:

- Move to Project 3 (State Invariants) within the next week

- Start thinking about production use cases in your work

- Join AI agent communities (LangChain Discord, r/LangChain)

If you struggled:

- That’s normal—agents are conceptually dense

- Re-do Project 2 with a different task (e.g., “Summarize a GitHub PR”)

- Focus on understanding the loop before adding complexity

- Review the “Thinking Exercise” sections more carefully

If you breezed through:

- You have strong foundations—accelerate to Projects 4-5

- Consider reading the full ReAct and Reflexion papers

- Start experimenting with production frameworks (LangGraph, CrewAI)

Recommended Learning Paths

Different backgrounds require different approaches. Choose your path:

Path A: Software Engineer (Backend/Systems Background)

Your strength: System design, APIs, deterministic logic Your challenge: Embracing stochastic behavior and probabilistic correctness

Recommended sequence:

- Week 1-2: Projects 1-2 (Foundation)

- Emphasize: Tool contracts as API contracts, state machines

- Week 3-4: Project 3 (State Invariants)

- Connect to: Database ACID properties, type systems

- Week 5-6: Projects 5-6 (Planning + Guardrails)

- Connect to: DAG schedulers (Airflow), access control systems

- Week 7-9: Projects 7-9 (Self-Critique, Multi-Agent, Eval)

- Connect to: CI/CD pipelines, distributed consensus, testing frameworks

- Week 10-12: Project 10 (End-to-End)

- Build a production-ready research agent

Key mental shift: Accept that 95% reliability with graceful failure is better than seeking 100% perfection.

Path B: ML/AI Engineer or Data Scientist

Your strength: Understanding LLMs, embeddings, prompting Your challenge: Building robust software systems with proper error handling

Recommended sequence:

- Week 1: Project 1 (Tool Caller)

- Focus on: JSON schema validation, type safety, error boundaries

- Week 2-3: Projects 2-3 (ReAct + Invariants)

- Emphasize: State management, debugging loops

- Week 4-5: Project 4 (Memory Store)

- Your sweet spot: RAG, embeddings, semantic search

- Week 6-7: Projects 5-6 (Planning + Guardrails)

- New territory: Formal decomposition, safety policies

- Week 8-10: Projects 7-9 (Self-Critique, Multi-Agent, Eval)

- Your sweet spot: Agent behavior, benchmarking, metrics

- Week 11-14: Project 10 (End-to-End)

- Integrate everything with production-grade memory

Key mental shift: Agents are systems, not models. Error handling and contracts matter as much as prompts.

Path C: Frontend/Full-Stack Web Developer

Your strength: User interaction, state management, async patterns Your challenge: Understanding agent reasoning patterns and LLM constraints

Recommended sequence:

- Week 1: Project 1 (Tool Caller)

- Connect to: API middleware, validation libraries (Zod)

- Week 2: Project 2 (ReAct Agent)

- Connect to: State machines (XState), async workflows

- Week 3-4: Project 4 (Memory Store)

- Connect to: Local storage, caching strategies

- Week 5: Project 3 (State Invariants)

- Connect to: Form validation, schema enforcement

- Week 6-7: Projects 6-7 (Guardrails + Self-Critique)

- Connect to: Input sanitization, retry logic

- Week 8-11: Projects 5, 8-10 (Planning, Multi-Agent, Eval, End-to-End)

- Focus on building a chat interface for your research agent

Key mental shift: LLM responses are like unreliable network requests—always validate, always have fallbacks.

Path D: Product Manager / Non-Coding Technical Leader

Your strength: System thinking, requirements, user needs Your challenge: Understanding technical constraints and implementation details

Recommended sequence:

- Week 1-2: Read deeply (don’t code)

- ReAct paper, Andrew Ng’s agentic patterns

- Study all “Core Concept Analysis” sections

- Review “Real World Outcome” sections for all projects

- Week 3-4: Pair programming on Projects 1-2

- Have an engineer implement while you guide

- Focus on: What can go wrong? What are the constraints?

- Week 5-6: Design exercises

- For Projects 5-6: Design a planning system on paper

- For Project 8: Design a multi-agent debate protocol

- Week 7-8: Focus on evaluation (Project 9)

- Define success metrics, benchmark suites

- Understand cost vs. quality tradeoffs

- Week 9-10: Spec out Project 10

- Write a PRD for a research assistant agent

- Define SLAs, failure modes, escalation paths

Key mental shift: Agents aren’t magic—they’re software with stochastic components. Design for failure.

Path E: Security Engineer / DevSecOps

Your strength: Threat modeling, access control, failure analysis Your challenge: Understanding agent architecture to secure it properly

Recommended sequence:

- Week 1-2: Projects 1-3 (Foundation + Invariants)

- Focus on: What can go wrong at each step?

- Week 3: Project 6 (Guardrails and Policy Engine) — Your priority

- Threat model: Prompt injection, tool misuse, data exfiltration

- Week 4: Project 3 (State Invariants) — deeper dive

- Connect to: Formal verification, security properties

- Week 5-6: Projects 5, 7-8 (Planning, Self-Critique, Multi-Agent)

- Focus on: Can agents be made to leak secrets? Bypass policies?

- Week 7-8: Project 9 (Evaluation) — security testing

- Build adversarial test suites

- Measure policy violation rates

- Week 9-10: Project 10 (End-to-End) — secure implementation

- Add: Audit logging, tool sandboxing, secret management

Key mental shift: Agents have agency—they can take actions you didn’t explicitly program. Security must be enforced, not assumed.

Project 1: Tool Caller Baseline (Non-Agent)

- Programming Language: Python or JavaScript

- Difficulty: Level 1: Intro

- Knowledge Area: Tool use vs agent loop

What you’ll build: A single-shot CLI assistant that calls tools for a fixed task (for example, parsing a log file and returning stats).

Why it teaches AI agents: This is your control group. You will directly compare what is possible without a loop.

Core challenges you’ll face:

- Defining tool schemas and validation

- Handling tool failures without an agent loop

Success criteria:

- Returns strict JSON output that validates against a schema

- Distinguishes tool errors from model errors in logs

- Produces a reproducible summary for the same input file

Real world outcome:

- A CLI tool that reads a log file and outputs a summary report with strict JSON IO

Real World Outcome

When you run your tool caller, here’s exactly what happens:

$ python tool_caller.py analyze --file logs/server.log

Calling tool: parse_log_file

Tool input: {"file_path": "logs/server.log", "filters": ["ERROR", "WARN"]}

Tool output received (347 bytes)

Calling tool: calculate_statistics

Tool input: {"events": [...], "group_by": "severity"}

Tool output received (128 bytes)

Analysis complete!

The program outputs a JSON file analysis_result.json:

{

"status": "success",

"timestamp": "2025-12-27T10:30:45Z",

"input_file": "logs/server.log",

"statistics": {

"total_lines": 1523,

"error_count": 47,

"warning_count": 132,

"top_errors": [

{"message": "Database connection timeout", "count": 23},

{"message": "Invalid auth token", "count": 15}

]

},

"tools_called": [

{"name": "parse_log_file", "duration_ms": 145},

{"name": "calculate_statistics", "duration_ms": 23}

]

}

If a tool fails, you see:

$ python tool_caller.py analyze --file missing.log

Calling tool: parse_log_file

Tool error: FileNotFoundError - File 'missing.log' not found

Analysis failed!

Exit code: 1

The output is always deterministic. Same input = same output. No retry logic, no planning, no adaptation. This is the baseline that demonstrates single-shot execution without an agent loop.

The Core Question You’re Answering

What can you accomplish with structured tool calling alone, without any feedback loop or multi-step reasoning?

This establishes the upper bound of non-agentic tool use and clarifies why agents are fundamentally different systems.

Concepts You Must Understand First

- Function Calling / Tool Calling

- What: LLMs can output structured function calls with typed parameters instead of just text

- Why: Enables reliable integration with external systems (APIs, databases, file systems)

- Reference: “Function Calling with LLMs” - Prompt Engineering Guide (2025)

- JSON Schema Validation

- What: Defining and enforcing the exact structure of inputs and outputs

- Why: Prevents silent failures and type mismatches that corrupt downstream logic

- Reference: OpenAI Function Calling Guide - parameter validation section

- Single-Shot vs Multi-Step Execution

- What: The difference between one call-and-return versus iterative decision loops

- Why: Understanding this distinction is the foundation of agent reasoning

- Reference: “ReAct: Synergizing Reasoning and Acting” (Yao et al., 2022) - Section 1 (Introduction)

- Tool Contracts and Error Boundaries

- What: Explicit specification of what a tool does, what it requires, and how it fails

- Why: Tools are untrusted external systems; contracts make behavior predictable

- Reference: “Building AI Agents with LLMs, RAG, and Knowledge Graphs” (Raieli & Iuculano, 2025) - Chapter 3: Tool Integration

- Deterministic vs Stochastic Execution

- What: Understanding when outputs should be identical for identical inputs

- Why: Reproducibility is essential for testing and debugging tool-based systems

- Reference: “Function Calling” section in OpenAI API documentation

Questions to Guide Your Design

-

What happens when a tool fails? Should the entire program fail, or should it return a partial result? How do you distinguish between expected failures (file not found) and unexpected ones (segmentation fault)?

-

How do you validate tool outputs? If a tool returns malformed JSON, who is responsible for catching it - the tool wrapper, the main program, or the caller?

-

What belongs in a tool vs what belongs in application logic? Should the log parser count errors, or should you have a separate “calculate_statistics” tool?

-

How do you make tool execution observable? What logging or tracing do you need to debug when a tool behaves unexpectedly?

-

What makes two tool calls equivalent? If you call

parse_log(file="test.log", filters=["ERROR"])twice, should you cache the result or re-execute? -

How do you test tools in isolation? Can you mock tool outputs without running actual file I/O or API calls?

Thinking Exercise

Before writing any code, trace this scenario by hand:

Scenario: You have two tools: read_file(path) -> string and count_pattern(text, pattern) -> int.

Task: Count how many times “ERROR” appears in server.log.

Draw a sequence diagram showing:

- The exact function calls made

- The data passed between components

- What happens if

read_filefails - What happens if

count_patternreceives invalid input

Label each step with: (1) who called it, (2) what data moved, (3) what validations occurred.

Now add: What changes if you want to support regex patterns instead of literal strings? Where does that complexity live?

This exercise reveals the boundaries between tool logic, validation logic, and orchestration logic.

The Interview Questions They’ll Ask

-

Q: What’s the difference between tool calling and function calling in LLMs? A: They’re often used interchangeably, but “function calling” emphasizes the structured output format (JSON with function name + parameters), while “tool calling” emphasizes the external action being performed. Both describe the same capability: LLMs generating structured invocations instead of freeform text.

-

Q: Why validate tool outputs if the LLM already generated valid inputs? A: The LLM generates the tool call, but the tool itself executes in an external environment. File systems change, APIs return errors, databases time out. Validation catches runtime failures, not just schema mismatches.

-

Q: How does single-shot tool calling differ from an agent loop? A: Single-shot: User -> LLM -> Tool -> Result. No feedback. Agent loop: Goal -> Plan -> Act -> Observe -> Update -> Repeat. The agent uses tool outputs to inform the next action.

-

Q: What’s a tool contract, and why does it matter? A: A contract specifies inputs (types, constraints), outputs (schema, possible values), and failure modes (exceptions, error codes). It matters because it makes tool behavior testable and predictable - you can validate inputs before calling and outputs before using them.

-

Q: When would you choose structured outputs over tool calling? A: Use structured outputs when you want the LLM to generate data (e.g., “extract entities from this text as JSON”). Use tool calling when you want the LLM to trigger actions (e.g., “search the database for matching records”). Structured outputs return data; tool calls invoke behavior.

-

Q: How do you handle non-deterministic tool outputs? A: Add timestamps and unique IDs to outputs. Log the exact input that produced each output. Use versioned tools (e.g.,

weather_api_v2) so you know which implementation ran. For testing, inject mock tools that return fixed outputs. -

Q: What’s the failure mode of skipping JSON schema validation? A: Silent data corruption. A tool might return

{"count": "42"}(string) instead of{"count": 42}(int). Without validation, downstream code might crash with type errors, or worse, produce subtly wrong results that pass tests.

Hints in Layers

Hint 1 (Architecture): Start with three components: (1) Tool definitions (schemas + implementations), (2) Tool executor (validates input, calls tool, validates output), (3) CLI interface (parses args, formats results). Keep them strictly separated.

Hint 2 (Validation): Use a schema library like Pydantic (Python) or Zod (JavaScript). Define tool schemas as classes/objects. Never use raw dictionaries or objects - always parse into validated types.

Hint 3 (Error Handling): Distinguish three error categories: (1) Invalid tool call (schema mismatch), (2) Tool execution failure (file not found), (3) Invalid tool output (schema mismatch). Return different exit codes for each.

Hint 4 (Testing): Write tests that inject mock tools. Your CLI should never directly import read_file - it should depend on a tool registry. This lets you swap real tools for mocks during testing.

Books That Will Help

| Topic | Book/Resource | Relevant Section |

|---|---|---|

| Tool Calling Fundamentals | OpenAI Function Calling Guide (2025) | “Function calling” section - parameters, schemas, error handling |

| Structured LLM Outputs | Prompt Engineering Guide (2025) | “Function Calling with LLMs” chapter - reliability patterns |

| Tool Integration Patterns | “Building AI Agents with LLMs, RAG, and Knowledge Graphs” (Raieli & Iuculano, 2025) | Chapter 3: Tool Integration and External APIs |

| JSON Schema Design | OpenAI API Documentation | “Function calling” section - defining parameters with JSON Schema |

| Agent vs Non-Agent Architecture | “ReAct: Synergizing Reasoning and Acting” (Yao et al., 2022) | Section 1: Introduction - contrasts single-step with multi-step reasoning |

| Error Handling in Tool Systems | “Build Autonomous AI Agents with Function Calling” (Towards Data Science, Jan 2025) | Section on robust error handling and retry logic |

Common Pitfalls & Debugging

Problem 1: “LLM returns invalid JSON for tool calls”

- Why: The model occasionally hallucinates malformed function signatures or adds extra text around the JSON

- Fix: Use structured output modes (OpenAI’s

response_format, Anthropic’s tool use) instead of relying on text parsing. If parsing text, add retry logic with error messages fed back to the LLM - Quick test:

echo '{"invalid_tool": "test"}' | python tool_caller.pyshould fail with a clear schema validation error, not a JSON parse error

Problem 2: “Tool execution succeeds but output doesn’t validate against schema”

- Why: The tool implementation doesn’t match its declared schema, or the schema is too permissive

- Fix: Add output validation in the tool wrapper, not just input validation. Return a validation error as a structured response rather than crashing

- Quick test: Inject a mock tool that returns

{"count": "42"}(string instead of int) and verify your validator catches it

Problem 3: “Can’t distinguish between tool failures and application logic errors”

- Why: Both raise generic exceptions, making logs hard to debug

- Fix: Define custom exception types:

ToolExecutionError,ToolValidationError,SchemaError. Log each with different severity levels - Quick test: Force a file-not-found error and check if the log clearly shows it’s a tool failure, not a bug in your code

Problem 4: “Same input produces different outputs on consecutive runs”

- Why: If using LLM to generate tool calls, temperature > 0 introduces randomness

- Fix: Set temperature=0 for tool call generation. For truly deterministic behavior, cache tool results or use a decision tree instead of an LLM

- Quick test: Run

python tool_caller.py analyze --file test.logfive times. Outputs should be byte-identical

Problem 5: “Tool calls work in isolation but fail when chained”

- Why: The output format of Tool A doesn’t match the expected input format of Tool B (implicit contract violation)

- Fix: Create integration tests that chain tools. Add schema compatibility checks in your tool registry

- Quick test: Define

read_file() -> stringandcount_words(text: list) -> int. This type mismatch should fail at registration time, not runtime

Project 2: Minimal ReAct Agent

- Programming Language: Python or JavaScript

- Difficulty: Level 2: Intermediate

- Knowledge Area: Agent loop, observation, action

What you’ll build: A ReAct-style agent that takes a goal, calls tools iteratively, and stops when success criteria are met.

Why it teaches AI agents: You implement the full loop: plan, act, observe, update.

Core challenges you’ll face:

- Building a loop with termination conditions

- Tracking state across steps

Success criteria:

- Logs each step with goal, action, observation, and state diff

- Stops within a max step budget or explicit success signal

- Uses tool outputs to update state rather than re-guessing

Real world outcome:

- A multi-step agent that can solve a file system task like “find, summarize, and compare the three largest markdown files”

Real World Outcome

When you run your ReAct agent, here’s the step-by-step execution:

$ python react_agent.py --goal "Find and compare the three largest markdown files in /docs"

=== ReAct Agent Starting ===

Goal: Find and compare the three largest markdown files in /docs

Max steps: 10

--- Step 1 ---

Thought: I need to first list all markdown files in the /docs directory to find the largest ones.

Action: list_files

Action Input: {"directory": "/docs", "pattern": "*.md"}

Observation: Found 47 markdown files. Total size: 2.3 MB

State Update: files_found = 47

--- Step 2 ---

Thought: Now I need to get file sizes for all markdown files to identify the three largest.

Action: get_file_sizes

Action Input: {"files": ["README.md", "ARCHITECTURE.md", ...]}

Observation: Retrieved sizes for 47 files. Largest: ARCHITECTURE.md (450 KB), API_GUIDE.md (380 KB), TUTORIAL.md (320 KB)

State Update: largest_files = ["ARCHITECTURE.md", "API_GUIDE.md", "TUTORIAL.md"]

--- Step 3 ---

Thought: I need to read the content of each of the three largest files to compare them.

Action: read_file

Action Input: {"path": "/docs/ARCHITECTURE.md"}

Observation: Read 450 KB. Contains system design documentation with 12 sections.

State Update: file_contents[0] = {...}

--- Step 4 ---

Thought: Read the second largest file.

Action: read_file

Action Input: {"path": "/docs/API_GUIDE.md"}

Observation: Read 380 KB. Contains API reference with 450 endpoints documented.

State Update: file_contents[1] = {...}

--- Step 5 ---

Thought: Read the third largest file.

Action: read_file

Action Input: {"path": "/docs/TUTORIAL.md"}

Observation: Read 320 KB. Contains step-by-step tutorials with code examples.

State Update: file_contents[2] = {...}

--- Step 6 ---

Thought: I now have all three files. I need to compare their characteristics.

Action: compare_documents

Action Input: {"files": ["ARCHITECTURE.md", "API_GUIDE.md", "TUTORIAL.md"]}

Observation: Comparison complete. Generated similarity matrix and topic analysis.

State Update: comparison_result = {...}

--- Step 7 ---

Thought: I have completed the goal. All three largest files have been identified and compared.

Action: finish

Action Input: {"status": "success", "result": "comparison_result"}

=== Agent Finished ===

Steps taken: 7 / 10

Final state:

{

"goal": "Find and compare the three largest markdown files in /docs",

"status": "completed",

"largest_files": [

{"name": "ARCHITECTURE.md", "size": "450 KB", "type": "design docs"},

{"name": "API_GUIDE.md", "size": "380 KB", "type": "reference"},

{"name": "TUTORIAL.md", "size": "320 KB", "type": "tutorials"}

],

"comparison": {

"total_size": "1.15 MB",

"average_sections": 8,

"topics_overlap": ["authentication", "deployment"],

"unique_topics": {

"ARCHITECTURE.md": ["system design", "database schema"],

"API_GUIDE.md": ["endpoints", "request/response"],

"TUTORIAL.md": ["getting started", "examples"]

}

}

}

If the agent gets stuck or exceeds max steps:

--- Step 10 ---

Thought: I still need to process more files but have reached the step limit.

Action: finish

Action Input: {"status": "partial", "reason": "max_steps_reached"}

=== Agent Stopped ===

Reason: Maximum steps (10) reached

Status: Partial completion - found 2 of 3 files

The trace file agent_trace.jsonl contains every step:

{"step": 1, "thought": "I need to first list...", "action": "list_files", "observation": "Found 47...", "state_diff": {"files_found": 47}}

{"step": 2, "thought": "Now I need to get...", "action": "get_file_sizes", "observation": "Retrieved sizes...", "state_diff": {"largest_files": [...]}}

...

This demonstrates the closed-loop control system: the agent observes results and makes decisions based on what it learned, not what it guessed.

The Core Question You’re Answering

How does an agent use observations from previous actions to inform subsequent decisions in a goal-directed loop?

This is the essence of agentic behavior: feedback-driven, multi-step reasoning toward an objective.

Concepts You Must Understand First

- ReAct Pattern (Reasoning + Acting)

- What: Interleaving thought traces with tool actions to solve multi-step problems

- Why: Explicit reasoning makes decisions auditable and correctable

- Reference: “ReAct: Synergizing Reasoning and Acting in Language Models” (Yao et al., 2022) - Sections 1-3

- Agent Loop / Control Flow

- What: The cycle of Observe -> Think -> Act -> Observe that continues until goal completion

- Why: This loop is what distinguishes agents from single-step tool callers

- Reference: “What is a ReAct Agent?” (IBM, 2025) - Agent Loop Architecture section

- State Management Across Steps

- What: Maintaining a working memory of what has been learned and what remains to be done

- Why: Without state tracking, agents repeat actions or lose progress

- Reference: “Building AI Agents with LangChain” (VinodVeeramachaneni, Medium 2025) - State Management section

- Termination Conditions

- What: Explicit criteria for when the agent should stop (goal achieved, budget exhausted, impossible task)

- Why: Agents without stop conditions run forever or until they crash

- Reference: “LangChain ReAct Agent: Complete Implementation Guide 2025” - Loop Termination Strategies

- Observation Processing

- What: Converting raw tool outputs into structured facts that update agent state

- Why: Observations must be validated and interpreted, not blindly trusted

- Reference: “ReAct Prompting” (Prompt Engineering Guide) - Observation Formatting section

Questions to Guide Your Design

-

What counts as “goal achieved”? Is it when the agent calls a

finishaction, when no more actions are needed, or when a specific state condition is met? -

How do you prevent infinite loops? What happens if the agent keeps calling the same tool with the same inputs, expecting different results?

-

What belongs in “state” vs “memory”? Should state include every tool output, or only the facts derived from them?

-

How do you handle contradictory observations? If Step 3 says “file exists” but Step 5 says “file not found,” which does the agent believe?

-

Should thoughts be generated by the LLM or inferred from actions? Can you build a ReAct agent where reasoning is implicit, or must it always be explicit?

-

How do you debug a failed agent run? What information do you need in your trace to understand why the agent made a wrong decision?

Thinking Exercise

Trace this scenario by hand using the ReAct pattern:

Goal: “Find the most common word in the three largest text files in /data.”

Available Tools:

list_files(directory) -> [files]get_file_size(path) -> bytesread_file(path) -> stringcount_words(text) -> {word: count}find_max(list) -> item

| Draw a table with columns: Step | Thought | Action | Observation | State |

Fill in at least 7 steps showing:

- How the agent discovers which files to process

- How it reads and analyzes each file

- How it combines results

- What happens if one file is unreadable

Label where the agent updates state based on observations. Circle any step where the agent might loop infinitely if not handled correctly.

Now add: What changes if you allow parallel tool calls (reading all three files simultaneously)?

The Interview Questions They’ll Ask

-

Q: How does ReAct differ from Chain-of-Thought (CoT) prompting? A: CoT produces reasoning traces before a final answer (think -> answer). ReAct interleaves reasoning with actions (think -> act -> observe -> think -> act…). CoT is single-shot; ReAct is iterative.

-

Q: What’s the role of the “Thought” step in ReAct? A: Thoughts make the agent’s reasoning explicit and auditable. They allow the LLM to plan the next action based on current state and previous observations. Without thoughts, you have no trace of WHY an action was chosen.

-

Q: How do you prevent the agent from calling the same tool repeatedly? A: Track action history in state. Implement rules like “if last 3 actions were identical, force a different action or terminate.” Use step budgets and diversity constraints.

-

Q: What’s the difference between observation and state? A: Observation is the raw output of a tool call. State is the accumulated knowledge derived from all observations. Example: Observation = “file size: 450 KB”. State = “largest_files: [ARCHITECTURE.md (450 KB), …]”.

-

Q: When should the agent terminate vs. ask for help? A: Terminate on success (goal met) or hard failure (impossible task, step limit). Ask for help on uncertainty (ambiguous goal, missing information, conflicting observations). The agent should distinguish “I’m done” from “I’m stuck.”

-

Q: How do you test a ReAct agent? A: Use deterministic mock tools that return fixed outputs for given inputs. Define test goals with known solution paths. Verify the trace matches expected Thought->Action->Observation sequences. Check that state updates are correct at each step.

-

Q: What happens if a tool call fails mid-loop? A: The observation should be “Error: [details]”. The agent’s next thought should reason about the error: retry with different inputs, try an alternative tool, or report failure. Never silently ignore tool errors.

Hints in Layers

Hint 1 (Loop Structure): Implement the loop as: while not done and step < max_steps: thought = think(goal, state), action = choose_action(thought), observation = execute(action), state = update(state, observation). Keep these phases strictly separated.

Hint 2 (State Tracking): Start with a simple state dict: {"goal": "...", "step": 0, "facts": {}, "actions_taken": [], "status": "in_progress"}. Update facts with each observation. Check actions_taken to detect loops.

Hint 3 (Termination): Implement three stop conditions: (1) Agent calls finish action, (2) step >= max_steps, (3) Same action repeated N times. Return different status codes for each.

Hint 4 (Debugging): Write every step to a trace file as JSON lines (JSONL). Each line = one Thought->Action->Observation->State cycle. This makes debugging visual and greppable.

Books That Will Help

| Topic | Book/Resource | Relevant Section |

|---|---|---|

| ReAct Pattern Fundamentals | “ReAct: Synergizing Reasoning and Acting in Language Models” (Yao et al., 2022) | Sections 1-3: Introduction, Method, Implementation |

| ReAct Implementation Guide | “LangChain ReAct Agent: Complete Implementation Guide 2025” | Full guide - loop structure, state management, termination |

| Agent Loop Architecture | “What is a ReAct Agent?” (IBM, 2025) | Agent Loop and Control Flow section |

| Practical Agent Building | “Building AI Agents with LangChain: Architecture and Implementation” (VinodVeeramachaneni, Medium 2025) | State management, tool integration patterns |

| ReAct Prompting Techniques | “ReAct Prompting” (Prompt Engineering Guide, 2025) | Prompt templates, observation formatting |

| Agent Implementation Patterns | “Building AI Agents with LLMs, RAG, and Knowledge Graphs” (Raieli & Iuculano, 2025) | Chapter 4: Agent Architectures - ReAct and Plan-Execute patterns |

| From Scratch Implementation | “Building a ReAct Agent from Scratch” (Plaban Nayak, Medium) | Full implementation walkthrough with code examples |

Common Pitfalls & Debugging

Problem 1: “Agent gets stuck in an infinite loop repeating the same action”

- Why: The agent doesn’t recognize that an action failed or that it’s not making progress toward the goal

- Fix: Add loop detection: if the same action+arguments appears 3+ times consecutively, force a different action or terminate with error. Better: track progress metrics (new information gained) and stop if progress stalls

- Quick test: Give the agent an impossible task (“Find a file called ‘nonexistent.txt’”). It should fail gracefully, not loop forever trying

list_filesrepeatedly

Problem 2: “Agent claims success but didn’t actually complete the goal”

- Why: The LLM hallucinates completion or misunderstands the success criteria

- Fix: Implement explicit success verification. Don’t rely on the agent’s self-assessment—check the actual state. For “find 3 largest files,” verify

len(largest_files) == 3before accepting success - Quick test: Ask agent to “find files larger than 1GB in a directory with no large files.” Agent should return “no results found,” not hallucinate file names

Problem 3: “State updates are inconsistent across steps”

- Why: State is passed as unstructured text instead of typed objects, leading to parsing errors or forgotten keys

- Fix: Use a typed state object (Pydantic model / TypeScript interface). Serialize/deserialize explicitly at each step. Validate state schema after every update

- Quick test: After step 3, manually inspect

agent_state. Every field should have the expected type. Nonull/undefinedfor required fields

Problem 4: “Observations are too verbose, causing context window overflow”

- Why: Tools return full file contents or API responses without summarization

- Fix: Add observation truncation: limit to 500 tokens per observation. For file reads, return summary statistics (“150 lines, 3 functions defined”) instead of full content

- Quick test: Make agent read a 50KB file. Observation should be <1KB summarized version, not the full file

Problem 5: “Agent forgets earlier observations after 5-6 steps”

- Why: Naive implementations concatenate all history into the prompt, but only the last N observations fit in context

- Fix: Implement state summarization: after each step, extract key facts and update a persistent “knowledge base” separate from raw observations. Include only the knowledge base + last 2-3 observations in the prompt

- Quick test: Give agent a 10-step task that requires remembering step 1’s result at step 10. If it asks for the same information again, state management is broken

Problem 6: “Hard to debug which step went wrong”

- Why: Logs are unstructured text without clear step boundaries

- Fix: Log each step as structured JSON with:

{step_num, thought, action, action_input, observation, state_before, state_after, timestamp}. Use JSON Lines format for easy parsing - Quick test: Run agent, then grep logs for

"action": "read_file". Should return all read operations with full context

Project 3: State Invariants Harness

- Programming Language: Python or JavaScript

- Difficulty: Level 2: Intermediate

- Knowledge Area: State validity and debugging

What you’ll build: A state validator that runs after every agent step and enforces invariants (goal defined, plan consistent, memory entries typed).

Why it teaches AI agents: It forces you to define the exact contract for your agent’s state.

Core challenges you’ll face:

- Defining invariants precisely

- Writing validators that catch subtle drift

Success criteria:

- Fails fast with a human-readable invariant report

- Covers goal, plan, memory, and tool-output validity

- Includes automated tests for at least 3 failure modes

Real world outcome:

- A reusable invariant-checking module with tests and failure reports

Real World Outcome

When you integrate the invariant harness into your agent, it validates state after every step:

$ python agent_with_invariants.py --goal "Summarize database schema"

=== Agent Step 1 ===

Action: connect_database

Observation: Connected to postgres://localhost:5432/app_db

Running invariant checks...

✓ Goal is defined and non-empty

✓ State contains required fields: [goal, step, status]

✓ Step counter is monotonically increasing (1 > 0)

✓ No circular plan dependencies

✓ All memory entries have timestamps and sources

All invariants passed (5/5)

=== Agent Step 2 ===

Action: list_tables

Observation: Found tables: [users, orders, products]

Running invariant checks...

✓ Goal is defined and non-empty

✓ State contains required fields: [goal, step, status, tables]

✓ Step counter is monotonically increasing (2 > 1)

✓ No circular plan dependencies

✓ All memory entries have timestamps and sources

All invariants passed (5/5)

=== Agent Step 3 ===

Action: describe_table

Observation: ERROR - table name missing

Running invariant checks...

✓ Goal is defined and non-empty

✓ State contains required fields: [goal, step, status, tables]

✓ Step counter is monotonically increasing (3 > 2)

✗ INVARIANT VIOLATION: Tool call missing required parameter 'table_name'

=== AGENT HALTED ===

Reason: Invariant violation at step 3

Invariant Report:

{

"step": 3,

"invariant": "tool_call_completeness",

"violation": "Tool 'describe_table' called without required parameter 'table_name'",

"state_snapshot": {

"goal": "Summarize database schema",

"step": 3,

"tables": ["users", "orders", "products"]

},

"expected": "All tool calls must include required parameters from tool schema",

"actual": "Missing parameter: table_name (type: string, required: true)",

"fix_suggestion": "Ensure action selection includes all required parameters before execution"

}

The harness catches violations and produces detailed reports:

{

"timestamp": "2025-12-27T11:15:30Z",

"agent_run_id": "run_abc123",

"total_steps": 3,

"invariants_checked": 15,

"violations": [

{

"step": 3,

"invariant_name": "tool_call_completeness",

"severity": "error",

"message": "Tool 'describe_table' missing required parameter 'table_name'",

"state_before": {...},

"state_after": {...}

}

],

"invariants_passed": [

"goal_defined",

"state_schema_valid",

"step_monotonic",

"no_circular_dependencies",

"memory_provenance"

]

}

When all invariants pass, the agent completes successfully:

=== Agent Completed ===

Total steps: 8

Invariants checked: 40 (8 steps × 5 invariants)

Violations: 0

Success: true

Final state passed all invariants:

✓ Goal achieved and marked complete

✓ All plan tasks have evidence

✓ No dangling references in memory

✓ Tool outputs match schemas

✓ State is serializable and recoverable

You can also run the harness in test mode to validate specific states:

$ python invariant_harness.py test --state-file corrupted_state.json

Testing invariants on provided state...

✓ goal_defined

✓ state_schema_valid

✗ plan_consistency: Plan references non-existent task 'task_99'

✗ memory_provenance: Memory entry missing 'source' field

✓ tool_output_schema

Result: 2 violations found

Details written to: invariant_test_report.json

This demonstrates how invariants catch bugs that would otherwise cause silent failures or incorrect agent behavior.

The Core Question You’re Answering

What exact conditions must hold true for an agent’s state to be valid, and how do you detect violations before they cause incorrect behavior?

This is the foundation of reliable agent systems: explicit contracts that fail loudly when violated.

Concepts You Must Understand First

- State Invariants / Preconditions

- What: Conditions that must always be true about agent state (e.g., “goal must be a non-empty string”)

- Why: Invariants catch bugs early and make debugging deterministic

- Reference: Classical software engineering - “Design by Contract” (Bertrand Meyer) applied to agent state

- Schema Validation and Type Safety

- What: Ensuring data structures match expected shapes and types at runtime

- Why: Agents manipulate dynamic state; type errors corrupt reasoning

- Reference: “Building AI Agents with LLMs, RAG, and Knowledge Graphs” (Raieli & Iuculano, 2025) - Chapter 5: State Management and Validation

- Assertion-Based Testing

- What: Explicitly checking conditions and failing fast when they’re violated

- Why: Assertions document assumptions and catch drift immediately

- Reference: “Build Autonomous AI Agents with Function Calling” (Towards Data Science, Jan 2025) - Testing and Validation section

- State Machine Constraints

- What: Rules about valid state transitions (e.g., “can’t finish before starting”)

- Why: Agents move through phases; invalid transitions indicate bugs

- Reference: “LangChain AI Agents: Complete Implementation Guide 2025” - State Lifecycle Management

- Provenance and Lineage Tracking

- What: Recording where each piece of state came from (which tool, which step)

- Why: Enables debugging “why does the agent believe X?” questions

- Reference: “Generative Agents” (Park et al., 2023) - Memory and Provenance section

Questions to Guide Your Design

-

Which invariants are critical vs nice-to-have? Should a missing timestamp fail the agent, or just log a warning?

-

When do you check invariants? After every step, before every action, or only at specific checkpoints?

-

What happens when an invariant fails? Halt immediately, retry the step, or degrade gracefully?

-

How do you make invariant failures debuggable? What information should the error report contain?

-

Can invariants depend on each other? If invariant A fails, should you still check invariant B?

-

How do you test the invariant checker itself? How do you know it catches all violations without false positives?

Thinking Exercise

Define invariants for this agent state:

{

"goal": "Find and summarize research papers on topic X",

"step": 5,

"status": "in_progress",

"plan": [

{"id": "task_1", "action": "search_papers", "status": "completed"},

{"id": "task_2", "action": "read_abstracts", "status": "in_progress", "depends_on": ["task_1"]},

{"id": "task_3", "action": "summarize", "status": "pending", "depends_on": ["task_2"]}

],

"memory": [

{"type": "fact", "content": "Found 15 papers", "source": "task_1", "timestamp": "2025-12-27T10:00:00Z"},

{"type": "fact", "content": "Read 8 abstracts", "source": "task_2", "timestamp": "2025-12-27T10:05:00Z"}

]

}

Write at least 8 invariants that this state must satisfy. For each, specify:

- The invariant rule (e.g., “all plan tasks must have unique IDs”)

- How to check it (pseudocode)

- What the error message should say if it fails

- Whether failure should halt the agent or just warn

Now introduce 3 bugs into the state (e.g., task depends on non-existent task, memory entry missing timestamp, status=”in_progress” but all tasks completed). Which of your invariants catch them?

The Interview Questions They’ll Ask

-

Q: What’s the difference between state validation and tool output validation? A: Tool output validation checks if a single tool’s response matches its schema. State validation checks if the entire agent state (goal, plan, memory, history) satisfies global invariants. Tool validation is local; state validation is global.

-

Q: Why check invariants at runtime instead of just using types? A: Static types catch structural errors (wrong field name, wrong type). Invariants catch semantic errors (circular dependencies, contradictory facts, violated business rules). Types say “this is a string”; invariants say “this string must be a valid URL that was observed in the last 10 steps.”

-

Q: When should an invariant violation halt the agent vs. just log a warning? A: Halt on violations that make the agent’s state unrecoverable or could lead to dangerous actions (missing goal, corrupted plan, untrusted memory). Warn on quality issues that don’t affect correctness (missing optional metadata, suboptimal plan structure).

-

Q: How do you test invariant checkers without running a full agent? A: Create synthetic state objects that violate specific invariants. Assert that the checker detects the violation and produces the expected error message. Use property-based testing to generate random invalid states.

-

Q: What’s the cost of checking invariants at every step? A: Compute cost (validating schemas, checking dependencies) and latency (agent pauses during checks). Optimize by: (1) checking critical invariants always, (2) checking expensive invariants periodically, (3) caching validation results when state hasn’t changed.

-

Q: How do invariants relate to debugging agent failures? A: Invariants turn debugging from “the agent did something wrong” to “invariant X failed at step Y with state Z.” The violation report is a precise bug description. Without invariants, you’re guessing what went wrong.

-

Q: Can you have too many invariants? A: Yes. Over-specifying makes the agent brittle (fails on edge cases) and slow (too many checks). Focus on invariants that detect actual bugs, not every possible condition. Prioritize: (1) safety (prevent harm), (2) correctness (catch logic errors), (3) quality (improve behavior).

Hints in Layers

Hint 1 (Architecture): Create an InvariantChecker class with a check_all(state) -> List[Violation] method. Each invariant is a function check_X(state) -> Optional[Violation]. Register invariants in a list and iterate through them.

Hint 2 (Critical Invariants): Start with these five: (1) goal_defined - goal field exists and is non-empty, (2) state_schema - state has required fields with correct types, (3) step_monotonic - step counter only increases, (4) plan_acyclic - no circular task dependencies, (5) memory_provenance - all memory entries have source and timestamp.

Hint 3 (Violation Reports): A violation should include: invariant name, step number, expected vs actual, state snapshot before/after, suggested fix. Make it actionable, not just “validation failed.”

Hint 4 (Testing): Write a test suite test_invariants.py with at least 3 tests per invariant: (1) valid state passes, (2) specific violation is caught, (3) error message is correct. Use parameterized tests to cover edge cases.

Books That Will Help

| Topic | Book/Resource | Relevant Section |

|---|---|---|

| Design by Contract | “Object-Oriented Software Construction” (Bertrand Meyer, 1997) | Chapter 11: Design by Contract - preconditions, postconditions, invariants |

| State Management in Agents | “Building AI Agents with LLMs, RAG, and Knowledge Graphs” (Raieli & Iuculano, 2025) | Chapter 5: State Management and Validation |

| Agent Testing and Validation | “Build Autonomous AI Agents with Function Calling” (Towards Data Science, Jan 2025) | Section on testing, error handling, state validation |

| Schema Validation Patterns | “LangChain AI Agents: Complete Implementation Guide 2025” | State lifecycle management, schema enforcement |

| Memory Provenance | “Generative Agents” (Park et al., 2023) | Memory architecture section - provenance and retrieval |

| Assertion-Based Testing | “The Pragmatic Programmer” (Thomas & Hunt) | Chapter on defensive programming and assertions |

| Agent Debugging Techniques | “LangChain ReAct Agent: Complete Implementation Guide 2025” | Debugging and monitoring section |

Common Pitfalls & Debugging

Problem 1: “Invariant checker passes but agent still behaves incorrectly”

- Why: You’re checking structural invariants (field exists, type correct) but missing semantic invariants (field value makes sense in context). For example, checking that

stepis an integer doesn’t catchstep = -1orstep = 1000when only 3 steps have executed. - Fix: Add semantic validators that check business logic: step must be >= 0, step must be <= total_executed_steps, plan tasks must reference valid tool names from your toolkit, memory timestamps must not be in the future.

- Quick test: Create a state with

{"step": 999999, "goal": ""}and verify your checker flags both the impossible step number AND the empty goal.

Problem 2: “Invariant violations produce cryptic error messages like ‘validation failed’“

- Why: Your violation report only contains

success: falsewithout explaining what failed, what was expected, or how to fix it. This makes debugging impossible. - Fix: Every violation must include: (1) invariant name, (2) expected vs actual values, (3) state snapshot at time of violation, (4) suggested fix. Use a structured format like

{"invariant": "step_monotonic", "expected": "step > previous_step", "actual": "step=3, previous_step=5", "suggestion": "Check for state rollback or concurrent modification"}. - Quick test: Trigger a violation and show the error to someone unfamiliar with your code. Can they understand what went wrong without reading the source?

Problem 3: “Invariant checking is too slow and dominates agent execution time”

- Why: You’re running expensive checks (deep graph traversal, regex on large strings, database queries) after every single step, even for cheap actions.

- Fix: Categorize invariants by cost and frequency. Check cheap structural invariants (required fields exist) every step. Check expensive semantic invariants (no circular dependencies, memory provenance chains valid) only at checkpoints (every 5 steps, before replanning, at goal completion). Use caching - if state hasn’t changed since last check, reuse results.

- Quick test: Add timing instrumentation:

time_invariants = time() - start. If invariant checking takes >10% of total execution time, you’re over-checking.

Problem 4: “False positives - checker flags valid states as violations”

- Why: Your invariants are too strict and don’t account for valid edge cases. Example: “all plan tasks must have status PENDING or COMPLETED” fails when a task is IN_PROGRESS (which is valid).

- Fix: Review each invariant against real execution traces. For every invariant, generate 5 test cases: 2 clear violations, 2 valid edge cases, 1 boundary case. If any valid case fails, relax the invariant. Add explicit allowlists for valid edge cases.

- Quick test: Run your invariant checker against 10 successful agent executions. If it flags >0 violations, you have false positives.

Problem 5: “Agent halts on invariant failure but state is actually recoverable”

- Why: You’re treating all violations as fatal errors (halt execution), but some are warnings (missing optional metadata, suboptimal but valid plan structure).

- Fix: Add severity levels to invariants: ERROR (halt immediately - corrupted state, dangerous action), WARNING (log but continue - quality issue), INFO (just record - for post-analysis). Only halt on ERROR-level violations. For warnings, log to a separate audit trail.

- Quick test: Introduce a minor issue like missing an optional

confidencefield in memory. Should the agent halt? If yes, downgrade that invariant to WARNING.

Problem 6: “Invariant checker itself has bugs and crashes the agent”

- Why: The checker assumes state structure that might not exist (accessing

state['plan'][0]when plan is empty), or uses unsafe operations (regex that hangs on large strings, infinite loops in graph traversal). - Fix: Wrap every invariant check in try-except with defensive coding. Before accessing

state['field'], check if field exists. Before iterating, check if collection is not None/empty. Use timeouts for expensive operations. Log checker errors separately from invariant violations. - Quick test: Feed the checker malformed states: empty dict, None, missing required fields, circular references. Checker should return violations, not crash.

Project 4: Memory Store with Provenance

- Programming Language: Python or JavaScript

- Difficulty: Level 3: Advanced

- Knowledge Area: Memory systems

What you’ll build: A memory store that separates episodic memory, semantic memory, and working memory, each with timestamps and sources.

Why it teaches AI agents: You learn how memory drives decisions and how bad memory corrupts behavior.

Core challenges you’ll face:

- Designing retrieval and decay policies

- Ensuring memory entries are attributable

Success criteria:

- Retrieves memories by time, type, and relevance query

- Stores provenance fields (source, timestamp, confidence)

- Explains a decision by tracing a memory chain end-to-end

Real world outcome:

- A memory module that can answer “why did the agent do this” by tracing the provenance chain

Real World Outcome

When you run this project, you will see a complete memory system that behaves like a forensic audit trail for agent decisions. Here’s exactly what success looks like:

Command-line example:

# Store a memory from a tool observation

$ python memory_store.py add-episodic \

--content "User requested file analysis of project.md" \

--source "tool:file_reader" \

--confidence 0.95 \

--timestamp "2025-12-27T10:30:00Z"

Memory ID: ep_001 stored successfully

# Query memory by relevance

$ python memory_store.py query \

--query "What file operations happened today?" \

--memory-type episodic \

--limit 5

Results (3 matches):

1. [ep_001] 2025-12-27T10:30:00Z [confidence: 0.95]

Source: tool:file_reader

Content: "User requested file analysis of project.md"

2. [ep_002] 2025-12-27T10:32:15Z [confidence: 0.88]

Source: tool:file_writer

Content: "Created summary.txt with 245 words"

3. [ep_003] 2025-12-27T10:35:00Z [confidence: 0.92]

Source: agent:decision_maker

Content: "Decided to compare project.md with backup.md based on user goal"

# Trace a decision backward through memory chain

$ python memory_store.py trace-decision \

--decision-id "decision_042" \

--output-format tree

Decision Provenance Chain:

decision_042: "Compare project.md with backup.md"

└─ memory_ep_003: "Decided to compare based on user goal"

└─ memory_ep_001: "User requested file analysis"

└─ tool_output: {"files_found": ["project.md", "backup.md"]}

└─ goal_state: "Analyze project files for changes"

What the output file looks like (memory_db.json):

{

"episodic": [

{

"id": "ep_001",

"content": "User requested file analysis of project.md",

"source": "tool:file_reader",

"timestamp": "2025-12-27T10:30:00Z",

"confidence": 0.95,

"provenance_chain": ["goal_001", "user_request_001"],

"decay_factor": 1.0

}

],

"semantic": [

{

"id": "sem_001",

"fact": "project.md contains deployment configuration",

"derived_from": ["ep_001", "ep_002"],

"confidence": 0.87,

"last_reinforced": "2025-12-27T10:35:00Z"

}

],

"working": {

"current_goal": "Analyze project files",

"active_hypotheses": ["Files may have diverged", "Need comparison"],

"scratchpad": ["Found 2 markdown files", "Both modified today"]

}

}

Step-by-step what happens:

- You start the agent with a goal like “analyze recent file changes”

- Each tool call creates an episodic memory entry with full provenance

- The agent extracts facts and stores them as semantic memories

- Working memory holds the current reasoning state

- When you query “why did you compare these files?”, the system traces backward through the provenance chain

- You get a human-readable explanation with timestamps, sources, and confidence scores

Success looks like: Being able to point at any decision and see the complete chain of memories that led to it, with no gaps or “I don’t know why” responses.

The Core Question You’re Answering

How do you make an AI agent’s memory trustworthy enough that you can audit its decisions like you would audit database transactions, rather than treating its reasoning as a black box?

Concepts You Must Understand First

- Memory Hierarchies in Cognitive Science

- What you need to know: The distinction between working memory (temporary scratchpad), episodic memory (time-stamped experiences), and semantic memory (extracted facts and rules). Each serves a different purpose in decision-making.

- Book reference: “Building LLM Agents with RAG, Knowledge Graphs & Reflection” by Mira S. Devlin - Chapter on short-term and long-term memory systems for continuous learning.

- Provenance Tracking in Data Systems

- What you need to know: Provenance is the “lineage” of data - where it came from, how it was transformed, and what decisions it influenced. Without provenance, you cannot audit or debug agent behavior.

- Book reference: “Memory in the Age of AI Agents” survey paper (December 2025) - Section on logging/provenance standards and lifecycle tracking.

- Retrieval Strategies and Relevance Scoring

- What you need to know: How to query memory based on recency (time-based decay), relevance (semantic similarity), and importance (reinforcement/confidence). Different queries need different strategies.

- Book reference: “Generative Agents” (Park et al.) - Memory retrieval mechanisms using reflection and importance scoring.

- Memory Decay and Forgetting Policies

- What you need to know: Not all memories should persist forever. Decay policies prevent memory bloat and reduce interference from outdated information. Balance retention with relevance.

- Book reference: “AI Agents in Action” by Micheal Lanham - Knowledge management and memory lifecycle patterns.

- Confidence Propagation Through Inference Chains

- What you need to know: When memory A derives from memory B, how does uncertainty propagate? Low-confidence observations should produce low-confidence semantic facts.

- Book reference: “Memory in the Age of AI Agents” survey - Section on memory evolution dynamics and confidence scoring.

Questions to Guide Your Design

-

Memory Storage: Should episodic memories be stored as raw tool outputs, natural language summaries, or structured objects? What are the tradeoffs for retrieval speed vs interpretability?

-

Provenance Granularity: How deep should the provenance chain go? Do you track every intermediate reasoning step, or just tool outputs and final decisions? When does provenance become noise?

-

Retrieval vs Recall: Should the agent retrieve the top-k most relevant memories every time, or should it maintain a “working set” of active memories that get updated? How do you prevent retrieval from dominating runtime?

-

Conflicting Memories: What happens when two episodic memories contradict each other? Do you store both with timestamps, or run a conflict resolution policy? How does this affect downstream semantic memory?

-

Memory Compression: As episodic memory grows, should older memories be summarized into semantic facts? What information is lost in compression, and when does that loss become a problem?

-

Auditability Requirements: If you had to explain a decision to a non-technical stakeholder, what fields would your memory entries need? How do you balance completeness with readability?

Thinking Exercise

Before writing any code, do this by hand:

-

Take a simple agent task: “Find the three largest files in a directory and summarize their purpose.”

- Trace the full execution on paper:

- Write down each tool call (e.g.,

list_files,get_file_size,read_file) - For each tool output, create a mock episodic memory entry with: content, source, timestamp, confidence

- When the agent makes a decision (e.g., “These are the top 3 files”), show which episodic memories it referenced

- Create a semantic memory entry for the extracted fact: “The largest file is config.yaml at 2.4MB”

- Write down each tool call (e.g.,

- Now trace a decision backward:

- Pick the final decision: “Summarize config.yaml, data.json, and README.md”

- Draw the provenance chain: decision → episodic memories → tool outputs → initial goal

- Label each link with what information flowed from parent to child

- Identify what would break without provenance:

- Cross out the source fields in your mock memories

- Try to answer: “Why did the agent summarize config.yaml?” without looking at sources

- Notice how quickly you lose the ability to explain behavior

This exercise will reveal:

- Which fields are actually necessary vs nice-to-have

- How deep the provenance chain needs to go

- Where your retrieval queries will be ambiguous

- What happens when memories conflict

The Interview Questions They’ll Ask

- “How would you implement memory retrieval for an AI agent that needs to answer questions based on past interactions?”